There is another ASP.NET MVC sample app. This time it is official, has passed the proper review procedure, and is explicitly marketed as “intended to guide you with the planning, architecting, and implementing of Web 2.0 applications and services.”

I am saying all of that in order to distinguish it from Oxite, which was none of these things. There have been a couple of reviews of Kobe already. Frankly, I don’t really care for them, mostly because I think that they dealt too much with nitty-gritty details of the app that don’t really matter much. I don’t much care about an extra using, the use of System.Int32 vs. int, the naming convention used, or even what sort of HTML formatting they used. I mostly care about the code and architecture. So I decided to take a look myself.

This post is going to go over the nuts & bolts, looking at low level coding details. I am going to have another that is going to look at the architecture and probably a few others that talk about some additional concerns that I have.

Let us start by looking at the code via some tools. Simian reports:

And that is with a similarity threshold of 6 lines. I usually run it with three; if we try that, we get:

Found 5138 duplicate lines in 873 blocks in 67 files

Next, the most important code metric to me in most cases: Cyclomatic Complexity. Two methods literally jump out of the solution and beg for mercy.

- HomeController.Discovery with CC index of 35(!)

- GroupController.Profile with CC index of 22

I am going to show them both in all their glory, and let you be the judge of them:

    public ActionResult Discovery(string query)
    {
        query = query.Trim();
        query = Server.HtmlEncode(query);

        List<PresentationSummary> presentationsAll = null;
        List<PresentationSummary> presentationsMostPopular = null;
        List<PresentationSummary> presentationsMostViewed = null;
        List<PresentationSummary> presentationsMostDownload = null;
        List<PresentationSummary> presentationsNew = null;

        List<UserSummary> activeusers = null;
        List<UserSummary> allusers = null;

        List<Group> activegroups = null;
        List<Group> allgroups = null;

        int iNewMemberPageCount = 0;
        int iNewGroupPageCount = 0;

        int ipresentationsAll = 0;
        int ipresentationsMostPopular = 0;
        int ipresentationsMostViewed = 0;
        int ipresentationsMostDownload = 0;
        int ipresentationsNew = 0;

        UserSummary user = _userService.GetUserByName(query);
        if (user != null)
        {
            presentationsAll = _userService.GetAuthoredPresentations(user.UserName);
            presentationsMostPopular = presentationsAll.OrderByDescending(p => p.Favorites).ToList();
            presentationsMostViewed = presentationsAll.Where(p => p.Views > 0).OrderByDescending(p => p.Views).ToList();
            presentationsMostDownload = presentationsAll.Where(p => p.Downloads > 0).OrderByDescending(p => p.Downloads).ToList();
            presentationsNew = presentationsAll.OrderByDescending(p => p.InsertedDate).ToList();

            ipresentationsAll = decimal.Divide(presentationsAll.Count, 10).ToInt();
            ipresentationsMostPopular = decimal.Divide(presentationsMostPopular.Count, 10).ToInt();
            ipresentationsMostViewed = decimal.Divide(presentationsMostViewed.Count, 10).ToInt();
            ipresentationsMostDownload = decimal.Divide(presentationsMostDownload.Count, 10).ToInt();
            ipresentationsNew = decimal.Divide(presentationsNew.Count, 10).ToInt();

            activeusers = new List<UserSummary> { user };
            allusers = new List<UserSummary> { user };
            iNewMemberPageCount = decimal.Divide(allusers.Count, 8).ToInt();

            allgroups = _userService.GetGroups(user.UserName);
            activegroups = allgroups.OrderByDescending(g => g.InsertedDate).ToList();
            iNewGroupPageCount = decimal.Divide(allgroups.Count, 8).ToInt();
        }
        else
        {
            Group group = _groupService.GetGroupByName(query);
            if (group != null)
            {
                presentationsAll = _groupService.GetPresentations(group.GroupName);
                presentationsMostPopular = presentationsAll.OrderByDescending(p => p.Favorites).ToList();
                presentationsMostViewed = presentationsAll.Where(p => p.Views > 0).OrderByDescending(p => p.Views).ToList();
                presentationsMostDownload = presentationsAll.Where(p => p.Downloads > 0).OrderByDescending(p => p.Downloads).ToList();
                presentationsNew = presentationsAll.OrderByDescending(p => p.InsertedDate).ToList();

                ipresentationsAll = decimal.Divide(presentationsAll.Count, 10).ToInt();
                ipresentationsMostPopular = decimal.Divide(presentationsMostPopular.Count, 10).ToInt();
                ipresentationsMostViewed = decimal.Divide(presentationsMostViewed.Count, 10).ToInt();
                ipresentationsMostDownload = decimal.Divide(presentationsMostDownload.Count, 10).ToInt();
                ipresentationsNew = decimal.Divide(presentationsNew.Count, 10).ToInt();

                allusers = _groupService.GetMembers(group.GroupName);
                activeusers = allusers.OrderByDescending(u => u.DateOfJoining).ToList();
                iNewMemberPageCount = decimal.Divide(allusers.Count, 8).ToInt();

                allgroups = new List<Group> { group };
                activegroups = new List<Group> { group };
                iNewGroupPageCount = decimal.Divide(allgroups.Count, 8).ToInt();
            }
            else
            {
                presentationsAll = _presentationService.GetAllPresentationsByKewordTimeLine(query, "Day", "0", 10, 1);
                presentationsMostPopular = _presentationService.GetMostPopularPresentationsByKewordTimeLine(query, "Day", "0", 10, 1);
                presentationsMostViewed = _presentationService.GetMostViewedPresentationsByKewordTimeLine(query, "Day", "0", 10, 1);
                presentationsMostDownload = _presentationService.GetMostDownloadedPresentationsByKewordTimeLine(query, "Day", "0", 10, 1);
                presentationsNew = _presentationService.GetNewPresentations(query, "Day", "0", 10, 1);

                ipresentationsAll = decimal.Divide(_presentationService.GetAllPresentationsByKewordTimeLine(query, "Day", "0").Count, 10).ToInt();
                ipresentationsMostPopular = decimal.Divide(_presentationService.GetMostPopularPresentationsByKewordTimeLine(query, "Day", "0").Count, 10).ToInt();
                ipresentationsMostViewed = decimal.Divide(_presentationService.GetMostViewedPresentationsByKewordTimeLine(query, "Day", "0").Count, 10).ToInt();
                ipresentationsMostDownload = decimal.Divide(_presentationService.GetMostDownloadedPresentationsByKewordTimeLine(query, "Day", "0").Count, 10).ToInt();
                ipresentationsNew = decimal.Divide(_presentationService.GetNewPresentations(query, "Day", "0").Count, 10).ToInt();

                activeusers = _userService.GetMostActiveUsers(query, 8, 1);
                allusers = _userService.GetAllUsers(query, 8, 1);
                iNewMemberPageCount = decimal.Divide(_userService.GetMostActiveUsers(query).Count,8).ToInt();

                activegroups = _groupService.GetMostActiveGroupByKeyword(query, 8, 1);
                allgroups = _groupService.GetAllGroupByKeyword(query, 8, 1);
                iNewGroupPageCount = decimal.Divide(_groupService.GetMostActiveGroupByKeyword(query).Count, 8).ToInt();
            }
        }

        ViewData.Add("membersList-mostactive", activeusers);
        ViewData.Add("membersList-all", allusers);
        ViewData.Add("groupsList-mostactive", activegroups);
        ViewData.Add("groupsList-all", allgroups);

        ViewData.Add("presentations-all",presentationsAll);
        ViewData.Add("presentations-mostpopular",presentationsMostPopular);
        ViewData.Add("presentations-mostviewed",presentationsMostViewed);
        ViewData.Add("presentations-mostdownload",presentationsMostDownload);
        ViewData.Add("presentations-new",presentationsNew);

        ViewData.Add("Query", query);
        //ViewData.Add("Presentations", presentations);

        ViewData.Add("members-totalcount", iNewMemberPageCount);
        ViewData.Add("groups-totalcount", iNewGroupPageCount);

        ViewData.Add("presentations-alltotalcount", ipresentationsAll);
        ViewData.Add("presentations-mostpopulartotalcount", ipresentationsMostPopular);
        ViewData.Add("presentations-mostviewedtotalcount", ipresentationsMostViewed);
        ViewData.Add("presentations-mostdownloadtotalcount", ipresentationsMostDownload);
        ViewData.Add("presentations-newtotalcount", ipresentationsNew);

        return View();
    }

This is… a very busy method, I must say. But in a way, the Profile method is much worse:

    [AcceptVerbs(HttpVerbs.Post)]
    public ActionResult Profile(string gname, string type, string section, string subSection, string page)
    {
        if (string.IsNullOrEmpty(gname))
            return new ContentResult { Content = "" };

        if (type != "widget")
            return new ContentResult { Content = "" };

        Group group = null;
        try
        {
            group = _groupService.GetGroupByName(gname);
        }
        catch (Exception)
        {
            return new ContentResult { Content = "" };
        }

        if (group == null)
        {
            return new ContentResult { Content = "" };
        }

        string groupName = group.GroupName;
        AddUserLevelToViewData(groupName);

        int pageNo = 1;
        Int32.TryParse(page, out pageNo);
        if (pageNo == 0)
            pageNo = 1;

        if (section == "div-GroupPresentations")
        {
            List<PresentationSummary> presentations = null;
            switch (subSection)
            {
                case "div-GroupPresentations-RecentltAdded":
                    presentations = _groupService.GetRecentlyAddedPresentations(groupName, 5, pageNo);
                    break;
                case "div-GroupPresentations-MostViewed":
                    presentations = _groupService.GetMostViewedPresentations(groupName, 5, pageNo);
                    break;
                case "div-GroupPresentations-MostDownloaded":
                    presentations = _groupService.GetMostDownloadedPresentations(groupName, 5, pageNo);
                    break;
                case "div-GroupPresentations-All":
                    presentations = _groupService.GetPresentations(groupName, 5, pageNo);
                    break;
            }
            return View("PresentationsList", presentations);
        }
        else if (section == "div-GroupWall")
        {
            switch (subSection)
            {
                case "div-GroupWall-Messages":
                    ViewData["GroupMessages"] = _groupService.GetMessages(groupName, 5, pageNo);
                    return View("GroupMessageList", ViewData["GroupMessages"]);
                case "div-GroupWall-MemberRequests":
                    ViewData["GroupJoiningRequests"] = _groupService.GetGroupJoiningRequests(groupName, 5, pageNo);
                    return View("GroupJoiningRequestList", ViewData["GroupJoiningRequests"]);
            }
        }
        else if (section == "div-GroupInfoExtended")
        {
            switch (subSection)
            {
                case "div-GroupInfoExtended-GroupMembers":
                    ViewData["GroupMembers"] = _groupService.GetMembers(groupName, 4, pageNo);
                    return View("MembersList", ViewData["GroupMembers"]);
            }
        }

        return new ContentResult { Content = "" };
    }

Just look at the code. I thought that the whole point of MVC was to separate the logic from the view. Having the view strongly tied to the controller output is fine by me, but having the controller strongly tied to the HTML format of the page? That isn’t right.
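One way to loosen that coupling, as a rough sketch rather than anything Kobe actually does: have the view post a semantic filter name and let the controller translate it into a query, so that renaming a div no longer means editing a switch statement. The `PresentationFilter` enum, `PresentationFilterParser`, and `GroupPresentationQueries` below are hypothetical names. The `IGroupService` methods mirror the calls made in the Profile action, and `Enum.TryParse` assumes .NET 4 or later.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-ins for the Kobe types; only their shape matters here.
public class PresentationSummary { }

public interface IGroupService
{
    List<PresentationSummary> GetRecentlyAddedPresentations(string groupName, int pageSize, int pageNo);
    List<PresentationSummary> GetMostViewedPresentations(string groupName, int pageSize, int pageNo);
    List<PresentationSummary> GetMostDownloadedPresentations(string groupName, int pageSize, int pageNo);
    List<PresentationSummary> GetPresentations(string groupName, int pageSize, int pageNo);
}

// Semantic names (an assumption) instead of "div-GroupPresentations-*" element ids.
public enum PresentationFilter { All, RecentlyAdded, MostViewed, MostDownloaded }

public static class PresentationFilterParser
{
    // Case-insensitive; unknown names, out-of-range numbers, and null fall back to All.
    public static PresentationFilter Parse(string value)
    {
        PresentationFilter filter;
        bool ok = Enum.TryParse(value, true, out filter)
                  && Enum.IsDefined(typeof(PresentationFilter), filter);
        return ok ? filter : PresentationFilter.All;
    }
}

public class GroupPresentationQueries
{
    private const int PageSize = 5;

    // One table instead of a switch: adding a filter means adding one entry.
    private readonly Dictionary<PresentationFilter, Func<string, int, List<PresentationSummary>>> _queries;

    public GroupPresentationQueries(IGroupService service)
    {
        _queries = new Dictionary<PresentationFilter, Func<string, int, List<PresentationSummary>>>
        {
            { PresentationFilter.All, (g, p) => service.GetPresentations(g, PageSize, p) },
            { PresentationFilter.RecentlyAdded, (g, p) => service.GetRecentlyAddedPresentations(g, PageSize, p) },
            { PresentationFilter.MostViewed, (g, p) => service.GetMostViewedPresentations(g, PageSize, p) },
            { PresentationFilter.MostDownloaded, (g, p) => service.GetMostDownloadedPresentations(g, PageSize, p) },
        };
    }

    public List<PresentationSummary> Find(string groupName, PresentationFilter filter, int pageNo)
    {
        return _queries[filter](groupName, pageNo);
    }
}
```

Falling back to `All` for unknown input is a design choice of this sketch; the original action passes a null list to the view in that case.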

Another thing that isn’t right is HomeController.Index():

    public ActionResult Index()
    {
        GetFeaturedPresentations(); //*** Dummy call the the Database to activate the Connection.

        List<PresentationSummary> featured = _presentationService.GetFeaturedPresentations();
        List<PresentationSummary> beingViewed = _presentationService.GetPresentationRecentlyViewed();
        List<PresentationSummary> mostDownloaded = _presentationService.GetMostDownloadedPresentation();
        PresentationSummary presentationOfDay = _presentationService.GetPresentationOfDay();

        ViewData.Add("FeaturedPresentations", featured.ToArray());
        ViewData.Add("RecentlyViewedPresentations", beingViewed.ToArray());
        ViewData.Add("MostDownloadedPresentations", mostDownloaded.ToArray());
        ViewData.Add("presentationsOfDay", presentationOfDay);
        ViewData["Tags"] = _presentationService.GetPopularTags();

        return View();
    }

Notice the first call?

    private void GetFeaturedPresentations()
    {
        try
        {
            //*** Dummy call to the Presentation Service to get the Featured presentations,
            //*** this call is place because, an exception thrown from the Data layered on first hit to the DB (failed to open DB)
            //*** and the second hit to the DB gets success.
            _presentationService.GetFeaturedPresentations();
        }
        catch (Exception)
        {
            /*do nothing with this exception*/
        }
    }

I really like it when you work around a bug instead of actually fixing it.

Moving on, let us look at the service layer. Most of it looks like this:

    public ADs GetAdById(string adId)
    {
        try
        {
            string key = "Ads-" + adId;
            ADs data = cacheService.Get<ADs>(key);
            if (data == null)
            {
                data = provider.GetAdById(adId.ToGuid());
                if (data != null && data.Image != null)
                {
                    cacheService.Add(key, data as Object, null, DateTime.MaxValue, new TimeSpan(0, 10, 0), System.Web.Caching.CacheItemPriority.Normal, null);
                }
            }
            return data;
        }
        catch (Exception ex)
        {
            bool rethrow = ExceptionPolicy.HandleException(ex, "Service Policy");
            if (rethrow && WebOperationContext.Current == null)
            {
                throw;
            }
            return null;
        }
    }

I am not joking about all of them looking alike, by the way. It has obviously been cut & pasted a lot.

Nitpick, “data as Object” – that is not something you often see. But this is even better (from CacheService):

I like how we have a cacheService but we coupled its interface with System.Web.Caching, or the fact that most of this code is just a very long winded way of calling GetAdById.
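To make the point concrete, here is a minimal sketch of a cache abstraction that keeps System.Web.Caching out of its signature and folds the check-load-store dance into a single call. `ICache`, `InMemoryCache`, and `GetOrAdd` are hypothetical names, not Kobe's API, and the in-memory implementation exists only to keep the example self-contained.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical abstraction: callers only see a key, a sliding expiration, and a loader.
public interface ICache
{
    T GetOrAdd<T>(string key, TimeSpan slidingExpiration, Func<T> load) where T : class;
}

// Illustration only: not thread-safe, and expired entries are replaced lazily on access.
public class InMemoryCache : ICache
{
    private class Entry
    {
        public object Value;
        public DateTime LastAccessUtc;
        public TimeSpan Sliding;
    }

    private readonly Dictionary<string, Entry> _entries = new Dictionary<string, Entry>();

    public T GetOrAdd<T>(string key, TimeSpan slidingExpiration, Func<T> load) where T : class
    {
        DateTime now = DateTime.UtcNow;
        Entry entry;
        if (_entries.TryGetValue(key, out entry) && now - entry.LastAccessUtc < entry.Sliding)
        {
            entry.LastAccessUtc = now; // a hit pushes the expiration window forward
            return (T)entry.Value;
        }

        T value = load();
        if (value != null) // like the original, a miss (null) is not cached
        {
            _entries[key] = new Entry { Value = value, LastAccessUtc = now, Sliding = slidingExpiration };
        }
        return value;
    }
}
```

With something along these lines, the body of GetAdById could shrink to roughly `cache.GetOrAdd("Ads-" + adId, TimeSpan.FromMinutes(10), () => provider.GetAdById(adId.ToGuid()))`. The original also refuses to cache ads without an image; this sketch leaves that check out.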

But wait, I spared you the method documentation, which is a real masterpiece:

    /// <summary>
    /// Returns Advertisment.
    /// </summary>
    /// <param name="adId"> GUID of an Advertisment</param>
    /// <returns>Ads</returns>
    public ADs GetAdById(string adId)

Yes, the adId is a string which is a guid. We are so lucky to work with VARIANT again, right?

Let us take another method, just to see what is going on in a typical method inside the project. I intentionally avoid the ones that we already looked at. I took a peek at CommunityController and found the Index method:

    [AcceptVerbs(HttpVerbs.Post)]
    public ActionResult Index(string type, string section, string subSection, string page)
    {
        if (type != "widget")
            return new ContentResult { Content = "" };

        int pageNo = 1;
        Int32.TryParse(page, out pageNo);
        if (pageNo == 0)
            pageNo = 1;

        if (section == "members")
        {
            List<UserSummary> users = null;
            switch (subSection)
            {
                case "members-new":
                    users = _communityService.GetNewUsers(8, pageNo);
                    break;
                case "members-mostactive":
                    users = _communityService.GetMostActiveUsers(8, pageNo);
                    break;
                case "members-all":
                    users = _communityService.GetAllUsers(8, pageNo);
                    break;
                default:
                    users = _communityService.GetAllUsers(8, pageNo);
                    break;
            }
            return View("MembersList", users);
        }
        else if (section == "groups")
        {
            List<Group> groups = null;
            switch (subSection)
            {
                case "groups-new":
                    groups = _communityService.GetNewGroups(8, pageNo);
                    break;
                case "groups-mostactive":
                    groups = _communityService.GetMostActiveGroups(8, pageNo);
                    break;
                case "groups-all":
                    groups = _communityService.GetAllGroups(8, pageNo);
                    break;
                default:
                    groups = _communityService.GetAllGroups(8, pageNo);
                    break;
            }
            return View("GroupsList", groups);
        }
        else if (section == "favourites")
        {
            List<PresentationSummary> favouritePresentation = _communityService.GetCommunityFavorite(10, pageNo);
            return View("PresentationsView", favouritePresentation.ToArray());
        }

        return new ContentResult { Content = "" };
    }

Let me see how many things I can find in a cursory examination:

- Hard coding galore

- Long method

- Complex method

- Controller method returns several different views
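Some of that repetition is purely mechanical. Both Profile and Index repeat the same three lines of page parsing, and the initial `pageNo = 1` in them is dead code, because `Int32.TryParse` overwrites its out parameter with 0 on failure (hence the `== 0` check). A minimal sketch of pulling that into one place, where `Paging` and `ParsePage` are hypothetical names and not part of Kobe:

```csharp
using System;

public static class Paging
{
    // Returns a 1-based page number.
    // Null, non-numeric, zero, or negative input all yield page 1.
    public static int ParsePage(string page)
    {
        int pageNo;
        return int.TryParse(page, out pageNo) && pageNo > 0 ? pageNo : 1;
    }
}
```

Unlike the original, this also clamps negative page numbers to 1. That is an assumption about the intent, since the original lets them through to the service layer.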

And note that I am still not even trying for the architectural concepts or code quality metrics. That I’m going to leave to another post.

Frankly, I am seeing way too many bad things in the code to go over all of them. I am going to stop with a goodie, though.

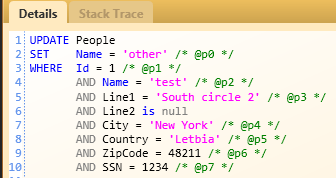

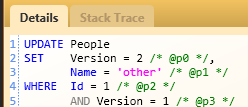

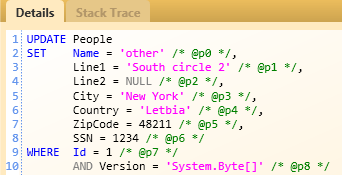

Let us explore GroupRepository.GetGroup, shall we?

    private Group GetGroup(Guid groupId, KobeEntities Context)
    {
        try
        {
            Group group = Context.GroupSet
                .Where(g => g.GroupId == groupId)
                .FirstOrDefault();

            if (group == null)
                throw new DataException("Invalid Group Id", null);

            return group;
        }
        catch (Exception ex)
        {
            bool rethrow = ExceptionPolicy.HandleException(ex, "Data Policy");
            if (rethrow)
            {
                throw;
            }
            return null;
        }
    }

On the face of it, except for the repeated stupid error handling, there doesn’t seem to be anything wrong here, right?

Take note of the different parameter casing on GetGroup, though. Why is the KobeEntities parameter, Context, PascalCase? Well, that is because there is also a property called Context on the GroupRepository that you might use by accident. So, what is this Context parameter all about? GetGroup is a private method, so who is calling it?

Here is one such callsite:

    public void AddGroupInterests(Guid groupId, string[] interests, Guid userId)
    {
        try
        {
            KobeEntities _context = Context;
            Group group = GetGroup(groupId, _context);
            User user = GetUser(userId, _context);

So, we take the Context property, put it in a _context local variable. Then we pass it to GetGroup, which uses it.

I must say that I am at a loss to understand what was going on in the mind of whoever wrote it. Was he trying to optimize the number of times we access the property?
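For comparison, here is a sketch of what the lookup might look like if it simply used the property. It assumes that Context hands back the same instance on every access, which I have not verified. The `Group` and `KobeEntities` classes below are simplified stand-ins, GetGroup is public only so the sketch can be exercised, and InvalidOperationException replaces DataException to keep it self-contained.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Simplified stand-ins for the real entity classes.
public class Group { public Guid GroupId { get; set; } }

public class KobeEntities
{
    public List<Group> GroupSet = new List<Group>();
}

public class GroupRepository
{
    private readonly KobeEntities _context;

    public GroupRepository(KobeEntities context)
    {
        _context = context;
    }

    // Assumption: the property always returns the same instance.
    protected KobeEntities Context
    {
        get { return _context; }
    }

    // No context parameter: the method reads the property directly,
    // so there is nothing to shadow and nothing to pass around.
    public Group GetGroup(Guid groupId)
    {
        Group group = Context.GroupSet.FirstOrDefault(g => g.GroupId == groupId);
        if (group == null)
            throw new InvalidOperationException("Invalid Group Id");
        return group;
    }
}
```

If the property does create a new context on each access, that would explain the local variable, but then the property is the thing to fix.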

As I said, I am currently writing a few other posts about Kobe. This one was just to look at the code itself, so that we have an impression of its quality.

I am… not impressed.