The question came up on the mailing list, and I thought it would be a good thing to post about.

How do you handle reference data in RavenDB? The typical example in most applications would be something like the list of states, their names and abbreviations. You might want to refer to a state by its abbreviation, but still allow for something like this:

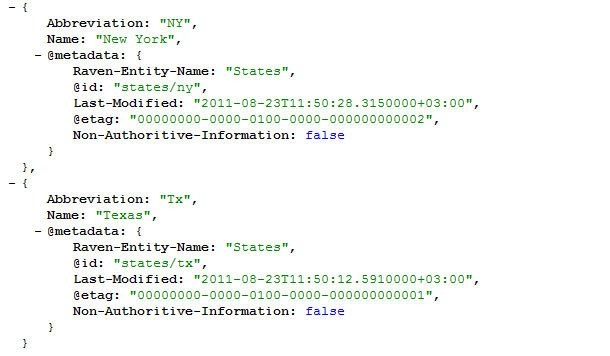

How do you handle this in RavenDB? Using a relational database, you would probably have a separate table just for states, and it is certainly possible to create something similar to that in RavenDB:

The problem with this approach is that it is a very wrong-headed one for a document database. In a relational database, you have no choice but to treat anything that has many items as a table with many rows. In a document database, we have much better alternatives.

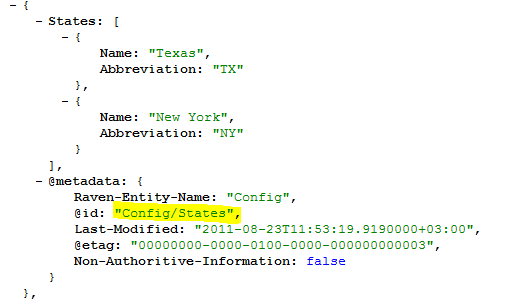

Instead of treating each state as an individual document, we can treat them as a whole value, like this:

In the sample, I included just a couple of states, but I think that you get the idea. Note the name of this document: “Config/States”.
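To make the shape concrete, here is a minimal sketch in Python, with a plain dictionary standing in for the document. The field names (`States`, `Abbreviation`, `Name`) and the helper `state_name` are assumptions made for illustration, not RavenDB API; the real document is whatever JSON you choose to store under the `Config/States` id.

```python
import json

# Hypothetical shape for the single "Config/States" document.
# Field names are assumptions for illustration only.
config_states = {
    "Id": "Config/States",
    "States": [
        {"Abbreviation": "CA", "Name": "California"},
        {"Abbreviation": "NY", "Name": "New York"},
    ],
}


def state_name(doc, abbreviation):
    """Return the full state name for an abbreviation, or None if unknown."""
    for state in doc["States"]:
        if state["Abbreviation"] == abbreviation:
            return state["Name"]
    return None


if __name__ == "__main__":
    # The whole list travels as one JSON value.
    print(json.dumps(config_states, indent=2))
    print(state_name(config_states, "CA"))
```

Entities elsewhere in the application would store only the abbreviation, and the display name is resolved against this one document when needed.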

What are the benefits of this approach?

- We only have to retrieve a single document.

- We are almost always going to treat the states as a list, never as individual items.

- There is never a transactional boundary for modifying just a single state.

In fact, I would usually say that this isn’t good enough. I would try to centralize any and all of the reference data that we use in the application into a single document. There is a high likelihood that it would be a fairly small document even after doing so, and it would be insanely easy to cache.

![image_thumb[2] image_thumb[2]](http://ayende.com/blog/Images/Windows-Live-Writer/Challenge-Recent-Comments-with-Future-Po_17DA/image_thumb%5B2%5D_thumb.png)