Another example, one that is naturally asynchronous, is the way most web sites create a new user. This is a two-stage process. First, the user fills in their details and we do some initial validation on them (most often, checking that the user name is not already taken).

Then we send an email to the user and ask them to validate their email address. Only when they validate their account do we create a real user and let them log in to the system. If a certain period of time elapses without validation (usually 24 hours to a few days), we need to undo everything we did and make that user name available again.

When we try to solve this with messaging, we run into an interesting problem. Creating the user is a multi-message process in which we have to maintain the current state. Not only that, but we also need to deal with the timing issues built into this problem.

It gets a bit more interesting when you consider the cohesiveness of the problem. Let us consider a typical implementation.

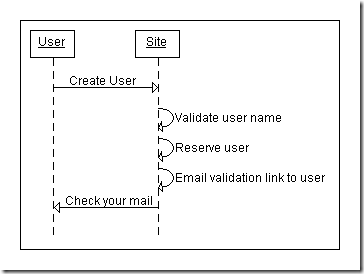

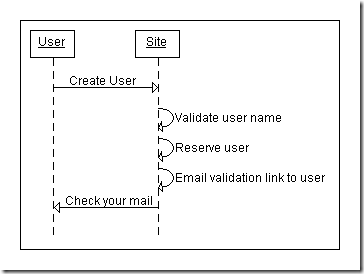

First, we have the issue of the CreateUser page:

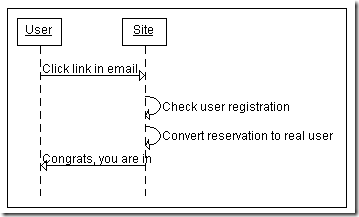

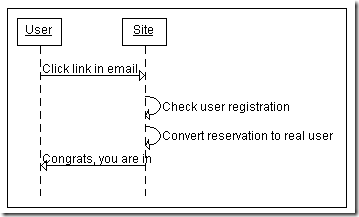

Then we have the process of actually validating the user:

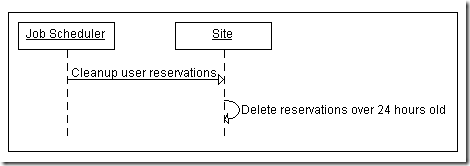

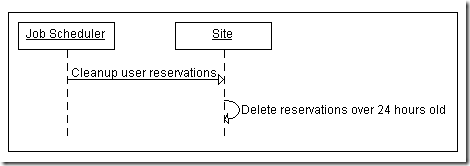

And, lest we forget, we have a scheduled job to remove expired user account reservations:

We have the logic for this single feature scattered across three different places, which execute at different times and likely reside in different projects.
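To make the third of those pieces concrete, here is a minimal sketch of what such a cleanup job might look like, in Python for brevity. The `Reservation` type, the in-memory dictionary standing in for the database, and the 24-hour `RESERVATION_TTL` are all assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Assumed window; the text only says "24 hours to a few days".
RESERVATION_TTL = timedelta(hours=24)


@dataclass
class Reservation:
    username: str
    email: str
    created_at: datetime
    verified: bool = False


def purge_expired(reservations, now):
    """Delete unverified reservations older than the TTL.

    `reservations` maps user name -> Reservation. Returns the freed names.
    """
    expired = [
        name
        for name, r in reservations.items()
        if not r.verified and now - r.created_at >= RESERVATION_TTL
    ]
    for name in expired:
        del reservations[name]
    return expired
```

Note that nothing in this job knows about the page that created the reservation or the page that verifies it, which is exactly the scattering described above.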

Not only that, but if we want to make the experience pleasant for the user, we have a lot to deal with. Sending an email is slow. You don’t want to make it part of a synchronous process, if only because of the latency it adds before the user sees a response. It is also an unreliable process. And we haven’t even started to discuss error handling yet.
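One common way to take the email off the request path is to queue it and let a separate worker do the sending. The sketch below is only an illustration under simplifying assumptions: the queue is an in-memory `deque` rather than anything durable, `send` is whatever callable actually talks to the mail server, and `MAX_ATTEMPTS` is an arbitrary retry budget.

```python
from collections import deque

MAX_ATTEMPTS = 3  # hypothetical retry budget


def handle_create_user(outbox, username, email):
    """Web-request side: record the intent to send mail and return at once."""
    outbox.append({"to": email, "username": username, "attempts": 0})
    return "Check your inbox to finish signing up."


def drain(outbox, send):
    """Worker side: try each queued message once per pass.

    `send` is any callable that raises on failure. Failed messages are
    re-queued until MAX_ATTEMPTS is reached, then returned to the caller
    so they can be logged or inspected.
    """
    dead = []
    for _ in range(len(outbox)):
        msg = outbox.popleft()
        try:
            send(msg["to"], msg["username"])
        except Exception:
            msg["attempts"] += 1
            if msg["attempts"] < MAX_ATTEMPTS:
                outbox.append(msg)
            else:
                dead.append(msg)
    return dead
```

The request handler never waits on the mail server, and a failed send becomes a retry instead of an error page.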

For that matter, sending an email is not something that you should just new up an SmtpClient for. You have to make sure that no one can use your CreateUser page to bomb someone else’s mailbox, you need to keep track of emails for regulatory reasons, you need to detect invalid addresses (from the SMTP response), etc.
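As an illustration of the first of those concerns, a per-address limit on verification emails could be sketched like this. The class name, the default limit, and the window are all made up for the example, and a real system would keep this state somewhere shared rather than in process memory.

```python
from collections import defaultdict, deque


class VerificationMailLimiter:
    """Allow at most `limit` verification mails per address per `window` seconds."""

    def __init__(self, limit=3, window=3600.0):
        self.limit = limit
        self.window = window
        self._sent = defaultdict(deque)  # address -> timestamps of recent sends

    def allow(self, address, now):
        """Return True and record the send if `address` is under its limit."""
        key = address.strip().lower()
        recent = self._sent[key]
        # Forget sends that have aged out of the window.
        while recent and now - recent[0] >= self.window:
            recent.popleft()
        if len(recent) >= self.limit:
            return False
        recent.append(now)
        return True
```

The caller passes in the current time (for example `time.monotonic()`), which keeps the limiter easy to test.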

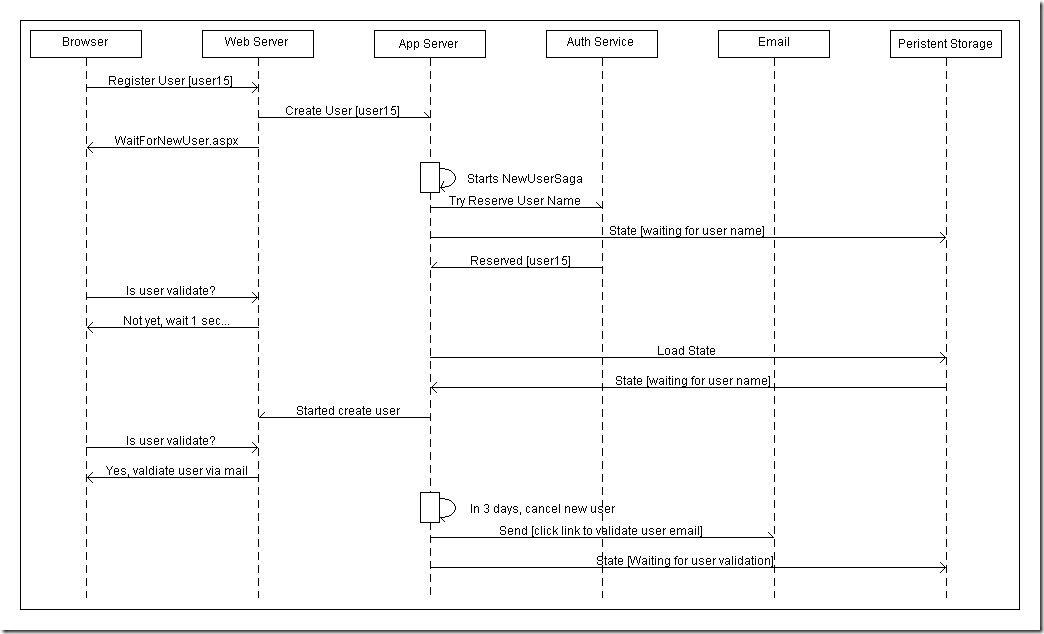

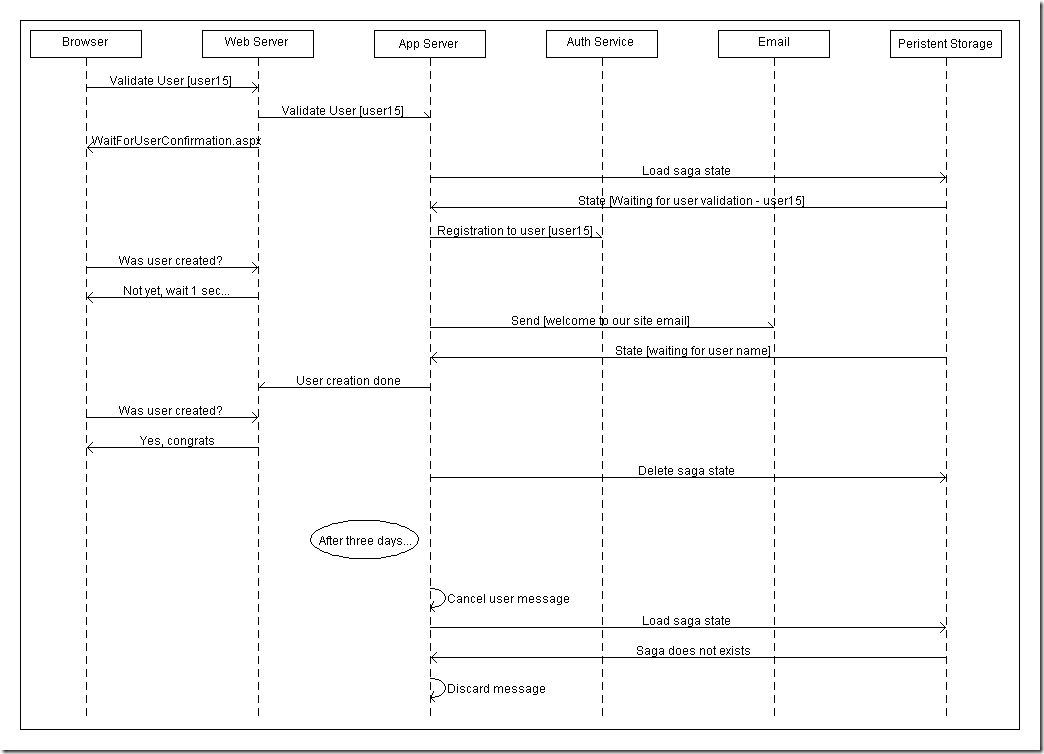

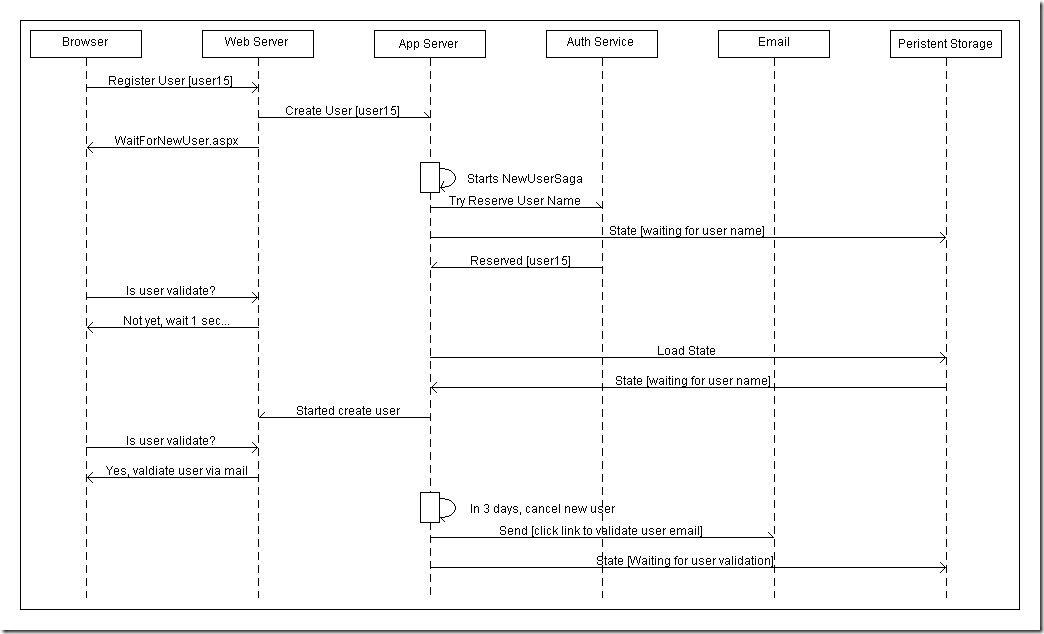

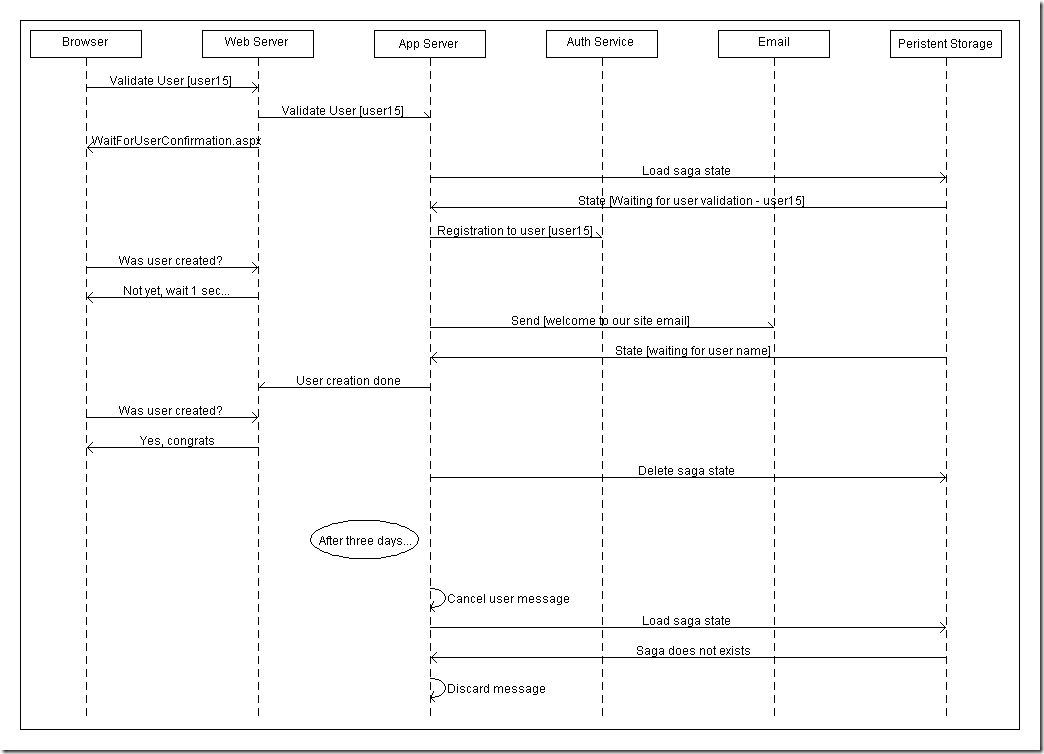

Let us see how we can do this with async messaging. First, we will tackle registering the user and sending an email to validate their address:

When the user clicks on the link in their email, we have the following set of interactions:

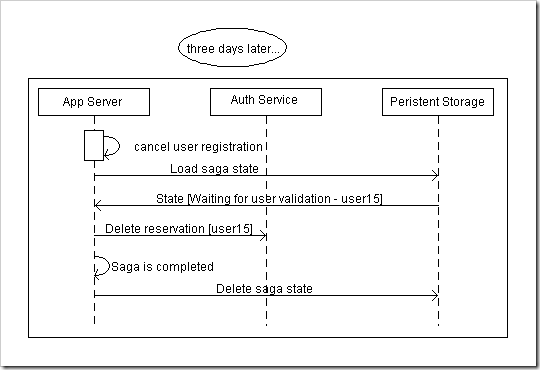

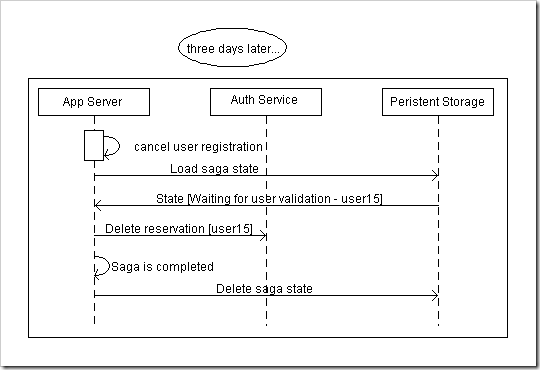

And, of course, we need to expire the reserved username:

In the diagrams, everything that happens in the App Server is happening inside the context of a single saga. This is a single class that manages all the logic relating to the creation of a new user. That is good in itself, but I gain a few other things from this approach.
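To make "a single class" concrete, here is a minimal sketch of what such a saga might look like, in Python for brevity. It is not the API of any particular messaging framework: the message names, the `outbox` list standing in for the bus, and the shared `taken_usernames` set standing in for the user store are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from datetime import timedelta
from enum import Enum


class State(Enum):
    NEW = "new"
    AWAITING_VERIFICATION = "awaiting_verification"
    COMPLETED = "completed"
    EXPIRED = "expired"


@dataclass
class CreateUserSaga:
    """One instance per registration attempt; `outbox` collects outgoing messages."""

    taken_usernames: set
    timeout: timedelta = timedelta(hours=24)  # assumed reservation window
    state: State = State.NEW
    username: str = ""
    email: str = ""
    outbox: list = field(default_factory=list)

    def on_register_user(self, username, email):
        if self.state is not State.NEW:
            return
        if username in self.taken_usernames:
            self.outbox.append(("UsernameRejected", username))
            return
        self.taken_usernames.add(username)  # reserve the name
        self.username, self.email = username, email
        self.state = State.AWAITING_VERIFICATION
        self.outbox.append(("SendVerificationEmail", email))
        self.outbox.append(("ScheduleTimeout", self.timeout))

    def on_email_verified(self):
        if self.state is State.AWAITING_VERIFICATION:
            self.state = State.COMPLETED
            self.outbox.append(("UserCreated", self.username))

    def on_timeout(self):
        # A timeout that arrives after verification is simply ignored.
        if self.state is State.AWAITING_VERIFICATION:
            self.taken_usernames.discard(self.username)  # free the name
            self.state = State.EXPIRED
            self.outbox.append(("ReservationExpired", self.username))
```

Registration, verification and expiry all sit next to each other, and the state field makes it obvious which messages are still meaningful at any point.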

Robustness in the face of errors and fast response times are one thing, of course, but there are other benefits that I have not touched on yet. In the sync example, I showed a very simplistic approach to handling the behavior, where everything happens inline. I did that because, frankly, I didn’t have the time to sit and draw all the pretty boxes for the sync example.

In the real world, we would want pretty much the same separation in the sync example as well. And now we run into even more “interesting” problems, especially if we started out with everything running locally. The sync model makes it really easy to ignore the fallacies of distributed computing. The async model puts them in your face and makes you deal with them explicitly.

The level of complexity that you have to deal with in async messaging remains more or less constant when you bring in the issues of scalability, fault tolerance and distribution. It most certainly does not stay the same when you have a sync model.

Another advantage of this approach is that we are using the actor model, which makes it very explicit who is doing what and why, and allows us to work on each of those parts independently.

The end result is a system composed of easily disassembled parts. It is easy to understand what is going on because the interactions between the parts of the system are explicit, understood and not easily bypassed.