I ran across the following on Twitter:

That resonated very strongly with me, but from the other side. I have talked about this quite a lot in the past: you should design your system so it can adapt more easily to changes in the business process.

About 15 years ago I was tasked with building a system for scheduling and managing at-home nursing aid. One of the key reasons for the system I was building was that management wanted to enforce their vision of how things should be on the organization, and had chosen to do that by ensuring the new system would only allow things to happen That Way.

To my knowledge, they spent over three years speccing the system, but after looking at what we delivered (according to the spec, mind you), the people who were supposed to be using it revolted. I ended up working closely with a couple of employees who were supervisors in a local branch and would actually be using the system day in and day out. That project and others like it have taught me a lot about how to design a system that enables rather than limits what you can do.

Let’s take a paper-based system. A client comes in and wants to order something. They have no last name (Cher, Madonna, etc). The person handling the order leaves that field empty and processes the order. In a computerized system, however, that is going to fail validation and require you to reject the order. The person handling the order? There is nothing they can do about it: “the system won’t let me”. Admittedly, this is the simplest case that I can think of, but there are many cases where you have to jump through illogical hoops to satisfy inflexible rules in the system (leave a comment with the most egregious example you have run into, please).

If you approach this properly, there is a relatively simple solution: implement human-level decision making in the process. You can do that by developing a human-level AI or by grabbing a human. Let’s take a simple example from the past few months.

We just had the launch of RavenDB Cloud, during which we focused primarily on the backend operations: getting everything set up properly, monitoring, etc. At the same time, I had the sales team talking to customers. One of the things that kept popping up is that they wanted and needed a pretty flexible pricing system. For example, right now we have a pricing model for: On Demand, Yearly Contract and Upfront Yearly Contract. We are talking to customers that want 3 and 5 year contracts, but that isn’t implemented in the system. I have customers that want to pay by Credit Card (the main supported way), but others can only pay via a Purchase Order and some (still!) want to cut me a physical check.

There are a few ways you can handle something like this:

- Tell customers that it is a credit card or the highway. Simplest to implement, kinda harsh on the bottom line.

- Tell customers that of course we can do that, and then go to the dev team and press the Big Red Sales Button. I was the guy who responded to the Big Red Sales Button, and I hated that.

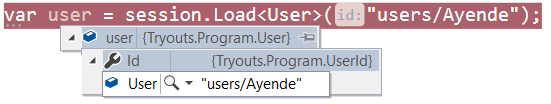

- Tell customers that we can handle their payment needs, go to the system and enable this:

When this is checked, the usual payment processing will run, but before we actually charge the user, we insert a human in the loop. This allows us to apply any specific customizations to the payment. For example, one scenario would be to zero the amount remaining to be charged and send a Purchase Order to the finance department for that particular customer.
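To make the idea concrete, here is a minimal sketch of how such a flag might gate the charge. This is not the actual RavenDB Cloud code; every name in it (`Account.manual_review_before_charge`, `process_payment`, `send_purchase_order`) is hypothetical, and a real system would most likely park the invoice in a queue for the reviewer rather than call them inline.

```python
from dataclasses import dataclass, field
from decimal import Decimal
from typing import Callable, Optional


@dataclass
class Invoice:
    customer: str
    amount_due: Decimal
    notes: list[str] = field(default_factory=list)


@dataclass
class Account:
    name: str
    # Hypothetical flag standing in for the checkbox described above.
    manual_review_before_charge: bool = False


# Assumption: a reviewer is any callable that may adjust the invoice.
Reviewer = Callable[[Invoice], Invoice]
# Assumption: charging is a callable taking (customer, amount).
Charger = Callable[[str, Decimal], None]


def process_payment(
    account: Account,
    invoice: Invoice,
    charge: Charger,
    reviewer: Optional[Reviewer] = None,
) -> Invoice:
    """Run the usual processing, pausing for a human when the flag is set."""
    if account.manual_review_before_charge:
        if reviewer is None:
            raise ValueError("manual review requested but no reviewer given")
        invoice = reviewer(invoice)
    # Only charge whatever is still due after the human had their say.
    if invoice.amount_due > 0:
        charge(invoice.customer, invoice.amount_due)
    return invoice


def send_purchase_order(invoice: Invoice) -> Invoice:
    """One possible human decision: bill via Purchase Order, charge nothing."""
    invoice.notes.append(f"PO sent to finance for {invoice.amount_due}")
    invoice.amount_due = Decimal("0")
    return invoice
```

The point of the shape is that the customization lives in the reviewer's decision, not in the payment code, so supporting a new payment arrangement does not require a new deployment.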

The key here is to take Jimmy’s notion of looking into the actual usage of the system, and not consider this to be a failing of the system. The system, when created, was probably pretty good, but it changed over time. And even if you re-create it perfectly today, it is going to change again tomorrow, necessitating the same workarounds. If you embrace this from the get go, you end up in a different place, because now you can employ smarts in the system without having to deploy a new version.

In another case, we had a way for the user to say: “I want to circumvent these checks and proceed anyway”. We accepted the change, even if it violated some rules of the system. We would raise a flag and have another person review and authorize those changes. If the original user wasn’t supposed to use this, there was a… discussion on that and changes implemented in the policy. No need to go through the software for such things, and infinitely more flexible.

We had a scenario where one of the integration points failed (it was down for a couple of weeks, IIRC), and it was a required step for processing an order. The users could skip this step, proceed normally and then come back later, when the 3rd party integration was available again, and do any fixes required. Compare that to an emergency change in production and then frantic fixes afterward when the system is up again. Instead, we have a baked-in process to handle outliers.
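Both of these (overriding a check and skipping a step) can be sketched in a few lines. This is an illustration only, not the code from that system: `Order`, `submit`, `complete_deferred` and the rule and step names are all made up, and I am assuming that rules are simple predicates and steps are simple callables.

```python
from dataclasses import dataclass, field
from typing import Callable, Collection


@dataclass
class Order:
    order_id: str
    data: dict
    # Rules the user chose to override; someone else reviews these later.
    review_flags: list[str] = field(default_factory=list)
    # Steps the user skipped and has to come back to.
    deferred_steps: list[str] = field(default_factory=list)


class RuleViolation(Exception):
    pass


Rule = Callable[[Order], bool]
Step = Callable[[Order], None]


def submit(
    order: Order,
    rules: dict[str, Rule],
    steps: dict[str, Step],
    override_rules: bool = False,
    skip_steps: Collection[str] = (),
) -> Order:
    """Process an order; record overrides and skips instead of failing."""
    for name, check in rules.items():
        if check(order):
            continue
        if not override_rules:
            raise RuleViolation(name)
        # Accept the change, but raise a flag for a second pair of eyes.
        order.review_flags.append(name)
    for name, step in steps.items():
        if name in skip_steps:
            order.deferred_steps.append(name)
        else:
            step(order)
    return order


def complete_deferred(order: Order, steps: dict[str, Step]) -> Order:
    """Run the skipped steps once the integration is available again."""
    while order.deferred_steps:
        steps[order.deferred_steps[0]](order)
        # Drop a step only after it succeeded, so failures stay deferred.
        order.deferred_steps.pop(0)
    return order
```

With something like this, Cher's order goes through with `override_rules=True` and a flag for review, and an order placed while the third party is down is submitted with that step in `skip_steps` and finished later with `complete_deferred`.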

In other words, don’t try to make your humans into computers. As any techie will tell you, computers will do what you told them to. Humans will do what you meant*.

* Well, usually.