I was talking to a developer recently and had a really interesting discussion around the notion of consistency. For simplicity’s sake, let’s imagine that we are talking about a game and we need to deal with awarding achievements.

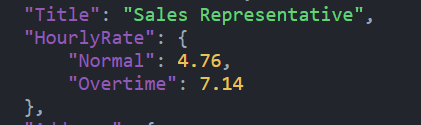

The story in question begins with a seemingly innocent business requirement:

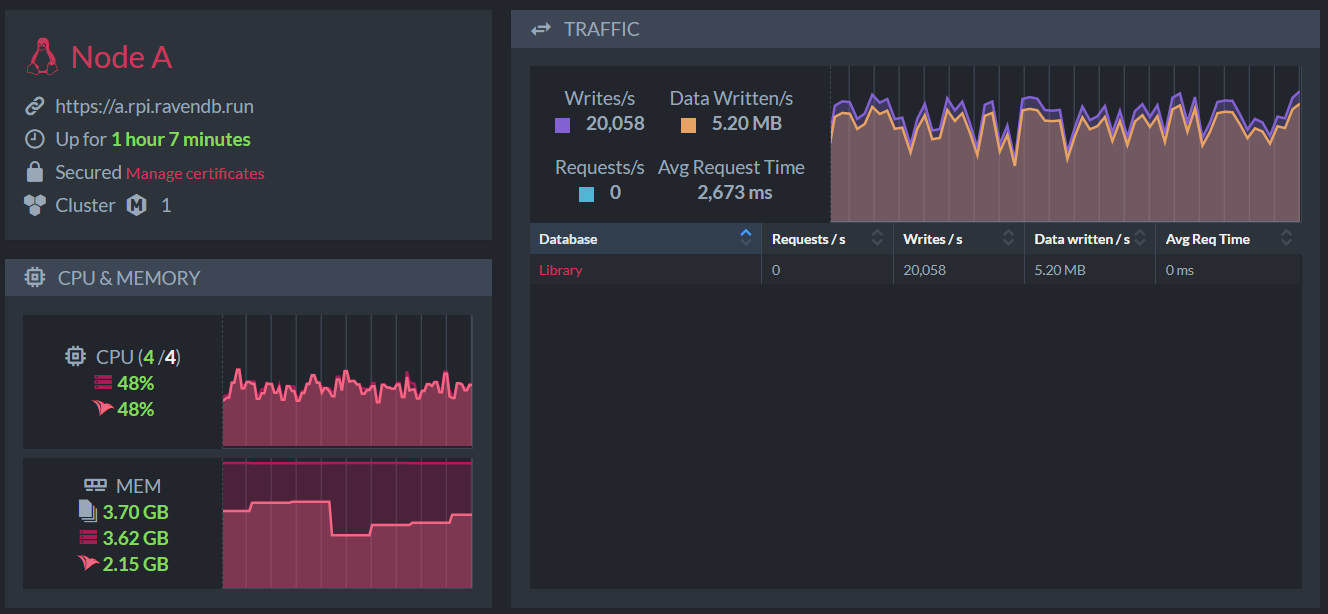

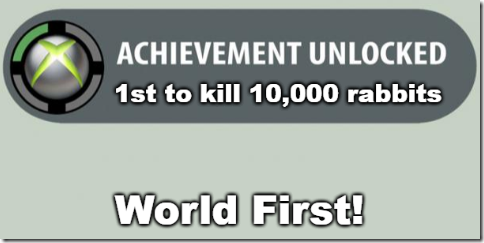

We want to announce a unique achievement in the game. The first player to kill 10,000 rabbits will get a unique class-appropriate item.

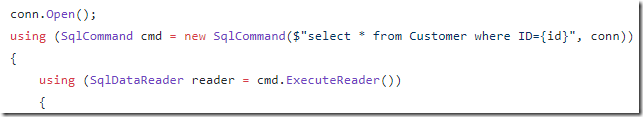

Conceptually, that means that we need to write the following code:
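
As a rough illustration, a naive version might look like this Python sketch. All names here (`Player`, `World`, `on_rabbit_killed`) are hypothetical, and the sketch assumes a single process that sees every kill, in order.

```python
from dataclasses import dataclass, field

RABBIT_GOAL = 10_000


@dataclass
class Player:
    name: str
    rabbits_killed: int = 0
    items: list = field(default_factory=list)


class World:
    """Hypothetical single-process game world that holds the world-first flag."""

    def __init__(self):
        self.rabbit_achievement_winner = None

    def on_rabbit_killed(self, player: Player) -> None:
        player.rabbits_killed += 1
        # Naive check: only correct if one process observes all kills in order.
        if (player.rabbits_killed >= RABBIT_GOAL
                and self.rabbit_achievement_winner is None):
            self.rabbit_achievement_winner = player.name
            player.items.append("unique class-appropriate item")
```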

The code is simple, easy and trivial. I wish we could end the post at this point, but as you can imagine, the situation is a bit more complex.

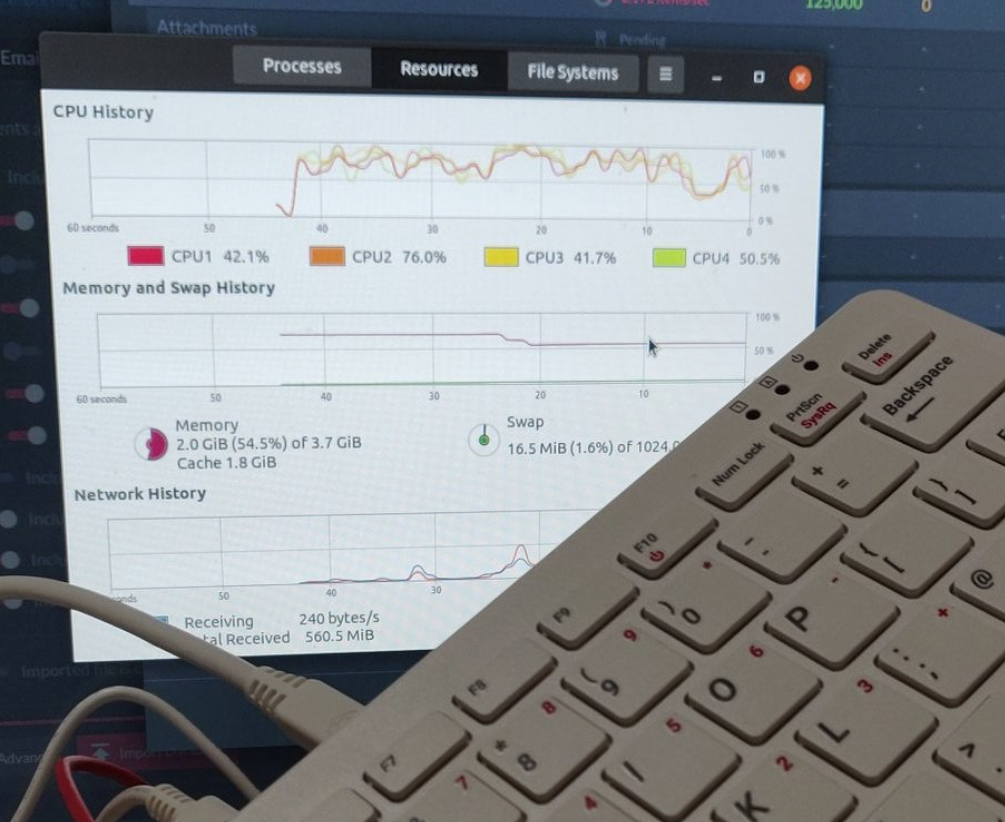

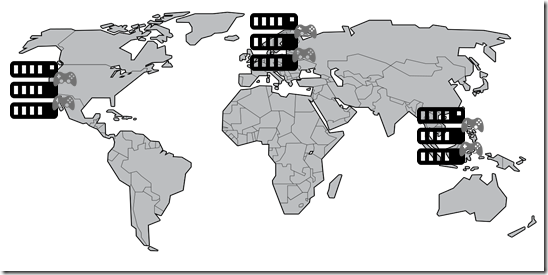

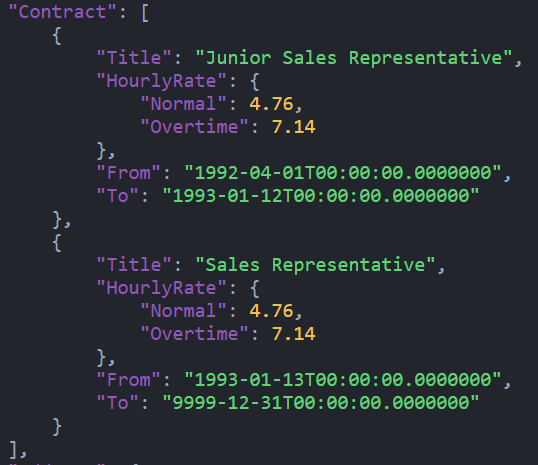

Consider the typical architecture of a game. The game world is composed of multiple servers, often located in different parts of the world. In this case, we can assume that we have three data centers on separate continents.

Notice that we have a world-first scenario here? That means that we need to synchronize the state across all of those data centers in some manner, even when there are network issues, failures, etc.

For that matter, when we are talking about an achievement for a character, we can at least be sure that there is a single chain of events that we can follow. But what happens if we were to apply this achievement to a guild? In this case, multiple players may be competing to complete this achievement. If we also allow them to run on different servers (and regions), the situation becomes quite complex.

Are there technical ways to resolve this? Of course there are.

At first glance, we can use distributed transactions to handle this. However, that introduces an utterly unworkable spanner in the works (see what I did there?). Games care a lot about performance and latency, so forcing a globally distributed transaction on every rabbit kill is not a viable solution; the performance costs are too stiff. For that matter, in a partial failure scenario, you still want to allow players to kill rabbits, even if you lost some servers on another continent. That leads to several additional scenarios that you need to cover:

- What happens if a player killed rabbits but we weren’t able to record that in the world-scope state? Do we also store that in a character-level or server-level state? What happens if we already awarded the achievement and then find out that there are delayed updates in the mix?

- What happens when two players kill their 10,000th rabbit at times T1 and T2 (where T2 > T1), but close enough together that the write for the second player is committed first?

  - Note that this can happen on the same server, in the same region or across different regions.

- What happens when two players kill their 10,000th rabbit at the same instant?

  - Note that this can happen on the same server, in the same region or across different regions.

  - Note that this can actually happen at different times, but clock divergence can make it look as if it happened at the same time.

- What happens when a player kills their 10,000th rabbit, but we had a network hiccup and couldn’t record the action in time?

In fact, there is a multitude of issues that we have to deal with if we accept the scenario as is. And the impact of the unstated requirements of a single achievement on the entire system is huge.

That is likely not the intended result. We can make some minimal changes to the system and get pretty much what we want at a much reduced cost. We start by giving up the implicit assumption that we have to award the achievement for the 10,000th rabbit within the same tick as the actual kill. If we give up this requirement, we have a far more relaxed environment to deal with.

We can say that we’ll process the achievements at the end of the next hour, for example. That gives us enough time to get updates from the rest of the system, let the dust settle and avoid millisecond-level decision making. In almost all businesses, there is no such thing as a race condition. The best example of that is “the check is in the mail”. The payment date for a check is not when you cashed it but when you posted it. My uncle used to go to the post office at 4:50 PM on a Friday to post checks. They would get the right time stamp, but would sit in the post office over the weekend before actually being delivered. That gave him 3 – 5 extra days before the check was cashed.
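
To make the delayed approach concrete, here is a minimal Python sketch of settling the world-first achievement after the fact. The `KillEvent` record and the `settle_world_first` function are hypothetical names. The sketch assumes each region reports the 10,000th kill with its own timestamp and that the job runs well after the cutoff, so late updates have had time to arrive.

```python
from dataclasses import dataclass
from typing import Iterable, Optional

RABBIT_GOAL = 10_000


@dataclass(frozen=True)
class KillEvent:
    player: str
    region: str
    kill_number: int   # the player's running rabbit count after this kill
    timestamp: float   # seconds, as reported by the region's own clock


def settle_world_first(events: Iterable[KillEvent],
                       cutoff: float) -> Optional[str]:
    """Pick the winner among 10,000th kills that happened before `cutoff`."""
    candidates = [e for e in events
                  if e.kill_number == RABBIT_GOAL and e.timestamp < cutoff]
    if not candidates:
        return None
    # Identical timestamps are broken by player name, so the result is
    # deterministic. This is an arbitrary rule chosen for the sketch.
    winner = min(candidates, key=lambda e: (e.timestamp, e.player))
    return winner.player
```

Because nothing is decided at kill time, out-of-order commits and network hiccups only matter if they last longer than the settling window.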

In the case of our game, giving ourselves time for things to settle makes sense. It also makes sense from a marketing and community perspective. Worldwide announcements don’t just happen; they can be scheduled for a particular time frame to maximize impact. Delaying the announcement of such an achievement makes it a lot easier from a technical perspective and also gives us more business-level benefits to work with.

In many situations, we jump to assumptions about the kind of requirements that we have to meet, but usually there is a lot more flexibility. Discovering where you can get some slack can be of tremendous value.

Possible reactions from the business in this scenario include:

- We don’t actually care if this is exact. We want to give the achievement when this happens, but it is okay if there is a little fudge factor and the “wrong” person wins when the time difference is tiny.

- It is actually fine if two players get the achievement when it happens at the “same” time.

- Oh, I didn’t realize that this is so hard. Can we make it a server-wide achievement, then? Would that be easier?

Any of those responses will translate to a great simplification of what we have to deliver. In the first case, we can track the state of bunny killing without the use of distributed transactions and only apply a distributed transaction when we get to the 10,000th rabbit. That is going to be a rare event, so it is fine to pay a little extra there.
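
A minimal Python sketch of that first case follows. `GlobalAchievementRegistry` is a hypothetical stand-in for whatever globally coordinated store would run the distributed transaction; here it is just an in-memory object behind a lock. `RegionServer` is also hypothetical and assumes a player's kills are counted on a single server.

```python
import threading
from typing import Dict, Optional

RABBIT_GOAL = 10_000


class GlobalAchievementRegistry:
    """Stand-in for the expensive, globally coordinated store (assumption)."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._winner: Optional[str] = None
        self.claim_attempts = 0  # how often we paid the "global" cost

    def try_claim(self, player: str) -> bool:
        with self._lock:
            self.claim_attempts += 1
            if self._winner is None:
                self._winner = player
            return self._winner == player


class RegionServer:
    def __init__(self, registry: GlobalAchievementRegistry) -> None:
        self.registry = registry
        self.kills: Dict[str, int] = {}

    def on_rabbit_killed(self, player: str) -> bool:
        # Cheap, purely local bookkeeping on every kill.
        count = self.kills.get(player, 0) + 1
        self.kills[player] = count
        # Only the 10,000th kill touches the global registry.
        if count == RABBIT_GOAL:
            return self.registry.try_claim(player)
        return False
```

The design choice is that the common path never leaves the local server, and the costly coordination runs at most once per player.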

For that matter, a good question to ask is how important the operation is. What happens when we have a failure in the distributed transaction when we record the 10,000th rabbit? I’m not talking about someone else getting there first, I’m talking about a network hiccup or a faulty wire that causes the operation to fail. Do we retry? Do we output an error (and if so, to where?) or just ignore this? Do we need to have a secondary mechanism for checking for errors in the process?

The answer is that it depends. You can get into an effectively infinite loop of trying to solve ever more unlikely scenarios. The question is what the impact on the system at large is, and whether it is worth the cost.

For a game achievement, the answer is probably no (but then again, I’m not a gamer). People usually draw the line at money as the place where they’ll do their utmost to avoid issues. This is a strange place to draw it, because money specifically has so many ways to run compensating actions as part of the normal workflow that you shouldn’t bend yourself into a pretzel to avoid issues.

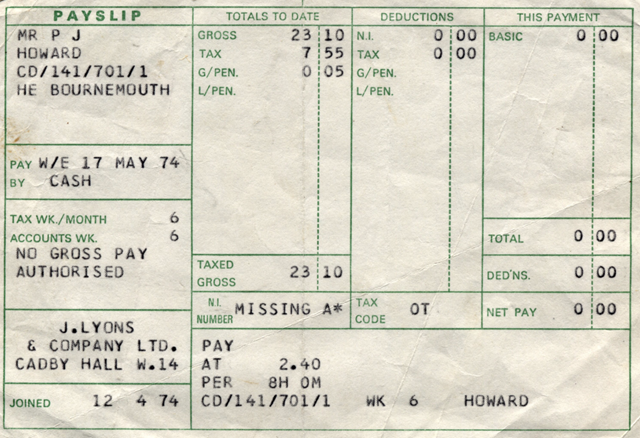

Let’s say that we are offering three unique mounts in our game, selling them for $9.99. We obviously have to deal with race conditions here. There are only three mounts, but there are more users who want them and are willing to pay for the privilege. We are dealing with money here, so we can assume that we want to be careful about that. The question is, how careful?

We have three mounts, but more users. The payment process itself takes at least a few seconds, so how do you deal with it?

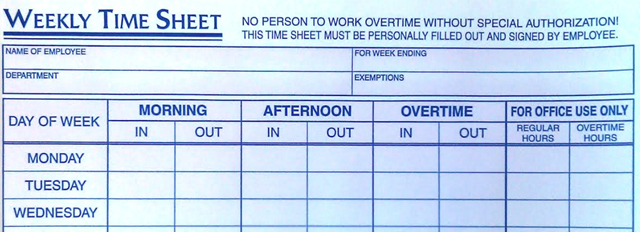

- Throw the purchase attempts into a queue.

- Pull the first three offers from the queue and attempt to charge them.

- If charging failed, we mark the purchase attempt as errored and move on to the next item in the queue.

- Once we successfully processed three purchases, we mark all the other purchase attempts as failed.

Simple, right?
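
The steps above can be sketched in Python roughly as follows. `PurchaseAttempt`, `sell_mounts` and the `charge` callback are hypothetical names, and the sketch charges one attempt at a time rather than three at once, which keeps the logic easy to follow.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Deque, List


@dataclass
class PurchaseAttempt:
    user: str
    status: str = "pending"  # pending -> sold | errored | failed


def sell_mounts(queue: Deque[PurchaseAttempt],
                charge: Callable[[str], bool],
                stock: int = 3) -> List[str]:
    """Charge queued attempts in order until `stock` mounts are sold."""
    owners: List[str] = []
    while queue and len(owners) < stock:
        attempt = queue.popleft()
        if charge(attempt.user):
            attempt.status = "sold"
            owners.append(attempt.user)
        else:
            # Charging failed: mark it and move on to the next in line.
            attempt.status = "errored"
    # Everyone still waiting once the stock is gone has lost out.
    for attempt in queue:
        attempt.status = "failed"
    return owners
```

Note that `charge` returning `False` here covers both a real decline and a timeout, which is exactly where the trouble starts.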

What happens when a charge takes longer than expected and the request times out, but the charge actually went through? This can happen if you have SMS authentication in the process: the card company sends a message to the card holder, who has to send an SMS back to approve the transaction. That can take long enough that the transaction times out. The user did authorize the transaction and funds were transferred, but you already sold the unique mount to someone else, with worse credit card security features.

What happens then? You can refund the money, provide another unique mount, etc.

The key here is that even for something that you may consider critical, the number of failure modes is too high. Trying to handle all of them and ensure a consistent world is usually too expensive. It is far better to have the hooks in place to handle failures and apply compensations. Failures are far rarer and much easier to deal with in isolation.
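
As one possible shape for such a hook, here is a small Python sketch that handles a charge confirmation arriving after the sale has already closed. The function name, the `refund` callback and the `review_log` list are all hypothetical; a real payment provider would have its own API for issuing refunds.

```python
from typing import Callable, List, Set


def handle_late_charge(user: str,
                       owners: Set[str],
                       refund: Callable[[str], None],
                       review_log: List[str]) -> str:
    """Called when a charge is confirmed after the request timed out."""
    if user in owners:
        return "already-owner"   # the sale went through, nothing to undo
    refund(user)                 # compensating action: give the money back
    review_log.append(user)      # leave a trail so a person can follow up
    return "refunded"
```

The handler deals with one rare case in isolation, instead of forcing the whole purchase flow to be transactional.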

When you start seeing that something happens on a frequent basis, that is when you want to figure out a way to automate that failure mode.
