The development workflow refers to how a developer decides what to do next, how tasks are organized, assigned and worked on.

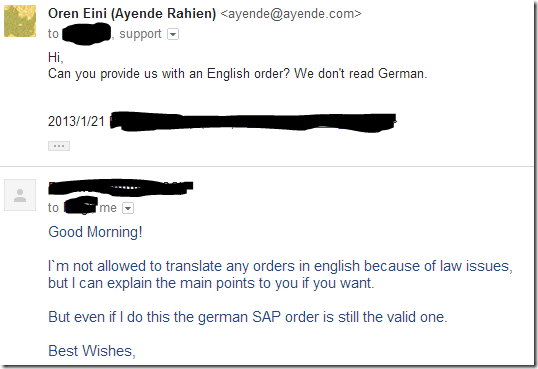

Typically, we dedicate a lot of the Israeli’s team time to doing ongoing support and maintenance tasks. So a lot of the work are things that show up on the mailing lists. We usually triage them to one of four levels:

- Interesting stuff that is outside of core competencies, or stuff that is nice to have that we don’t have resources for. We would usually handle that by requesting a pull request, or creating a low priority issue.

- Feature requests / ideas – usually go to the issuer tracker and wait there until assigned / there is time to do them.

- Bugs in our products – depending on severity, usually they are fixed on the spot, sometimes they are low priority and get to the issue tracker.

- Priority Bugs – usually get to the top of the list over anything and everything else.

It is obviously a bit more complex, because if we are working on a particular area already, we usually also take the time to cover the easy-to-do stuff from the issue tracker.

Important things:

- We generally don’t pay attention to releases, unless we have one pending for a product (for example, upcoming stable release for RavenDB).

- We don’t usually try to prioritize issues. Most of them are just there, and get picked up by whoever gets them first.

We following slightly different workflows for Uber Prof & RavenDB. With Uber Prof, every single push generate a client visible build, and we have auto update to make sure that most people run on the very latest.

With RavenDB, we have the unstable builds, which is what every single push translates to, and the stable builds, which have a much more involved release process.

We tend to emphasize getting things out the door over the Thirteen Steps to Properly Release Software.

An important rule of thumb, if you are still the office by 7 PM, you have better showed up at 11 or so, just because zombies are cool nowadays doesn’t mean you have to be one. I am personally exempted from the rule, though.

Next, I’ll discuss pairing, testing and decision making.