For a database engine, controlling the amount of work that is being executed is a very important factor for the stability of the system. If we can’t control the work that is being done, it is quite easy to get to the point where we are overwhelmed.

Consider the case of a(n almost) pure computational task, which is completely CPU bound. In some cases, those tasks can easily be parallelized. Here is one such scenario:

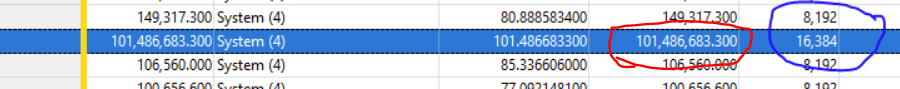

This example seems pretty obvious, right? This is completely CPU bound (sorting), and leaving aside that the sort itself can be done in parallel, we have many arrays that we need to sort. As you can imagine, I would much rather have this task done as quickly as possible.

One way to make this happen is to parallelize the work. Here is a pretty simple way to do that:

Parallel.ForEach(arrayOfArrays, array => Array.Sort(array));

A single one liner and we are going to see much better performance from the system, right?

Yep, this particular scenario will run faster and, depending on the sizes, it may be much faster. However, that single one liner is a nasty surprise for the stability of the system as a whole. What’s the issue?

Under the covers, this is going to use the .NET thread pool to do the work. In other words, this is going to be added to the global workload on the system. What else is using the same thread pool? Well, pretty much everything. For example, processing requests in Kestrel is done in the thread pool, all the async machinery uses the thread pool under the covers as well as pretty much everything else.

What is the impact of adding heavily CPU bound work to the thread pool, one may ask? Well, the thread pool will start executing these tasks on its threads. This is heavy CPU work, so it will likely run for a while, displacing other work. If we consider a single instance of this code, there is going to be a limit on the amount of concurrent work that is placed in the thread pool. However, if we consider what happens when we run the code above in parallel… we are going to be placing a lot of work on the thread pool. That is going to effectively starve the rest of the system. The thread pool will react by spawning more threads, but this is a slow process, and it is easy to get into a situation where all available threads are busy, leaving nothing for the rest of the application to run.

From the outside, it looks like a 100% CPU situation, with the system being entirely frozen. That isn’t actually what is going on. We are simply holding up all the threads and can’t prioritize the work between request handling (important) and speeding up background work (less important). The other side of the problem is that you may be running the operation on an otherwise idle system, where the non parallel code will utilize a single thread out of the many that are available.

In the context of RavenDB, we are talking here about indexing work. It turns out that there is a lot of work here that is purely computational: analyzing and breaking apart text for full text search, sorting terms for more efficient access patterns, etc. The same problem as above remains: how can we balance the indexing speed and the overall workload on the system?

Basically, we have three scenarios that we need to consider:

- Busy processing requests – background work should be done in whatever free time the system has (avoiding starvation), with as little impact as possible.

- Busy working on many background tasks – concurrent background tasks should not compete with one another and step on each other’s toes.

- Busy working on a single large background task – which should utilize as much of the available computing resources that we have.

For the second and third scenarios, we need to take into account that the absence of request processing work right now doesn’t matter if there is incoming work. In that case, the system should prioritize the request processing over background work.

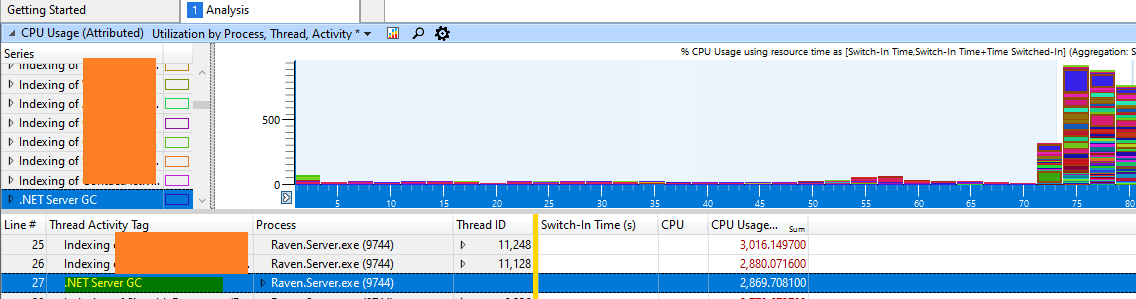

Another important factor that I haven’t mentioned is that it would be really good for us to be able to tell what work is taking the CPU time. If we are running a set of tasks on multiple threads, it would be great to be able to see what tasks they are running in a debugger / profiler.

This sounds very much like a class in operating systems. In fact, scheduling work is a core task of an operating system. The question we have here is how to build a system that meets all the above requirements, given that we can’t actually schedule CPU work directly.

We cannot use the default thread pool, because there are too many existing users there that can interfere with what we want. For that matter, we don’t actually want to have a dynamic thread pool. We want to maximize the amount of work we do for computationally heavy workloads. Instead, for the entire process, we will define a set of threads to handle work offloading, like this:
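A minimal sketch of such a setup follows. The `OffloadWorkers` class, its `Start` method and the thread names are illustrative assumptions, not RavenDB's actual code. The worker body is passed in as a delegate so the sketch stays independent of how the work is queued.

```csharp
using System;
using System.Threading;

// Hypothetical sketch: a fixed set of dedicated threads, one per CPU core,
// all running at the lowest priority so anything else that is ready to run wins.
public static class OffloadWorkers
{
    public static Thread[] Start(Action workerLoop)
    {
        var threads = new Thread[Environment.ProcessorCount];
        for (int i = 0; i < threads.Length; i++)
        {
            threads[i] = new Thread(() => workerLoop())
            {
                IsBackground = true,              // do not keep the process alive on shutdown
                Priority = ThreadPriority.Lowest, // yield to request and indexing threads
                Name = "Offload Worker #" + i     // shows up in a debugger / profiler
            };
            threads[i].Start();
        }
        return threads;
    }
}
```

Naming the threads is what makes it possible to tell, in a debugger or profiler, which threads are doing offloaded work.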

This creates a set of threads, one for each CPU core on the machine. It is important to note that these threads are running with the lowest priority. If there is anything else that is ready to run, it will get priority over them. In order to do some background work, such as indexing, we’ll use the following mechanism:
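A minimal sketch of that mechanism follows, assuming a hypothetical `IndexingThread.Start` helper (the name and signature are illustrative only). Each index gets its own dedicated thread, outside the thread pool, at below normal priority.

```csharp
using System;
using System.Threading;

// Hypothetical sketch: run one index's work loop on its own named thread.
public static class IndexingThread
{
    public static Thread Start(string indexName, Action runIndexing)
    {
        var thread = new Thread(() => runIndexing())
        {
            IsBackground = true,
            // Below request processing (Normal), above the offload workers (Lowest).
            Priority = ThreadPriority.BelowNormal,
            Name = "Indexing: " + indexName
        };
        thread.Start();
        return thread;
    }
}
```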

Because indexing is a background operation in RavenDB, we do that in a background thread and we set the priority to below normal. Request processing threads are running at normal priority, which means that we can rely on the operating system to run them first. At the same time, the starvation prevention that already exists in the OS scheduler will run our indexing code even if there is extreme load on the system.

So far, so good, but what about those offload workers? We need a way to pass work from the indexing thread to the offload workers. This is done in the following manner:
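Here is a minimal sketch of the indexing side of that hand-off, using the sort an array example. The `SortOffload` class, the global queue capacity of 64 and the "ask for help every 16 items" interval are all assumptions made for illustration. Work items are modeled as plain `Action` delegates.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading;

// Hypothetical sketch of how an indexing thread publishes work and then helps itself.
public static class SortOffload
{
    // Process wide and bounded, so publishing cannot consume unbounded memory.
    public static readonly BlockingCollection<ConcurrentQueue<Action>> GlobalWorkQueue =
        new BlockingCollection<ConcurrentQueue<Action>>(boundedCapacity: 64);

    // Runs on the indexing thread.
    public static void SortAll(int[][] arrays)
    {
        var localQueue = new ConcurrentQueue<Action>();
        using (var remaining = new CountdownEvent(arrays.Length))
        {
            foreach (var array in arrays)
            {
                localQueue.Enqueue(() =>
                {
                    Array.Sort(array);
                    remaining.Signal(); // one fewer item outstanding
                });
            }

            // Ask for help; if the global queue is full, carry on alone.
            GlobalWorkQueue.TryAdd(localQueue);

            int processed = 0;
            while (localQueue.TryDequeue(out var workItem))
            {
                workItem();
                // Occasionally register again so more workers may join in.
                if (++processed % 16 == 0)
                    GlobalWorkQueue.TryAdd(localQueue);
            }

            // The local queue is empty, but workers may still be running
            // items they dequeued. Wait for those before using the results.
            remaining.Wait();
        }
    }
}
```

Counting outstanding items, rather than checking whether the queue is empty, is what lets the indexing thread know that work already taken by a worker has actually finished.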

Note that the _globalWorkQueue is process wide. For now, I’m using the simple sort an array example because it makes things easier. The real code would need a bit more complexity, but that is not important for explaining the concept. The global queue contains queues for each task that needs to be run.

The index itself will have a queue of work that it needs to complete. Whenever it needs to start doing the work, it will add that to its own queue and try to publish to the global queue. Note that the size of the global queue is limited, so we won’t use too much memory there.

Once we have published the work we want to do, the indexing thread will start working on the work itself, trying to solicit the aid of the other workers occasionally. Finally, once the indexing thread is done processing all the remaining work, we need to wait for any pending work that is currently being executed by the workers. That done, we can use the results.

The workers all run very similar code:
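A minimal sketch of such a worker body follows, assuming the global queue is a `BlockingCollection` whose elements are per-index queues of `Action` delegates. The `OffloadWorkerLoop` name and the cancellation-based shutdown are illustrative assumptions.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading;

// Hypothetical sketch of the loop each offload worker thread runs.
public static class OffloadWorkerLoop
{
    public static void Run(
        BlockingCollection<ConcurrentQueue<Action>> globalWorkQueue,
        CancellationToken token)
    {
        try
        {
            // Blocks while the global queue is empty; ends after CompleteAdding().
            foreach (var indexQueue in globalWorkQueue.GetConsumingEnumerable(token))
            {
                // Stay with this index until its queue is drained,
                // then go back to the global queue for another one.
                while (indexQueue.TryDequeue(out var workItem))
                    workItem();
            }
        }
        catch (OperationCanceledException)
        {
            // Shutdown was requested while waiting for work.
        }
    }
}
```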

In other words, they pull a queue of tasks from the global tasks queue and start working on that exclusively. Once they are done processing a single index queue to completion, the offload worker will try to pick another from the global queue, etc.

The whole code is small and fairly simple, but there is a lot of behavior hidden here. Let me try to explore all of that now.

The indexing background work pushes all the work items to its local queue, and it will register the queue itself in the global queue for the offloading threads to process. The indexing queue may be registered multiple times, so multiple offloading threads will take part in this. The indexing code, however, does not rely on that and will also process its own queue. The idea is that if there are offloading threads available, they will help, but we do not rely on them.

The offloading thread, for its part, will grab an indexing thread queue and start processing all the items from the queue until it is done. For sorting arbitrary arrays, it doesn’t matter much, but for other workloads, we’ll likely get much better locality in terms of task execution by issuing the same operation over a large set of data.

The thread priority here is also important, mind. If there is nothing else to do, the OS will schedule the offloading threads and give them work to do. If there are a lot of other things happening, they will not be scheduled often. This is fine, we are being assisted by the offloading threads, they aren’t mandatory.

Let’s consider the previous scenarios in light of this architecture and its impact.

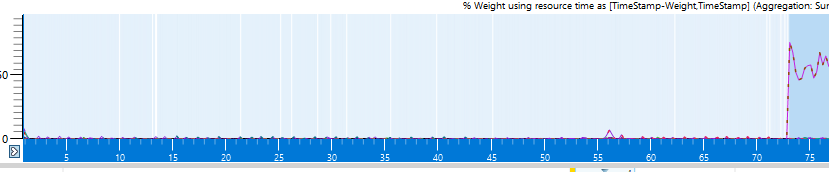

If there are a lot of requests, the OS is going to mostly schedule the request processing threads. The indexing threads will also run, but mostly when there is nothing else to do. The offload threads are going to get their chance, but mostly that will not have any major impact. That is fine, we want most of the processing power to go to request processing.

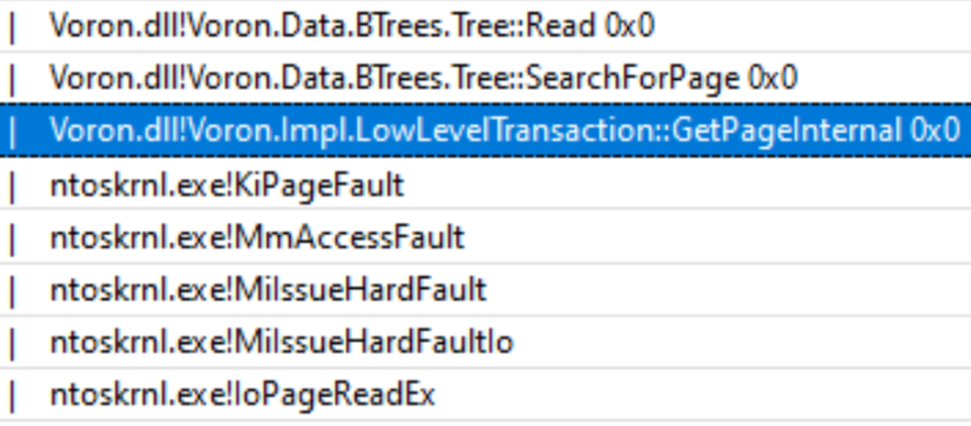

If there is a lot of work, on the other hand, the situation is different. Let’s say that there are 25 indexes running and there are 16 cores available on the machine. In this case, the indexes are going to compete with one another. The offloading threads are again not going to get much of a chance to run, because adding more threads in this context will not achieve anything. There is already competition between the indexing threads for the CPU resources. However, the offloading threads are going to be of some help. Indexing isn’t pure computation, there are enough cases where it needs to do I/O, in which case the CPU core is available. The offloading threads can then take advantage of the free cores (if there aren’t any other indexing threads that are ready to run instead).

It is in the case that there is just a single task running on a mostly idle system that this really shines. The index is going to submit all its work to the global queue, at which point the offloading threads will kick in and help it to complete the task much sooner than it would be able to otherwise.

There are other things that we need to take into account in this system:

- It is, by design, not fair. An index that is able to put its queue into the global queue first may have all the offloading threads busy working on its behalf. All the other indexes are on their own. That is fine, when that index completes all its work, the offloading threads will assist another index. We care about overall throughput, not fairness between indexes.

- There is an issue with this design under certain loads. An offloading thread may be busy doing work on behalf of an index. That index has completed all other work and is now waiting for the last item to be processed. However, the offloading thread has the lower priority, so it will rarely get to run. I don’t think we’ll see a lot of cost here, to be honest. It requires the system to be at full capacity (rare, and admins usually hate that, so they prevent it) for a long time to actually be a real issue. If needed, we can play with thread priorities dynamically, but I would rather avoid it.

This isn’t something that we have implemented, rather something that we are currently playing with. I would love to hear your feedback on this design.
