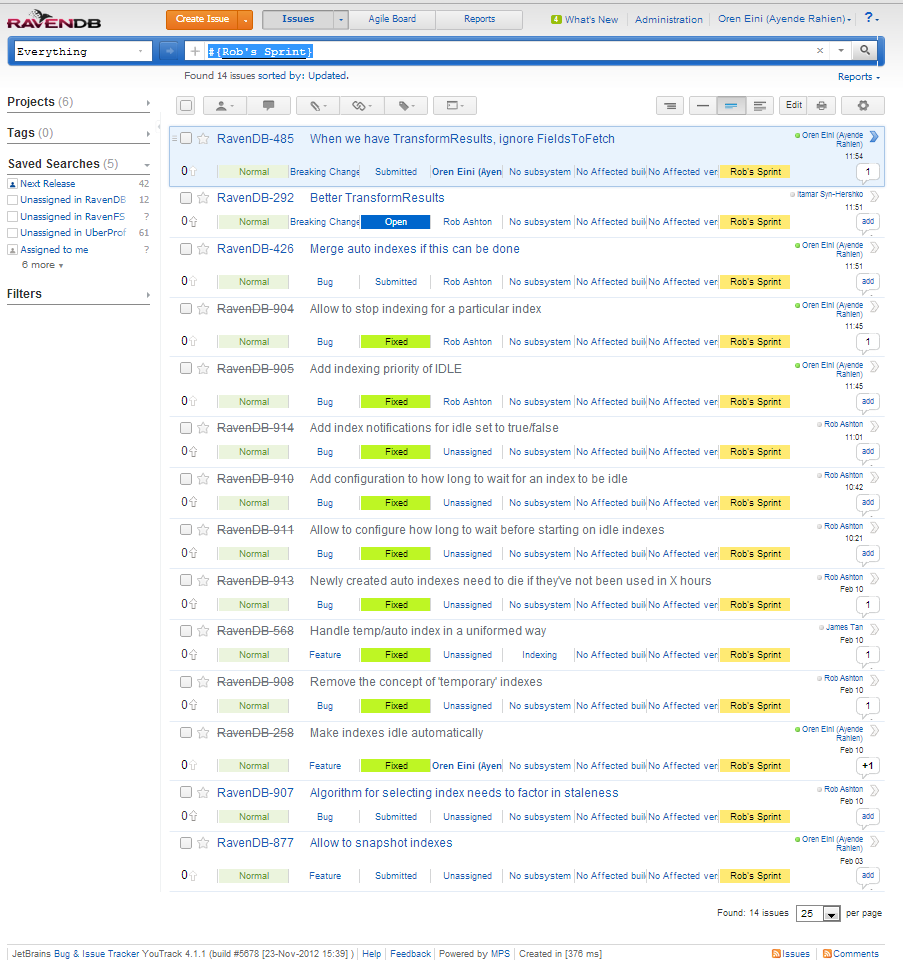

As part of my ongoing reviews efforts, I am going to review the BitShuva Radio application.

BitShuva Radio is a framework for building internet radio stations with intelligent social features like community rank, thumb-up/down songs, community song requests, and machine learning that responds to the user's likes and dislikes and plays more of the good stuff.

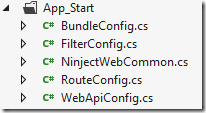

I just cloned the repository and opened it in VS, without reading anything beyond the first line. As usual, I am going to start from the top and move on down:

We already have some really good indications:

- There is just one project, not a gazillion of them.

- The folders seems to be pretty much the standard ASP.NET MVC ones, so that should be easy to work with.

Some bad indications:

- Data & Common folders are likely to be troublesome spots.

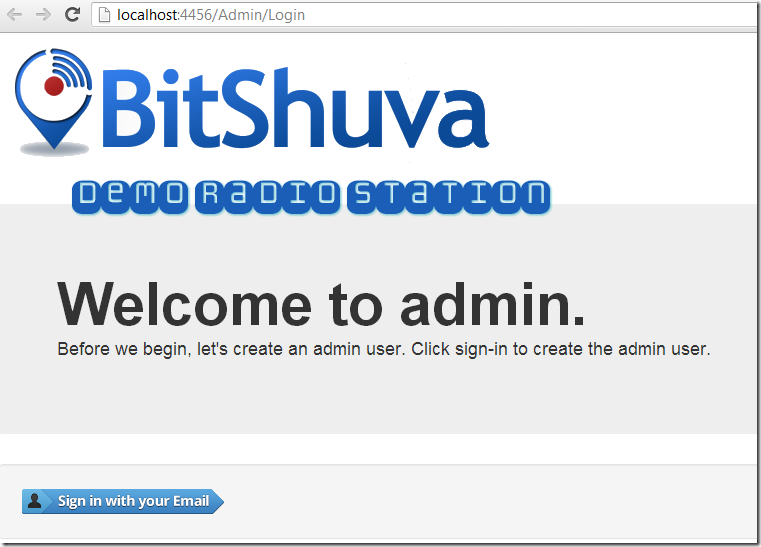

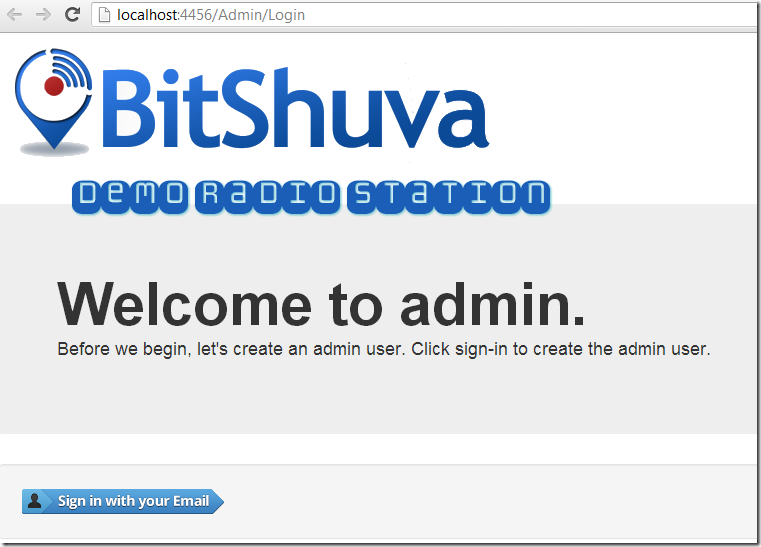

Hit Ctrl+F5, and I got this screen, which is a really good indication. There wasn’t a lot of setup required.

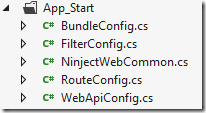

Okay, enough with the UI, I can’t really tell if this is good or bad anyway. Let us dive into the code. App_Start, here I come.

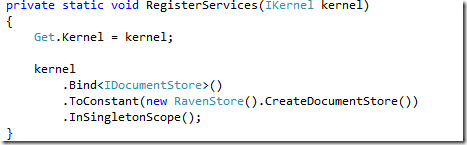

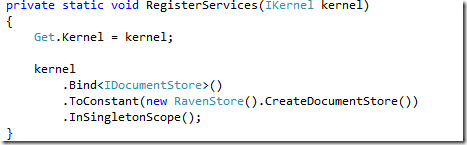

I get the feeling that WebAPI and Ninject are used here. I looked in the NinjectWebCommon file, and found:

Okay, I am biased, I’ll admit, but this is good.

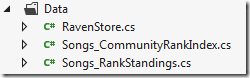

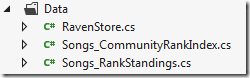

Other than the RavenDB stuff, it is pretty boring, standard and normal codebase. No comments so far. Let us see what is this RavenStore all about, which leads us to the Data directory:

So it looks like we have the RavenStore and a couple of indexes. And the code itself:

1: public class RavenStore

2: { 3: public IDocumentStore CreateDocumentStore()

4: { 5: var hasRavenConnectionString = ConfigurationManager.ConnectionStrings["RavenDB"] != null;

6: var store = default(IDocumentStore);

7: if (hasRavenConnectionString)

8: { 9: store = new DocumentStore { ConnectionStringName = "RavenDB" }; 10: }

11: else

12: { 13: store = new EmbeddableDocumentStore { DataDirectory = "~/App_Data/Raven" }; 14: }

15:

16: store.Initialize();

17: IndexCreation.CreateIndexes(typeof(RavenStore).Assembly, store);

18: return store;

19: }

20: }

I think that this code need to be improved, to start with, there is no need for this to be an instance. And there is no reason why you can’t use EmbeddableDocumentStore to use remote stuff.

I would probably write it like this, but yes, this is stretching things:

1: public static class RavenStore

2: { 3: public static IDocumentStore CreateDocumentStore()

4: { 5: var store = new EmbeddableDocumentStore

6: { 7: DataDirectory = "~/App_Data/Raven"

8: };

9:

10: if (ConfigurationManager.ConnectionStrings["RavenDB"] != null)

11: { 12: store.ConnectionStringName = "RavenDB";

13: }

14: store.Initialize();

15: IndexCreation.CreateIndexes(typeof(RavenStore).Assembly, store);

16: return store;

17: }

18: }

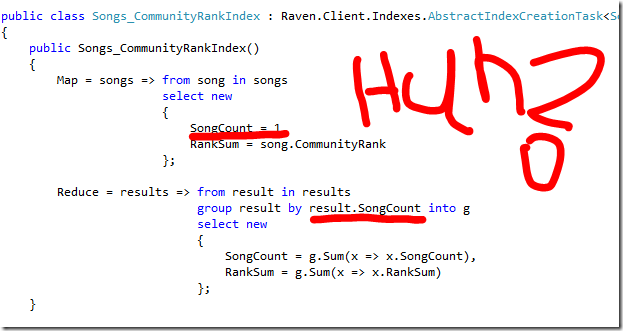

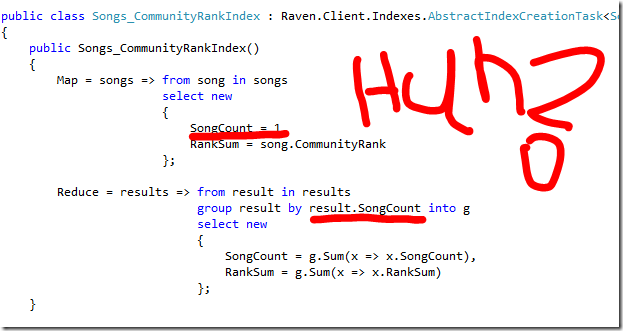

I intended to just glance at the indexes, but this one caught my eye:

This index effectively gives you random output. It will group by the count of documents, and since we reduce things multiple times, the output is going to be… strange.

I am not really sure what this is meant to do, but it is strange and probably not what the author intended.

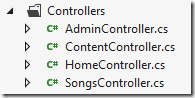

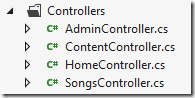

The Common directory contains nothing of interest beyond some util stuff. Moving on to the Controllers part of the application:

So this is a relatively small application, but an interesting one. We will start with what I expect o be a very simple part of the code .The HomeController:

1: public class HomeController : Controller

2: { 3: public ActionResult Index()

4: { 5: var userCookie = HttpContext.Request.Cookies["userId"];

6: if (userCookie == null)

7: { 8: var raven = Get.A<IDocumentStore>();

9: using (var session = raven.OpenSession())

10: { 11: var user = new User();

12: session.Store(user);

13: session.SaveChanges();

14:

15: HttpContext.Response.SetCookie(new HttpCookie("userId", user.Id)); 16: }

17: }

18:

19: // If we don't have any songs, redirect to admin.

20: using (var session = Get.A<IDocumentStore>().OpenSession())

21: { 22: if (!session.Query<Song>().Any())

23: { 24: return Redirect("/admin"); 25: }

26: }

27:

28: ViewBag.Title = "BitShuva Radio";

29: return View();

30: }

31: }

There are a number of things in here that I don’t like. First of all, let us look at the user creation part. You look at the cookies and create a user if it isn’t there, setting the cookie afterward.

This has the smell of something that you want to do in the infrastructure. I did a search for “userId” in the code and found the following in the SongsController:

1: private User GetOrCreateUser(IDocumentSession session)

2: { 3: var userCookie = HttpContext.Current.Request.Cookies["userId"];

4: var user = userCookie != null ? session.Load<User>(userCookie.Value) : CreateNewUser(session);

5: if (user == null)

6: { 7: user = CreateNewUser(session);

8: }

9:

10: return user;

11: }

12:

13: private static User CreateNewUser(IDocumentSession session)

14: { 15: var user = new User();

16: session.Store(user);

17:

18: HttpContext.Current.Response.SetCookie(new HttpCookie("userId", user.Id)); 19: return user;

20: }

That is code duplication with slightly different semantics, yeah!

Another issue with the HomeController.Index method is that we have direct IoC calls (Get.As<T>) and multiple sessions per request. I would much rather do this in the infrastructure, which would also give us a place for the GetOrCreateUser method to hang from.

SongsController is actually an Api Controller, so I assume that it is called from JS on the page. Most of the code there looks like this:

1: public Song GetSongForSongRequest(string songId)

2: { 3: using (var session = raven.OpenSession())

4: { 5: var user = GetOrCreateUser(session);

6: var songRequest = new SongRequest

7: { 8: DateTime = DateTime.UtcNow,

9: SongId = songId,

10: UserId = user.Id

11: };

12: session.Store(songRequest);

13: session.SaveChanges();

14: }

15:

16: return GetSongById(songId);

17: }

GetSongById will use its own session, and I think it would be better to have just one session per request, but that is about the sum of my comments.

One thing that did bug me was the song search:

1: public IEnumerable<Song> GetSongMatches(string searchText)

2: { 3: using (var session = raven.OpenSession())

4: { 5: return session

6: .Query<Song>()

7: .Where(s =>

8: s.Name.StartsWith(searchText) ||

9: s.Artist.StartsWith(searchText) ||

10: s.Album.StartsWith(searchText))

11: .Take(50)

12: .AsEnumerable()

13: .Select(s => s.ToDto());

14: }

15: }

RavenDB has a really good full text support. And we could be using that, instead. It would give you better results and be easier to work with, to boot.

Overall, this is a pretty neat little app.