Take a look at the code below. It actually completed as expected, and it was working beautifully. As I probably mentioned, the architecture here is really nice, and I think I was able to translate it into .NET code in a way that is both idiomatic and useful. It is 4:30 AM now, and it is well past my bedtime. But I just couldn't leave this alone.

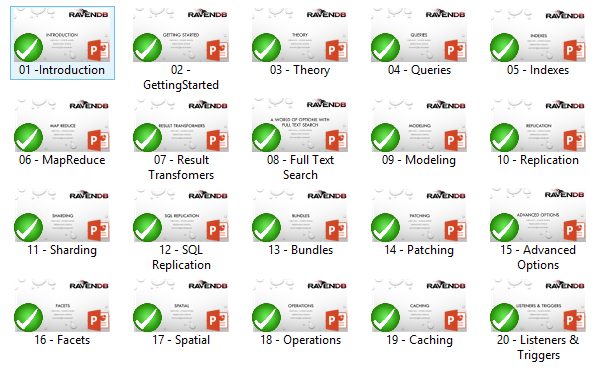

I am updating the RavenDB Course for 2.5. It is now a three-day affair that includes quite a lot of material. It was quite cramped at two days, so moving to three days will allow us to cover a lot more and to do more interesting things.

I’ll be giving the first three-day RavenDB Course in about two weeks in London. Currently this is what I have:

Can you think of additional topics that you’d like me to cover?

Applying the lessons from the leveldb codebase isn’t going to be a straightforward task. I already discussed why we can’t just use leveldb directly, so I won’t go into that.

I decided to see if I could implement a leveldb-inspired storage engine for RavenDB, and the first thing I wanted to try was building an SST. That seemed like an easy first step: SSTs are just sorted data in a file, and writing them requires very little. As mentioned, we can’t just do a line-by-line port of the code, as easy as that would be. The way leveldb manages memory is… not really suitable for what we can / should do in .NET.

- I want this to be an efficient .NET implementation.

- It is inspired, but not intended to be compatible with leveldb.

The leveldb API deals with byte arrays all over the place. This makes sense for a C++ codebase, but it is horrible for a .NET application, especially when we expect a lot of our values to be large enough to hit the LOH. That means fragmentation, and pain down the road. Instead, our API uses ArraySegment<byte> for keys, and Stream for values. Since keys are expected to be relatively small, I don’t foresee this being a problem. And the values are streams, so they are easily handled without introducing any cost from the API.
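Because keys are ArraySegment<byte> rather than strings, anything that keeps them sorted needs a byte-wise ordering that works directly on the segments. The following is a minimal sketch under that assumption; SliceComparer is a hypothetical name, not the actual RavenDB type. It uses memcmp-style ordering (when one key is a prefix of the other, the shorter key sorts first) and reads the underlying arrays in place, so comparing keys never copies their bytes.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical comparer for ArraySegment<byte> keys: byte-by-byte ordering,
// like memcmp, with the shorter key first when one is a prefix of the other.
public sealed class SliceComparer : IComparer<ArraySegment<byte>>
{
    public static readonly SliceComparer Instance = new SliceComparer();

    public int Compare(ArraySegment<byte> x, ArraySegment<byte> y)
    {
        int len = Math.Min(x.Count, y.Count);
        for (int i = 0; i < len; i++)
        {
            // Read straight from the underlying arrays; no key bytes are copied.
            int diff = x.Array[x.Offset + i] - y.Array[y.Offset + i];
            if (diff != 0)
                return diff;
        }
        // All shared bytes are equal: the shorter key sorts first.
        return x.Count - y.Count;
    }
}
```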

Another thing that leveldb does quite a lot is batch things in memory for a while. It may be the current block, it may be the current data block, it may be the index block, but it does so quite often. That works nicely for C++ apps with expected small values, but not so much for our expected use case. So I want to avoid holding items in managed memory as much as possible. Here is the API for creating an SST:

```csharp
var options = new StorageOptions();
using (var file = File.Create("test.sst"))
// Path.GetTempFileName() already creates an empty file, so open it with
// FileMode.Create; FileMode.CreateNew would throw because the file exists.
using (var temp = new FileStream(Path.GetTempFileName(), FileMode.Create, FileAccess.ReadWrite,
    FileShare.None, 4096, FileOptions.DeleteOnClose | FileOptions.SequentialScan))
{
    var tblBuilder = new TableBuilder(options, file, temp);

    for (int i = 0; i < 100; i++)
    {
        var key = "tests/" + i.ToString("0000");
        tblBuilder.Add(key, new MemoryStream(Encoding.UTF8.GetBytes(key)));
    }

    tblBuilder.Finish();
}
```

As you can see, we use two streams here. The first is the one we actually write the table to; the second is a temporary stream that we use to write the index block while we are working, and then we merge it back into the actual SST file. Note that after building the table, the temp file can be deleted (indeed, we marked it as DeleteOnClose, so that happens automatically).
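The two-stream approach can be sketched as follows. This is a heavily simplified, hypothetical MiniTableBuilder, not the actual RavenDB TableBuilder: it uses string keys for brevity, an invented file layout (data entries, then the index, then a 12-byte footer holding the index offset and the entry count), and assumes seekable value streams. It shows the two properties described above: values are copied straight from their Stream with a small fixed buffer, and index entries live in the temp stream, not in managed memory, until Finish() appends them to the table.

```csharp
using System;
using System.IO;
using System.Text;

// Hypothetical, simplified SST builder; NOT the actual RavenDB TableBuilder.
// Layout: [data entries][index entries][footer: index offset (8 bytes), entry count (4 bytes)]
public sealed class MiniTableBuilder
{
    private readonly Stream _output;     // the final .sst file
    private readonly Stream _indexTemp;  // scratch stream for index entries
    private readonly BinaryWriter _data;
    private readonly BinaryWriter _index;
    private string _lastKey;
    private int _count;

    public MiniTableBuilder(Stream output, Stream indexTemp)
    {
        _output = output;
        _indexTemp = indexTemp;
        _data = new BinaryWriter(output, Encoding.UTF8, leaveOpen: true);
        _index = new BinaryWriter(indexTemp, Encoding.UTF8, leaveOpen: true);
    }

    public void Add(string key, Stream value)
    {
        // An SST is sorted data, so keys must arrive in ascending (ordinal) order.
        if (_lastKey != null && string.CompareOrdinal(_lastKey, key) >= 0)
            throw new ArgumentException("Keys must be added in ascending order", nameof(key));

        long entryOffset = _output.Position;
        _data.Write(key);            // length-prefixed UTF-8 key
        _data.Write(value.Length);   // assumes a seekable value stream
        _data.Flush();
        value.CopyTo(_output, 4096); // streams the value; no value-sized byte[] is allocated

        // The index entry (key + offset of its data entry) goes to the temp stream.
        _index.Write(key);
        _index.Write(entryOffset);

        _lastKey = key;
        _count++;
    }

    public void Finish()
    {
        _index.Flush();
        long indexOffset = _output.Position;
        _indexTemp.Position = 0;
        _indexTemp.CopyTo(_output, 4096); // merge the index back into the table
        _data.Write(indexOffset);         // footer: where the index starts
        _data.Write(_count);              // footer: number of entries
        _data.Flush();
    }
}
```

In practice you would hand it the same pair of streams as the TableBuilder example: the .sst FileStream and a DeleteOnClose temp FileStream.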

That part was easy, all it required was simple I/O for generating the file. The more interesting part is going to be reading the values out.

Well, I am very happy at the conclusion of this blog post series. Besides being one of the longest that I have done, it actually stretched my ability to count using Roman numerals.

In summary, I am quite happy that I spent the time reading all of this code. The LevelDB codebase is really simple, once you grok what it actually does. There is nothing there that would totally stun a person. What there is, however, is a lot of accumulated experience in building these sorts of things.

You see this all over the place: in the format of the SST, in the way compaction works, in the ability to add filters, in write merging, etc. The leveldb codebase is a really good codebase to read, and I am very happy to have done so, especially since C++ is way out of my comfort zone. It was also interesting to read what I believe is idiomatic C++ code.

Another observation about leveldb is that it is a hard-core C++ product. You can’t really take it and use the same approach in a managed environment. In particular, efforts to port leveldb to Java (https://github.com/dain/leveldb/) are going to run into hard issues, such as managing memory. Java, like .NET, has issues with allocating large byte arrays, and even from the brief look I took, using the leveldb codebase as-is on Java would likely put a lot of pressure there.

Initially, I wanted to use leveldb as a storage engine for RavenDB. Since I couldn’t get it compiling & working on Windows (and yes, that is a hard requirement. And yes, it has to compile on my machine to count), I thought about just porting it. That isn’t going to be possible, at least not in the trivial sense. Too much work is required to make it work properly.

Yes, I have an idea. No, you don’t get to hear it at this time.


I like reading interesting code bases. And in this case, I have been reading through the leveldb codebase for a while. Most of the time, I am concentrating on grokking how other people are doing things. And the knowledge that I glean from those is only relevant a while later.

In this case, I was able to apply leveldb’s knowledge in very short order. We got a complaint about the speed of RavenDB under high transactional writes. The customer complained that they were seeing about 100 – 200 writes/sec with multi-threaded inserts, using a separate session per document. This simulates the behavior of a web application that does a write per request pretty well.

The problem was that we basically had many write requests all competing for the disk. Since every transaction needs to call fsync, we were limited by the number of fsyncs that we could issue on the physical medium (more or less; it is a bit more complex than that, but it is a good enough approximation).

There really isn’t much that I can do about it when the transactions are sequential. But there might be when we have parallel transactions. Instead of making them wait for one another, I took a leaf from the leveldb codebase and decided to merge them. I rewrote the code to use the following approach:

```csharp
pendingBatches.Enqueue(pending);
while (currentTask.Completed() == false && pendingBatches.Peek() != pending)
{
    batchCompletedEvent.Wait();
}
if (currentTask.Completed())
    return pending.Result;

lock (putSerialLock)
{
    try
    {
        batchCompletedEvent.Reset();
        var sp = Stopwatch.StartNew();
        TransactionalStorage.Batch(actions =>
        {
            int totalCommands = 0;
            PendingBatch currentBatch;
            while (totalCommands < 1024 && // let us not overload the transaction buffer size too much
                   pendingBatches.TryDequeue(out currentBatch))
            {
                batches++;
                if (ProcessBatch(currentBatch) == false)
                    // we only go on committing transactions as long as we are successful; if there is an error,
                    // we abort and do the rest in another cycle
                    break;
                actions.General.PulseTransaction();
                totalCommands += currentBatch.Commands.Count;
            }
        });
    }
    finally
    {
        batchCompletedEvent.Set();
    }
}
```

As you can see, this code is very similar to the leveldb version. We queue the transaction to be executed and then check whether we are the first in the queue. If so, we execute that transaction and continue executing all the available transactions.

The key here is that because we merge those transactions, we can benefit from only calling fsync once, at the end of the global operation.

This code is nice because it allows us to take advantage of load on our system: the more concurrent writes there are, the more efficiently we can batch them. But at the same time, if there isn’t any load, we don’t pay anything for it.

Note that I limited the amount of work that can be done in merged transactions, because we don’t want the first transaction, the one that is actually doing the work for all of the others, to wait for too long. This is a really pretty way of doing this, especially since it doesn’t even require a background thread, which is how I usually solved this issue.
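Stripped of the RavenDB specifics, the merging pattern can be sketched as a small, self-contained group-commit writer. This is an illustrative model, not the RavenDB code: GroupCommitWriter, Write, maxBatch and the Fsyncs counter are hypothetical names, appending to an in-memory Log stands in for the real storage work, and the batch limit is counted in writes rather than in commands. As in leveldb, the writer at the head of the queue becomes the leader and stays queued while it works, so no other thread can become leader; it commits everything queued behind it (up to maxBatch) with a single simulated fsync, then wakes both the followers it committed and the next leader.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

// Hypothetical group-commit writer modeling the leveldb-style transaction merging.
public sealed class GroupCommitWriter
{
    private sealed class Pending
    {
        public readonly string Command;
        public bool Done;
        public Pending(string command) { Command = command; }
    }

    private readonly Queue<Pending> _queue = new Queue<Pending>();
    private readonly object _lock = new object();
    private readonly int _maxBatch;

    public readonly List<string> Log = new List<string>(); // committed commands, in commit order
    public int Fsyncs { get; private set; }                  // simulated fsync calls

    public GroupCommitWriter(int maxBatch) { _maxBatch = maxBatch; }

    public void Write(string command)
    {
        var pending = new Pending(command);
        List<Pending> batch;
        lock (_lock)
        {
            _queue.Enqueue(pending);
            // Wait until a leader has committed our write, or we reach the head of the queue.
            while (!pending.Done && _queue.Peek() != pending)
                Monitor.Wait(_lock);
            if (pending.Done)
                return;

            // We are the leader: gather up to _maxBatch writes from the head of the queue.
            // They stay queued while we work, so nobody else can become leader.
            batch = new List<Pending>();
            foreach (var p in _queue)
            {
                if (batch.Count == _maxBatch) break;
                batch.Add(p);
            }
        }

        // The slow I/O happens outside the lock, so new writers can keep queueing.
        foreach (var p in batch)
            Log.Add(p.Command);
        Fsyncs++; // one fsync for the whole merged batch

        lock (_lock)
        {
            foreach (var p in batch)
            {
                _queue.Dequeue();
                p.Done = true;
            }
            Monitor.PulseAll(_lock); // wake the committed followers and the next leader
        }
    }
}
```

With a single thread, every write pays for its own fsync; with many concurrent writers, the number of fsyncs can drop well below the number of writes, which is where the throughput gain comes from.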

Oh, and the results?

On my machine, without this change, we get about 400 writes / second. Afterward, with 25 threads, we get over 1,100 writes / second.

We will cover:

- New features for JS Scripting

- Replication topologies

- How to handle maintenance tasks using RavenDB

- Sneak peek at reporting queries

You can register here: https://www2.gotomeeting.com/register/118743562

For some reason, there are a lot of real estate / rental people using RavenDB. I can tell you that I did not see that coming. However, it does bring up some interesting challenges.

In particular, at one client, we needed to search for a lease. Searching for a lease can be done on one of many interesting properties: for example, the unit number, the internal code, or the leaser’s name.

And here we got an interesting bug report.

Jane Smith leased an apartment from us in Dec 2012. In Feb 2013, she got married and changed her name to Jane Smith-Smyth. We need to allow searches on both names to find the appropriate lease.

Now, remember, you can’t go and change the lease document. That is a legal document that is frozen. Any change to that would invalidate it. (To be rather more accurate, you can modify the document, but there are certain fields that are frozen after the lease is signed.)

Luckily, this was really easy to do, using RavenDB’s referenced document feature:

```csharp
from lease in docs.Leases
select new
{
    Leasers = lease.Leasers.Select(x => x.Name)
        .Union(lease.Leasers.Select(x => LoadDocument(x.Id).Name))
        .Distinct()
}
```

And now we can support changes in the names, while maintaining the sanctity of the frozen fields.

Sadly, this is still not enough: we actually need to keep track of all of the names that the leaser had during the leasing period.

Jane Smith-Smyth decided that it is a stupid name and changed her name to Jane Smite.

Now we need to support multiple names per leaser, while at the same time we have the current name for the lease. It looks like this:

```csharp
from lease in docs.Leases
select new
{
    Leasers = lease.Leasers.Select(x => x.Name)
        .Union(lease.Leasers.SelectMany(x => LoadDocument(x.Id).Names))
        .Distinct()
}
```

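The required change is in the Union clause: instead of selecting the current Name from the loaded leaser document, we use SelectMany to pull in every entry of its Names collection.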
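To make the index’s behavior concrete, here is a hypothetical in-memory model of what it computes for a single lease. The LeaserRef and Person classes, and the dictionary standing in for LoadDocument, are assumptions for illustration only; the LINQ itself follows the second index. Note that in LINQ to Objects, Union already removes duplicates, so the trailing Distinct() is redundant there, though harmless.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical shapes: the leaser entry frozen inside the lease, and the
// person document that accumulates every name the leaser has had.
public sealed class LeaserRef { public string Id; public string Name; }
public sealed class Person { public string Id; public List<string> Names = new List<string>(); }

public static class LeaseIndexSketch
{
    // Mirrors the index: frozen lease names united with all known names.
    // The people dictionary plays the role of LoadDocument.
    public static List<string> SearchableNames(
        IEnumerable<LeaserRef> leasers, IDictionary<string, Person> people)
    {
        return leasers.Select(x => x.Name)
            .Union(leasers.SelectMany(x => people[x.Id].Names))
            .Distinct()
            .ToList();
    }
}
```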


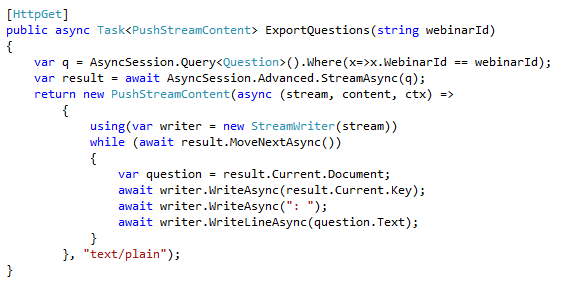

In RavenDB 2.5, we provide an easy way to grab all the data from the database, regardless of the usual paging limit.

I had the chance to experiment with that recently during a course. Here is the code we wrote. For fun, we made it use the async API:

I am pretty happy about that. We have data that streams all the way from RavenDB to the end client.

Facebook certainly changed the way we do things. That isn’t always for the best, as can be seen from the far too much time humanity as a whole spends watching cat videos.

One of the ways that Facebook impacted our professional lives is that a lot of people look at it as a blueprint for how they want their application to be. I am not going to touch on whether that is a good thing or not; suffice to say that this is a well known model that is very easy for a lot of users to grasp.

It is also a pretty hard model to actually design and build. I recently had a call from a friend who was tasked with building a Facebook-like timeline. Like most such tasks, we have the notion of a social network, with people following other people. I assume that this isn’t actually YASNP (yet another social network project), but I didn’t check too deeply.

The question was how to actually build the timeline. The first thing that most people would try is something like this:

```csharp
var user = session.Load<User>(userId);
var timelineItems =
    session.Query<Items>()
        .Where(x => x.Source.In(user.Following))
        .OrderByDescending(x => x.PublishedAt)
        .Take(30)
        .ToList();
```

Now, this looks good, and it would work, as long as you have a small number of users and no one follows a lot of people. And as long as you don’t have a lot of items. And as long as you don’t have to do any additional work. When any of those assumptions is broken… well, welcome to unpleasantville, population: you.

It can’t work. And I don’t care what technology you are using for storage. You can’t create a good solution using queries for something like the timeline.

Nitpicker corner:

- If you have users that are following a LOT of people (and you will have those), you are likely to get into problems with the query.

- The more items you have, the slower this query becomes, since you need to sort them all before you can return results. And you are likely to have a LOT of them.

- You can’t really shard this query nicely or easily.

- You can’t apply additional filtering in any meaningful way.

Let us consider the following scenario. Let us assume that I care for this Rohit person. But I really don’t care for Farmville.

And then:

Now, try to imagine doing this sort of thing in a query. For fun, assume that I do care for Farmville updates from some people, but not from all. That is what I meant when I said that you want to do meaningful filtering.

You can’t do this using queries. Not in any real way.

Instead, you have to turn it around. You would do something like this:

```csharp
var user = session.Load<User>(userId);
var timelineItems = session.Query<TimeLineItems>()
    .Where(x => x.ForUser == userId)
    .OrderBy(x => x.Date)
    .ToList();
```

Note how we structure this. There is a set of TimeLineItems objects, which store a bit of information about a set of items. Usually we would have one per user per day. Something like:

- users/123/timeline/2013-03-12

- users/123/timeline/2013-03-13

- users/123/timeline/2013-03-14

That means that we get well scoped values, we only need to search on a single set of items (easily sharded, with a well known id, which means that we can also just load them by id, instead of querying for them).
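Because the ids follow a predictable scheme, they can be computed rather than queried. Below is a minimal sketch of such a helper, assuming one document per user per day and wanting the newest day first; TimelineIds.ForLastDays is a hypothetical name. The resulting ids can then be fetched with a single multi-document load instead of a query.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;

// Hypothetical helper: ids of a user's per-day timeline documents,
// following the users/123/timeline/2013-03-12 scheme, newest day first.
public static class TimelineIds
{
    public static List<string> ForLastDays(string userId, DateTime today, int days)
    {
        var ids = new List<string>(days);
        for (int i = 0; i < days; i++)
        {
            var day = today.Date.AddDays(-i);
            // Invariant culture keeps the date part stable regardless of the machine's locale.
            ids.Add(userId + "/timeline/" + day.ToString("yyyy-MM-dd", CultureInfo.InvariantCulture));
        }
        return ids;
    }
}
```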

Of course, that means that you need something that builds those timeline documents. That is usually an async process that runs whenever a user updates something. It goes something like this:

```csharp
public void UpdateFollowers(string itemId)
{
    var item = session.Include<Item>(x => x.UserId)
        .Load(itemId);

    var user = session.Load<User>(item.UserId);

    // user.Followers is a list of documents holding batches of followers.
    // We assume that we might have a LOT, so we use this technique
    // to avoid loading them all into memory at once:
    // http://ayende.com/blog/96257/document-based-modeling-auctions-bids
    foreach (var followersDocId in user.Followers)
    {
        NotifyFollowers(followersDocId, item);
    }
}

public void NotifyFollowers(string followersDocId, Item item)
{
    var followers = session.Include<FollowersCollection>(x => x.Followers)
        .Load(followersDocId);

    foreach (var follower in followers.Followers)
    {
        var user = session.Load<User>(follower);
        if (user.IsMatch(item) == false)
            continue;
        AddToTimeLine(follower, item);
    }
}
```

As you can see, we are batching the operation: we load the followers in batches and, based on each follower’s settings, decide whether that item should be added to their timeline.
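The filtering step is where this model pays off, so here is an in-memory sketch of it. Everything in it is hypothetical (Item, Follower, TimelineStore and their members stand in for the documents and session calls above): the per-follower IsMatch models the Farmville scenario, where a follower mutes an app in general but still wants its updates from a few specific sources. Each accepted item is appended to that follower’s per-day timeline document, keyed with the users/123/timeline/2013-03-12 scheme.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;

// Hypothetical shapes for an in-memory model of fan-out on write.
public sealed class Item { public string Id; public string Source; public string App; public DateTime PublishedAt; }

public sealed class Follower
{
    public string Id;
    public HashSet<string> MutedApps = new HashSet<string>();         // e.g. "Farmville"
    public HashSet<string> AllowedAppSources = new HashSet<string>(); // still wanted from these sources

    // Plays the role of user.IsMatch(item): plain updates always pass; app updates
    // pass unless the app is muted and the source is not explicitly allowed.
    public bool IsMatch(Item item) =>
        item.App == null || !MutedApps.Contains(item.App) || AllowedAppSources.Contains(item.Source);
}

public sealed class TimelineStore
{
    // timeline document id -> item ids recorded for that day
    public readonly Dictionary<string, List<string>> Docs = new Dictionary<string, List<string>>();

    public void NotifyFollowers(IEnumerable<Follower> batch, Item item)
    {
        foreach (var follower in batch)
        {
            if (!follower.IsMatch(item))
                continue; // filtered out for this follower only
            var docId = follower.Id + "/timeline/" +
                item.PublishedAt.ToString("yyyy-MM-dd", CultureInfo.InvariantCulture);
            if (!Docs.TryGetValue(docId, out var items))
                Docs[docId] = items = new List<string>();
            items.Add(item.Id);
        }
    }
}
```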

Note that this has a lot of implications. Different people will see this show up in their timeline at different times (but usually very close to one another). Your memory usage is kept low, because you are only processing some of it at any given point in time. For users with a LOT of followers, and there will be some like those, you might want to build special code paths, but this should be fine even at its current stage.

What about post factum operations? Let us say that I want to start following a new user. This requires special treatment: you would have to read the latest timeline items from the newly followed user and start merging them with the existing timeline. Likewise when you need to remove someone, or add a new filter.
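For the “start following someone” case, the core operation is a merge of two newest-first lists. A minimal sketch, assuming each timeline entry is just a timestamp plus an item id (the TimelineMerge name and tuple layout are hypothetical): it walks both lists once, always taking the newer head, and stops after limit entries, so it never has to sort the full history.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical sketch: merge two newest-first timelines and keep the newest `limit` entries.
public static class TimelineMerge
{
    public static List<(DateTime At, string ItemId)> MergeNewestFirst(
        IReadOnlyList<(DateTime At, string ItemId)> existing,
        IReadOnlyList<(DateTime At, string ItemId)> incoming,
        int limit)
    {
        var result = new List<(DateTime At, string ItemId)>(limit);
        int i = 0, j = 0;
        while (result.Count < limit && (i < existing.Count || j < incoming.Count))
        {
            // Take whichever head is newer; ties favor the existing timeline.
            bool takeExisting = j >= incoming.Count ||
                (i < existing.Count && existing[i].At >= incoming[j].At);
            result.Add(takeExisting ? existing[i++] : incoming[j++]);
        }
        return result;
    }
}
```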

It is a lot more work than just changing the query, sure. But you can get things done this way. And you cannot get anywhere with the query only approach.