Well, Raven is pretty much done now, from feature perspective, so now is the time to look at the performance numbers, see where I goofed up, etc. I decided to use StackOverflow Data Dump as my sample source (using the March 2010), because that is an accessible real world data, large, data set that I can utilize.

I quickly wrote a simple ETL process to read the StackOverflow dump files and load them into Raven. I’ll speak about the ETL process in more detail in a future post, but for now, I want to talk about the numbers.

The ETL approach I used isn’t the most efficient one, I’ll admit. It involves doing multiple passes on the data. Basically it goes:

- foreach user in users.xml

- foreach badge in badges.xml

- update appropriate user document with new badge

- foreach post in posts.xml

- foreach vote in votes.xml

- foreach comment in comments.xml

As you can imagine, this means that we are asking the server to do a lot of duplicated work. It would be better if we would pre-prepare the values and insert them only once, instead of insert & then update them. Unfortunately, the data sizes are large enough that doing trying to do this in memory is too expensive. I can think of several approaches to try to optimize this, but at the end, I don’t really see a reason. This ETL process is probably how people will write it in the real world, so there is no point in trying too hard.

In the end, using the March 2010 dump from stack overflow, I ended up with a Raven DB instance with: 2,329,607 documents. From random sampling, most documents are 1 KB – 5 KB in since, with several that are significantly larger than that.

Here are two typical documents:

// posts/2321816

{

"LastEditorUserId":200145,

"LastEditorDisplayName":"",

"PostTypeId":1,

"Id":2321816,

"Title":"Storing commercial files on the server",

"Score":0,

"CreationDate":"\/Date(1266952829257+0200)\/",

"CommentCount":2,

"AcceptedAnswerId":2321854,

"LastActivityDate":"\/Date(1266953391687+0200)\/",

"Tags":"",

"LastEditDate":"\/Date(1266953391687+0200)\/",

"Body":"Where would you store files that are meant for sale on an e-commerce website? \n",

"OwnerUserId":200145,

"AnswerCount":3,

"ViewCount":45,

"comments":[

{

"Score":null,

"CreationDate":"\/Date(1266952919510+0200)\/",

"Text":"are they \"sensitive\" information?",

"UserId":"users/203907"

},

{

"Score":null,

"CreationDate":"\/Date(1266953092057+0200)\/",

"Text":"I wouldn't say they are sensitive information. They are just commercial files and a free access to them shouldn't be allowed.",

"UserId":"users/200145"

}

]

}

// users/33

{

"Age":28,

"CreationDate":"\/Date(1217583130963+0300)\/",

"Id":33,

"UpVotes":354,

"LastAccessDate":"\/Date(1267455753720+0200)\/",

"DisplayName":"John",

"Location":"Southampton, England",

"AboutMe":"C# and VB.net Developer working primarily in windows service and winforms applications.\r\n\r\n ",

"EmailHash":"d0b76ae7bf261316683cad31ba0bad91",

"Reputation":3209,

"DownVotes":3,

"Views":334,

"badges":[

{

"Name":"Teacher",

"Dates":[

"\/Date(1221458104020+0300)\/"

]

},

{

"Name":"Student",

"Dates":[

"\/Date(1221458104190+0300)\/"

]

},

{

"Name":"Editor",

"Dates":[

"\/Date(1221458104377+0300)\/"

]

},

{

"Name":"Cleanup",

"Dates":[

"\/Date(1221458104470+0300)\/"

]

},

{

"Name":"Organizer",

"Dates":[

"\/Date(1221458104737+0300)\/"

]

},

{

"Name":"Supporter",

"Dates":[

"\/Date(1221458104893+0300)\/"

]

},

{

"Name":"Critic",

"Dates":[

"\/Date(1221458104987+0300)\/"

]

},

{

"Name":"Citizen Patrol",

"Dates":[

"\/Date(1221458105173+0300)\/"

]

},

{

"Name":"Scholar",

"Dates":[

"\/Date(1221458105483+0300)\/"

]

},

{

"Name":"Enlightened",

"Dates":[

"\/Date(1221458112677+0300)\/"

]

},

{

"Name":"Taxonomist",

"Dates":[

"\/Date(1221458113427+0300)\/"

]

},

{

"Name":"Nice Answer",

"Dates":[

"\/Date(1221458638367+0300)\/",

"\/Date(1236274052530+0200)\/",

"\/Date(1244026052343+0300)\/",

"\/Date(1244726552923+0300)\/",

"\/Date(1257249754030+0200)\/"

]

},

{

"Name":"Nice Question",

"Dates":[

"\/Date(1225182453990+0200)\/",

"\/Date(1231624653367+0200)\/"

]

},

{

"Name":"Commentator",

"Dates":[

"\/Date(1227767555493+0200)\/"

]

},

{

"Name":"Autobiographer",

"Dates":[

"\/Date(1233569254650+0200)\/"

]

},

{

"Name":"Necromancer",

"Dates":[

"\/Date(1234393653060+0200)\/",

"\/Date(1257860556480+0200)\/"

]

},

{

"Name":"Popular Question",

"Dates":[

"\/Date(1236054752283+0200)\/",

"\/Date(1248302252213+0300)\/",

"\/Date(1248607054807+0300)\/",

"\/Date(1250013763393+0300)\/",

"\/Date(1251215254023+0300)\/",

"\/Date(1258400556113+0200)\/"

]

},

{

"Name":"Yearling",

"Dates":[

"\/Date(1249237664163+0300)\/"

]

},

{

"Name":"Notable Question",

"Dates":[

"\/Date(1249583857093+0300)\/"

]

},

{

"Name":"Beta",

"Dates":[

"\/Date(1221512400000+0300)\/"

]

},

{

"Name":"Self-Learner",

"Dates":[

"\/Date(1251201753523+0300)\/"

]

},

{

"Name":"Civic Duty",

"Dates":[

"\/Date(1260347854457+0200)\/"

]

}

]

}

The database size is: 6.29 GB (out of which about 158 MB is for the default index).

Total number of operations: 4,667,100

The first major issue was that I couldn’t tell how many documents I had in the database, get document count turned out to be an O(N) operation(!), that was easy to fix, thankfully.

The second major issue was that Raven didn’t really handle indexing of a lot of documents very well, it would index each document as a standalone operation. The problem is that there are additional costs for doing this (opening & closing the index for writing, mostly), which slow things down enormously. I fixed that by implementing index merging, so documents that were inserted at the same time would be index together (up to some limit that I am still playing at).

Once those were fixed, I could try doing some measurements…

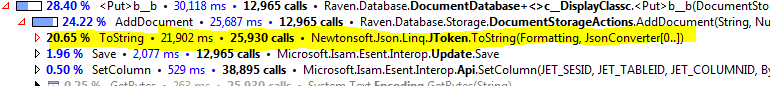

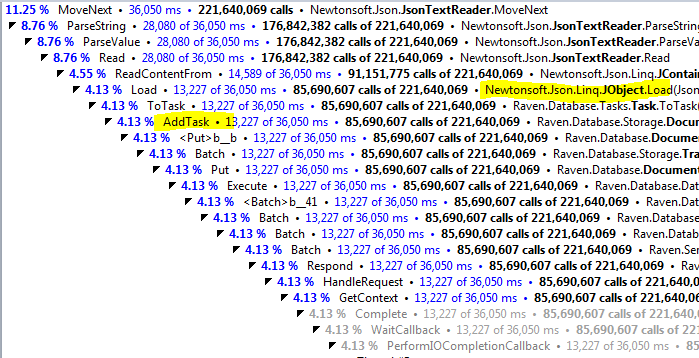

- With indexing disabled, inserting 2.3 million documents takes about 1 hour & 15 minutes. Considering that we made 4.6 million operations (including insert & updates), we are talking about over a 1000 operations per second (using single threaded mode).

I am very happy with those results.

- With indexing enabled, and a single index defined, the process takes much longer. About 3 hours & 15 minutes, giving us about 400 operations per second (again, single threaded), or about 2.5 milliseconds per operation.

- Waiting for the background indexing task to complete took a lot longer, another 2 hours & 45 minutes. This gives me just over 200 documents indexed per second.

I am pleased, but not really happy about those results. I think that we can do better, and I certainly intend to optimize things.

But that is for later, right now I want to see how Raven behaves when it has that many documents.

| What we test |

URL |

Timing |

| Looking up database stats |

GET /stats |

00:00:00.0007919 |

| Browse documents (start) |

GET /docs/?start=0&pageSize=25 |

00:00:00.0429041 |

| Browse documents (toward the end) |

GET /docs/?start=2300000&pageSize=25 |

00:00:00.0163617 |

| Get document by id (toward the start) |

GET /docs/users/32 |

00:00:00.0017779 |

| Get document by id (toward the end) |

GET /docs/posts/2321034 |

00:00:00.0022796 |

| Query index (start) |

GET /indexes/Raven/DocumentsByEntityName?query=Tag:Users&pageSize=25 |

00:00:00.0388772 |

| Query index (toward the end) |

GET /indexes/Raven/DocumentsByEntityName?query=Tag:Users&pageSize=25&start=100000 |

00:00:00.5988617 |

| Query index (midway point) |

GET /indexes/Raven/DocumentsByEntityName?query=Tag:Users&pageSize=25&start=20000 |

00:00:00.1644477 |

| Query index #2 (start) |

GET /indexes/Raven/DocumentsByEntityName?query=Tag:posts&pageSize=25 |

00:00:00.4957742 |

| Query index #2 (toward the end) |

GET /indexes/Raven/DocumentsByEntityName?query=Tag:posts&pageSize=25&start=2000000 |

00:00:07.3789415 |

| Query index #2 (midway point) |

GET /indexes/Raven/DocumentsByEntityName?query=Tag:posts&pageSize=25&start=200000 |

00:00:02.2621174 |

The results are very interesting. It is gratifying to see that browsing documents and retrieving documents is blazing fast, and once I fixed the O(N) issue on the stats, that is fast as hell as well.

Querying the indexes is interesting. It is clear that Lucene doesn’t like to page deeply. On the other hand, I think that it is safe to say that we don’t really have to worry about this, since deep paging (going to page #4,000) is very unlikely, and we can take the half a second hit when it is happening.

Querying index #2 is troublesome, though. It don’t think that it should take that long (without paging, if you want to page to page 80,000, please wait), even if that query returns ~2.1 million results. I think that this is because some of the Lucene options that we use, so I’ll have to look further into that.

Nitpicker corner: Those are not the final numbers, we intend to improve upon them.