In the previous post, I showed how we can get a pretty nice pager (important for building a storage system) in under 100 lines of code using mmap(). If that was all of it, it would be a pretty short series of posts. However, I want to explore what it would take to own that part of the storage system and build our own pager from scratch. Let’s see what it would take to build a pager when we are doing the I/O ourselves.

In the mmap() implementation, I didn’t really have a lot of state. Just the mapping and that was pretty much it. When building our own, we need to track a whole lot more state. Off the top of my head, we need to:

- Track what pages we handed out to callers.

- Track usage of pages so we’ll know when to release them.

- Manage concurrency explicitly between threads.

- Handle several scenarios that were just… working in the mmap() implementation.

For example, let’s talk about what kind of state I need. Zig comes with a hash table (and does that beautifully for an unmanaged language), so I can do this, right?

pages: std.AutoHashMap(u64, Page),

That would be a mapping between page numbers and the memory we allocated for them. Except… that it doesn’t quite work like that. One of the key issues that we have to deal with is the fact that while most of the time we will ask for a single page, we can also ask for a contiguous run of pages.

We can safely assume that the caller is responsible for ensuring that there is no duplication. In other words, the following sequence of calls is invalid:
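
As an illustrative sketch of that contract (the `PageRange` type and its `overlaps` function are hypothetical names, not part of the pager itself), two requests conflict when their page ranges overlap:

```zig
const std = @import("std");

/// Hypothetical description of a request: `count` pages starting at `first`.
pub const PageRange = struct {
    first: u64,
    count: u64,

    /// True when the two ranges share at least one page.
    pub fn overlaps(a: PageRange, b: PageRange) bool {
        return a.first < b.first + b.count and b.first < a.first + a.count;
    }
};
```

Under this reading, asking for pages 5 through 10 and then separately for page 6 would break the contract, while asking for page 11 afterward would be fine.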

I have to note that this is a very important limit on how much complexity we have to deal with. Another thing to deal with is concurrency. How do we deal with scenarios where two threads want to get pages (which may or may not be the same)?

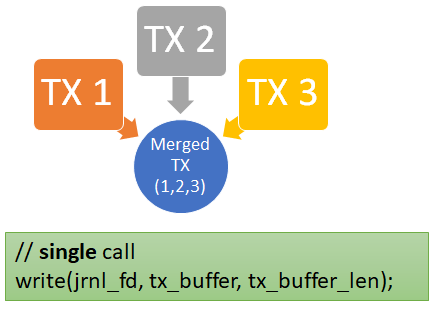

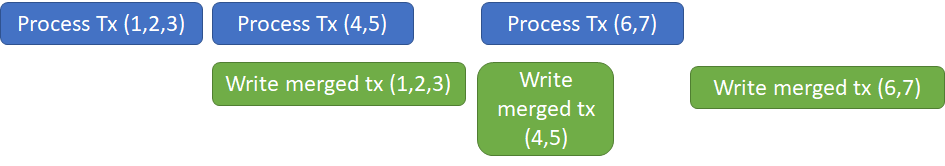

Another consideration is reducing the overall cost of I/O. We don’t want to issue too many operations, both for reads and for writes. That pushes us toward batching operations as much as possible. Here is the overall design that I have for the file pager:

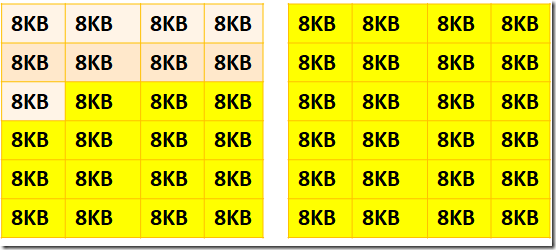

In other words, even though we are dealing with 8KB pages, the pager itself will work with chunks of 2MB in size each time. The idea is that we can amortize the cost of going to the disk by ensuring that we’ll do bulk I/O. That, in turn, means that we have to consider some aspects of our system very early on.
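
To make the relationship between pages and chunks concrete, here is a minimal sketch of the arithmetic. The sizes come from the text above; the helper names are my own assumptions for illustration and not the pager's actual API:

```zig
const std = @import("std");

// Sizes taken from the post: 8 KB pages, fetched from disk in 2 MB chunks.
pub const page_size: u64 = 8 * 1024;
pub const chunk_size: u64 = 2 * 1024 * 1024;
pub const pages_per_chunk: u64 = chunk_size / page_size; // 256 pages

/// Hypothetical helper: index of the chunk that holds `page`.
pub fn chunkOfPage(page: u64) u64 {
    return page / pages_per_chunk;
}

/// Hypothetical helper: byte offset of `page` inside its chunk.
pub fn offsetInChunk(page: u64) u64 {
    return (page % pages_per_chunk) * page_size;
}

/// Hypothetical helper: file offset where the chunk holding `page` starts.
pub fn chunkFileOffset(page: u64) u64 {
    return chunkOfPage(page) * chunk_size;
}
```

A request for any one of the 256 pages in a chunk would then translate into a single 2MB read starting at `chunkFileOffset(page)`.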

In the case of the mmap pager, we didn’t really need to think about caching, that was the responsibility of the operating system. In the case of this pager, we must have a cache, and if we cache a chunk, we can probably benefit greatly from locality of reference, which is always nice.

The 2MB chunk size design decision complicates our lives. The pager needs to handle both single-page access and values that may span multiple pages. As long as they reside in a single chunk, that is pretty easy. But we need to consider how we’ll manage to work with values that are bigger than 2MB in size. It’s interesting, because even at this very early stage, a design decision about how much we fetch from the disk at a time has an impact on the implementation of the entire system.

As early as we are, we can make the following assumptions about (and requirements of) our callers:

- Most of the access is going to be for single pages.

- Some of the accesses will be for multiple pages, but under the 2 MB chunk limit.

- Few accesses will need to work with multiple pages over the 2 MB limit.
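
The three cases above can be told apart with simple arithmetic on the page numbers. This is a sketch only; `AccessKind` and `classify` are hypothetical names, and 256 is the number of 8KB pages in a 2MB chunk:

```zig
const std = @import("std");

const pages_per_chunk: u64 = 256; // 2 MB chunk / 8 KB page

/// Hypothetical names for the three kinds of access listed above.
pub const AccessKind = enum { single_page, within_chunk, multi_chunk };

/// Classify a request for `count` pages starting at `first_page`.
pub fn classify(first_page: u64, count: u64) AccessKind {
    std.debug.assert(count > 0);
    if (count == 1) return AccessKind.single_page;
    const first_chunk = first_page / pages_per_chunk;
    const last_chunk = (first_page + count - 1) / pages_per_chunk;
    if (first_chunk == last_chunk) return AccessKind.within_chunk;
    return AccessKind.multi_chunk;
}
```

The expectation is that the first branch is the hot path, the second is common, and the third is rare.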

That is important because it impacts the way we think about the system. Earlier in this post, I mentioned using a hash map to store the references to the pages. With chunks, we can probably adjust slightly and be done with it, right?

Except that we really can’t. One of the primary issues that we have to deal with is the fact that this is meant to be concurrent. A hash map isn’t going to support that and will need to be protected by a lock. Interestingly, most concurrent data structures pretty much require garbage collection of some sort and building them with an unmanaged system is quite complex.

How do we deal with this issue? It turns out that it is far simpler to have an array to hold those references and access each element using atomic instructions. Here we run into another design decision. Are we going to have a single file or multiple files? That matters because if we have a single file, we need to deal with increasing the file size on the fly. That means that the array of references would need to grow, and that is also complex with concurrent access. If we have multiple files, we can just create a completely new file as needed. We can allocate a single array at the maximum file size and not worry about it. There are other reasons why we might want to use multiple files (such as making it easier to release space back to the file system), so we’ll go with multiple files.
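
Here is a minimal sketch of what a fixed array of references accessed with atomic instructions might look like. Everything in it is an assumption for illustration: the slot layout (a bare `u64` where 0 means "not loaded"), the `max_chunks` bound, and the function names. It also assumes Zig 0.12 or newer, where the atomic orderings are spelled `.acquire` and `.acq_rel`:

```zig
const std = @import("std");

// Assumed fixed upper bound on chunks per file, so the array never grows.
const max_chunks: usize = 4096;

// Hypothetical 8-byte slot per chunk; 0 means "chunk not loaded".
var chunk_refs = [_]u64{0} ** max_chunks;

/// Read a slot atomically; no lock is taken.
pub fn loadRef(chunk: usize) u64 {
    return @atomicLoad(u64, &chunk_refs[chunk], .acquire);
}

/// Publish a value into an empty slot.
/// Returns false if another thread filled the slot first.
pub fn tryPublish(chunk: usize, value: u64) bool {
    // @cmpxchgStrong returns null on success, or the current value on failure.
    return @cmpxchgStrong(u64, &chunk_refs[chunk], 0, value, .acq_rel, .acquire) == null;
}
```

Because the array has a fixed size, readers never race with a resize; the only contention is on individual slots.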

That means that we can reasonably set the maximum file size at 8GB (remember the big values issue; I think it is reasonable to set the max size of a value at 2GB, so 8GB is plenty). With 8GB files, we are talking about 4,096 chunks of 2MB each. Assuming that we’ll use an 8-byte struct to hold the data about each chunk, that means that we can safely allocate the maximum size of 32KB upfront. If we need to increase the size of the file, we already allocated the place for its metadata. That gives us a far simpler system (no need to try to manage concurrent accesses to a growing array) at a small memory cost.
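
The sizing can be checked at compile time. In this sketch, `ChunkMetadata` is a placeholder I made up to stand in for whatever 8-byte record ends up describing a chunk:

```zig
const std = @import("std");

const max_file_size: u64 = 8 * 1024 * 1024 * 1024; // 8 GB
const chunk_size: u64 = 2 * 1024 * 1024; // 2 MB
const max_chunks: u64 = max_file_size / chunk_size;

// Hypothetical 8-byte record per chunk; the single field is a placeholder.
const ChunkMetadata = extern struct { raw: u64 };

const metadata_bytes: u64 = max_chunks * @sizeOf(ChunkMetadata);

comptime {
    // Fails the build if the numbers in the text ever stop adding up.
    std.debug.assert(max_chunks == 4096);
    std.debug.assert(metadata_bytes == 32 * 1024);
}
```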

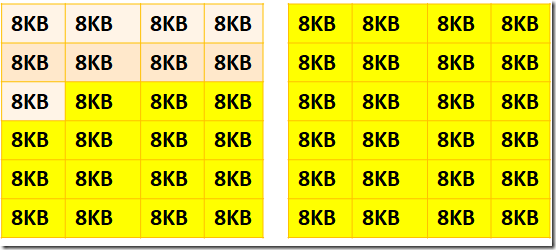

Now, we can require that page allocations that are below 2MB in size will never cross a chunk boundary. But what happens when we have a value whose size exceeds 2MB? The answer to that is that we are going to require the calling code to follow specific patterns for that. We require that any value that is greater than 2MB will be aligned on a 2MB boundary from the end of the final chunk. Here is what this looks like: the yellow marked pages are allocated on two separate chunks, and you can see how we aligned this on the end:

The nice thing about this approach is that we know that the caller will not do partial calls. If we asked for pages 5 - 10, there can be no call to page 6 on an independent basis. As such, when we ask for a value that is bigger than a single chunk, it will always be expressed as a load from the starting chunk to the end. That means that we can load the full value in a single I/O call. Here, again, we have very low level concerns affecting how we lay out the data on disk.
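
As a sketch of how I read that rule, a large value is placed so that its last page is the last page of its final chunk, and loading it means reading every chunk it touches in one go. `Placement` and `placeLargeValue` are hypothetical names for illustration:

```zig
const std = @import("std");

const pages_per_chunk: u64 = 256; // 2 MB chunk / 8 KB page

/// Hypothetical result: where the value starts and which chunks one read must cover.
pub const Placement = struct {
    first_page: u64,
    first_chunk: u64,
    chunk_count: u64,
};

/// Place a value of `page_count` pages (more than one chunk's worth) so that
/// it ends exactly at the end of its final chunk.
pub fn placeLargeValue(first_chunk: u64, page_count: u64) Placement {
    std.debug.assert(page_count > pages_per_chunk);
    // Round up to whole chunks.
    const chunk_count = (page_count + pages_per_chunk - 1) / pages_per_chunk;
    // One past the last page of the final chunk.
    const end_page = (first_chunk + chunk_count) * pages_per_chunk;
    return .{
        .first_page = end_page - page_count,
        .first_chunk = first_chunk,
        .chunk_count = chunk_count,
    };
}
```

For example, a 300-page value placed starting in chunk 2 would span chunks 2 and 3 and begin at page 724, leaving the unused pages at the front of chunk 2 rather than at the tail of chunk 3.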

There are other aspects that we need to consider, such as eviction policies, how to handle concurrency, etc. But that is enough for one post. I intentionally want to limit the scope of what we do to avoid getting mired in the details. Expect more in the next post in the series.