When I sat down some years ago to decide how to deal with that document database idea that wouldn’t let me sleep, I did several things. One of them was to draw up the architecture of the system and work out how to approach the technical challenges that we were seeing. But a much more interesting aspect was deciding to make this into a commercial product. One of the things that I did there was build a business plan for RavenDB. According to that business plan, we were supposed to hit the first production deployment of RavenDB round about now.

In practice, adoption of RavenDB has far outstripped my hopes, and we actually had production deployments of RavenDB within a few months of the public release. What took longer than deploying RavenDB was getting the stories back about it. I am going to start posting those as soon as I get authorization from the customers to do so.

The following is from the first production deployment of RavenDB…

1. Who are you? (Name, company, position)

Henning Christiansen, Senior Consultant at Webstep Fokus, Norway (www.webstep.no). I am working on a development team for a client in the financial sector.

2. In what kind of project / environment did you deploy RavenDB?

The client is, naturally, very focused on delivering solutions and value to the business at a high pace. The solutions we build are for both internal and external end users. On top of the result-oriented environment, there is a heavy focus on building sustainable and maintainable solutions. It is crucial that modules can be changed, removed or added in the future.

In this particular project, we built a solution based on NServiceBus for communication and RavenDB for persistence. This project is part of a larger development effort, and integrates with both old and new systems. The project sounds unimpressive when described in short, but I'll give it a go:

The project's main purpose was to replace an existing system for distribution of financial analysis reports. Analysts/researchers work on reports, and submit them to a proprietary system which adds additional content such as tables and graphs of relevant financial data, and generates the final report as XML and PDF. One of the systems created during this project is notified when a report is submitted, pulls it out of the proprietary system, tags it with relevant metadata and stores it in a RavenDB instance before notifying subscribing systems that a new report is available. The reports are instantly available on the client's website for customers with research access.

One of the subscribing systems is the distribution system, which distributes the reports by email or SMS depending on the customer's preferences. The customers have very fine-grained control over their subscriptions, and can filter them on things such as sector, company, and report type. The user preferences are stored in RavenDB. When a user changes preferences, other systems are notified so that they can act on what the customers are interested in.
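To make the subscription filtering described above concrete, here is a minimal sketch in Python. It is not the client's code and does not use RavenDB or NServiceBus. The names `Report`, `Subscription` and `recipients` are hypothetical, and so is the rule that an empty filter means "no restriction".

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Report:
    # Hypothetical metadata fields, based on the filters named in the text.
    title: str
    sector: str
    company: str
    report_type: str


@dataclass
class Subscription:
    customer: str
    channel: str  # "email" or "sms"
    sectors: set = field(default_factory=set)
    companies: set = field(default_factory=set)
    report_types: set = field(default_factory=set)

    def matches(self, report):
        # Assumption: an empty filter set places no restriction on that field.
        return (
            (not self.sectors or report.sector in self.sectors)
            and (not self.companies or report.company in self.companies)
            and (not self.report_types or report.report_type in self.report_types)
        )


def recipients(report, subscriptions):
    """Return (customer, channel) pairs for every subscription the report satisfies."""
    return [(s.customer, s.channel) for s in subscriptions if s.matches(report)]
```

In a setup like the one described, each subscription would be a stored document, and a "new report available" notification would trigger something like `recipients` to decide who receives an email or an SMS.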

3. What made you select a NoSQL solution for your project?

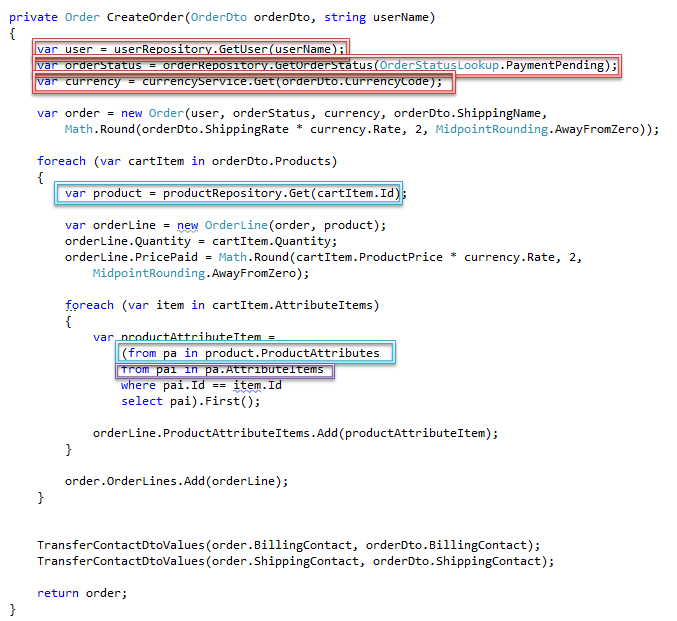

We knew the data would be read a lot more than it would be written, and it needed to be read fast. A lot of the team members were heavily battle-scarred from struggling with ORMs in the past, and with a very tight deadline we weren't very interested in spending a lot of time maintaining schemas and mappings.

Most of what we would store in this project could be considered a read model (à la CQRS) or an aggregate root (DDD), so a NoSQL solution seemed like a perfect fit. Getting rid of the impedance mismatch couldn't hurt either. We had a lot of reasons that nudged us in the direction of NoSQL, so if it hadn't been RavenDB it would have been something else.

4. What made you select RavenDB as the NoSQL solution?

It was the new kid on the block when the project started, and had a few very compelling features such as a native .NET API which is maintained and shipped with the database itself. Another thing was the transaction support. A few of us had played a bit with RavenDB, and compared to other NoSQL solutions it seemed like the most hassle-free way to get up and running in a .NET environment. We were of course worried about RavenDB being in an early development stage and without reference projects, so we had a plan B in case we should hit a roadblock with RavenDB. Plan B was never put into action.

5. How did you discover RavenDB?

I subscribe to your blog (http://ayende.com/Blog/) :)

There was a lot of fuss about NoSQL at the time, and RavenDB received numerous positive comments on Twitter and in blog posts.

6. How long did it take to learn to use RavenDB?

I assume you're asking about the basics, as there's a lot to be learnt if you want to. What the team struggled with the most was indexes. This was before dynamic indexes, so we had to define every index up front, and make sure they were in the database before querying. Breaking free from the RDBMS mindset and wrapping our heads around how indexes work, map/reduce, and how and when to apply the different analyzers took some time, and the documentation was quite sparse back then. The good thing is that you don't really need to know a lot about this stuff to be able to use RavenDB on a basic level.

The team members had different levels of exposure to RavenDB, so guessing at how long it took to learn is hard. But in general I think it's fair to say that indexes were the team's biggest hurdle when learning how to use RavenDB.
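For readers who have not met map/reduce before, the idea behind such an index can be sketched in a few lines of Python. This is a conceptual illustration only, not RavenDB's index API. The document shape (a `sector` field) and the function names are assumptions made for the example.

```python
from collections import defaultdict


def map_report(doc):
    # Map step: emit one (key, value) pair per document.
    yield doc["sector"], 1


def reduce_counts(key, values):
    # Reduce step: combine all values emitted for the same key.
    return sum(values)


def build_index(docs):
    # Group the mapped values by key, then reduce each group to a single entry.
    grouped = defaultdict(list)
    for doc in docs:
        for key, value in map_report(doc):
            grouped[key].append(value)
    return {key: reduce_counts(key, values) for key, values in grouped.items()}
```

Here the result is computed in one pass over all documents. The point of defining an index up front, as described above, is that the database maintains this kind of result so that queries can read it instead of scanning every document.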

7. What are you doing with RavenDB?

On this particular project we weren't using a lot of features, as we were learning to use RavenDB while racing to meet a deadline.

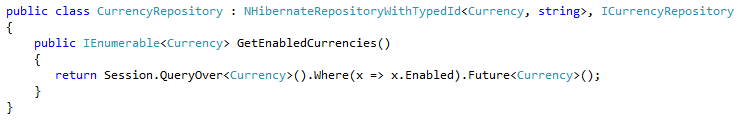

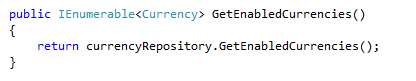

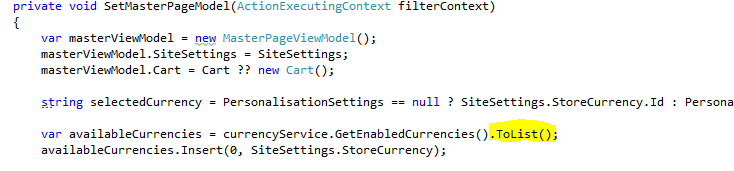

Aside from storing documents, we use custom and dynamic indexes, projections, the client API, and transactions. We're also doing some hand-rolled Lucene queries.

On newer projects, however, with more experience and confidence with RavenDB, and as features and bundles keep on coming, we're doing our best to keep up with the development and make the best use of RavenDB's features to solve our problems.

8. What was the experience, compared to other technologies?

For one thing, getting up and running with RavenDB is super-easy and only takes a couple of minutes. This is very different from the RDBMS+ORM experience, which in comparison seems like a huge hassle. Working with an immature and rapidly changing domain model was also a lot easier, as we didn't need to maintain mappings. Also, since everything is a document, which in turn easily maps to an object, you're sort of forced to always work through your aggregate roots. This requires you to think through your domain model perhaps a bit more carefully than you would with other technologies, which might more easily allow you to take shortcuts and thus compromise your domain model.

9. What do you consider to be RavenDB strengths?

It's fast, easy to get started with, and it has a growing community of helpful and enthusiastic users. Our support experience has also been excellent; any issues we've had have usually been fixed within hours. The native .NET API is also a huge benefit if you're working in a .NET environment.

10. What do you consider to be RavenDB weaknesses?

If we're comparing apples to apples, I can't think of any weaknesses compared to the other NoSQL solutions out there aside from the fact that it's new. Hence it's not as heavily tested in production environments as some of the older NoSQL alternatives might be. The relatively limited documentation, which admittedly has improved tremendously over the last few months, was also a challenge. The community is very helpful, so anything you can't find in the documentation can normally be answered by someone on the forum. There are also a lot of blog posts and example applications out there.

I find the current web admin UI a bit lacking in functionality, but hopefully the new Silverlight UI will take care of that.

11. Now that you are in production, do you think that choosing RavenDB was the right choice?

Yes, definitely. We've had a few pains and issues along the way, but that's the price you have to pay for being an early adopter. They were all quickly sorted out, and now everything's been ticking along like clockwork for months. I'm confident that choosing RavenDB over another persistence technology has allowed us to develop faster and spend more time on the problem at hand.

12. What would you tell other developers who are evaluating RavenDB?

I have little experience with other document databases, but obviously tested a bit and read blog posts when evaluating NoSQL solutions for this project. Since we decided to go with RavenDB there's been a tremendous amount of development done, and at this time none of the competitors are even close featurewise.