Reading code is a Skill (with a capital letter, yes) that is really important for developers. You cannot be a good developer without it.

Today I want to talk about one aspect of this: the ability to go into an unfamiliar codebase and extract a single piece of information. The idea is that we don’t need to understand the entire system, grok the architecture, etc. I want to understand one thing about it and get away as soon as I can.

For example, you know that project Xyz is doing some operation, and you want to figure out how this is done. So you need to look at the code and figure that out, then you can go your merry way.

Today, I’m interested in understanding how the LMDB project writes data to the disk on Windows. This is because LMDB is based around a memory-mapped model, and Windows doesn’t keep the data between file I/O and mmap I/O coherent.

LMDB is an embedded database engine (similar to Voron, and in fact, Voron is based on some ideas from LMDB) written in C. If you are interested in it, I wrote 11 posts going through every line of code in the project.

So I’m familiar with the project, but the last time I read the code was over a decade ago. From what I recall, the code is dense. There are about 11.5K lines of code in a single file, implementing the entire thing.

I’m using the code from here.

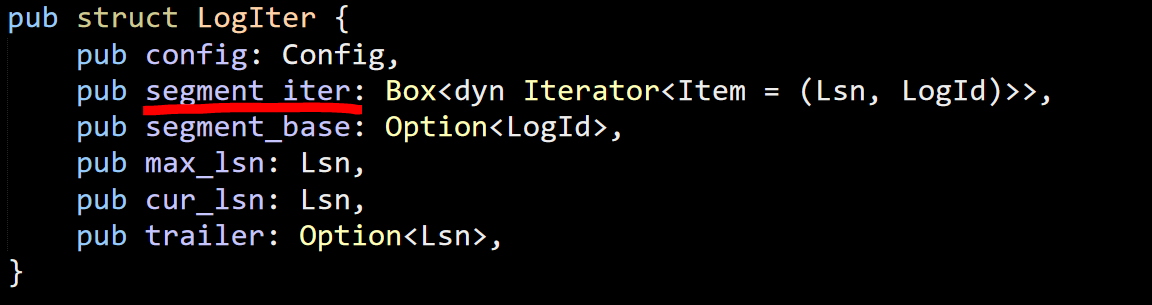

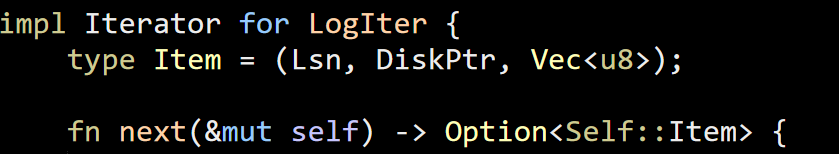

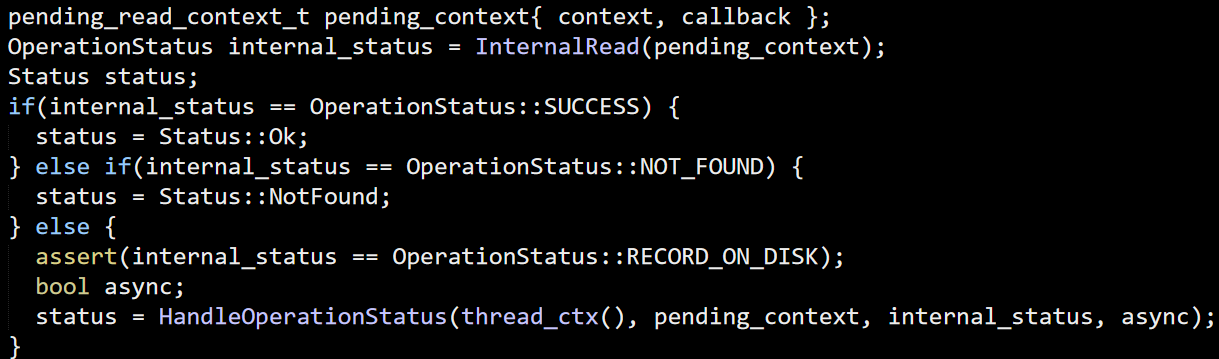

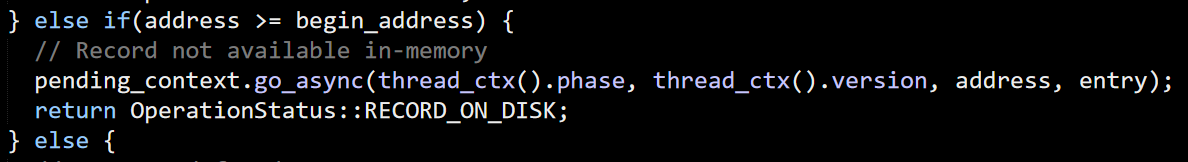

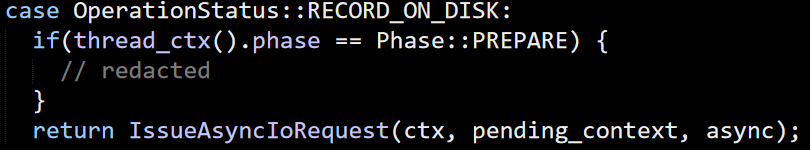

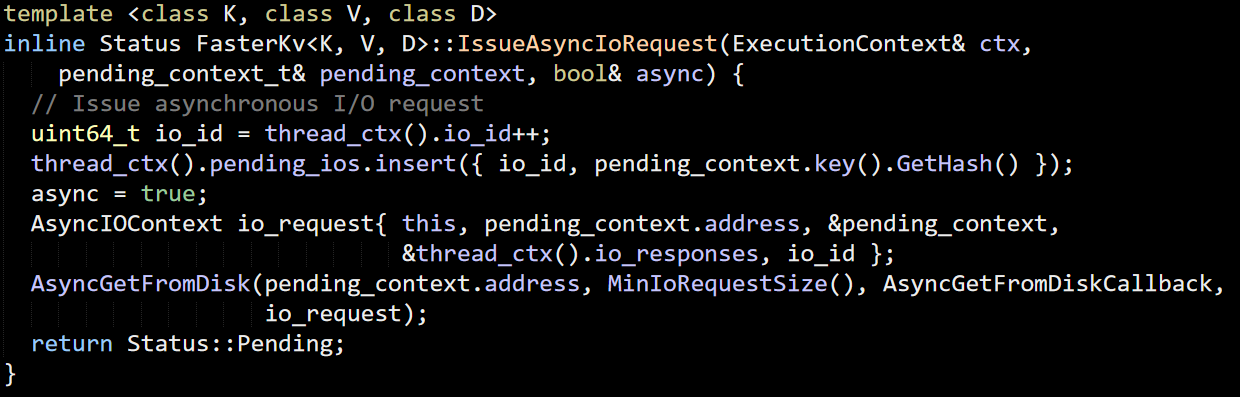

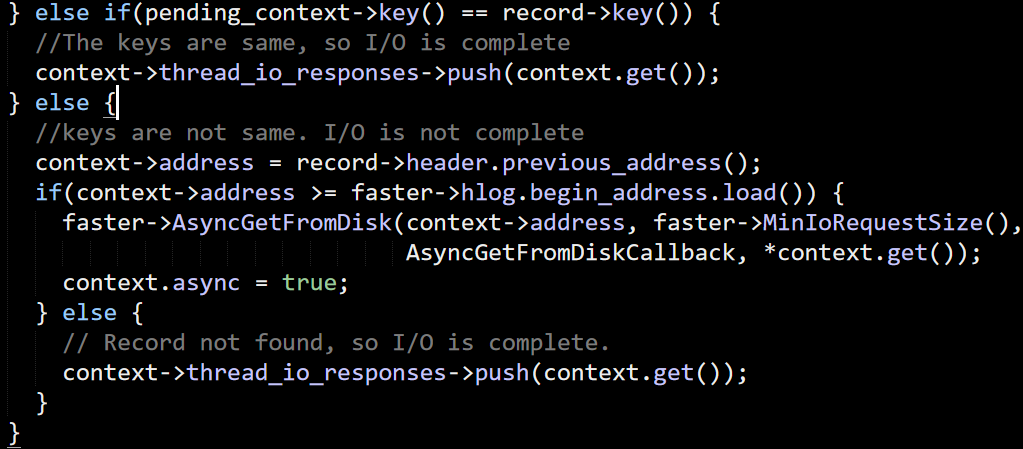

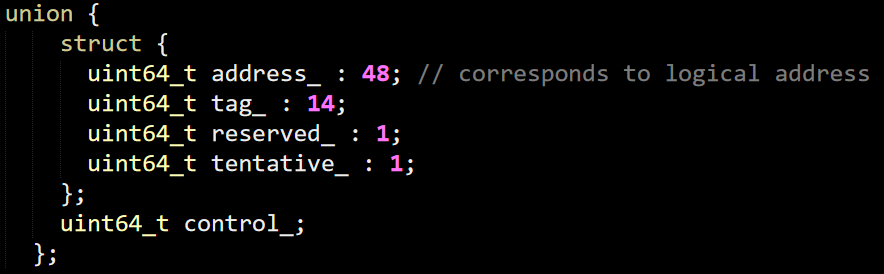

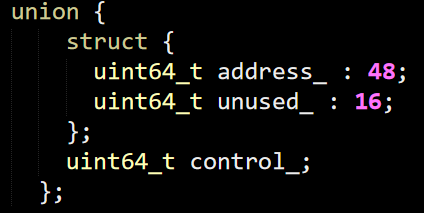

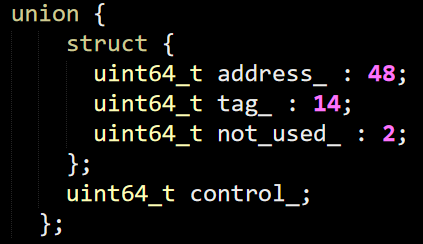

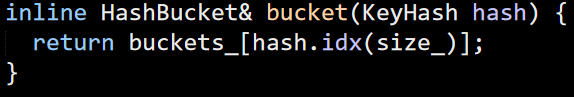

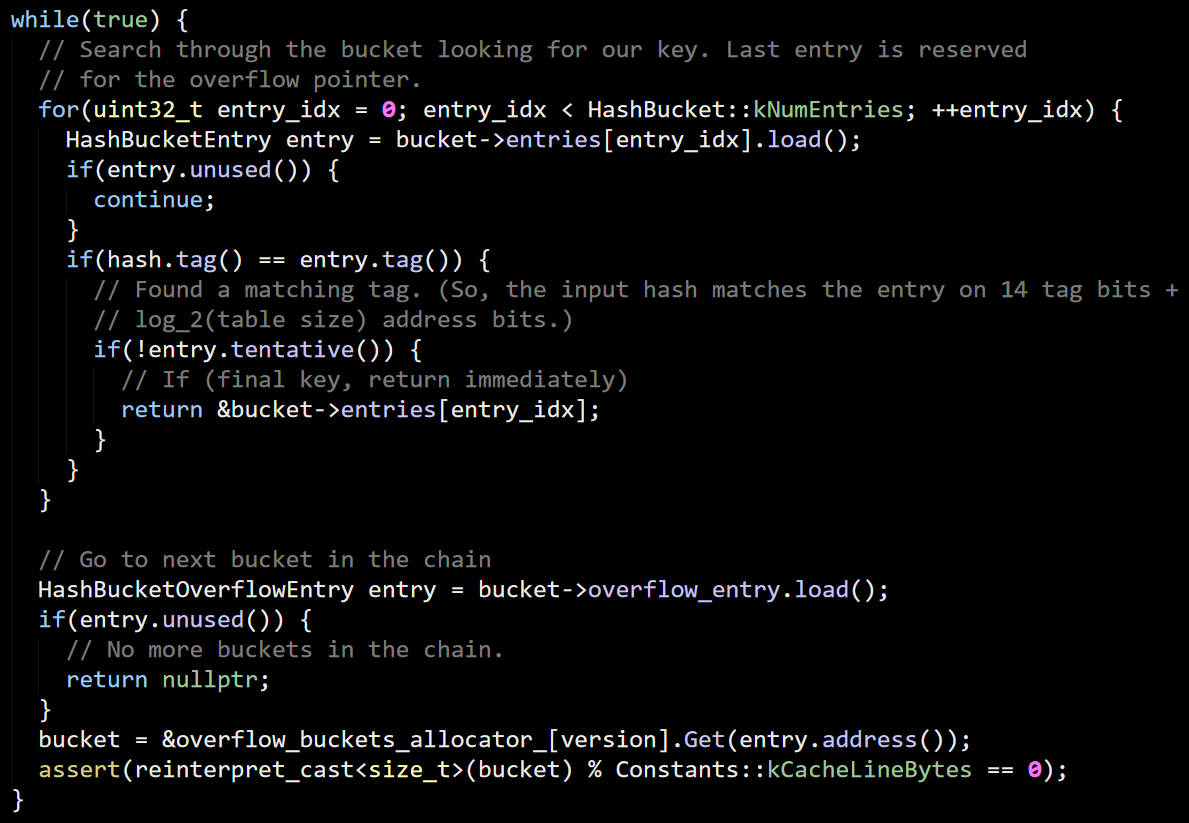

The first thing to do is find the relevant section in the code. I started by searching for the WriteFile() function, the Win32 API to write. The first occurrence of a call to this method is in the mdb_page_flush function.

I look at this code, and… there isn’t really anything there. It is fairly obvious and straightforward code (to be clear, that is a compliment). I was expecting to see a trick there. I couldn’t find it.

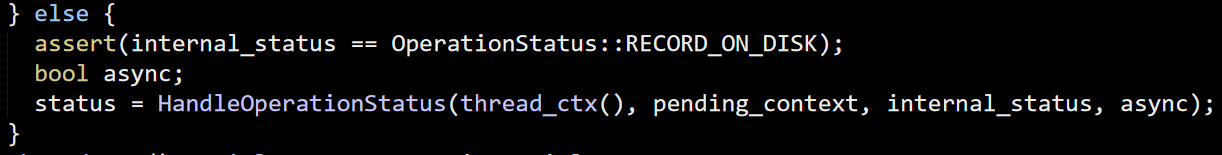

That meant either the code had a gaping hole and potential data corruption (highly unlikely) or I was missing something. That led me to a long trip of trying to distinguish between documented guarantees and actual behavior.

The documentation for MapViewOfFile is pretty clear:

A mapped view of a file is not guaranteed to be coherent with a file that is being accessed by the ReadFile or WriteFile function.

I have had my own run-ins with this behavior, and they were super confusing. This means that I had experimental evidence to say that this is broken. But it didn’t make sense: there was no code in LMDB to handle it, and this is pretty easy to trigger.

It turns out that while the documentation is pretty broad about not guaranteeing the behavior, the actual issue only occurs if you are working with remote files or using unbuffered I/O.

If you are working with local files and buffered I/O (which is 99.99% of the cases), then you can rely on this behavior. I found some vague references to this, but that wasn’t enough. There is this post that is really interesting, though.

I pinged Howard Chu, the author of LMDB, for clarification, and he was quick enough to assure me that yes, my understanding was (now) correct. On Windows, you can mix memory map operations with file I/O and get the right results.
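To make that concrete, here is a minimal sketch of the kind of experiment you can run yourself. It is not LMDB's code and it is written in Python rather than C; the function name `write_then_read_through_map` is made up for illustration. It maps a local temporary file, writes to it with ordinary buffered file I/O, and then reads the same bytes back through the mapping. On Windows, Python's `mmap` module is built on the file mapping APIs, so this should exercise the same mix of file writes and mapped reads discussed above. The assumption is a local file opened for regular buffered I/O.

```python
import mmap
import os
import tempfile

# Map one allocation unit; a valid mapping size on both Windows and POSIX.
SIZE = mmap.ALLOCATIONGRANULARITY


def write_then_read_through_map(payload: bytes, offset: int = 0):
    """Write `payload` with plain file I/O and observe it through a mapping.

    Returns (before, after): the bytes seen through the mapped view
    before and after the write. Hypothetical helper, for illustration only.
    """
    fd, path = tempfile.mkstemp()  # local file, ordinary buffered I/O
    try:
        os.ftruncate(fd, SIZE)  # the file needs a size before it can be mapped
        with mmap.mmap(fd, SIZE, access=mmap.ACCESS_READ) as view:
            end = offset + len(payload)
            before = view[offset:end]  # still zeroes at this point

            # The write goes through the file descriptor, not the mapping.
            os.lseek(fd, offset, os.SEEK_SET)
            os.write(fd, payload)

            after = view[offset:end]  # read back through the mapped view
        return before, after
    finally:
        os.close(fd)
        os.remove(path)


if __name__ == "__main__":
    before, after = write_then_read_through_map(b"hello")
    print(before, after)
```

If the view and the file are coherent, `after` matches the payload without any explicit flush or remap. A passing run on one machine is experimental evidence rather than a documented guarantee, which is exactly the distinction this post is about.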

The documentation appears to be a holdover from Windows 9x, with the NT line always being able to ensure coherency for local files. This is a guess about the history of documentation, to be honest. Not something that I can verify.

I had the wrong information in my head for over a decade. I did not expect this result when I started this post; I was sure I would be discussing navigating complex codebases. I’m going to stand in the corner and feel upset about this for a while now.