This post isn’t actually about a production issue—thankfully, we caught this one during testing. It’s part of a series of blog posts that are probably some of my favorite posts to write. Why? Because when I’m writing one, it means I’ve managed to pin down and solve a nasty problem.

This time, it’s a race condition in RavenDB that took mountains of effort, multiple engineers, and a lot of frustration to resolve.

For the last year or so, I’ve been focused on speeding up RavenDB’s core performance, particularly its IO handling. You might have seen my earlier posts about this effort. One key change we made was switching RavenDB’s IO operations to use IO Ring, a new API designed for high-performance, asynchronous IO, and other goodies. If you’re in the database world and care about squeezing every ounce of performance out of your system, this is the kind of thing that you want to use.

This wasn’t a small tweak. The pull request for this work exceeded 12,000 lines of code—over a hundred commits—and likely a lot more code when you count all the churn. Sadly, this is one of those changes where we can’t just split the work into digestible pieces. Even now, we still have some significant additional work remaining to do.

We had two or three of our best engineers dedicated to it, running benchmarks, tweaking, and testing over the past few months. The goal is simple: make RavenDB faster by any means necessary.

And we succeeded, by a lot (and yes, more on that in a separate post). But speed isn’t enough; it has to be correct too. That’s where things got messy.

Tests That Hang, Sometimes

We noticed that our test suite would occasionally hang with the new code. Big changes like this—ones that touch core system components and take months to implement—often break things. That’s expected, and it’s why we have tests. But these weren’t just failures; sometimes the tests would hang, crash, or exhibit other bizarre behavior. Intermittent issues are the worst. They scream “race condition,” and race conditions are notoriously hard to track down.

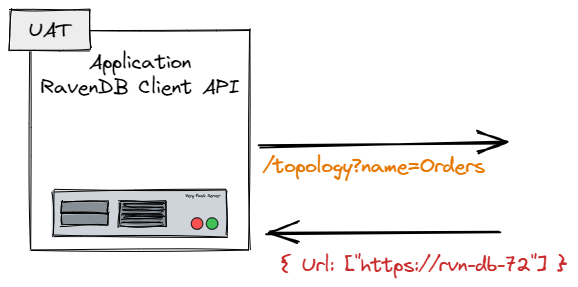

Here’s the setup. IO Ring isn’t available in managed code, so we had to write native C code to integrate it. RavenDB already has a Platform Abstraction Layer (PAL) to handle differences between Windows, Linux, and macOS, so we had a natural place to slot this in.

The IO Ring code had to be multithreaded and thread-safe. I’ve been writing system-level code for over 20 years, and I still get uneasy about writing new multithreaded C code. It’s a minefield. But the performance we could get… so we pushed forward… and now we had to see where that led us.

Of course, there was a race condition. The actual implementation was under 400 lines of C code—deliberately simple, stupidly obvious, and easy to review. The goal was to minimize complexity: handle queuing, dispatch data, and get out. I wanted something I could look at and say, “Yes, this is correct.” I absolutely thought that I had it covered.

We ran the test suite repeatedly. Sometimes it passed; sometimes it hung; rarely, it would crash.

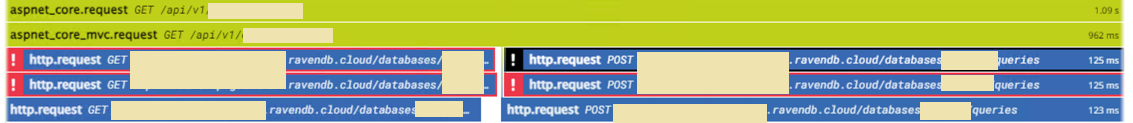

When we looked into it, we were usually stuck on submitting work to the IO Ring. Somehow, we ended up in a state where we pushed data in and never got called back. Here is what this looked like.

0:019> k

# Call Site

00 ntdll!ZwSubmitIoRing

01 KERNELBASE!ioring_impl::um_io_ring::Submit+0x73

02 KERNELBASE!SubmitIoRing+0x3b

03 librvnpal!do_ring_work+0x16c

04 KERNEL32!BaseThreadInitThunk+0x17

05 ntdll!RtlUserThreadStart+0x2cIn the previous code sample, we just get the work and mark it as done. Now, here is the other side, where we submit the work to the worker thread.

int32_t rvn_write_io_ring(void* handle, int32_t count,

int32_t* detailed_error_code)

{

int32_t rc = 0;

struct handle* handle_ptr = handle;

EnterCriticalSection(&handle_ptr->global_state->lock);

ResetEvent(handle_ptr->global_state->notify);

char* buf = handle_ptr->global_state->arena;

struct workitem* prev = NULL;

for (int32_t curIdx = 0; curIdx < count; curIdx++)

{

struct workitem* work = (struct workitem*)buf;

buf += sizeof(struct workitem);

*work = (struct workitem){

.prev = prev,

.notify = handle_ptr->global_state->notify,

};

prev = work;

queue_work(work);

}

SetEvent(IoRing.event);

bool all_done = false;

while (!all_done)

{

all_done = true;

WaitForSingleObject(handle_ptr->global_state->notify, INFINITE)

ResetEvent(handle_ptr->global_state->notify);

struct workitem* work = prev;

while (work)

{

all_done &= InterlockedCompareExchange(

&work->completed, 0, 0);

work = work->prev;

}

}

LeaveCriticalSection(&handle_ptr->global_state->lock);

return rc;

}We basically take each page we were asked to write and send it to the worker thread for processing, then we wait for the worker to mark all the requests as completed. Note that we play a nice game with the prev and next pointers. The next pointer is used by the worker thread while the prev pointer is used by the submitter thread.

You can also see that this is being protected by a critical section (a lock) and that there are clear hand-off segments. Either I own the memory, or I explicitly give it to the background thread and wait until the background thread tells me it is done. There is no place for memory corruption. And yet, we could clearly get it to fail.

Being able to have a small reproduction meant that we could start making changes and see whether it affected the outcome. With nothing else to look at, we checked this function:

void queue_work_origin(struct workitem* work)

{

work->next = IoRing.head;

while (true)

{

struct workitem* cur_head = InterlockedCompareExchangePointer(

&IoRing.head, work, work->next);

if (cur_head == work->next)

break;

work->next = cur_head;

}

}I have written similar code dozens of times, I very intentionally made the code simple so it would be obviously correct. But when I even slightly tweaked the queue_work function, the issue vanished. That wasn’t good enough, I needed to know what was going on.

Here is the “fixed” version of the queue_work function:

void queue_work_fixed(struct workitem* work)

{

while (1)

{

struct workitem* cur_head = IoRing.head;

work->next = cur_head;

if (InterlockedCompareExchangePointer(

&IoRing.head, work, cur_head) == cur_head)

break;

}

}This is functionally the same thing. Look at those two functions! There shouldn’t be a difference between them. I pulled up the assembly output for those functions and stared at it for a long while.

1 work$ = 8

2 queue_work_fixed PROC ; COMDAT

3 npad 2

4 $LL2@queue_work:

5 mov rax, QWORD PTR IoRing+32

6 mov QWORD PTR [rcx+8], rax

7 lock cmpxchg QWORD PTR IoRing+32, rcx

8 jne SHORT $LL2@queue_work

9 ret 0

10 queue_work_fixed ENDPA total of ten lines of assembly. Here is what is going on:

- Line 5 - we read the IoRing.head into register rax (representing cur_head).

- Line 6 - we write the rax register (representing cur_head) to work->next.

- Line 7 - we compare-exchange the value of IoRing.head with the value in rcx (work) using rax (cur_head) as the comparand.

- Line 8 - if we fail to update, we jump to line 5 again and re-try.

That is about as simple a code as you can get, and exactly expresses the intent in the C code. However, if I’m looking at the original version, we have:

1 work$ = 8

2 queue_work_origin PROC ; COMDAT

3 npad 2

4 $LL2@queue_work_origin:

5 mov rax, QWORD PTR IoRing+32

6 mov QWORD PTR [rcx+8], rax

; ↓↓↓↓↓↓↓↓↓↓↓↓↓

7 mov rax, QWORD PTR IoRing+32

; ↑↑↑↑↑↑↑↑↑↑↑↑↑

8 lock cmpxchg QWORD PTR IoRing+32, rcx

9 cmp rax, QWORD PTR [rcx+8]

10 jne SHORT $LL2@queue_work_origin

11 ret 0

12 queue_work_origin ENDPThis looks mostly the same, right? But notice that we have just a few more lines. In particular, lines 7, 9, and 10 are new. Because we are using a field, we cannot compare to cur_head directly like we previously did but need to read work->next again on lines 9 &10. That is fine.

What is not fine is line 7. Here we are reading IoRing.headagain, and work->next may point to another value. In other words, if I were to decompile this function, I would have:

void queue_work_origin_decompiled(struct workitem* work)

{

while (true)

{

work->next = IoRing.head;

// ↓↓↓↓↓↓↓↓↓↓↓↓↓

struct workitem* tmp = IoRing.head;

// ↑↑↑↑↑↑↑↑↑↑↑↑↑

struct workitem* cur_head = InterlockedCompareExchangePointer(

&IoRing.head, work, tmp);

if (cur_head == work->next)

break;

}

}Note the new tmp variable? Why is it reading this twice? It changes the entire meaning of what we are trying to do here.

You can look at the output directly in the Compiler Explorer.

This smells like a compiler bug. I also checked the assembly output of clang, and it doesn’t have this behavior.

I opened a feedback item with MSVC to confirm, but the evidence is compelling. Take a look at this slightly different version of the original. Instead of using a global variable in this function, I’m passing the pointer to it.

void queue_work_origin_pointer(

struct IoRingSetup* ring, struct workitem* work)

{

while (1)

{

struct workitem* cur_head = ring->head;

work->next = cur_head;

if (InterlockedCompareExchangePointer(

&ring->head, work, work->next) == work->next)

break;

}

}And here is the assembly output, without the additional load.

ring$ = 8

work$ = 16

queue_work_origin PROC ; COMDAT

prefetchw BYTE PTR [rcx+32]

npad 12

$LL2@queue_work:

mov rax, QWORD PTR [rcx+32]

mov QWORD PTR [rdx+8], rax

lock cmpxchg QWORD PTR [rcx+32], rdx

cmp rax, QWORD PTR [rdx+8]

jne SHORT $LL2@queue_work

ret 0

queue_work_origin ENDPThat unexpected load was breaking our thread-safety assumptions, and that led to a whole mess of trouble. Violated invariants are no joke.

The actual fix was pretty simple, as you can see. Finding it was a huge hurdle. The good news is that I got really familiar with this code, to the point that I got some good ideas on how to improve it further 🙂.