When we started building support for graph queries inside RavenDB, we looked at the state of the market in this regard. There seem to be two major options: Cypher and Gremlin. Gremlin is basically a fluent interface that represents a specific graph pattern, while Cypher is a more abstract way to represent the graph query. I don’t like Gremlin, and it doesn’t fit into the model we have for RQL, so we went for the Cypher syntax. Note the distinction between going for Cypher and going for the Cypher syntax.

One of our major requirements is fitting into the pre-existing Raven Query Language, but our first concern was just getting started and getting some idea about our actual scenarios. We are now at the point where we have written a bunch of graph queries and have a lot more experience in how they mesh into the overall environment. At this point, I can really feel that there is an issue in meshing the Cypher syntax into RQL. They don’t feel the same at all. There are a lot of good ideas there, make no mistake, but we want to create something that flows as a cohesive whole.

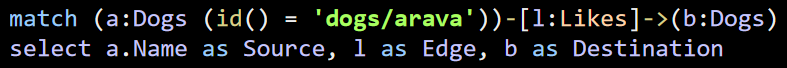

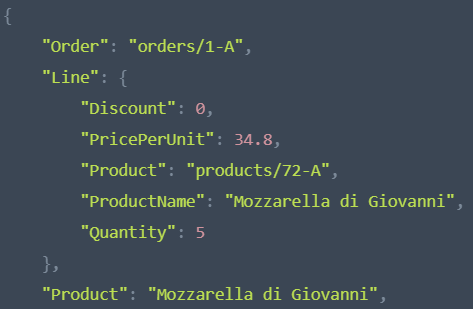

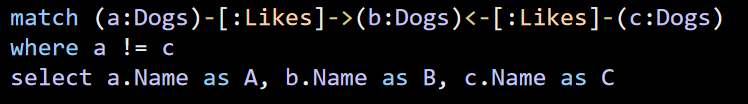

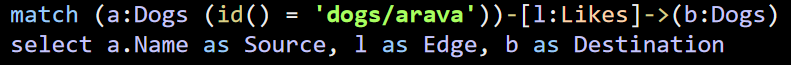

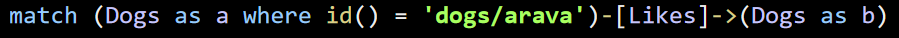

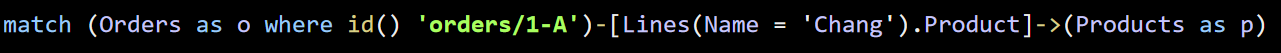

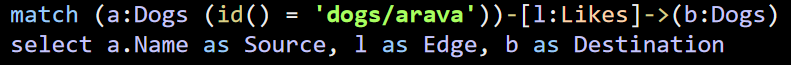

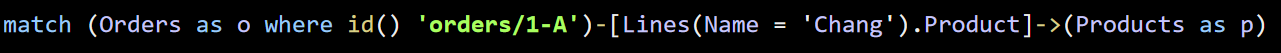

Let’s look at some of our queries and how we can better express them. The one I have talked about the most is this:

Let’s see what we have here:

- match is the overall clause that applies a graph pattern query to the dataset.

- () – indicates a node in the graph.

- [] – indicates an edge.

- a:Dogs, l:Likes and b:Dogs – each of these is an alias and a path specification.

- -[]-> – indicates an edge between two nodes.

- (expression) – is a filter on a node or an edge.

I’m ignoring the select statement here because it is just the usual RQL select statement.
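To make those pieces concrete, here is a minimal Python sketch that splits a Cypher-style pattern into its nodes and edges. It is an illustration only, not how RavenDB parses queries: the `parse_pattern` name and the regular expression are made up for this example, and it deliberately ignores inline filters.

```python
import re

# Hypothetical tokenizer for a Cypher-style pattern; not RavenDB's parser.
# (alias:Name) is a node, [alias:Name] is an edge, and the alias may be
# omitted, as in [:Likes]. Inline filters are not handled.
ELEMENT = re.compile(r"\((\w*):(\w+)\)|\[(\w*):(\w+)\]")


def parse_pattern(pattern):
    """Return a list of (kind, alias, name) tuples in pattern order."""
    elements = []
    for m in ELEMENT.finditer(pattern):
        if m.group(2) is not None:
            # The first alternative matched: a node in parentheses.
            elements.append(("node", m.group(1) or None, m.group(2)))
        else:
            # The second alternative matched: an edge in square brackets.
            elements.append(("edge", m.group(3) or None, m.group(4)))
    return elements


if __name__ == "__main__":
    for element in parse_pattern("(a:Dogs)-[l:Likes]->(b:Dogs)"):
        print(element)
```

An anonymous element such as `[:Likes]` comes back with `None` as its alias.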

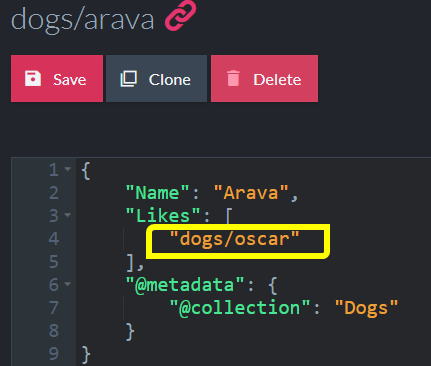

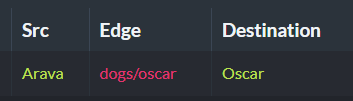

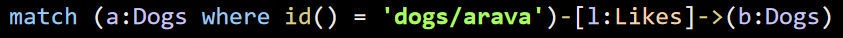

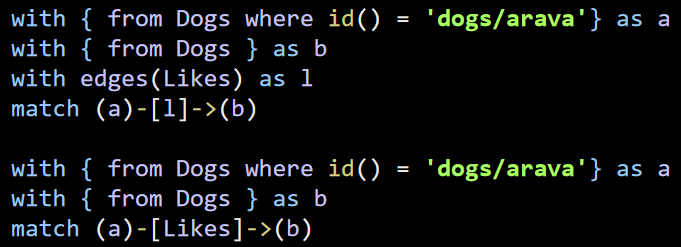

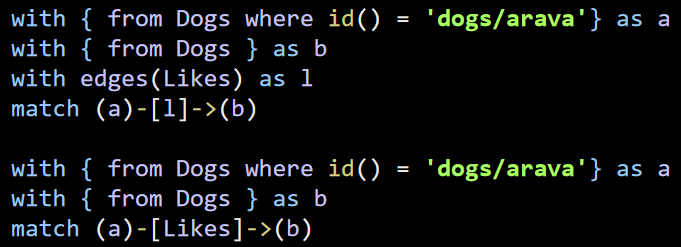

The first thing that keeps biting us is the filter in (a:Dogs (id() = 'dogs/arava')). I keep getting tripped up by missing the closing ), so that has got to go. Luckily, it is very obvious what to do here:

We use an explicit where clause, instead of the () to express the inline filter. This fits a lot more closely with how the rest of RQL works.

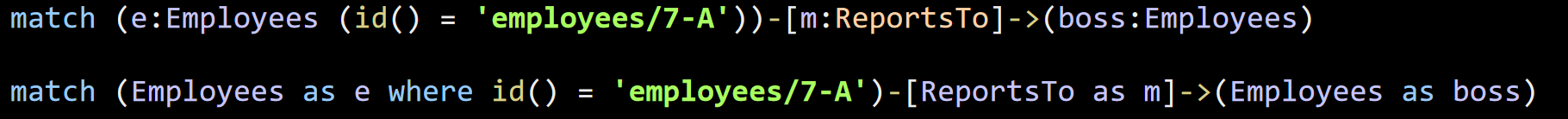

Now, let’s look at the aliases: (b:Dogs). The alias:Collection syntax is pretty foreign to RQL; we tend to use the Collection as alias syntax. Let’s see what that would look like, shall we?

This looks a lot more natural to me, and it is a good fit for RQL in general. This syntax does bring a few things to the table. In particular, look at the edge. In Cypher, an anonymous edge would be: [:Likes], and using this method, we will have just [Likes].
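The mapping between the two alias styles is mechanical, which a small Python sketch can show. The `to_rql_style` helper below is hypothetical and only handles simple elements without inline filters; it is meant to illustrate the rewrite, not to describe any actual RavenDB tooling.

```python
import re

# Hypothetical helper: rewrites Cypher-style "alias:Name" elements into the
# "Name as alias" form. Anonymous elements such as [:Likes] become [Likes].
# Only elements that fill a whole () or [] are touched.
ALIASED = re.compile(r"(?<=[(\[])(\w*):(\w+)(?=[)\]])")


def to_rql_style(pattern):
    def rewrite(m):
        alias, name = m.group(1), m.group(2)
        # Keep the alias only when one was actually given.
        return f"{name} as {alias}" if alias else name

    return ALIASED.sub(rewrite, pattern)


if __name__ == "__main__":
    print(to_rql_style("(a:Dogs)-[l:Likes]->(b:Dogs)"))
    print(to_rql_style("(a:Dogs)-[:Likes]->(b:Dogs)"))
```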

However, as nice as this syntax is, we still run into a problem. The query above is actually just a shorthand way to write the full query, which looks like so:

In fact, we have two queries here, to show off the actual problem we have in parsing. In the first case, we have a match clause that only refers to explicit with statements. In the second case, we have a couple of explicit with statements, but also an implicit with edges expression (the Likes).

From the point of view of the parser, we can’t distinguish those two. Now, we can absolutely say that if the edge expression contains a single name, we’ll simply look for an edge with that name and otherwise assume that this is the path that will be used.
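That fallback rule can be sketched in a few lines of Python. The function name and the data shapes here are assumptions made for illustration; this is not RavenDB's implementation, just the lookup order described above.

```python
# Hypothetical resolution step; names and data shapes are illustrative only.

def resolve_edge(name, explicit_edges):
    """Decide what a single name inside -[...]-> refers to.

    explicit_edges is the set of aliases declared by explicit
    `with edges` statements in the same query.
    """
    if name in explicit_edges:
        # The name matches an explicitly defined edge, so use that.
        return ("alias", name)
    # No explicit definition: assume the name is the path to follow.
    return ("implicit_path", name)


if __name__ == "__main__":
    declared = {"l"}
    print(resolve_edge("l", declared))
    print(resolve_edge("Likes", declared))
```

Nothing in this lookup ever fails: a name that matches no explicit alias is simply treated as a path.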

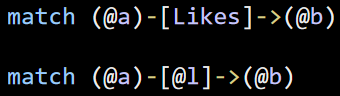

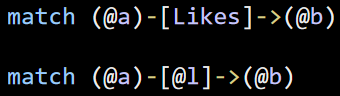

But this seems to be error-prone, because you might have a small typo or remove an edge statement and get a completely different (and unexpected) meaning. I thought about adding some sort of prefix to help tell an alias from an implicit definition, but that looks very ugly, see:

And on the other hand, I really like the -[Likes]-> syntax in general. It is a lot cleaner and easier to read.

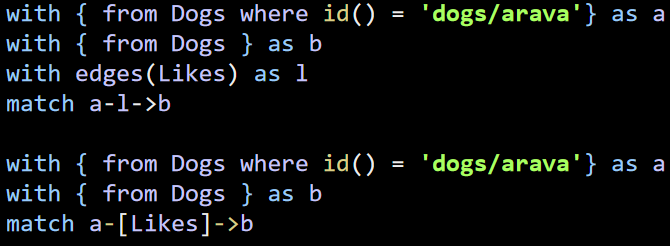

At this point, I don’t have a solution for this. I think we’ll go with the mode in which we can’t tell what the query is meant to say just from the parser, and look at the explicit with statements to figure it out (with the potential for mistakes that I pointed out earlier) until we can figure out something better.

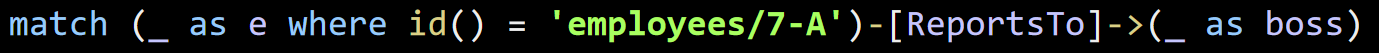

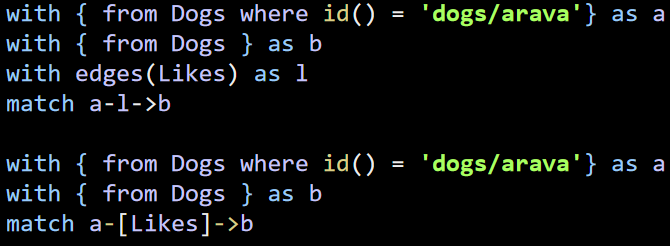

One thing that I’m thinking about is that the () and [], which help distinguish between nodes and edges, aren’t actually required for us if we have an explicit statement. So we can write it like so:

In this manner, we can tell, quite easily, whether you meant to define an implicit edge / node or to refer to an explicitly defined alias. I’m not sure whether this would be a good idea, though.

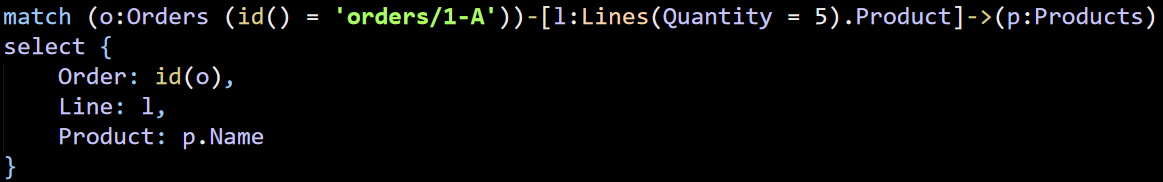

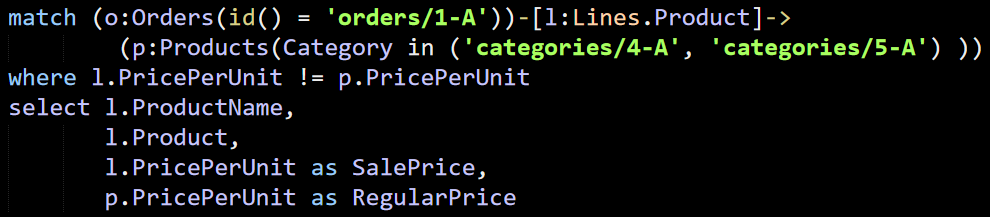

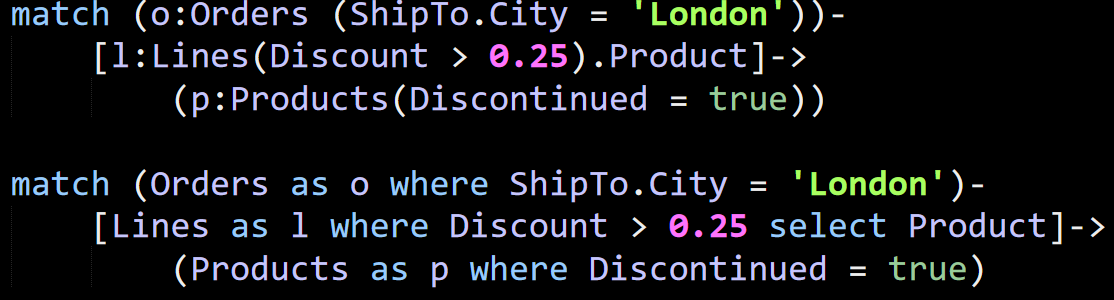

Another issue we have to deal with is:

Note that in this case, we have a filter expression on the edge as well. Applying the same process we have done so far, we get:

The advantage here is that it is very clear and obvious what is going on. The disadvantage is that this takes quite a bit longer to express.

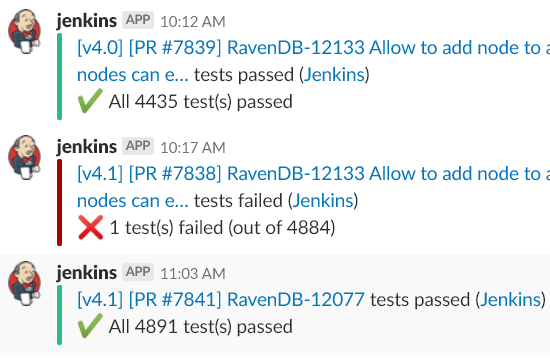

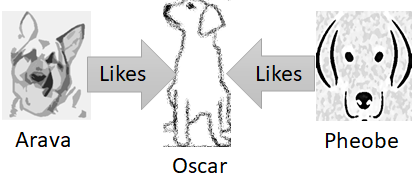

An interesting challenge with implementing graph queries is that you sometimes get into situations where the correct behavior is counterintuitive.
