We had the following (really nasty) bug in RavenDB’s managed storage (which is still considered unstable, btw):

When deleting documents from a database that contains more than two documents, and the documents are deleted in a certain order, RavenDB would go to 100% CPU. The server would still function, but it would always think that it had work to do, even when it didn’t have any.

To call this annoying is an understatement. To understand the bug, I have to explain a bit about how RavenDB uses Munin, the managed storage engine. Munin gives you the notion of a primary key (which can be any JSON tuple) and secondary indexes. As expected, the PK is unique, but secondary indexes can contain duplicate values.
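To make the PK / secondary index relationship concrete, here is a minimal sketch. `ToyTable` and everything in it is hypothetical and is not Munin's actual API; it only illustrates that a remove has to touch both structures.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical toy table, NOT Munin's real API: a unique primary key plus one
// secondary index that may hold several rows for the same indexed value.
public class ToyTable
{
    private readonly Dictionary<string, string> byPrimaryKey =
        new Dictionary<string, string>();
    private readonly SortedDictionary<string, HashSet<string>> byIndexedValue =
        new SortedDictionary<string, HashSet<string>>(StringComparer.Ordinal);

    public int Count => byPrimaryKey.Count;

    public void Put(string primaryKey, string indexedValue)
    {
        Remove(primaryKey); // the PK is unique, so a second Put replaces the first
        byPrimaryKey[primaryKey] = indexedValue;
        if (byIndexedValue.TryGetValue(indexedValue, out var keys) == false)
            byIndexedValue[indexedValue] = keys = new HashSet<string>();
        keys.Add(primaryKey); // duplicates of indexedValue are fine here
    }

    public bool Remove(string primaryKey)
    {
        if (byPrimaryKey.TryGetValue(primaryKey, out var indexedValue) == false)
            return false;
        byPrimaryKey.Remove(primaryKey);
        // The step that must not be skipped: clean the secondary index as well.
        var keys = byIndexedValue[indexedValue];
        keys.Remove(primaryKey);
        if (keys.Count == 0)
            byIndexedValue.Remove(indexedValue);
        return true;
    }

    // Walks the secondary index and yields the primary keys it still refers to.
    public IEnumerable<string> KeysInIndex() =>
        byIndexedValue.SelectMany(pair => pair.Value);
}
```

In this toy version, removing every row leaves `KeysInIndex()` empty. The bug behaved as if the secondary index cleanup was not taking effect, so the index kept reporting entries whose rows were already gone.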

The problem that we had was that for some reason, removing values from the table wouldn’t remove them from the secondary indexes. That drove me crazy. At first, I tried to debug the problem by running the following unit test:

    public class CanHandleDocumentRemoval : LocalClientTest
    {
        [Fact]
        public void CanHandleDocumentDeletion()
        {
            using(var store = NewDocumentStore())
            {
                using(var session = store.OpenSession())
                {
                    for (int i = 0; i < 3; i++)
                    {
                        session.Store(new User
                        {
                            Name = "ayende"
                        });
                    }
                    session.SaveChanges();
                }

                using (var session = store.OpenSession())
                {
                    var users = session.Query<User>("Raven/DocumentsByEntityName")
                        .Customize(x => x.WaitForNonStaleResults())
                        .ToArray();

                    Assert.NotEmpty(users);

                    foreach (var user in users)
                    {
                        session.Delete(user);
                    }
                    session.SaveChanges();
                }

                using (var session = store.OpenSession())
                {
                    var users = session.Query<User>("Raven/DocumentsByEntityName")
                        .Customize(x => x.WaitForNonStaleResults(TimeSpan.FromSeconds(5)))
                        .ToArray();

                    Assert.Empty(users);
                }
            }
        }
    }

But while this reproduced the problem, it was very hard to debug properly, mostly because it executes the entire RavenDB server, which means that I had to deal with such things as concurrency, multiple operations, etc.

After a while, it became clear that I wouldn’t be able to understand the root cause of the problem from that test, so I decided to take a different route. I started to add logging in the places where I thought the problem was, and then I turned that log into a test all of its own.
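The logging half of that can be quite small. What follows is a sketch under assumptions: `CommandLog` is a hypothetical helper (not part of RavenDB or Munin), and it uses `System.Text.Json` only to stay self-contained. The field names mirror the ones that show up in the log entries used by the test.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Hypothetical helper, not part of RavenDB or Munin: it records every storage
// operation as one JSON line so the sequence can be replayed later.
public class CommandLog
{
    private readonly List<string> lines = new List<string>();

    public IReadOnlyList<string> Lines => lines;

    public void Put(int tableId, Guid txId, object key) => Write("Put", tableId, txId, key);

    public void Del(int tableId, Guid txId, object key) => Write("Del", tableId, txId, key);

    public void Commit(int tableId, Guid txId) => Write("Commit", tableId, txId, null);

    private void Write(string cmd, int tableId, Guid txId, object key)
    {
        var entry = new Dictionary<string, object> { ["Cmd"] = cmd };
        if (key != null)
            entry["Key"] = key; // Commit has no key, so the field is left out
        entry["TableId"] = tableId;
        // Binary values are written as base64, so the replay side has to decode them.
        entry["TxId"] = Convert.ToBase64String(txId.ToByteArray());
        lines.Add(JsonSerializer.Serialize(entry));
    }
}
```

Each line is a self-contained record of one operation, which is what makes it practical to replay the sequence against the storage layer alone, as the test that follows does.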

    [Fact]
    public void CanProperlyHandleDeletingThreeItemsBothFromPK_And_SecondaryIndexes()
    {
        var cmds = new[]
        {
            @"{""Cmd"":""Put"",""Key"":{""index"":""Raven/DocumentsByEntityName"",""id"":""AAAAAAAAAAEAAAAAAAAABQ=="",""time"":""\/Date(1290420997504)\/"",
            ""type"":""Raven.Database.Tasks.RemoveFromIndexTask"",""mergable"":true},""TableId"":9,""TxId"":""NiAAMOT72EC/We7rnZS/Fw==""}",
            @"{""Cmd"":""Put"",""Key"":{""index"":""Raven/DocumentsByEntityName"",""id"":""AAAAAAAAAAEAAAAAAAAABg=="",""time"":""\/Date(1290420997509)\/"",
            ""type"":""Raven.Database.Tasks.RemoveFromIndexTask"",""mergable"":true},""TableId"":9,""TxId"":""NiAAMOT72EC/We7rnZS/Fw==""}",
            @"{""Cmd"":""Put"",""Key"":{""index"":""Raven/DocumentsByEntityName"",""id"":""AAAAAAAAAAEAAAAAAAAABw=="",""time"":""\/Date(1290420997509)\/"",
            ""type"":""Raven.Database.Tasks.RemoveFromIndexTask"",""mergable"":true},""TableId"":9,""TxId"":""NiAAMOT72EC/We7rnZS/Fw==""}",
            @"{""Cmd"":""Commit"",""TableId"":9,""TxId"":""NiAAMOT72EC/We7rnZS/Fw==""}",
            @"{""Cmd"":""Del"",""Key"":{""index"":""Raven/DocumentsByEntityName"",""id"":""AAAAAAAAAAEAAAAAAAAABg=="",""time"":""\/Date(1290420997509)\/"",
            ""type"":""Raven.Database.Tasks.RemoveFromIndexTask"",""mergable"":true},""TableId"":9,""TxId"":""wM3q3VA0XkWecl5WBr9Cfw==""}",
            @"{""Cmd"":""Del"",""Key"":{""index"":""Raven/DocumentsByEntityName"",""id"":""AAAAAAAAAAEAAAAAAAAABw=="",""time"":""\/Date(1290420997509)\/"",
            ""type"":""Raven.Database.Tasks.RemoveFromIndexTask"",""mergable"":true},""TableId"":9,""TxId"":""wM3q3VA0XkWecl5WBr9Cfw==""}",
            @"{""Cmd"":""Del"",""Key"":{""index"":""Raven/DocumentsByEntityName"",""id"":""AAAAAAAAAAEAAAAAAAAABQ=="",""time"":""\/Date(1290420997504)\/"",
            ""type"":""Raven.Database.Tasks.RemoveFromIndexTask"",""mergable"":true},""TableId"":9,""TxId"":""wM3q3VA0XkWecl5WBr9Cfw==""}",
            @"{""Cmd"":""Commit"",""TableId"":9,""TxId"":""wM3q3VA0XkWecl5WBr9Cfw==""}",
        };

        var tableStorage = new TableStorage(new MemoryPersistentSource());

        foreach (var cmdText in cmds)
        {
            var command = JObject.Parse(cmdText);
            var tblId = command.Value<int>("TableId");
            var table = tableStorage.Tables[tblId];
            var txId = new Guid(Convert.FromBase64String(command.Value<string>("TxId")));

            var key = command["Key"] as JObject;
            if (key != null)
            {
                foreach (var property in key.Properties())
                {
                    if(property.Value.Type != JTokenType.String)
                        continue;
                    var value = property.Value.Value<string>();
                    if (value.EndsWith("==") == false)
                        continue;
                    key[property.Name] = Convert.FromBase64String(value);
                }
            }

            switch (command.Value<string>("Cmd"))
            {
                case "Put":
                    table.Put(command["Key"], new byte[] {1, 2, 3}, txId);
                    break;
                case "Del":
                    table.Remove(command["Key"], txId);
                    break;
                case "Commit":
                    table.CompleteCommit(txId);
                    break;
            }
        }

        Assert.Empty(tableStorage.Tasks);
        Assert.Null(tableStorage.Tasks["ByIndexAndTime"].LastOrDefault());
    }

The cmds variable that you see here was generated from the logs. What I did was generate the whole log, verify that replaying it reproduced the bug, and then start trimming the commands until I had the minimal set that still reproduced it.
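I did the trimming by hand, but the same idea can be automated. The following is a rough sketch of a greedy minimizer, not what I actually ran: `LogTrimmer` and the `stillFails` callback are hypothetical names, and the callback is assumed to replay the candidate commands against fresh storage and return true only when the original failure shows up again.

```csharp
using System;
using System.Collections.Generic;

public static class LogTrimmer
{
    // Repeatedly try dropping one command and keep the drop only if the
    // failure still reproduces. Stops when no single command can be removed.
    public static List<string> Minimize(
        IEnumerable<string> commands,
        Func<IReadOnlyList<string>, bool> stillFails)
    {
        var current = new List<string>(commands);
        var removedSomething = true;
        while (removedSomething)
        {
            removedSomething = false;
            // Walk backwards so that removing an item does not shift the
            // indexes we have yet to visit.
            for (var i = current.Count - 1; i >= 0; i--)
            {
                var candidate = new List<string>(current);
                candidate.RemoveAt(i);
                if (stillFails(candidate) == false)
                    continue; // this command is needed to trigger the bug
                current = candidate;
                removedSomething = true;
            }
        }
        return current;
    }
}
```

One caveat with this kind of trimming: dropping a command can produce a sequence that fails for an unrelated reason (a Del without its Put, for example), so the callback should check for the specific symptom rather than for any failure at all.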

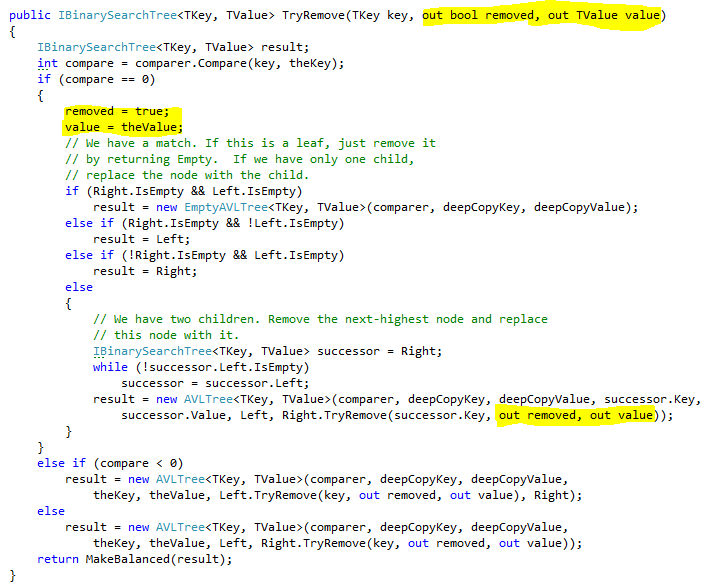

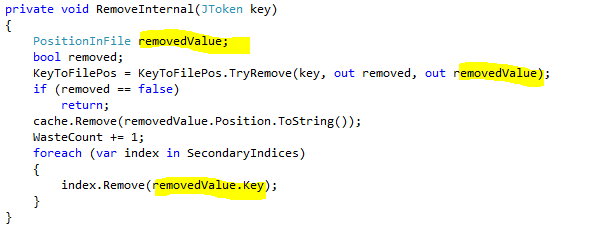

Using this approach, I was able to narrow the actual issue down to a small set of API calls, which I was then able to go through in detail, and finally figure out what the bug was.

This post isn’t about the bug (I’ll cover that in the next post), but about the idea of going from “there is a bug and I don’t know how to reproduce it in steps small enough to understand” to “here are the exact things that fail”. A more sophisticated approach would be to dump stack traces and parameters and execute those, but for my scenario, it was easy to just construct things from the log.