If we could code for the happy path only, I think that our lives would be much nicer. Errors are hard because you have to keep dealing with them, and even basic mistakes in error handling can take down systems that are composed of thousands of nodes.

I went looking for research on how much code is devoted to error handling, and I found this paper. It says that about 3% of code (C#, mind) is error handling. However, it counts only the code inside catch / finally blocks as error handling. My recent foray into C allows me another data point. The short version, with no memory handling, is 30 lines of code; the long version, with error handling, is over 100.

If I had to guess, I would say that error handling is at least 10 – 15 %, and I would be surprised by 25 – 30%. In C# and similar languages, a centralized error handling strategy can help a lot in this regard, I think.

Anyway, let’s explore a few options for error handling:

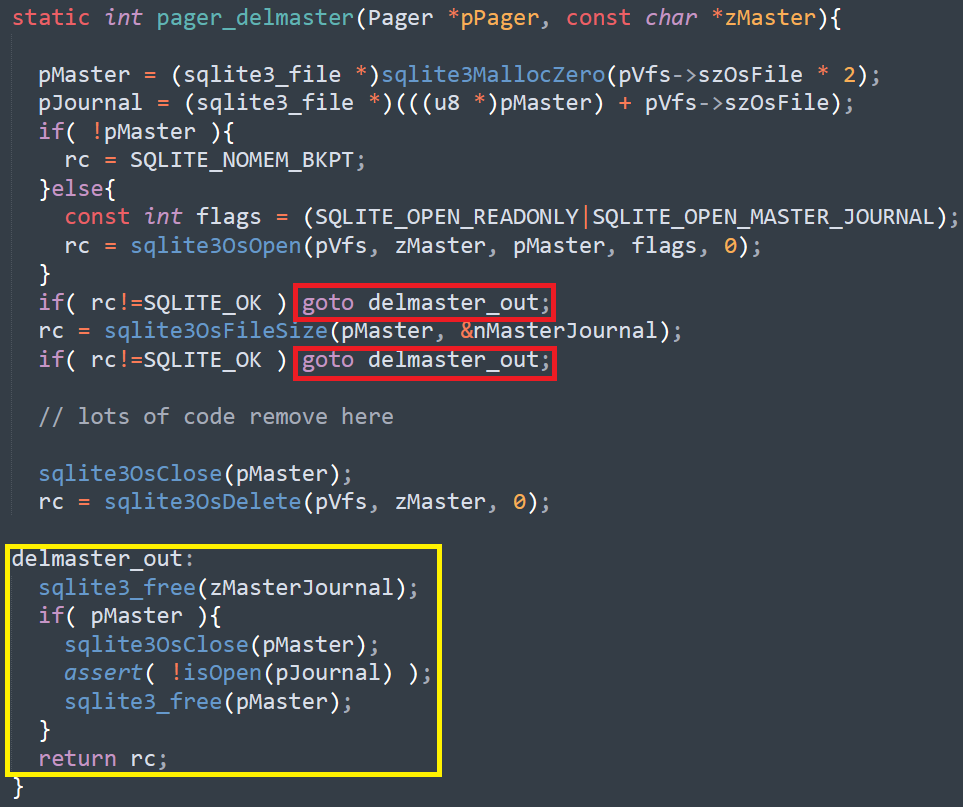

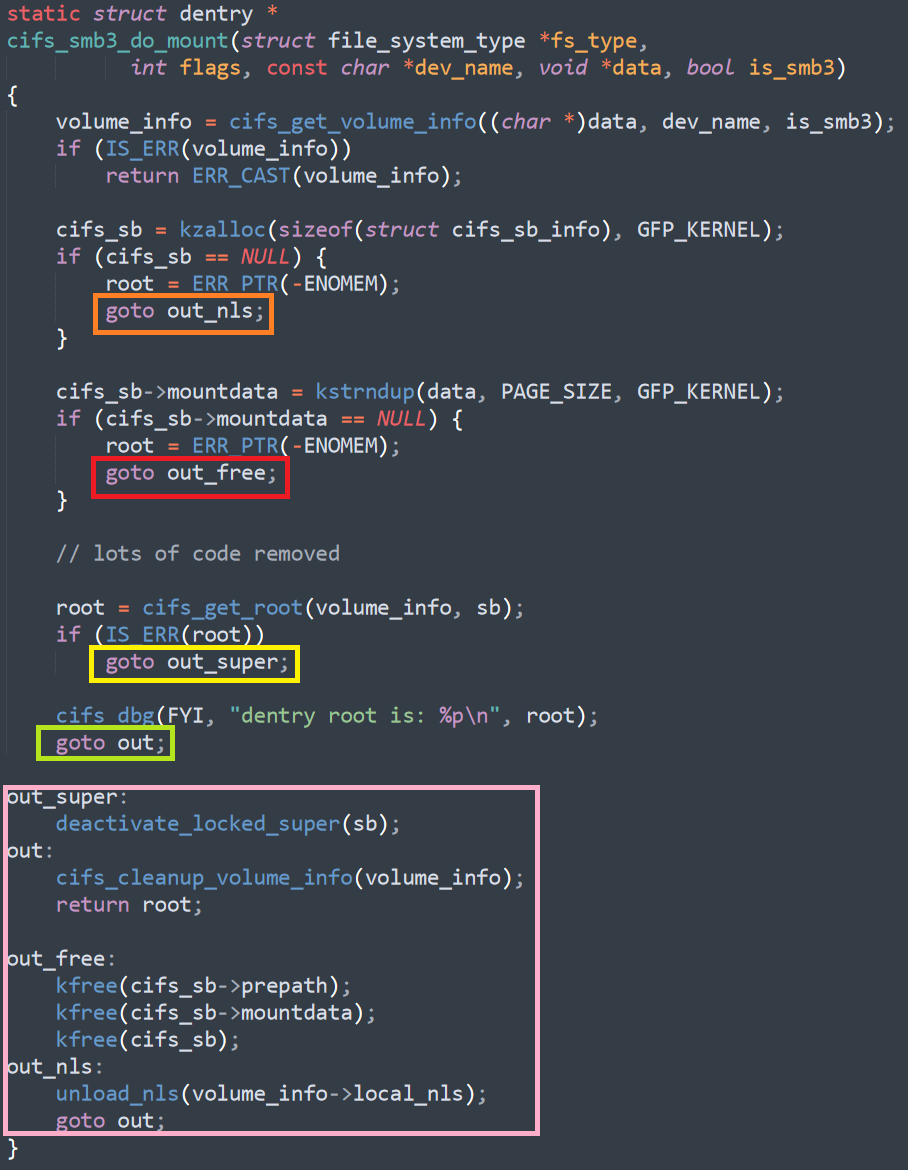

The C way – return codes. This sucks. I think that this is universally known to suck. In particular, there is no rhyme or reason to return codes. Sometimes you need to check for INVALID_HANDLE_VALUE, sometimes for a value that is different from zero. Sometimes the return code is the error code; at other times you need to call a separate function to get it. It also forces you into a very localized error handling mode. Every error has to be handled at every call site, which easily leads either to a single forgotten return code causing issues down the line (forgetting to check the fsync() return code caused data corruption in Postgres, for example) or to really bad code where you lose sight of what is actually going on, because there is so much error handling that the real functionality goes into hiding.

The return code model also doesn’t compose very well when a complex operation fails midway. It doesn’t provide contextual information or allow you to get stack traces easily. Each of these is important if you want to have a good error handling strategy (and a good debugging / troubleshooting experience).

So the C way of doing things is out. What are we left with? We have a few options:

- Go with multiple return values

- Rust with Option<T>, Result<T, E>

- Node.js with callbacks

- C# / Java with exceptions

Let’s talk about the Go approach for a bit. I think that this is widely loathed for being very similar to the C method and for causing a lot of code repetition. On the other hand, at least we don’t have GetLastError() / errno to deal with. And one advantage of Go in this regard is that the defer statement allows you to handle state much more cleanly (you can just return and any deferred cleanup will run). This means that the code may be repetitive to write, but it is much easier to review.
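To make the defer point concrete, here is a minimal sketch. The `resource` type, `open`, and `process` are hypothetical stand-ins for a real file or socket, invented only for this illustration. The early return in `process` does not need to know which resources are open; the deferred `Close` calls run anyway, in reverse order.

```go
package main

import (
	"errors"
	"fmt"
)

// resource is a hypothetical stand-in for a file, socket, or lock.
// It records what happens to it in a shared event list.
type resource struct {
	name   string
	events *[]string
}

// Close records that the resource was released.
func (r *resource) Close() { *r.events = append(*r.events, "closed "+r.name) }

// open records that the resource was acquired and returns it.
func open(name string, events *[]string) *resource {
	*events = append(*events, "opened "+name)
	return &resource{name: name, events: events}
}

var errInvalid = errors.New("invalid input")

// process returns early on failure. The deferred Close calls still run,
// last-in first-out, so no exit path can leak a resource.
func process(fail bool, events *[]string) error {
	in := open("input", events)
	defer in.Close()

	out := open("output", events)
	defer out.Close()

	if fail {
		return fmt.Errorf("processing input: %w", errInvalid)
	}
	*events = append(*events, "done")
	return nil
}

func main() {
	var events []string
	err := process(true, &events)
	fmt.Println(err)    // processing input: invalid input
	fmt.Println(events) // [opened input opened output closed output closed input]
}
```

A reviewer only has to check that each acquisition is followed by its defer, not that every return statement cleans up correctly.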

The problem with this approach is that it is hard to compose errors. Imagine a method that needs to read a string from the network, parse a number from the string and then update a value in a file. Without error handling, this looks like so:
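As a rough sketch of the idea (not the listing from the original post), here is the network-and-parse half of such a method in Go, first with every error discarded and then with every failure checked. The `fetcher` type, `fromString`, and `unreachable` are hypothetical stand-ins for a real HTTP call, so that the example runs without a network.

```go
package main

import (
	"errors"
	"fmt"
	"io"
	"strconv"
	"strings"
)

// fetcher is a hypothetical stand-in for "make a request to the server".
// A real version might wrap http.Get and return the response body.
type fetcher func() (io.ReadCloser, error)

// fromString builds a fetcher that "responds" with a fixed body.
func fromString(s string) fetcher {
	return func() (io.ReadCloser, error) { return io.NopCloser(strings.NewReader(s)), nil }
}

var errDown = errors.New("connection refused")

// unreachable simulates a server that cannot be contacted.
func unreachable() (io.ReadCloser, error) { return nil, errDown }

// readNumberUnchecked is the happy path only: every error is thrown away.
// Bad input silently becomes 0, and a failed request would panic on Close.
func readNumberUnchecked(fetch fetcher) int {
	body, _ := fetch()
	defer body.Close()
	raw, _ := io.ReadAll(body)
	n, _ := strconv.Atoi(strings.TrimSpace(string(raw)))
	return n
}

// readNumber is the same logic with each failure checked and given context.
func readNumber(fetch fetcher) (int, error) {
	body, err := fetch()
	if err != nil {
		return 0, fmt.Errorf("making request: %w", err)
	}
	defer body.Close()

	raw, err := io.ReadAll(body)
	if err != nil {
		return 0, fmt.Errorf("reading response: %w", err)
	}

	text := strings.TrimSpace(string(raw))
	n, err := strconv.Atoi(text)
	if err != nil {
		return 0, fmt.Errorf("parsing %q as a number: %w", text, err)
	}
	return n, nil
}

func main() {
	fmt.Println(readNumberUnchecked(fromString("forty-two"))) // 0, and no hint why
	fmt.Println(readNumber(fromString("42\n")))
	fmt.Println(readNumber(fromString("forty-two")))
	fmt.Println(readNumber(unreachable))
}
```

Three operations already need three checks, and this sketch still leaves out the file half of the method entirely.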

I haven’t even written the file handling path, mostly because it got too tiring. In this case, there are so many things that can go wrong. The code above handles failure to make the request, failure to read the value from the server, failure to parse the string, etc. With a file, you need to handle failure to open the file, read its content, parse it, do something with the value from the server and the file value, and then serialize the value back to bytes to be written to the file. About every other word in the previous sentence requires some form of error handling. And the problem is that when we have a complex system, we don’t just need to handle errors, we need to compose them so that they make sense.

An EPERM error from somewhere is pretty useless, so having the file name is a huge help in figuring out what the problem was. But knowing that the error happened because we tried to save the data to the on-disk cache gives me the proper context for the error. The problem with errors is that they can happen very deep in the code path, while the policy for handling such errors belongs much higher in the stack.
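The three levels of context described above (bare cause, file name, what the code was trying to do) can be sketched in Go with error wrapping. This is an illustration under assumptions: `writeFile` and `saveToCache` are hypothetical layers, the cache path is made up, and the permission failure is simulated rather than triggered on a real file.

```go
package main

import (
	"errors"
	"fmt"
	"io/fs"
)

// writeFile is a hypothetical low-level layer. It always fails, in the same
// shape os.WriteFile uses for a permissions problem: the bare cause wrapped
// with the operation and the file name.
func writeFile(path string, data []byte) error {
	return &fs.PathError{Op: "open", Path: path, Err: fs.ErrPermission}
}

// saveToCache is a hypothetical higher layer. It adds what the code was
// trying to do, which the low-level layer cannot know.
func saveToCache(key string, data []byte) error {
	path := "/var/cache/app/" + key // made-up location for this sketch
	if err := writeFile(path, data); err != nil {
		return fmt.Errorf("saving %q to the on-disk cache: %w", key, err)
	}
	return nil
}

// isPermission reports whether a permission failure is anywhere in the chain.
func isPermission(err error) bool { return errors.Is(err, fs.ErrPermission) }

// failedPath extracts the file name from the chain, or "" if there is none.
func failedPath(err error) string {
	var pathErr *fs.PathError
	if errors.As(err, &pathErr) {
		return pathErr.Path
	}
	return ""
}

func main() {
	err := saveToCache("users", []byte("payload"))
	fmt.Println(err)
	// saving "users" to the on-disk cache: open /var/cache/app/users: permission denied
	fmt.Println(isPermission(err), failedPath(err))
}
```

The policy code at the top of the stack can still ask "was this a permission problem, and on which file?" without parsing the message, but every layer in between has to remember to wrap.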

Rust’s approach to errors is cleaner than Go’s. You don’t have multiple return values; instead, the result is wrapped in a Result / Option value that you need to handle explicitly. Rust also contains some syntax sugar to make this pretty easy to write.

However, Rust error handling just plain sucks when you try to actually compose errors. Imagine the case where I want to do several operations, some of which may fail. I need to report success if all of them passed, but an error if any of them failed. For a bit more complexity, we need to provide good context for the error, so the error isn’t something as simple as “int parse failure” but has enough detail to know that it was an int parse failure on the sixth line of a particular file that belongs to a certain operation.

The reason I say that Rust sucks for this is that for consuming errors, things are pretty simple. But for producing them? The suggestion to library authors is to implement your own Error type. That means that you need to implement the Display trait manually, and you need to write a separate From implementation for each error that you want to compose up. If your code suddenly needs to handle a new error type, you deal with that by writing a lot of boilerplate code. Any change in the error enum requires touching multiple places in the code, violating SRP. You can use Box<dyn Error>, it seems, but in this case, you have just “an error occurred” and it is complex to get back the real error and act on it.

A major complication with all of the return-a-value options is the fact that they usually don’t provide you with a stack trace. I think that having a stack trace in the error is extremely helpful for actually analyzing a problem and being able to tell what happened.

Callbacks, as was done with Node.js, are pretty horrible. On the one hand, it is much easier to provide the context, because you are called from the error site and can check your current state. However, there is only so much that you can do in such a case, and state management is a pain. Callbacks have proven to be pretty hard to program with, and the industry as a whole is moving to the async/await model instead. This gives you a sequential-like mechanism and a much better way to reason about the actions of the system.

Finally, we have exceptions. There are actually several different models for exceptions. You have Java with checked exceptions, with the associated baggage there (cannot change the interface, requires explicit handling, etc). There is the Pony language, which has “exceptions”. That is a really strange choice of implementation. Pony has exceptions for flow control, but it doesn’t give you any context about the actual error, just that one happened. The proper way of handling errors in Pony is to return a union of the result and possible errors (similar to how Rust does it, although the syntax looks nicer and there is less work).

I’m going to talk about C#’s exceptions. Java’s exceptions, except for some of them being checked, are pretty much the same.

Exceptions have the nice property that they are easily composable: it is easy to decide to handle some errors and to pass others up the chain. Generic error handling is also easy. Exceptions are problematic because they break the flow of the code. An exception in one location can be handled somewhere completely different, and there is no way for you to see that when looking at the code. In fact, I’m not even aware of any IDE / tooling that can provide you with this insight.

In languages with exceptions, you can also have exceptions at pretty much any location, which means that you need to write exception-safe code to make sure that an exception doesn’t leave your code in an inconsistent state. There is also a decidedly non-trivial cost to exceptions. To start with, many optimizations are inhibited by try blocks, and throwing exceptions is often very expensive. Part of that is the fact that we need to capture the oh-so-valuable stack trace, of course.

There is also another aspect of error handling to consider. There are many cases where you don’t care about errors, such as any time that you have generic framework code that calls into user code. An HTTP handler is a good example of that. You call the user’s code to handle the request, and you don’t care about errors. You simply catch the error and return a 500 / message to the client. Any error handling strategy must handle both scenarios: “I really care about every single detail and have a separate error handling code path for everything” and “I just want to know if there is an error and print it, nothing else”.

In theory, I really love the Rust error handling mechanism, but the complexity of composability and generic handling means that it is a lot less convenient to actually consume and produce errors. Exceptions are great in terms of composability and the amount of detail they provide, but they also break the flow of the code and introduce separate, invisible code paths that are hard to reason about in many cases. On the other hand, exceptions allow you to bubble errors upward natively and easily, until you get to a location that can apply a particular error handling policy.

Here is a good example from a recent issue we had to deal with. When running on a shared drive, a file delete isn’t going to be processed immediately. There is a gap of time in which the delete command seems to have succeeded, but attempting to re-create the file will fail with EEXIST (and trying to open the file will give you ENOENT, so that’s fun). In this case, we throw the error up the stack. In our use case, we have this situation only when dealing with temporary files, and given that they are temporary, we can detect this scenario and use another file name to avoid the issue. So we catch a FileNotFoundException and retry with a different file name. This goes through four or five layers of code and was pretty simple to figure out and implement.

Doing that with error codes is hard, and adding another member to the Error type will likely have cascading implications for the rest of the code. On the other hand, throwing a new exception type from a method can also break the contract, explicitly in languages like Java and implicitly in languages like C#. In fact, with C#, for example, the implied assumption is always: “Can throw the following exceptions for known error cases, and other exceptions for unexpected ones”. This is similar to checked exceptions vs. runtime exceptions in Java. But in this case, this is the implicit default and it gives you more freedom overall when writing your code. Checked exceptions sound great, but they have proven to be a problem for developers in practice.

Oh well, I guess I won’t be able to solve the error handling problem perfectly in a single blog post.

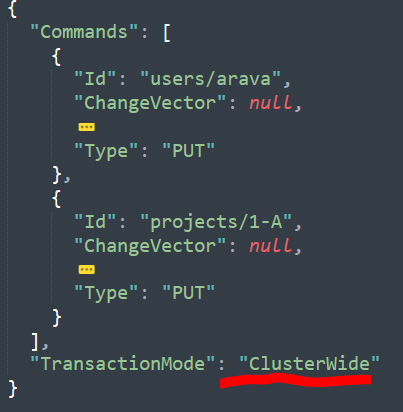

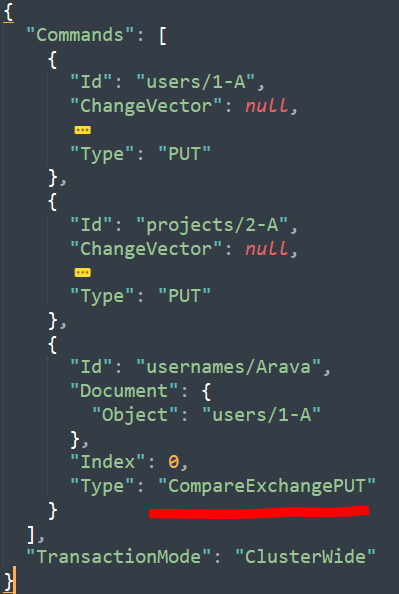

A fun feature we have back in RavenDB is the ability to run RavenDB as part of your own application. Zero deployment, setup or hassle.

A fun feature we have back in RavenDB is the ability to run RavenDB as part of your own application. Zero deployment, setup or hassle.