We are pretty much done with RavenDB 3.0. We are waiting for fixes to the internal apps we use to process orders and support customers, and then we can actually make a release. In the meantime, we need to start looking beyond the 3.0 release. We have had a few people internally focused on post-3.0 work for the past few months, and we have a rough outline of what we have done there. Primarily, we are talking about better distribution and storage models.

Storage models – the polyglot database

Under this umbrella we put dedicated database engines that support specific needs. The primary areas we are focused on are distributed counters (high scale out, rapid throughput), time series and an event store. For example, the counters work is pretty much complete, but we haven’t had the time to make it into a fully mature product.

I have talked about this several times in the past, so I won’t go into too many details here.

Distribution models

We have been working on a Raft implementation for the past few months, and it is now at the stage where we are starting to integrate it into the rest of our software. Raft is planned to be the core replication protocol for the time series and events databases. But you are probably going to see it first as a topology super layer for RavenDB and RavenFS.

Distributed topology management

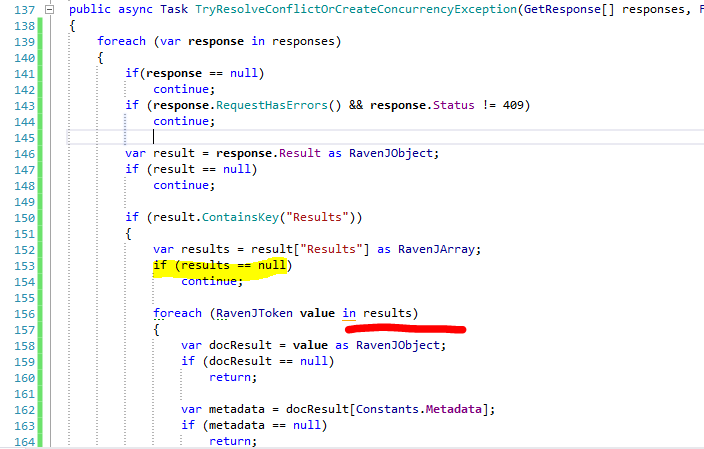

Replication support in RavenDB and RavenFS follows the multi master model. You can write to any node, and your write will be distributed by the server to all the other nodes. This has several advantages. In particular, we can operate in a disconnected or partially disconnected manner, and we need little coordination between clients to get everything working. It also has the disadvantage of allowing conflicts. In fact, if you are writing to multiple replicating nodes and aren’t careful about how you split the writes, you are pretty much guaranteed to have conflicts. We have repeatedly heard that this is both a good thing and something that customers really don’t want to deal with.

It is a good thing because we don’t have data loss. It is a bad thing because, if you aren’t ready to handle conflicts, some of your data is inaccessible until the conflict is resolved.
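
To make the conflict scenario concrete, here is a minimal sketch in Python. It assumes a simple version vector scheme as a stand-in for conflict detection; that is an illustration only, not how RavenDB actually tracks document history, and the `Node`, `Versioned` and `replicate` names are made up for this example.

```python
from dataclasses import dataclass, field


@dataclass
class Versioned:
    value: str
    # Assumption: a version vector, node name -> number of writes seen from it.
    vector: dict = field(default_factory=dict)


def descends(a: dict, b: dict) -> bool:
    """True if vector a has seen every write that vector b has seen."""
    return all(a.get(node, 0) >= count for node, count in b.items())


class Node:
    def __init__(self, name):
        self.name = name
        self.docs = {}       # doc id -> Versioned
        self.conflicts = {}  # doc id -> list of competing versions

    def write(self, doc_id, value):
        current = self.docs.get(doc_id)
        vector = dict(current.vector) if current else {}
        vector[self.name] = vector.get(self.name, 0) + 1
        self.docs[doc_id] = Versioned(value, vector)

    def receive(self, doc_id, incoming):
        local = self.docs.get(doc_id)
        if local is None or descends(incoming.vector, local.vector):
            self.docs[doc_id] = incoming  # incoming is newer (or equal): accept
        elif descends(local.vector, incoming.vector):
            pass                          # local copy is newer: ignore
        else:
            # Concurrent writes: keep both versions. Nothing is lost, but the
            # document stays in conflict until someone resolves it.
            self.conflicts[doc_id] = [local, incoming]


def replicate(source, target, doc_id):
    target.receive(doc_id, source.docs[doc_id])
```

Writing the same document on two nodes before they replicate to each other lands it in `conflicts` on the receiving node, with both versions kept. That is the "no data loss, but inaccessible until resolved" trade-off described above.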

Because of that, we are considering implementing a server side topology management system. The actual replication mechanics are going to remain the same. What changes is how we decide which node the writes go to.

A cluster (in this case, a set of RavenDB servers and all the databases and file systems on them) is composed of cooperating nodes. The cluster is managed via Raft, which is used to store the topology information of the cluster. The topology includes each of the nodes in the system, as well as all of the databases and file systems on the cluster. The cluster will select a leader, and that leader will also be the primary node for writes for all databases. In other words, assume we have a 3 node cluster and 5 databases in the cluster. All the databases are replicated to all three nodes, and a single node is going to serve as the write primary for all operations.
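
As a rough sketch of what that topology information might look like, here is the 3 node, 5 database example in Python. The `ClusterTopology` type and its field names are assumptions made for illustration; the actual format stored in Raft is not described in this post.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClusterTopology:
    version: int      # assumed: bumped whenever the topology changes
    nodes: tuple      # every node in the cluster
    databases: tuple  # every database replicated across the cluster
    leader: str       # the Raft leader, which is also the write primary

    def write_primary(self, database):
        # A single node serves as write primary for all databases.
        if database not in self.databases:
            raise KeyError(database)
        return self.leader

    def failover_targets(self):
        # Nodes a client could switch to if the leader goes down.
        return [node for node in self.nodes if node != self.leader]


topology = ClusterTopology(
    version=1,
    nodes=("A", "B", "C"),
    databases=("db1", "db2", "db3", "db4", "db5"),
    leader="A",
)
```

Every call to `topology.write_primary(...)` returns the same node here, no matter which of the five databases is asked about.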

During normal operations, clients will query any server for the replication topology (and cache that) every 5 minutes or so. If a node is down, we’ll switch over to an alternative node. If the leader is down, we’ll query all the other nodes to try to find out who the new leader is, then continue using that leader’s topology from now on. This gives us the advantage that a down server causes clients to switch over and stay switched. That avoids an operational hazard when you bring a down node back up again.
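
The client behavior could be sketched roughly as follows. This is a toy model under stated assumptions: `FakeCluster` is an in-memory stand-in where the leader is set by hand (the real cluster would elect it through Raft), and the `Client` API is hypothetical, not the RavenDB client.

```python
import time


class Unreachable(Exception):
    """Raised when a node does not answer."""


class FakeCluster:
    """In-memory stand-in for the servers (assumption, for illustration)."""

    def __init__(self, nodes, leader):
        self.nodes = list(nodes)
        self.leader = leader
        self.down = set()

    def ask_leader(self, node):
        if node in self.down:
            raise Unreachable(node)
        return self.leader

    def send_write(self, node, doc):
        if node in self.down:
            raise Unreachable(node)
        return node  # report which node took the write


class Client:
    REFRESH_SECONDS = 5 * 60  # "every 5 minutes or so"

    def __init__(self, cluster, clock=time.monotonic):
        self.cluster = cluster
        self.clock = clock
        self.leader = None
        self.fetched_at = None

    def refresh(self):
        # Ask every node we know about until one of them answers.
        for node in self.cluster.nodes:
            try:
                self.leader = self.cluster.ask_leader(node)
                self.fetched_at = self.clock()
                return
            except Unreachable:
                continue
        raise Unreachable("no node answered")

    def write(self, doc):
        stale = (self.fetched_at is None
                 or self.clock() - self.fetched_at > self.REFRESH_SECONDS)
        if stale:
            self.refresh()
        try:
            return self.cluster.send_write(self.leader, doc)
        except Unreachable:
            # The leader is down: find the new one and stay with it.
            self.refresh()
            return self.cluster.send_write(self.leader, doc)
```

Note that the client keeps the new leader cached after a failover. When the old leader comes back up, nothing in the client sends writes back to it.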

Clients will include the topology version they have in all communication with the server. If the topology version doesn’t match, the server will return an error, and the client will query all the nodes it knows about to find the current topology version. It will always choose the latest topology version, and continue from there.
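
Here is a minimal sketch of that version check, again in Python. The `Server` and `Client` classes, the integer version and the `TopologyMismatch` error are all assumptions for the sake of the example, since the wire format isn’t settled.

```python
class TopologyMismatch(Exception):
    """The client's topology version differs from the server's."""


class Server:
    def __init__(self, name, version, leader):
        self.name = name
        self.version = version  # topology version this server knows about
        self.leader = leader

    def topology(self):
        return self.version, self.leader

    def handle(self, client_version, request):
        if client_version != self.version:
            raise TopologyMismatch(self.name)
        return f"{self.name} handled {request}"


class Client:
    def __init__(self, servers):
        self.servers = {server.name: server for server in servers}
        self.version = None
        self.leader = None
        self.refresh()

    def refresh(self):
        # Query all known nodes and always choose the latest version.
        self.version, self.leader = max(
            (server.topology() for server in self.servers.values()),
            key=lambda topology: topology[0],
        )

    def send(self, request):
        try:
            return self.servers[self.leader].handle(self.version, request)
        except TopologyMismatch:
            self.refresh()
            return self.servers[self.leader].handle(self.version, request)
```

A node that lags behind with an older topology doesn’t confuse the client, because the highest version among all the answers wins.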

Note that there is still a chance for conflicts. A leader may become disconnected from the network without being aware of it, and keep accepting writes to the database. Meanwhile, another node will take over as the cluster leader and clients will start writing to it. There is a gap where a conflict can occur, but it is a pretty small one, and we have good mechanisms to deal with conflicts anyway.

We are also thinking about exposing a system similar to the topology for clients directly. Basically, a small distributed and consistent key/value store. Mostly meant for configuration.

Thoughts?