In 2009, I decided to make a pretty big move. I decided to take RavenDB and turn that from a side project into a real product. That was something that I actually had a lot of trouble with. Unlike the profiler suite, which is a developer tool, and has a relatively short time to purchase, building a database was something that I knew was going to be a lot more complex in terms of just getting sales.

Unlike a developer tool, which is a pretty low risk investment, a database is something that is pretty significant, and that means that it would take time to settle into the market, and even if a user starts developing with RavenDB, it is usually 3 – 6 months minimum just to get to the part where they order the license. Add that to the cost of brining a new product to market, and…

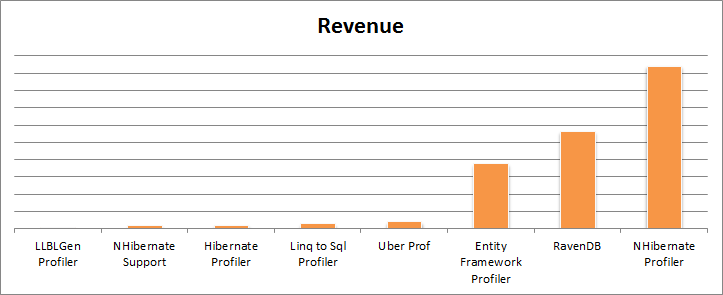

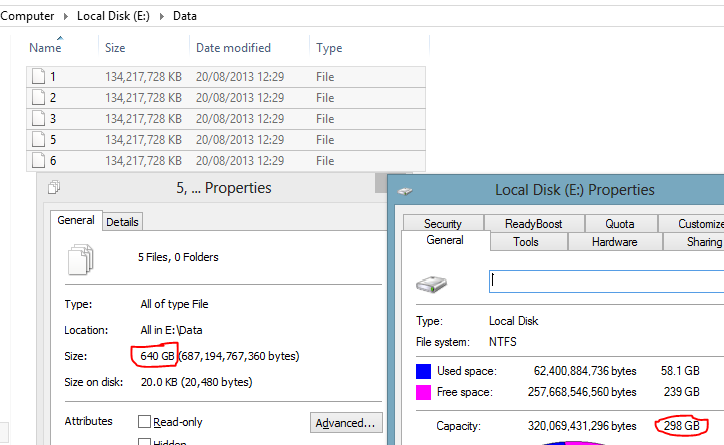

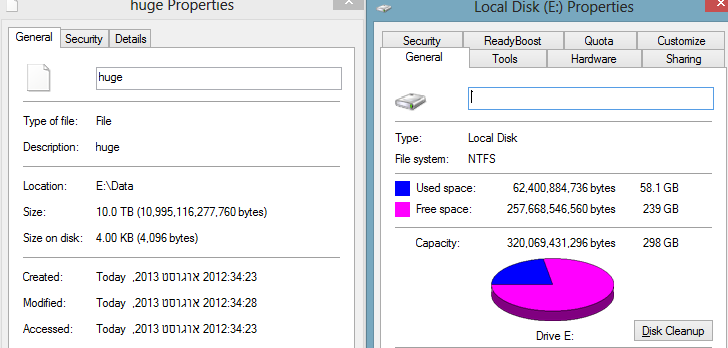

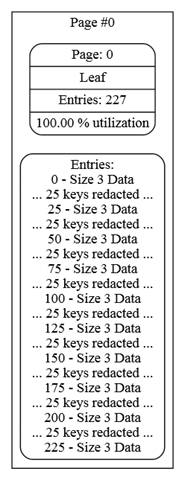

Anyway, it wasn’t an easy decision. Today I was looking at some reports when I noticed something interesting. The following is the breakdown of our product based revenue since the first sale of NHibernate Profiler. Note that there is no doubt that NH Prof is a really good product for us. But it is actually pretty awesome that RavenDB is at second place.

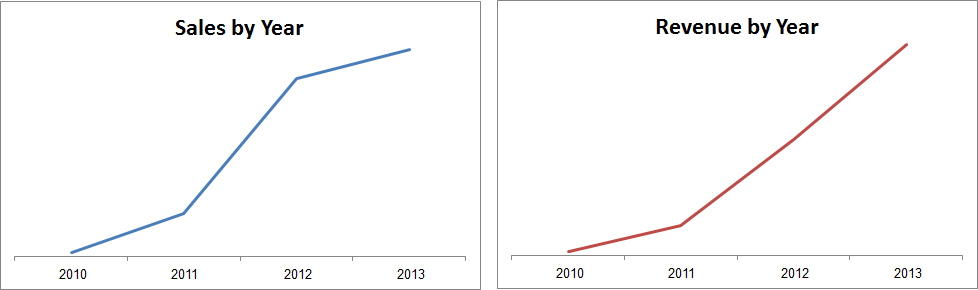

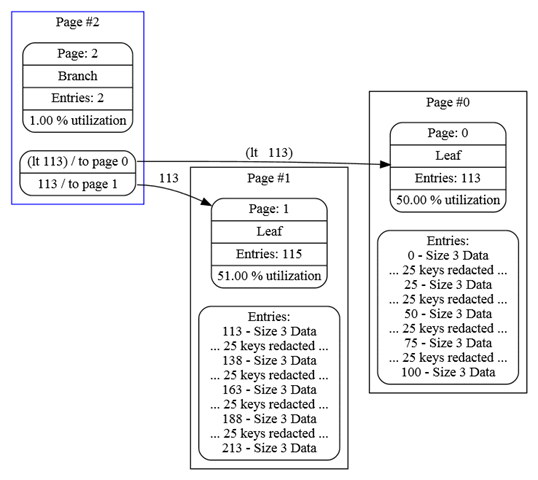

This is especially significant in that the profilers has several years of lead time in the market over RavenDB. In fact, running the numbers, until 2011, we sold precious few licenses of RavenDB. In fact, here are the sales numbers for the past few years:

Obviously, the numbers for 2013 are still not complete, but we have already more than surpassed 2012, and we still have a full quarter to go.

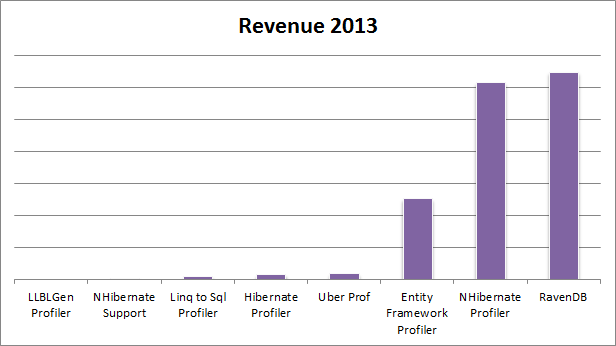

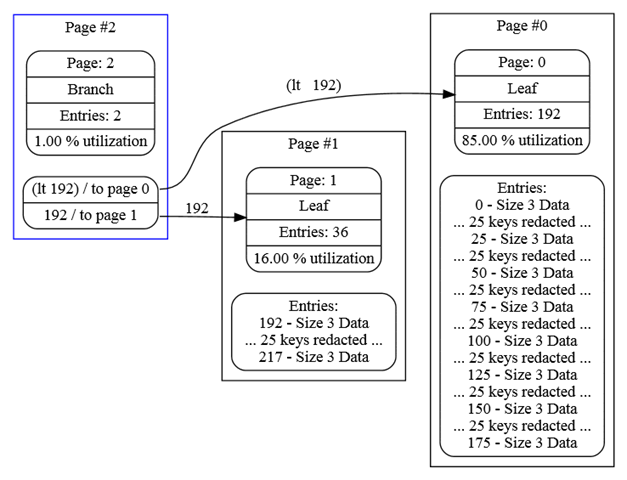

For that matter, looking at the number just for 2013, we see:

So NH Prof is still a very major product, but RavenDB is now our top performing product for 2013, which makes me a whole lot better.

Of course, it also means that we probably need to get rid of a few other products, in particular, LLBLGen, Linq to Sql and Hibernate profilers don’t look like they are worth the trouble to keep them going. But that is a matter for another time.