PageTable is a pretty critical piece of Voron. It is the component responsible for remapping modified pages in transactions and is the reason why we support MVCC and can avoid taking locks for the most part. It has been an incredibly stable part of our software, rarely changing and pretty much the same as it was when it was initially written in 2013. It has been the subject for multiple performance reviews in that time, but acceptable levels of performance from our code in 2013 is no longer acceptable today. PageTable came up recently in one of our performance reviews as a problematic component. It was responsible for too much CPU and far too many allocations.

Here is a drastically simplified implementation, which retain the salient points:

Here is the sample workout for this class, which just simulates ten thousand transactions. This little scenario takes 15.3 seconds and allocates a total of 1.1GB of memory! That is a lot of allocations, and must have tremendous amount of time spent in GC. The most problematic issue here is the SetItems methods, which will allocate two different delegates for each modified page in the transaction. Then we have the total abandon in which we’ll allocate additional memory in there. As you can imagine, we weren’t very happy about this, so we set out to fix this issue.

We can take advantage off the fact that SetItems and RemoveBefore are only called under lock, while TryGetValue is called concurrently with everything else.

So I wrote the following code:

This relies on allowing stale reads from concurrent readers, which we don’t care about since they wouldn’t be able to make use of the data anyway, and it was able to reduce the allocations to just 320 MB, but the runtime actually went up to 32 seconds.

That is quite annoying, as you can imagine, and much cursing enthused as a result. I then pulled my trusty profiler ask it kindly to figure out what piece of code needs to be hit with a rolling pin and have a stern talk to about what is expected from code after it has been laboriously and carefully optimized. It is expected to sit nicely and be fast, or by Git I’ll revert you.

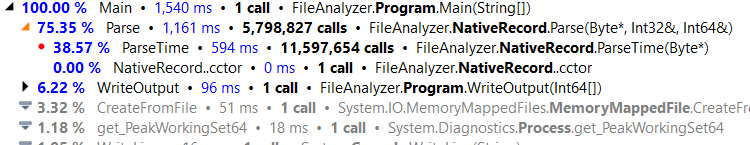

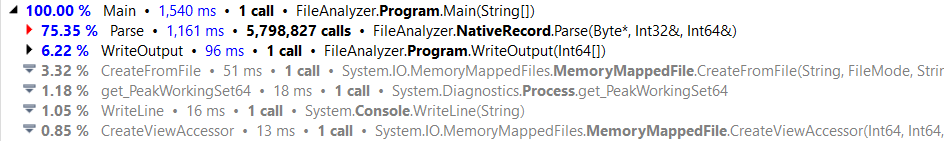

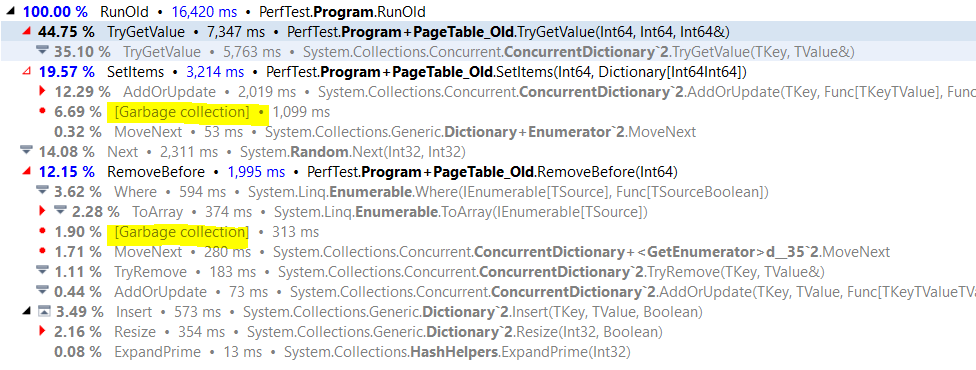

What the hell?! Here are the original implementation costs, and you can clearly see how much time we are spending on garbage collection.

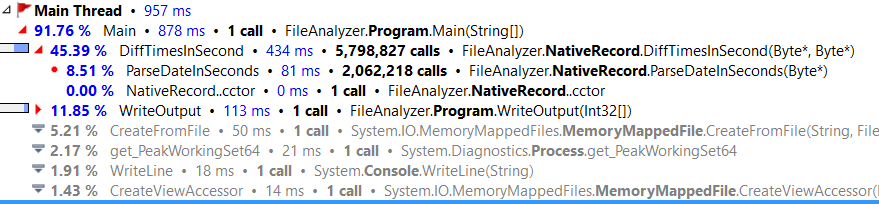

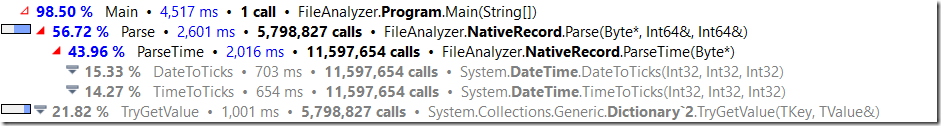

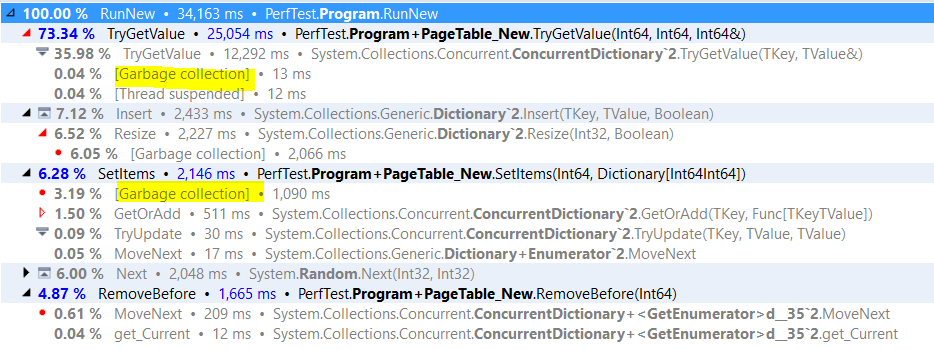

And here is the optimized version, which is actually slower, and actually used more memory?!

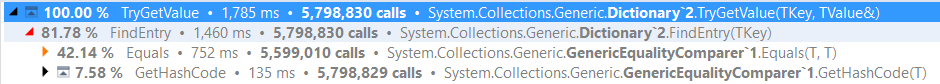

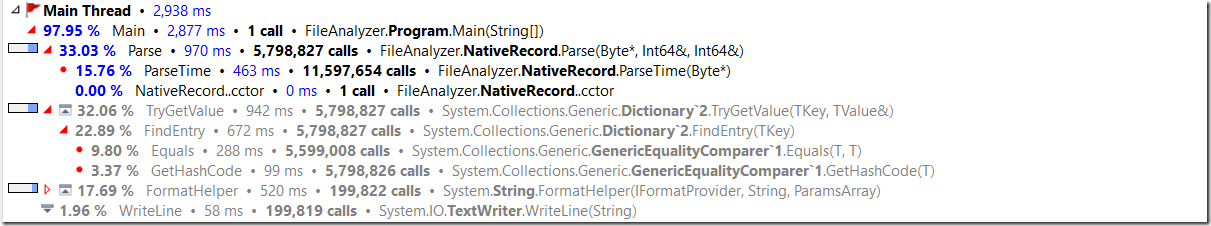

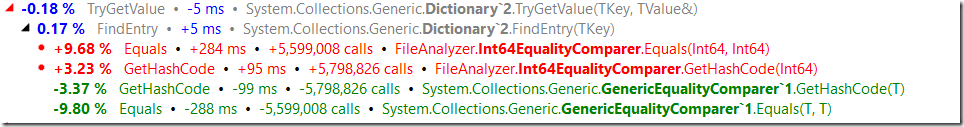

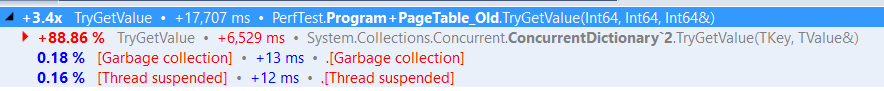

There are a bunch of interesting things going on here. We can see that we are indeed using spending a little less time in GC, and that both RemoveBefore and SetItems methods are much cheaper, but the cost of TryGetValue is so much higher, in fact, if we compare the two, we have:

So we are 3.4 times higher, and somehow, the cost of calling the concurrent dictionary TryGetValue has risen by 88%.

But the implementation is pretty much the same, and there isn’t anything else that looks like it can cause that much of a performance gap.

I’ll leave this riddle for now, because it drove me crazy for two whole days and give you the details on what is going on in the next post.

My Talk at InfoQ Brazil has been published.

My Talk at InfoQ Brazil has been published.