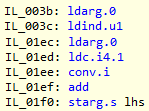

Note, this post was written by Federico. In the previous post, after inspecting the decompiled source using ILSpy, we were able to uncover several potential improvements.

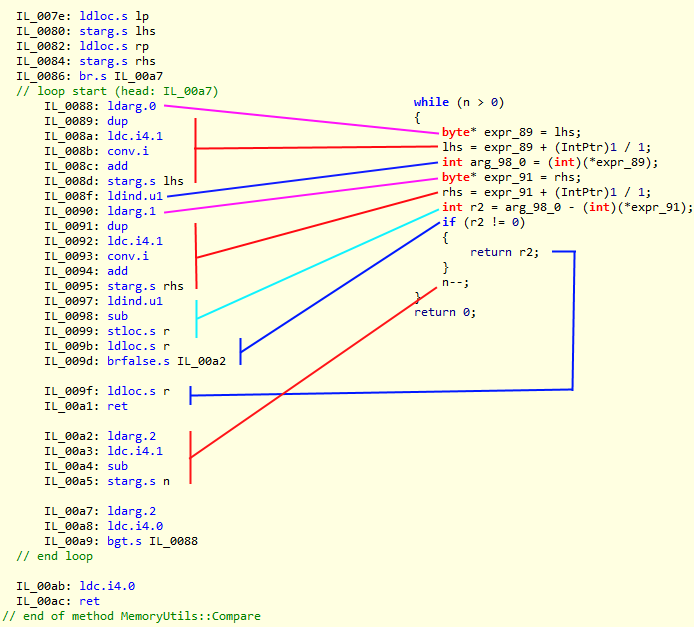

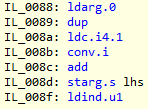

By now we have already squeezed out almost all the obvious inefficiencies that we uncovered through static analysis of the decompiled code, so we need another strategy. For that, we need to analyze the runtime behavior in the average case. We did something like that in this post, when we worked through an example using a 16-byte compare with equal arrays.

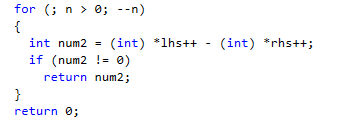

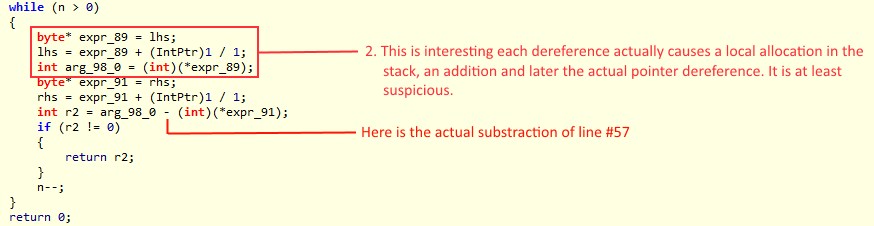

To do that analysis live, we need to know the size of the typical memory block while running the test under a line-by-line profiler. We built a modified version of the code that stored the size of each memory chunk being compared, and then built a histogram from that data (that’s why exact replicability matters). For our workload, the histogram showed a couple of clusters in the length of the memory to be compared. The first cluster was near 0 bytes, but not exactly 0. The other cluster was centered around 12 bytes, which makes sense, as the keys of the documents were around 11 bytes. This gave us a very interesting insight. Armed with that knowledge, we made our first statistics-based optimization.
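The instrumentation itself can be very small. Below is a minimal sketch of the idea, not the code we actually used: `CompareSizeHistogram`, `Record`, `CountFor`, `Reset` and `Print` are hypothetical names, and the sketch assumes a single-threaded test run.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper for an instrumented build. Not thread-safe,
// which is acceptable only for a single-threaded, repeatable test run.
public static class CompareSizeHistogram
{
    // Sorted by size so the clusters are easy to spot when printed.
    private static readonly SortedDictionary<int, long> Counts =
        new SortedDictionary<int, long>();

    // Call this at the top of the compare routine with the requested size.
    public static void Record(int size)
    {
        Counts.TryGetValue(size, out long current); // current stays 0 if the key is missing
        Counts[size] = current + 1;
    }

    // Number of compare calls seen for a given size.
    public static long CountFor(int size)
    {
        return Counts.TryGetValue(size, out long count) ? count : 0;
    }

    public static void Reset()
    {
        Counts.Clear();
    }

    // Dumps one line per observed size, with a bar capped at 60 characters.
    public static void Print()
    {
        foreach (KeyValuePair<int, long> entry in Counts)
        {
            string bar = new string('#', (int)Math.Min(entry.Value, 60L));
            Console.WriteLine("{0,4} bytes: {1,8} {2}", entry.Key, entry.Value, bar);
        }
    }
}
```

Calling `Print()` at the end of the run gives a rough picture of where the sizes cluster. Because the recording changes the timings, the numbers are only useful for the size distribution, not for the performance measurements themselves.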

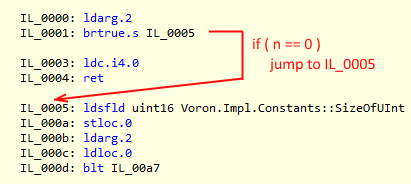

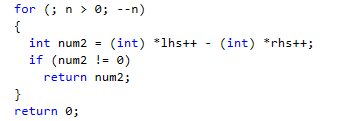

You can notice the if statement at the start of the method, which is a pretty standard boundary condition: if the memory blocks are empty, they are equal. In a normal environment such a check is so cheap that nobody would bother with it, but in our case, where we are measuring the runtime in nanoseconds, 3 extra instructions and a potential branch miss do count.

That code looks like this:

That means that not only are we making the check, we are also forcing a short jump every single time it happens. But our histogram also tells us that memory blocks of size 0 almost never happen. So we are paying 3 instructions and a branch for something that almost never happens. But we also knew that there was a cluster near 0 that we could exploit. The problem is that we would be paying 3 cycles (1 nanosecond in our idealized processor) per branch. As our average is 97.5 nanoseconds, we get a 1% improvement in almost every call (except the statistically unlikely case) if we are able to get rid of it.
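As a quick sanity check on that figure, taking the 3-cycle branch cost and the 97.5 ns average at face value, and assuming the 3 cycles per nanosecond implied by our idealized processor:

$$
t_{\text{branch}} = \frac{3\ \text{cycles}}{3\ \text{cycles/ns}} = 1\ \text{ns},
\qquad
\frac{t_{\text{branch}}}{t_{\text{avg}}} = \frac{1\ \text{ns}}{97.5\ \text{ns}} \approx 1.03\%
$$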

Resistance is futile, that branch needs to go.

In C, assembler, and almost any low-level architecture such as GPUs, there are 2 common approaches to optimizing this kind of scenario.

- The ostrich method. (Hide your head in the sand and pray it just works.)

- Use a lookup table.

The first is simple: if you don’t check and the algorithm can deal with the condition in the body, zero instructions always beats more than zero instructions (this is a corner case anyway, so no damage is done). This approach is usually used in massively parallel computing, where the cost of instructions is negligible while memory access is not. But it has its uses in more traditional superscalar and branch-predicting architectures (you just don’t have as much instruction budget to burn).
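To make the ostrich method concrete, here is a minimal sketch under stated assumptions: `OstrichCompare` is a hypothetical, simplified byte-by-byte compare over managed arrays, not our actual routine. The point is only that the loop condition already covers the empty case, so no dedicated size check is needed.

```csharp
using System;

// Hypothetical, simplified compare used only to illustrate the idea.
public static class OstrichCompare
{
    // Returns a negative, zero or positive value, like memcmp.
    // Note there is no "if (size == 0) return 0;" guard up front.
    public static int Compare(byte[] left, byte[] right, int size)
    {
        // When size is 0 the loop body never runs and we fall through
        // to "return 0", so empty blocks still compare as equal.
        for (int i = 0; i < size; i++)
        {
            int diff = left[i] - right[i];
            if (diff != 0)
                return diff;
        }
        return 0;
    }
}
```

The empty case still costs the loop's own condition check, but the common non-empty case no longer pays for a separate test and jump.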

The second is more involved. You need to be able to somehow “index” the input, and pay fewer instructions for that than the actual branches would cost (at a minimum 1 nanosecond each, aka 3 instructions on our idealized processor). Then you create a branch table and jump to the appropriate index, which itself jumps to the proper code block, using just 2 instructions.

Note: Branch tables are very well explained at http://en.wikipedia.org/wiki/Branch_table. If you made it this far you should read it; don’t worry, I will wait.

The key takeaway: if your algorithm has a sequence of 0..n, you are in the best of worlds, because you already have your index. Which we did.

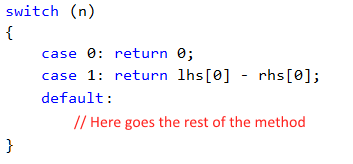

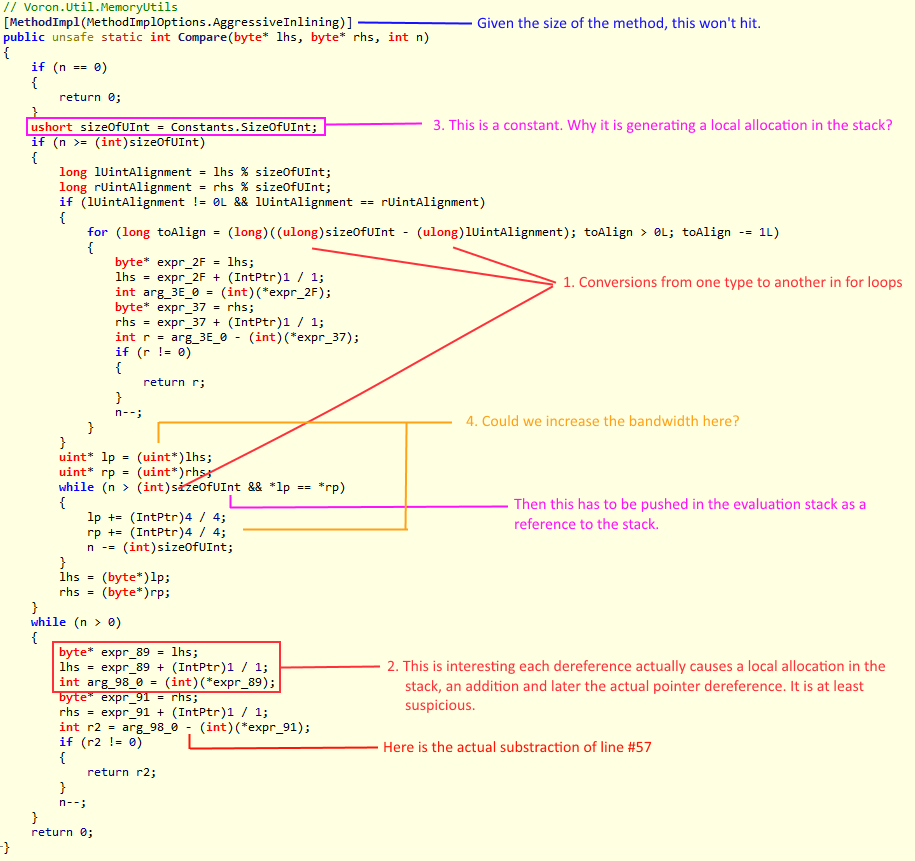

I know what you are thinking: Will the C# JIT compiler be smart enough to convert such a pattern into a branch table?

The short answer is yes, if we give it a bit of help. The if-then-elseif pattern won’t cut it, but what about switch-case?

The compiler will create a switch opcode, in short our branch table, if our values are small and contiguous.
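As an illustration of the pattern, here is a minimal sketch, not our production code: `SwitchCompare` and its managed-array signature are assumptions made for the example. The cases are small and contiguous (0 to 3), which is the shape the compiler can turn into a `switch` opcode. Whether it actually does so, and how the JIT lowers it, depends on the compiler and runtime versions, so check the generated IL before relying on it.

```csharp
using System;

// Hypothetical example of dispatching on small, contiguous sizes.
public static class SwitchCompare
{
    // Returns a negative, zero or positive value, like memcmp.
    public static int Compare(byte[] left, byte[] right, int size)
    {
        switch (size)
        {
            case 0:
                return 0; // empty blocks are equal
            case 1:
                return left[0] - right[0];
            case 2:
            {
                int d = left[0] - right[0];
                return d != 0 ? d : left[1] - right[1];
            }
            case 3:
            {
                int d = left[0] - right[0];
                if (d != 0) return d;
                d = left[1] - right[1];
                return d != 0 ? d : left[2] - right[2];
            }
            default:
                return CompareLoop(left, right, size);
        }
    }

    // Generic fallback for every size not handled by a dedicated case.
    private static int CompareLoop(byte[] left, byte[] right, int size)
    {
        for (int i = 0; i < size; i++)
        {
            int diff = left[i] - right[i];
            if (diff != 0)
                return diff;
        }
        return 0;
    }
}
```

The equivalent if-then-elseif chain would test the sizes one after another, while a jump table reaches any of the small cases in the same number of steps.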

So that is what we did. The impact? Big, but we are just getting started. Here is what this looks like in our code:

I’ll talk about the details of branch tables in C# more in the next post, but I didn’t want to leave you hanging too much.