Product recommendations are a Big Thing. The underlying assumption is that there are patterns in the sales of products, so we can detect which products usually go together and recommend them. That gives us a very nice way to give users accurate recommendations about products that they might want to purchase.

Here is a great example of what this may look like, from Amazon:

As an aside, I’m really happy to see my book grouped with the Release It! and Writing High Performance .Net Core books.

An interesting question is whether we can get this kind of behavior in RavenDB. If we were using SQL, we could probably write some queries to handle this. I wrote about this a decade ago with NHibernate, and the queries are… complex. They also have a non-trivial runtime cost. With RavenDB, however, we can do things differently. We can use RavenDB’s map/reduce feature to handle this.

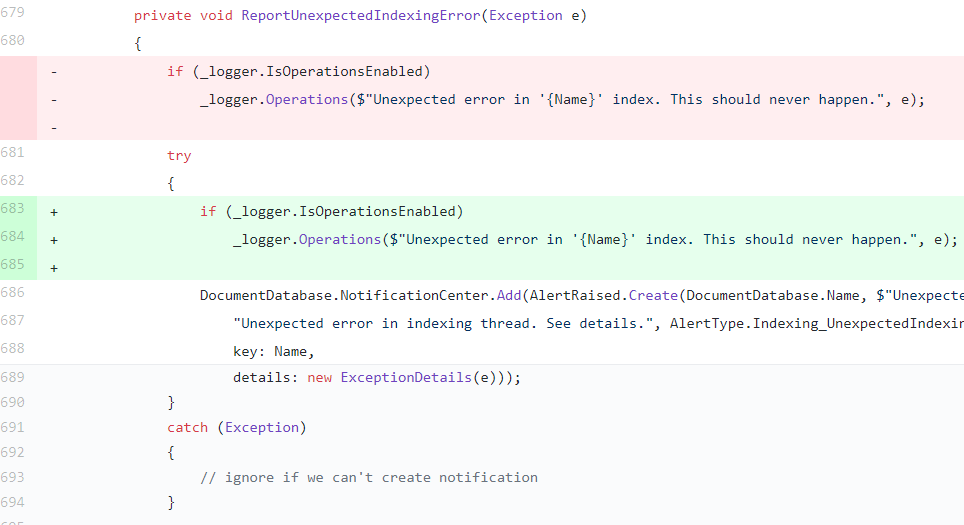

The key observation is that we want to gather, for each product, the products that were also purchased with it. We’ll use the sample dataset to test things out. There, we have an Orders collection and each order has a list of Lines that were purchased in the order. Given that information, we can use the following index definition:

Let’s break this index apart into its constituent parts. In the map, we project an entry for each line, which has the Product that is being purchased as well as all the other products that were purchased in the same order. We use this to create a link between the various products that are sold together. In the reduce, we group by the product that was sold, and aggregate the sales of related products to get the final tally.
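To make the two steps concrete, here is a minimal sketch of the same logic in plain Python. This is not RavenDB index syntax, just an in-memory illustration. The `Lines` and `Product` names come from the sample data described above, while `Orders`, `RelatedProducts`, `map_order` and `reduce_entries` are names I made up for the sketch.

```python
from collections import Counter, defaultdict


def map_order(order):
    """Map step: emit one entry per order line.

    Each entry names the product on that line and counts the other
    products that appear in the same order.
    """
    products = [line["Product"] for line in order["Lines"]]
    for product in products:
        yield {
            "Product": product,
            "Orders": 1,  # assumed field name: how many orders contained this product
            "RelatedProducts": Counter(p for p in products if p != product),
        }


def reduce_entries(entries):
    """Reduce step: group by product and sum up the counts."""
    grouped = defaultdict(lambda: {"Orders": 0, "RelatedProducts": Counter()})
    for entry in entries:
        group = grouped[entry["Product"]]
        group["Orders"] += entry["Orders"]
        # Counter.update adds counts instead of overwriting them.
        group["RelatedProducts"].update(entry["RelatedProducts"])
    return [
        {"Product": product, "Orders": g["Orders"], "RelatedProducts": g["RelatedProducts"]}
        for product, g in grouped.items()
    ]
```

Feeding every order through `map_order` and passing all the emitted entries to `reduce_entries` gives one aggregated entry per product, which is the shape the rest of this post relies on.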

The end result looks like this:

You can see some interesting design decisions in how I built this index. We keep track of the number of orders for each product, as well as the number of times it was purchased alongside each related product. This means that we can very easily implement related products, but also filter out outliers. If someone purchased the “Inside RavenDB” book to learn RavenDB, but at the same time also bought the Hungry Caterpillar for their child, you probably don’t want to recommend one alongside the other. The audiences are quite different (even though telling my own 4-year-old daughter about RavenDB usually puts her to sleep pretty quickly).


We can use the number of joint sales as a good indication of whether the products are truly related, all the while letting the users tell us what matters. And the best part is that you don’t have to go out of your way to get this information. It is based on pretty much just the data that you are already collecting.
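As a rough sketch of how that filtering could work on the client side, here is some plain Python that takes one aggregated entry and keeps only the related products that were bought together often enough. The entry shape (`Orders`, `RelatedProducts`), the `recommend` function and the threshold values are all assumptions for illustration; you would tune the cutoffs against your own data.

```python
def recommend(entry, min_joint_sales=5, min_share=0.1, limit=3):
    """Pick related products that co-occur often enough, most frequent first.

    min_joint_sales: absolute floor, drops one-off coincidences.
    min_share: fraction of this product's orders that must include the other product.
    Both thresholds are hypothetical defaults, not recommended values.
    """
    orders = entry["Orders"]
    if orders == 0:
        return []
    candidates = [
        (product, count)
        for product, count in entry["RelatedProducts"].items()
        if count >= min_joint_sales and count / orders >= min_share
    ]
    # Sort by joint sales, highest first, and keep the top few.
    candidates.sort(key=lambda pair: pair[1], reverse=True)
    return [product for product, _ in candidates[:limit]]
```

With an entry where a children’s book was bought alongside a technical book only once out of a hundred orders, the children’s book falls below both thresholds and never shows up in the recommendations.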

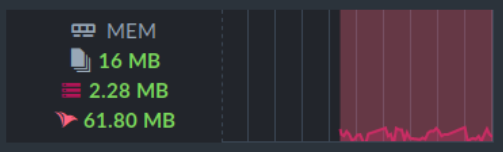

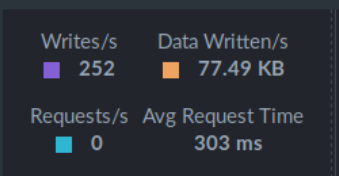

Because this is a map/reduce index in RavenDB, the computation happens at indexing time, not at runtime. This means that the cost of querying this information is minimal, and RavenDB will make sure that it is always up to date.

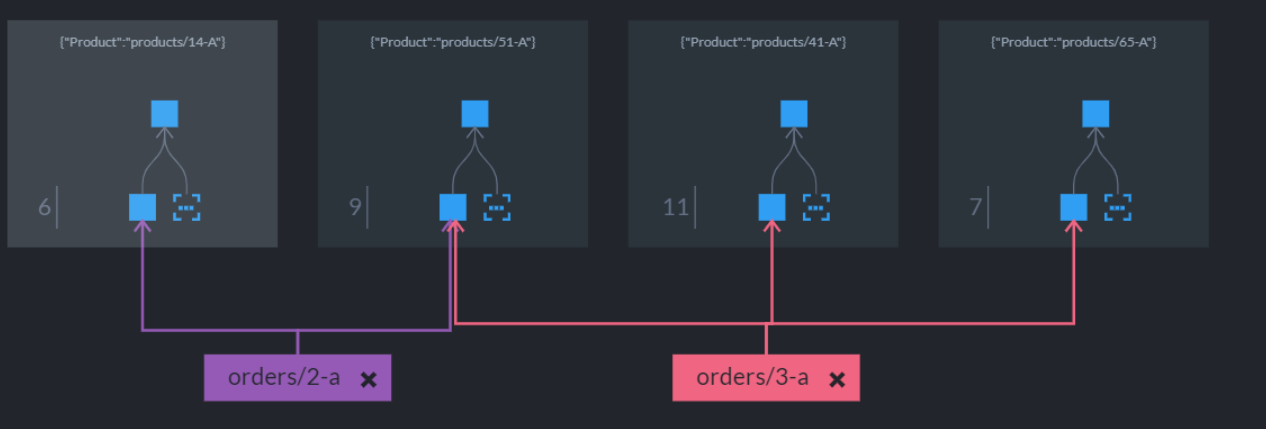

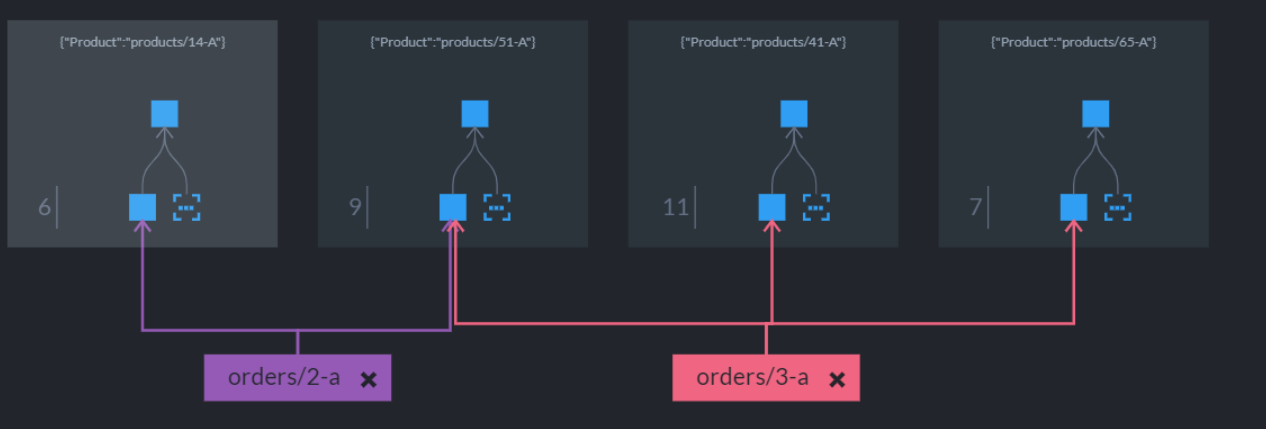

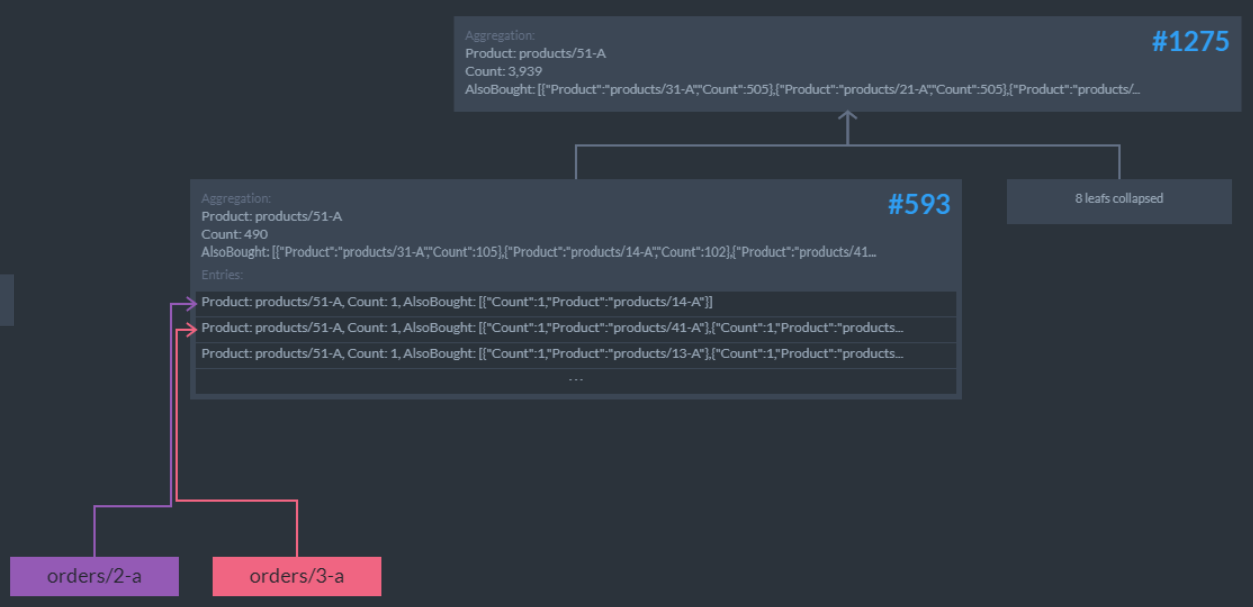

In fact, we can go to the Map/Reduce Visualizer page in RavenDB to see how this works. Let’s take a peek, shall we?

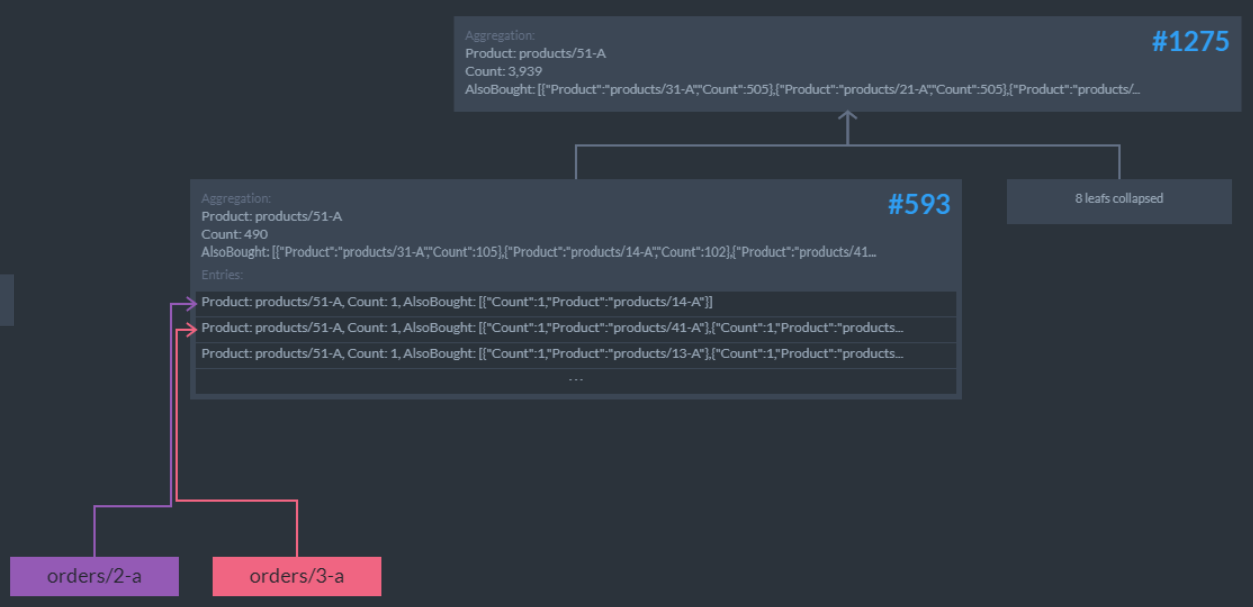

Here we can see a visual representation of two orders for the same product, as well as a few others. This is exactly the kind of thing we want to explore. Let’s look a bit deeper, just for products/51-A:

You can see how the first order (bottom left) has just one additional product (products/14-A), while the second has a couple of them. We aggregate that information (Page #593) for all 490 orders that fit there. There is also the top level (Page #1275), which aggregates the data from all the leaves.
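The reason this layering works is that the aggregation can be applied to its own output: summing partial tallies gives the same answer as summing everything in one go. Here is a small Python sketch of that idea. The `merge` function and the counts in the two leaves are made up for illustration; they are not the real contents of the pages in the visualizer, and this is not a description of RavenDB’s internal storage format.

```python
from collections import Counter


def merge(partials):
    """Combine partial aggregates for a single product into one aggregate."""
    total = {"Orders": 0, "RelatedProducts": Counter()}
    for part in partials:
        total["Orders"] += part["Orders"]
        total["RelatedProducts"].update(part["RelatedProducts"])
    return total


# Two hypothetical leaf-level aggregates for the same product.
leaf_a = {"Orders": 2, "RelatedProducts": Counter({"products/14-A": 1, "products/2-A": 1})}
leaf_b = {"Orders": 3, "RelatedProducts": Counter({"products/14-A": 2})}

# The top level only needs the leaf aggregates, not the original orders.
top = merge([leaf_a, leaf_b])
```

When a leaf changes, only that leaf and the levels above it need to be merged again, which is why the top-level result stays cheap to keep current.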

When we query, we will get the data from the top, so even if we have a lot of orders, we don’t actually need to run any costly computation. The data is already pre-chewed for us and immediately (and cheaply) available.