I have been sitting on this post for a while now, because that was my first impression after seeing Oslo & M and all the hype around it from the PDC. To be frank, I had a hard time believing my own gut feeling. I kept having the feeling that I am missing something, which is why I avoided talking about this so far.

But, as time passed, and as we started to see more and more about Oslo and M, it validated my initial thinking. Now, just to be clear, I don’t intend to even touch on the whole of Oslo in this post. I don’t have a problem stating that I still don’t see the whole point there, but that is beside the point (no pun intended). What I would like to talk about is the M language, its usage, and the DSL that Microsoft shows as samples.

I see M as a whole lot of effort trying to optimize something that is really not that interesting, complex or really very hard. I look at the M language, the way that you worked with, the tooling and the API and I would fully agree that it is a nice parser generator.

What it is not, I have to say, is a DSL toolkit. It is just one, small, part of building a DSL. And, to be perfectly honest, M is the drag & drop of DSL. It looks good, on first glance, but then you dig just a little deeper and you see what actually going on, and you realize that you are probably not where you wanted to be.I see it as trying very hard to optimize opening the car’s door. While I assume that this is interesting to someone in the world, optimizing the opening of a car door is crucial, I don’t really see it as an important feature. More to the point, it has negligible effect on the time taken for the primary task for which we use a car, the actual driving!

Why am I saying that?

Well, M is used for defining the syntax of the language, which is what most people look at. It does a good job there, but it also stops there. And there is a lot of stuff other then the syntax that you really care about.

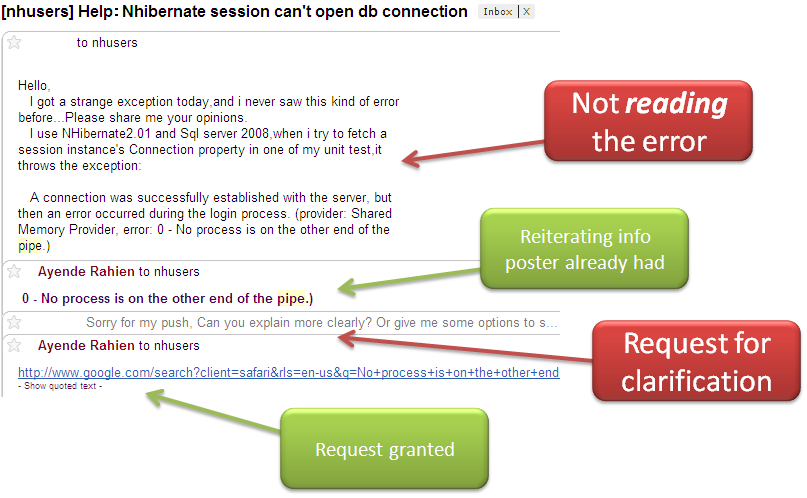

Here is a snippet from MisBehave, which was an attempt to build a BDD framework on top of M:

Pretty impressive syntax, right?

The problem is that there isn’t really a good way to take this and translate that into something that is executable. Not without doing a lot of work. And that is why I am saying that M isn’t really an important piece of the stack. The actual syntax definition isn’t really that important. It is all the other things that you do with the DSL that matters.

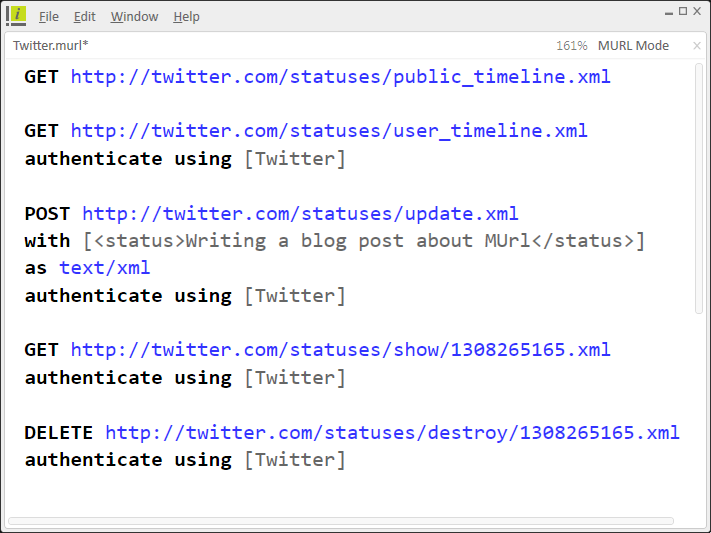

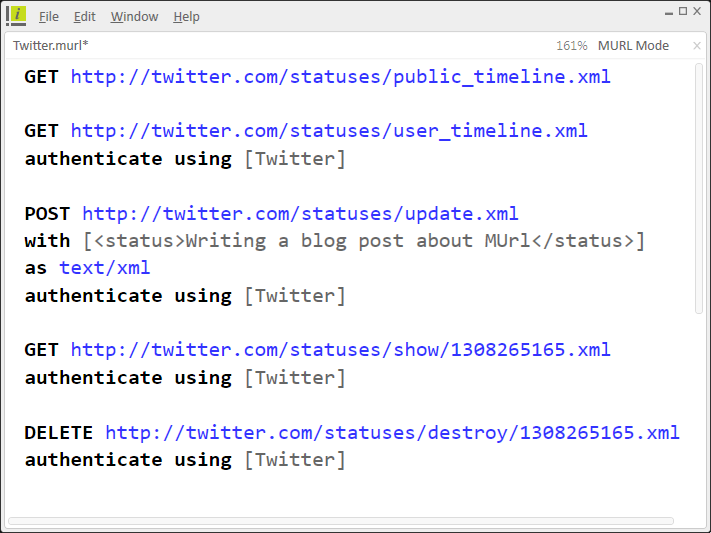

Let us take a look at MUrl:

I am looking at this, and after looking at the source code, I still can’t figure out the point.

Yes, this is a demo DSL. But it is a good example that shows how you can completely miss the point with regards to a DSL. What problem does this DSL solve? What benefits do I get from integrating that into my system?

How does this helps me solve a real problem?

The answer is that it doesn’t. The only remotely useful case that I can think of is if I really want to be able to issue REST calls from the command line, and even then, there are better ways of doing that on the command line. MUrl is an exercise in abstraction for the sake of abstraction. More than that, it gives the impression that it is something that is is not.

If you want to show me a DSL, show me one that has logic, not one that is a glorified serialization format. That is the sweet spot for a DSL, to extract policy decisions from your systems, so you can work with them at a higher level and have easier time making change.

M is not a language for creating DSL. It is a language to define a serialization format, that is all.