In RavenDB 5.4, we’re introducing new ELT features for Kafka and RabbitMQ. Now, instead of your documents just sitting there in your database, you can involve them in your messaging transactions. In this webinar, RavenDB CEO Oren Eini explains how these ETL tasks open up a whole new world of architectural patterns, and how they spare you from a lot of complexity when you want to involve your data in pub/sub or other messaging patterns.

I spoke at Cloud Lunch & Learn about the basics of building a database from scratch. We took a storage engine and created a simple database within the span of an hour.

Covered in the talk are the details of how you can build the database, using indexes to speed up queries and the manner in which a database interacts with its storage engine. I think it was a great talk, but let me know your feedback:

RavenDB is written in C#, and as such, uses managed memory. As a database, however, we need granular control of our memory, so we also do manual memory management.

One of the key optimizations that we utilize to reduce the amount of overhead we have on managing our memory is using an arena allocator. That is a piece of memory that we allocate in one shot from the operating system and operate on. Once a particular task is done, we can discard that whole segment in one shot, rather than try to work out exactly what is going on there. That gives us a proper scope for operations, which means that missing a free in some cases isn’t the end of the world.

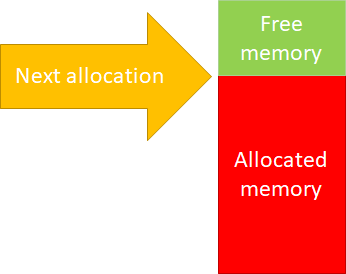

It also makes the code for RavenDB memory allocation super simple. Here is what this looks like:

Whenever we need to allocate more memory, we’ll just bump the allocator up. Initially, we didn’t even implement freeing memory, but it turns out that there are a lot of long running processes inside of RavenDB, so we needed to reuse the memory inside the same operation, not just between operations.

The implementation of freeing memory is pretty simple, as well. If we return the last item that we allocated, we can just drop the next allocation position by how many bytes were allocated. For that matter, it also allows us to do incremental allocations. We can ask for some memory, then increase the allocation amount on the fly very easily.

Here is a (highly simplified) example of how this works:

As you can see, there isn’t much there. A key requirement here is that you need to return the memory back in the reverse order of how you allocated it. That is usually how it goes, but what if it doesn’t happen?

Well, then we can’t reuse the memory directly. Instead, we’ll place them in a free list. The actual allocations are done on powers of two, so that makes things easier. Here is what this actually looks like:

So if we free, but not from the top, we remember the location and can use it again. Note that for 2048 in the image above, we don’t have any free items.

I’m quite fond of this approach, since this is simple, easy to understand and has a great performance profile. But I wouldn’t be writing this blog post if we didn’t run into issues, now would I?

A customer reported high memory usage (to the point of memory exhaustion) when doing a certain set of operations. That… didn’t make any sense, to be honest. That was a well traveled code path, any issue there should have been long found out.

They were able to send us a reproduction and the support team was able to figure out what is going on. The problem was that the code in question did a couple of things, which altogether led to an interesting issue.

- It allocated and deallocated memory, but not always in the same order – this is fine, that is why we have the free list, after all.

- It extended the memory allocation it used on the fly – perfectly fine and an important optimization for us.

Give it a moment to consider how could these two operations together result in a problem…

Here is the sequence of events:

- Loop:

- Allocate(1024) -> $1

- Allocate(256) -> $2

- Grow($1, 4096) -> Success

- Allocate(128) -> $3

- Free($1) (4096)

- Free($3) (128)

- Free($2) (256)

What is going on here?

Well, the issue is that we are allocating a 1KB buffer, but return a 4KB buffer. That means that we add the returned buffer to the 4KB free list, but we cannot pull from that free list on allocation.

Once found, it was an easy thing to do (detect this state and handle it), but until we figured it out, it was quite a mystery.

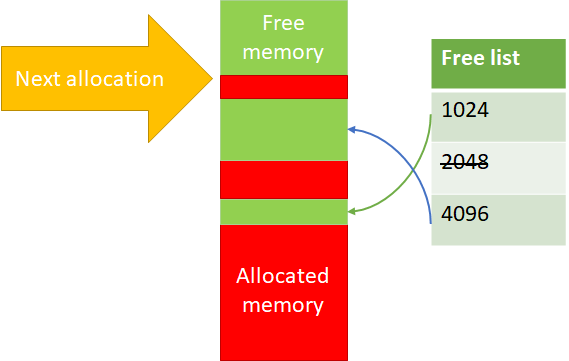

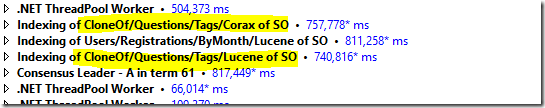

I’m trying to compare indexing speed of Corax vs. Lucene. Here is an interesting result:

We have two copies of the same index, running in parallel on the same data. And we can clearly see that Lucene is faster. Not by a lot, but enough to warrant investigation.

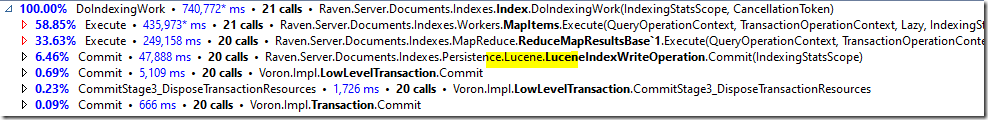

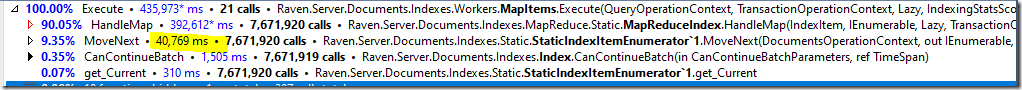

Here is the core of the work for Lucene:

And here it is for Corax:

If you look at the results, you’ll see something really interesting.

For the Corax version, the MapItems.Execute() is almost 5% slower than the Lucene version.

And that really pisses me off. That is just flat out unreasonable to see.

And the reason for that is that the MapItems.Execute() is identical in both cases. The exact same code, and there isn’t any Corax or Lucene code there. But it is slower.

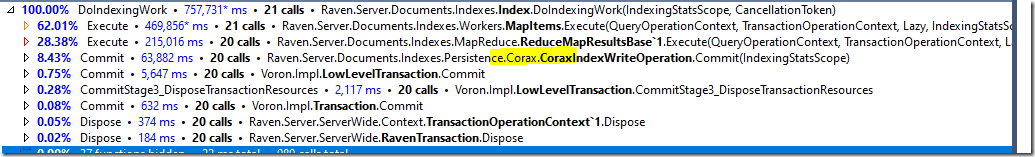

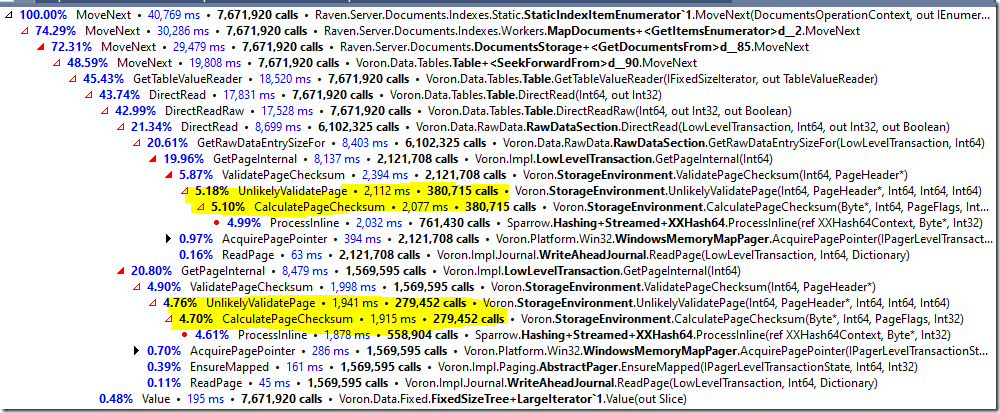

Let’s dig deeper, and we can see this interesting result. This is the Lucene version, and the highlighted portion is where we are reading documents for the indexing function to run:

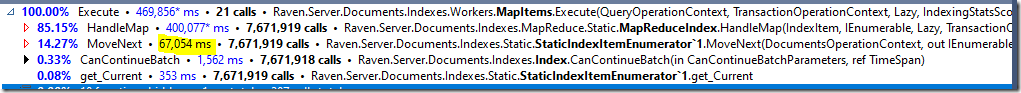

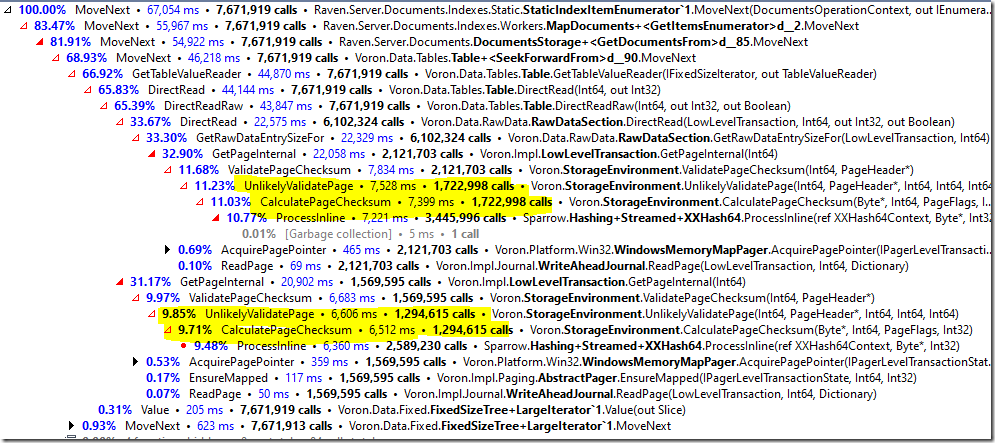

And here is the Corax version:

And here it is two thirds more costly? Are you kidding me? That is the same freaking code and is utterly unrelated to the indexing.

Let’s dig deeper, shall we? Here is the costs breakdown for Lucene, I highlighted the important bits:

And here is the cost breakdown for Corax

I have to explain a bit about what is going on here. RavenDB doesn’t trust the disk and will validate the data it reads from it the first time it loads a page.

That is what the UnlikelyValidatePage is doing.

What we are seeing in the profiler results is that both Corax and Lucene are calling GetPageInternal() a total of 3.69 million times, but Corax is actually paying the cost of page validation for the vast majority of them.

Corax validated over 3 million pages while Lucene validated only 650 thousand pages. The question is why?

And the answer is that Corax is faster than Lucene, so it is able to race ahead. When it races ahead, it will encounter pages first, and validate them. When Lucene comes around and tries to index those documents, they were already validated.

Basically, Lucene is surfing all the way forward on the wavefront of Corax’s work, and ends up doing a lot less work as a result.

What this means, however, is that we need to test both scenarios separately, on cold boot. Because otherwise they will mess with each other results.

When we are handling a support call, we are often working with partial information about the state of the software at the customer site. Sometimes that is an unavoidable part of the job. When troubleshooting a system with patients' records, I can’t just ask the customer to schlep the data to my laptop so I can analyze it properly. Even if we could do that, there are a lot of cases that simply don’t reproduce anywhere but the live environment.

Part of the process of debugging an issue in a production environment is to be able to gather enough information on site that we can draw the appropriate conclusions from. RavenDB comes with a lot of tools to do just that. One of the most useful of those tools is the idea of the debug package. That is a simple idea, in the end. It gathers all the information we have about our system and packages that into a zip file. That zip file contains a lot of metrics, but it doesn’t contain customer data (aside from databases & index names, which are usually not sensitive).

There have been several separate cases recently where we were able to use the debug package to analyze what is going on and came back to the customer with answers. However, when hearing our explanations about what was the root cause of the issue, the customer rejected our hypothesis.

In one case, a customer was worried about the load that they were observing in their system. Not because there was an issue, but the number of requests that they observed was higher than was expected. The customer switched to using concurrent subscriptions recently and deployed a couple of worker nodes to spread the load of processing documents. However, the number of requests observed was far higher than they expected. Whereas before they had a single worker per subscription, and a known amount of work that they could easily measure, after switching to concurrent subscriptions they observed a big increase in the number of requests processed by RavenDB.

Given that they deployed their subscriptions to two workers, initially, it was expected that the amount of work that the cluster is processing would double. Instead, it was increased by tenfold. Looking at the metrics in the debug package, we could see that they had 10 instances of each subscription running, but the customer was insistent that they only deployed two workers nodes.

Our metrics said that there were 5 subscriptions from IP-1 and 5 subscriptions from IP-2. After some back and forth it was revealed that everyone was correct, but talking past each other. The customer deployed two worker nodes, yes. But each of those spawned 5 instances of the subscriptions to take advantage of concurrency inside the same worker node.

In the second case, we have a customer that noticed a marked increase in the amount of bandwidth that they were using. They traced that additional bandwidth to the internal communication between the nodes in the cluster. Given that they are running in the cloud, they were (rightly) concerned about the sudden jump in the bandwidth. We started the investigation process and… we didn’t like what we saw. The cluster had gone through three full node rebuilds in the past month. At least, that was what the data was telling us. But that didn’t make much sense.

Quite concerned that there is something really bad going on, we talked to the customer, who thought about this for a while, checked their own logs and explained what was going on. They are running on Lsv2-series Azure instances, and apparently, within the space of a few weeks, all three of their instances had been moved to another physical host. The Lsv2-series instances use local ephemeral NVMe drives. When they moved an instance between hosts, the effect was as if we were given a brand new hard disk. RavenDB was able to handle that scenario more or less seamlessly, with the other nodes in the cluster filling in for the down node and sending it all the data it lost. The effect of that, of course, was a big jump in network bandwidth while that was going on.

The customer wasn’t actually aware that this happened until they looked at the logs, RavenDB had it handled, and it was only noticed because of the bandwidth spike.

The point of this post isn’t to talk about how awesome RavenDB is (even if I do think it is pretty awesome). Nor is it to extoll how good our support team is at figuring out things about the customer setup that even the customer isn’t aware of.

The point of this post is that you have to take into account, quite clearly, that the details that the customer is providing may be outdated, wrong or just misleading. Not because of any malicious intention on their end, but because they give you the information they have, not what is actually going on.

It reminds me of an old trick in tech support: “Take the plug out of the socket, blow on both the socket and the plug, then insert it again”. The point isn’t to blow whatever dust may have been there, preventing good contact. The point is to ensure that the silly thing is actually plugged in, but you can’t ask if this is plugged in, because the person on the other side of the call would say: “Of course it is” and never check.

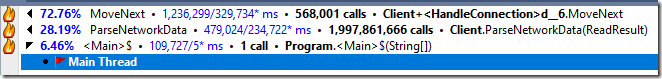

I’m going to go back a few steps and try to see where I should be looking at next, to see where I should pay the most attention. So far in this series, I mostly focused on how we read and process the data. But I think that we ought to take a step or two back and see where we are at in general. I ran the version with Pipelines and string usage in the profiler, trying to see where we are at. For example, in a previous post, the ConcurrentDictionary that I was using had a big performance cost. Is that still the case now?

Here are the current hotspots in the codebase:

Looking at this with more detail, we have:

That is… interesting. Let’s look at the code for HandleConnection right now?

Looking at the code and the profiler results, I wonder if I can do better here. Here is a small change that gives me ~2% speed boost:

The idea is that we parallelize reading from and writing to the network. It is a small boost, but any little bit helps, especially once we get into the cascading impacts of repeated optimizations.

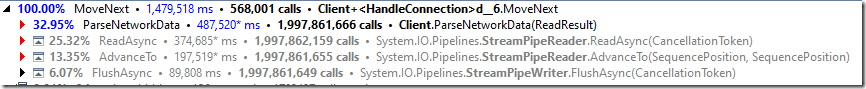

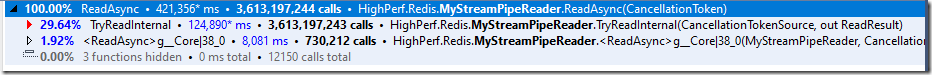

Looking into this, we have almost two billion calls to ReadAsync, let’s see what is costly there:

That is… wow.

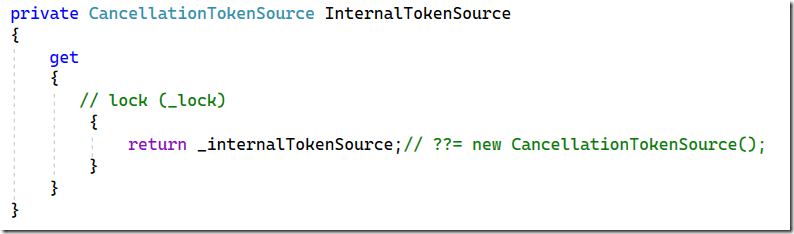

Why is InternalTokenSource so expensive? I’m willing to bet that the issue is this one, it is taking a lock. In my use case, I know that there is a single thread running this, so it is worth seeing if I can skip it. Unfortunately, there isn’t an easy way to skip that check. Fortunately, I can copy the code from the framework and modify it locally, to see what the impact of that would be. So I did just that (initialized once in the constructor):

Of course, that is very much a brute force approach, and not one that I would recommend. Looking at the code, it looks like there is a reason for this usage (handling cancellation of operations), but I’m ignoring that for now. Let’s see what the profiler says now:

That means that we have about 40% improvements in per call costs. As I mentioned, that is not something that we can just do, but it is an interesting observation on the cost of reading using PipeReader.

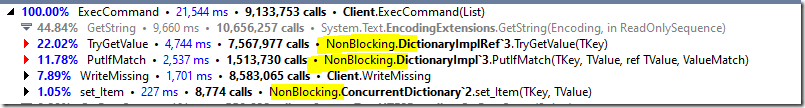

Another aspect that is really interesting is the backend state we have, which is a ConcurrentDictionary. If we’ll look at its cost, we have:

You’ll note that I’m using the NonBlocking NuGet package, which provides a ConcurrentDictionary implementation that isn’t using locking. If we’ll use the default one from the framework, which does use locking, we’ll see:

You can see the difference costs better here:

Note that there is a significant cost difference between the two options (in favor of NonBlocking). But it doesn’t translate to much of a difference when we run a real world benchmark.

So what is next?

Looking at the profiler result, there isn’t really much that we can push forward. Most of the costs we have are in the network, not in the code we run.

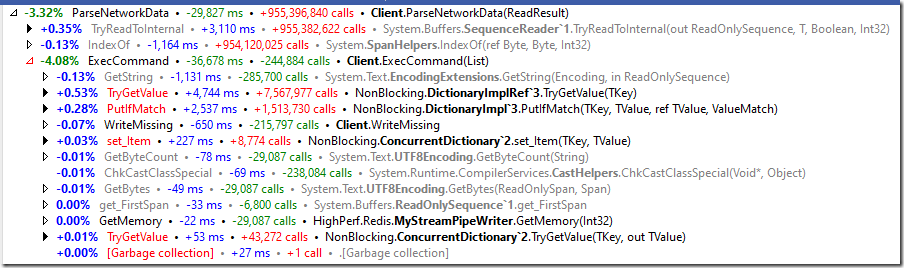

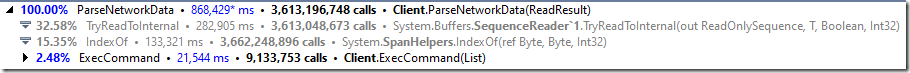

Well, that isn’t quite true, is it? The bulk of our code is in ParseNetworkData call, which looks like this:

So the total time we spend actually executing the core functionality of our server is really negligible. A lot of time is actually spent parsing the commands from the buffer. Note that here, we don’t actually do any I/O, all operations are operating on buffers in memory.

The Redis protocol isn’t that friendly for machine parsing, requiring us to do a lot of lookups to find the delimiters (hence the IndexOf() calls). I don’t believe that you can significantly improve on this. This means that we have to consider other options for better performance.

We are spending 35% of our runtime in parsing the command streams from the client, and the code we execute is less than 1% of our runtime. I don’t think that there are significant optimization opportunities remaining for the stream parsing, so that leaves us with the I/O that we have left. Can we do better?

We are currently using async I/O and pipelines. Looking at the project that got me interested in this topic, it is using IO_Uring (via this API) on Linux for their needs. Their parsing is straightforward, as well, see here. Quite similar to the manner in which my code operates.

So to get to the next stage in performance (as a reminder, we are now at the 1.8 million req / sec) we’ll probably need to go to the ring based approach as well. There is a NuGet package to support it, but that moves this task from something that I can spend a few hours in an evening to a couple of days / full week of effort. I don’t think that I’ll pursue this in the near future.

RavenDB introduced a TCP compression feature in version 5.3. The idea is that all internal communication in the cluster (as well as subscriptions), will use the Zstd compression format to reduce the overall bandwidth utilization by RavenDB. We have always supported HTTP compression, and that closed the circle.

The fact hat we are using Zstd means that we have a higher compression ratio and less CPU usage, so everyone was happy. Except… sometimes, they weren’t.

In some cases, we noticed that there would be network failures at a far higher rate than previous experienced. RavenDB is robust to network errors, so that was handled, but that is still a concern. We figured out that the problem was rooted in the compression code. If we enabled compression between the systems, it would have far higher rate of failures than otherwise. But only when running in secured mode, when the system is running without security, everything works.

My first suspicion is that something is in the network, monitoring it. But the whole point of secured mode is that no one can peek into the stream not interfere with the contents. Given that this is a self-healing issue, it took some time to dedicate the right amount of attention to it, but we managed to figure it out.

This is a confluence of three different features that all play together to get this to happen.

With compression, we typically do something like this:

That is pretty much how all compression stream will work. But we do have to consider the following issue, there may be no output.

When can that happen?

Let’s assume that I’m using the simplest compression algorithm (run length encoding).

In other words, it will take a buffer such as: aaaaaacccccccbbbb and turn that into a7c6b4.

Now, let’s consider what would be the output of such an algorithm if we pass it a buffer consisting of a single value?

It will only update its internal state, it will not output anything. That is fine, we need a call to Flush() to ensure that all the state is out.

That means that this will return an empty buffer, which we are then writing to the inner stream. And that is fine, right? Since writing a zero length buffer is a no-op.

Except that it isn’t a no-op. There is the concept of empty SSL records, mostly it seams to handle the BEAST attack. So when you pass an empty buffer to the SslStream, it will emit an empty record to the network.

Which is good, except that you may have a scenario where you emit a lot of those values. And it turns out that OpenSSL has a limit to how many consecutive empty records it will accept (under the assumption that it must move forward and produce output and not just loop).

So, in order to repeat this bug, we need:

- Input that will result in zero output from the compressor (fully repeating previous values, usually). Resulting in a zero length buffer as the output of the compression.

- Sending the empty SSL record over the stream.

- Repeating this for 32 times.

When all three conditions are satisfied, we get an error on the receiving end and the connection is broken. That means that the next call will have a different compression state and likely won’t have a problem at the same location.

In short, this is fun exercise in seeing how three different design decisions, all of whom are eminently reasonable, result in a very hard to trace bug.

The good thing is that this is simplicity itself to solve. We just need to avoid writing zero length buffer to the stream.

A few days ago I talked for close to three hours discussing RavenDB, modeling data in a non relational world and diving very deep into the internals of database engines in the .NET Zurich users group.

You can watch the recording here.

As usual, any and all feedback is welcome.

Next week I’ll be presenting a new major feature for the new RavenDB 5.4 release. Built-in ETL for Kafka and RabbitMQ. Instead of your documents just sitting there in your database, you can involve them in your messaging transactions.

You can register for the webinar here…

Please register, I would love to hear your feedback on the topic.

We got a call from a customer, a pretty serious one. RavenDB is used to compute billing charges for customers. The problem was that in one of their instances, the value for a particular customer was wrong. What was worse was that it was wrong on just one instance of the cluster. So the customer would see different values in different locations. We take such things very seriously, so we started an investigation.

Let me walk you through reproducing this issue, we have three collections (Users, Credits and Charges):

The user is performing actions in the system, which issue charges. This is balanced by the Credits in the system for the user (payment they made). There is no 1:1 mapping between charges and credits, usually.

Here is an example of the data:

And now, let’s look at the index in question:

This is a multi map-reduce index that aggregates data from all three collections. Now, let’s run a query:

This is… wrong. The charges & credits should be more or less aligned. What is going on?

RavenDB has a feature called Map Reduce Visualizer, to help work with such scenarios, let’s see what this tells us, shall we?

What do we see in this image?

You can see that we have two results for the index. Look at Page #854 (at the top), we have one result with –67,343 and another with +67,329. The second result also does not have an Id property or a Name property.

What is going on?

It is important to understand that the image that we have here represents the physical layout of the data on disk. We run the maps of the documents, and then we run the reduce on each page individually, and sum them up again. This approach allows us to handle even a vast amount of data with ease.

Look at what we have in Page #540. We have two types of documents there, the users/ayende document and the charges documents. Indeed, at the top of Page #540 we can see the result of reducing all the results in the page. The data looks correct.

However…

Look at Page #865, what is going on there? Looks like we have most of the credits there. Most importantly, we don’t have the users/ayende document there. Let’s take a look at the reduce definition we have:

What would happen when we execute it on the results in Page #865? Well, there is no entry with the Name property there. So there is no Name, but there is also no Id. But we project this out to the next stage.

When we are going to reduce the data again among all the entries in Page #854 (the root one), we’ll group by the Id property, but the Id property from the different pages is different. So we get two separate results here.

The issue is that the reduce function isn’t recursive, it assumes that in all invocations, it will have a document with the Name property. That isn’t valid, since RavenDB is free to shuffle the deck in the reduce process. The index should be robust to reducing the data multiple times.

Indeed, that is why we had different outputs on different nodes, since we don’t guarantee that will process results in the same order, only that the output should be identical, if the reduce function is correct. Here is the fixed version:

And the query is now showing the correct results:

That is much better ![]()