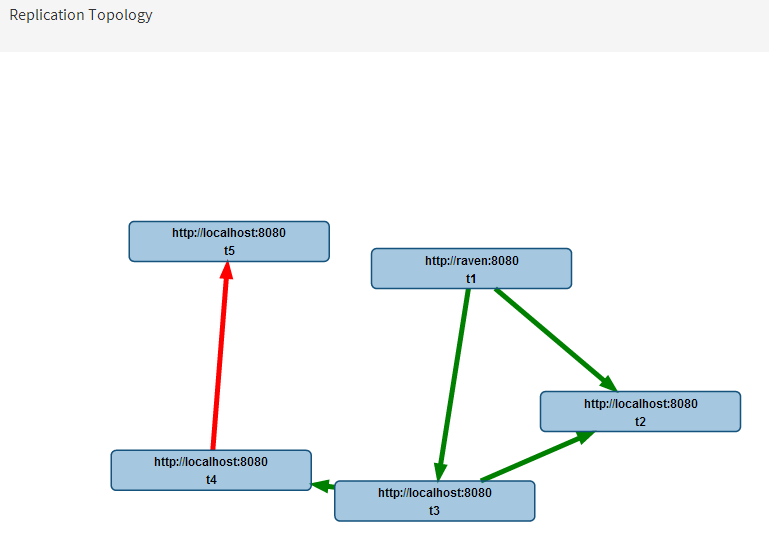

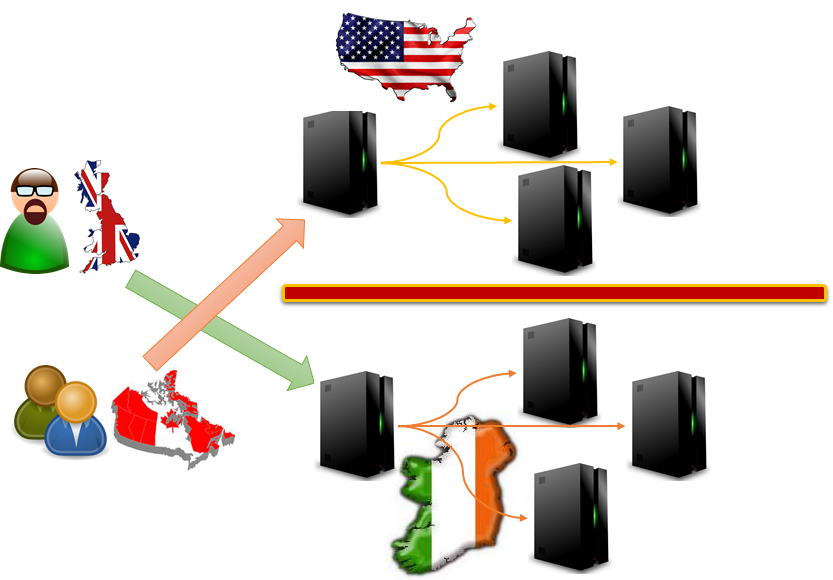

Yes, I chose the title on purpose. The topic of this post is this issue. In RavenDB, we use replication to ensure high availability and load balancing. We have been using that for the past five years now, and in general, it has been great, robust and absolutely amazing when you need it.

But like all software, it can run into interesting scenarios. In this case, we had three nodes, call them A, B and C. In the beginning, we had just A & B. Node A was the master node, to which all the data was written, and node B was there as a hot spare. The customer wanted to upgrade to a new RavenDB version, and they wanted to do that with zero downtime. They set up a new node, with the new RavenDB server, and because A was the master server, they decided to replicate from node B to the new node. Except… nothing appeared to be happening.

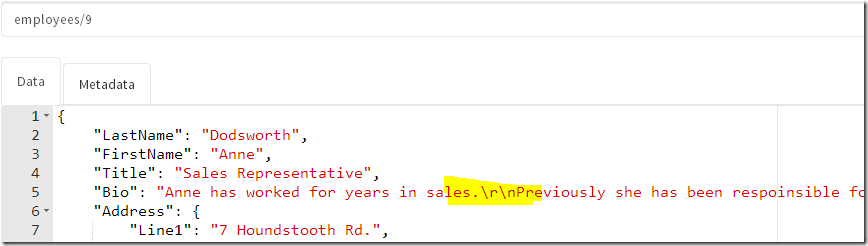

No documents were replicating to the new node, yet there was a lot of CPU and I/O activity on node B. The customer opened a support call, and it didn’t take long to figure out what was going on. They had set up the replication between the nodes with the default “replicate only documents that were changed on this node”. However, since this was the hot spare node, no documents were ever changed on that node. All the documents in the server were replicated from the primary node.

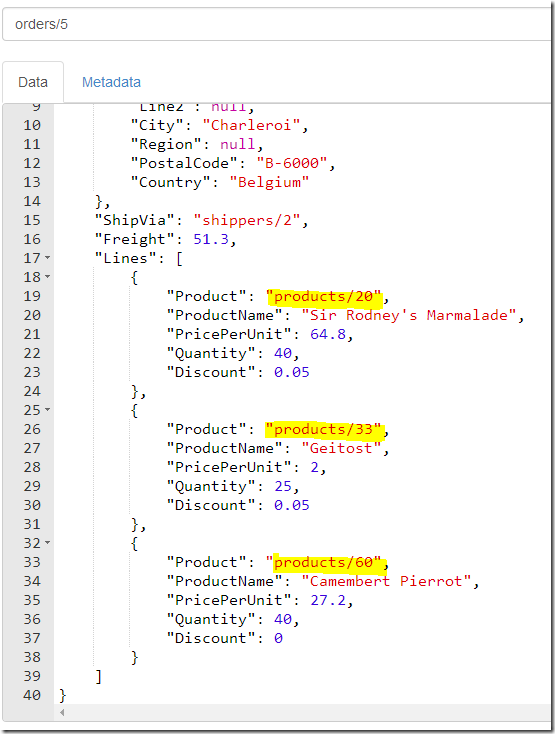

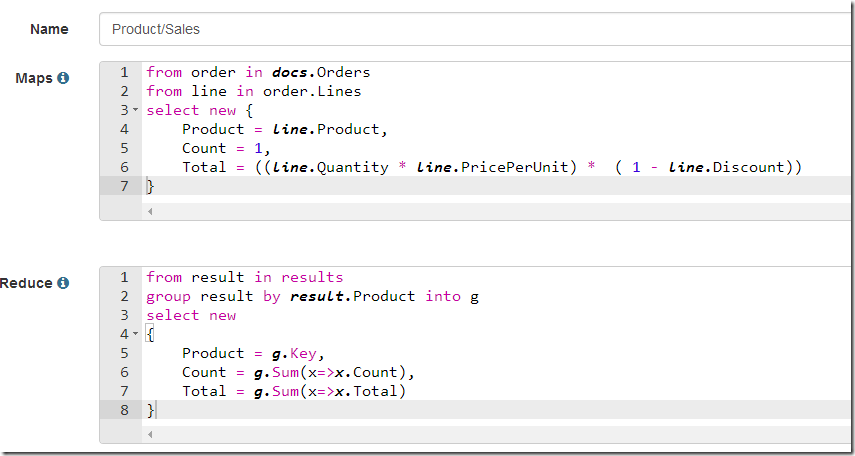

The code for that actually looks like this:

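    public IEnumerable<Doc> GetDocsToReplicate(Etag lastReplicatedEtag)
    {
        foreach (var doc in Docs.After(lastReplicatedEtag))
        {
            // Skip documents that were replicated to this node from elsewhere.
            if (ModifiedOnThisDatabase(doc) == false)
                continue;

            yield return doc;
        }
    }

    // Take(1024) only returns once 1,024 documents pass the filter
    // or the scan runs out of documents.
    var docsToReplicate = GetDocsToReplicate(etag).Take(1024).ToList();
    Replicate(docsToReplicate);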

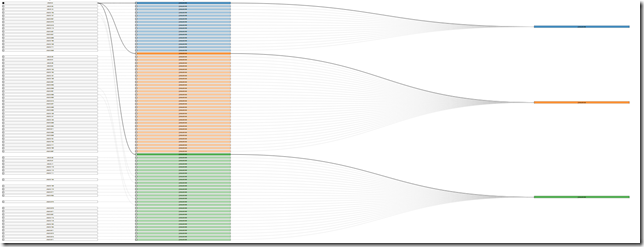

However, since no documents were modified on this node, the filter never yielded anything. The batch of 1,024 never filled up, so we had to scan through all the documents in the database before the call could return. Since this was a large database, this process took time.
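To make the cost concrete, here is a minimal sketch of the same pattern with a counter added. Everything in it is made up for illustration (`ScanDemo`, `Run`, and plain integers standing in for documents and etags); it is not RavenDB code, only the same filter followed by `Take`.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class ScanDemo
{
    // Runs a filtered scan followed by Take(batchSize) and reports how many
    // documents came back and how many had to be examined to get them.
    // modifiedOnThisDatabase is a hypothetical stand-in for the real check.
    public static (int Returned, int Examined) Run(
        int totalDocs, int batchSize, Func<int, bool> modifiedOnThisDatabase)
    {
        var examined = 0;

        IEnumerable<int> DocsToReplicate()
        {
            for (var etag = 1; etag <= totalDocs; etag++)
            {
                examined++;
                if (modifiedOnThisDatabase(etag) == false)
                    continue;

                yield return etag;
            }
        }

        var batch = DocsToReplicate().Take(batchSize).ToList();
        return (batch.Count, examined);
    }
}
```

When every document passes the filter, `Run(1_000_000, 1024, _ => true)` fills the batch after looking at only a small prefix of the data. When nothing passes, as on our hot spare, `Run(1_000_000, 1024, _ => false)` returns an empty batch only after examining all one million documents.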

The administrators on the server noted the high I/O and that a single thread was constantly busy, and decided that this was likely a hung thread. This being the hot spare, they restarted the server. Of course, that aborted the operation midway, and when the database started, it just started everything from scratch.

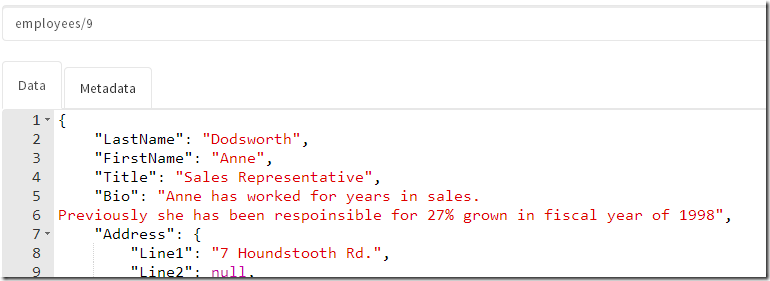

The actual solution was to tell the database, “just replicate all docs, even those that were replicated to you”. That is the quick fix, of course.

The long-term fix was to actually make sure that we abort the operation after a while, report to the remote server that we scanned up to a point and had nothing to show for it, and go back to the replication loop. The database would then query the remote server for the last etag that was replicated, it would respond with the etag that we asked it to remember, and we’ll continue from that point.
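A rough sketch of that idea follows. It is not the code we shipped: `Doc`, `ScanResult` and `ResumableScan` are hypothetical names, etags are plain `long` values, and a budget counted in documents stands in for "after a while" so that the example is deterministic.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-ins, not RavenDB's actual types.
public record Doc(long Etag, bool ModifiedHere);
public record ScanResult(List<Doc> Batch, long LastScannedEtag);

public static class ResumableScan
{
    // Scans documents after lastEtag and stops when the batch is full or
    // when maxScanned documents have been examined, whichever comes first.
    // LastScannedEtag is what we would ask the remote server to remember,
    // even when the batch is empty.
    public static ScanResult GetDocsToReplicate(
        IEnumerable<Doc> docs, long lastEtag, int batchSize, int maxScanned)
    {
        var batch = new List<Doc>();
        var lastScanned = lastEtag;
        var scanned = 0;

        foreach (var doc in docs.Where(d => d.Etag > lastEtag).OrderBy(d => d.Etag))
        {
            if (scanned >= maxScanned || batch.Count >= batchSize)
                break;

            scanned++;
            lastScanned = doc.Etag;

            if (doc.ModifiedHere)
                batch.Add(doc);
        }

        return new ScanResult(batch, lastScanned);
    }
}
```

The replication loop would call this repeatedly, each time starting from the etag the remote server hands back. A restart in the middle loses at most one budget's worth of scanning, and the etag moving forward is the progress an admin can watch.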

The entire process is probably slower (we make a lot more remote calls, and instead of just going through everything in one go, we have to stop, make a bunch of remote calls, then resume). But the end result is that the process is now resumable. And an admin will be able to see some measure of progress for the replication, even in that scenario.