One of the clearest signs of maturity that I look for when reading code is the scaffolding that was used.

Just like in physical construction, it is often impossible to build everything properly from the get-go, so you start by putting up temporary scaffolding to get you going. In some cases, that scaffolding is something you need in order to actually build the software, but I have found that scaffolding is mostly useful for debugging and understanding issues.

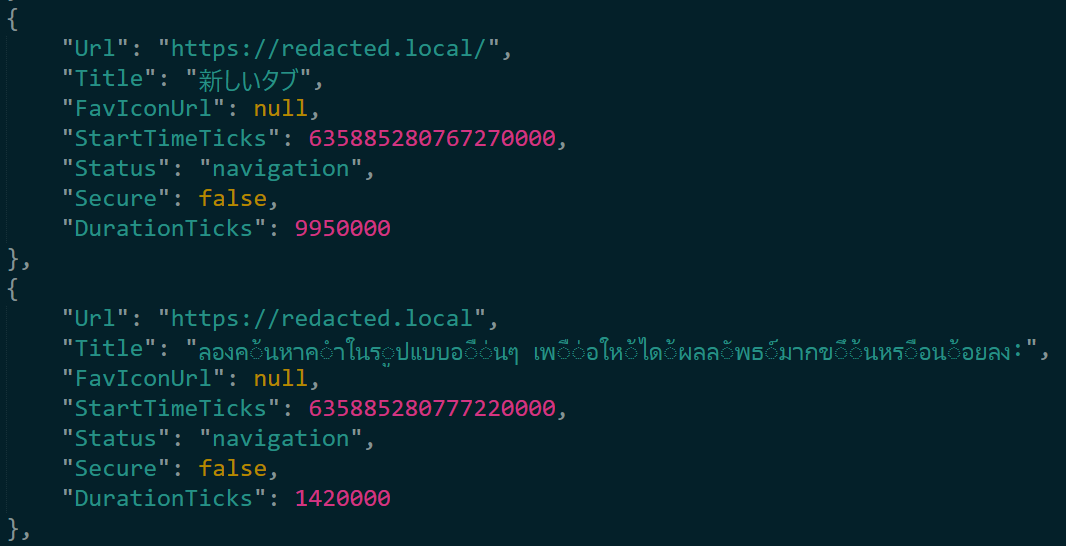

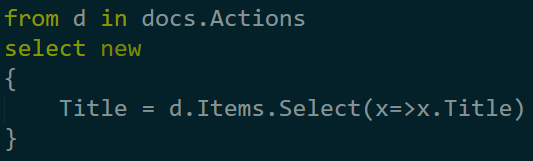

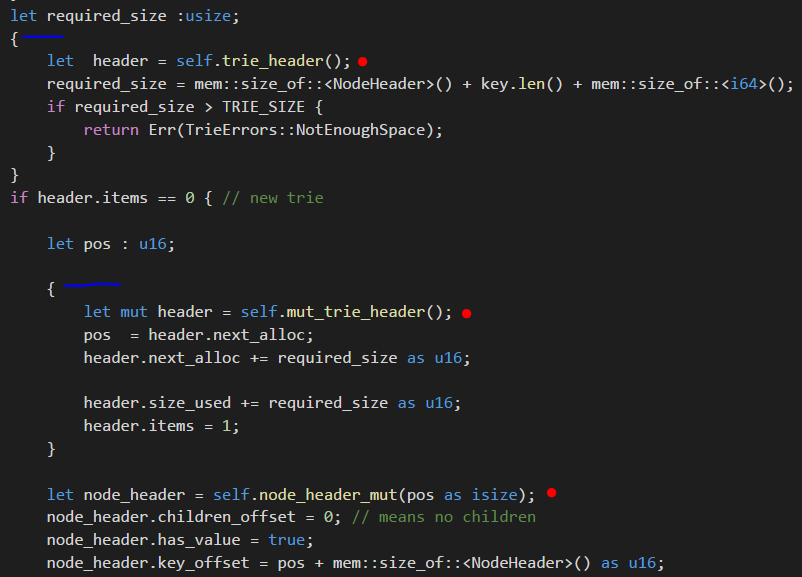

For example, if I’m working on a complex data structure, it is very useful to be able to dump it into a human-readable format, so I can visually inspect it and understand how it works.

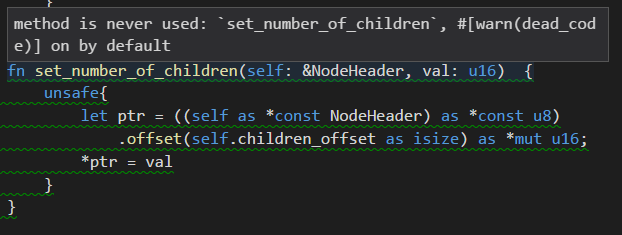

In the recent low-level trie example, I have a file dedicated to doing just that. It contains some code to print the trie as well as validate its structure, and it was very useful for figuring out certain things.

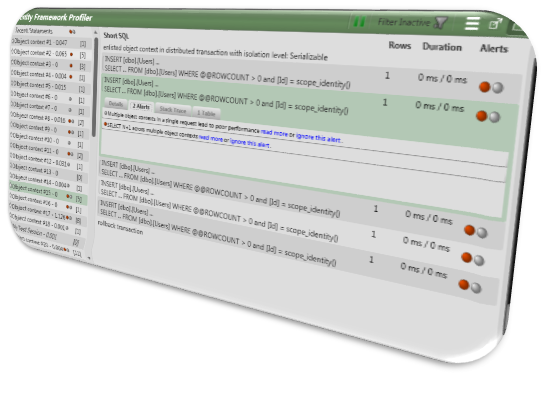

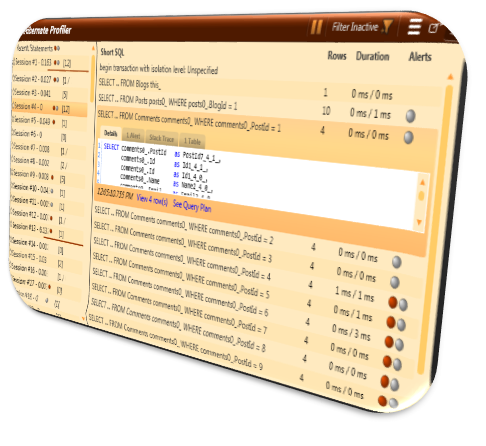

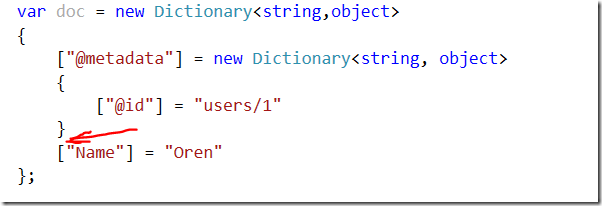
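To make the idea concrete, here is a minimal sketch of that kind of scaffolding in Python. It is not the code from the trie example: `TrieNode`, `insert`, `dump` and `validate` are hypothetical names, and the node layout is a plain dictionary rather than anything low level. The point is only the pairing of a human-readable dump with a structural check.

```python
from dataclasses import dataclass, field


@dataclass
class TrieNode:
    # Hypothetical in-memory node, assumed for illustration only.
    children: dict = field(default_factory=dict)
    is_terminal: bool = False


def insert(root: TrieNode, word: str) -> None:
    """Add a word to the trie, creating nodes as needed."""
    node = root
    for ch in word:
        node = node.children.setdefault(ch, TrieNode())
    node.is_terminal = True


def dump(node: TrieNode, indent: int = 0) -> list:
    """Return one line per node, indented by depth; '*' marks the end of a word."""
    lines = []
    for ch in sorted(node.children):
        child = node.children[ch]
        marker = "*" if child.is_terminal else ""
        lines.append("  " * indent + ch + marker)
        lines.extend(dump(child, indent + 1))
    return lines


def validate(node: TrieNode, is_root: bool = True) -> None:
    """Raise AssertionError if a structural invariant is broken."""
    # A leaf (other than an empty root) must end a word, otherwise it is
    # a dangling branch, for example one left behind by a buggy delete.
    if not node.children and not is_root:
        assert node.is_terminal, "dangling leaf that does not end a word"
    for ch, child in node.children.items():
        assert isinstance(ch, str) and len(ch) == 1, f"bad edge label {ch!r}"
        validate(child, is_root=False)


if __name__ == "__main__":
    root = TrieNode()
    for w in ("car", "cat", "do"):
        insert(root, w)
    validate(root)
    print("\n".join(dump(root)))
```

Being able to call `validate` after every mutation while chasing a bug, and to eyeball the `dump` output when it fails, is usually worth far more than the few dozen lines it costs.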

If the project is big enough, and mature enough, that scaffolding takes on a permanent nature. In RavenDB, for example, there are debug endpoints and live visualizations that help an administrator figure out exactly what is going on with the system. The more complex the system, the more important the scaffolding becomes.
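As a rough illustration of what a permanent debug endpoint can look like, here is a small sketch using only the Python standard library. It does not reflect RavenDB's actual endpoints: the `/debug/stats` route, the `STATS` counters and the function names are all assumptions made up for this example.

```python
import json
import threading
import time
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical counters that the running system would update as it works.
STATS = {"started_at": time.time(), "requests_served": 0, "open_transactions": 0}
STATS_LOCK = threading.Lock()


def debug_snapshot() -> dict:
    """Return a copy of the current stats plus a derived uptime value."""
    with STATS_LOCK:
        snap = dict(STATS)
    snap["uptime_seconds"] = round(time.time() - snap["started_at"], 3)
    return snap


class DebugHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path != "/debug/stats":  # hypothetical route
            self.send_error(404)
            return
        body = json.dumps(debug_snapshot(), indent=2).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the debug endpoint from spamming stderr


def start_debug_server(port: int = 0) -> ThreadingHTTPServer:
    """Serve the endpoint on localhost in a daemon thread; port 0 picks a free port."""
    server = ThreadingHTTPServer(("127.0.0.1", port), DebugHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

Calling `start_debug_server()` at startup and reading `server.server_address` gives you the port to query. Keeping the snapshot logic in its own function means the same data can feed a log line, a test, or a visualization later on.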

One very important consideration is what kind of scaffolding is being built. For example, if you throw a bunch of printf calls all over the place while you are debugging, that is helpful, but it isn’t something that will remain over time. In many cases, the second or third time that you find yourself having to add code to help you narrow down a problem, you want to make this sort of code a permanent part of your codebase.

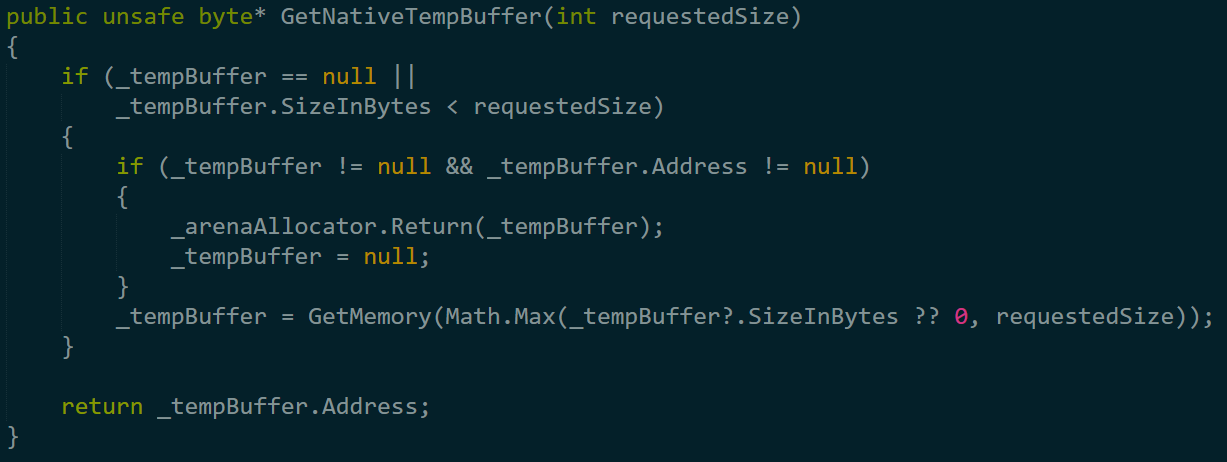
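One way to turn throwaway print statements into something that can stay is to route them through a logger that is silent by default. The sketch below assumes a toy `split_page` function and a hypothetical logger name, neither of which comes from a real codebase.

```python
import logging

# Hypothetical logger name; off by default unless DEBUG is enabled for it.
log = logging.getLogger("storage.diagnostics")


def split_page(keys: list, max_keys: int) -> tuple:
    """Toy page split that keeps its diagnostics as a permanent part of the code."""
    if len(keys) <= max_keys:
        return keys, []
    mid = len(keys) // 2
    left, right = keys[:mid], keys[mid:]
    # Permanent diagnostic: costs one level check when disabled.
    if log.isEnabledFor(logging.DEBUG):
        log.debug(
            "split %d keys (max %d) into %d + %d, separator=%r",
            len(keys), max_keys, len(left), len(right), right[0],
        )
    return left, right
```

When the next problem shows up, `logging.basicConfig(level=logging.DEBUG)` (or raising the level on just this logger) brings the diagnostics back without touching the code.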

One of the more complex pieces in building Voron was the B+Tree behavior, in particular when dealing with page splits and variable size data. We built a lot of code that verifies the structure and its invariants for us, and it runs as part of our CI builds to ensure that bugs don’t sneak in.
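The kind of check described above can be sketched as follows. This is not Voron's verification code: the `Page` class is a hypothetical in-memory stand-in for what would really be raw pages on disk, and the invariants shown (sorted keys, separator ranges, fan-out, uniform leaf depth) are the textbook B+Tree ones.

```python
from dataclasses import dataclass, field


@dataclass
class Page:
    # Hypothetical in-memory page, assumed for illustration only.
    keys: list
    children: list = field(default_factory=list)  # empty for leaf pages

    @property
    def is_leaf(self) -> bool:
        return not self.children


def verify(page, low=None, high=None, depth=0, leaf_depths=None):
    """Raise AssertionError on the first broken invariant.

    Returns the set of depths at which leaf pages were found.
    """
    if leaf_depths is None:
        leaf_depths = set()
    # Keys inside a page are strictly increasing.
    assert all(a < b for a, b in zip(page.keys, page.keys[1:])), \
        f"unsorted keys {page.keys}"
    # Every key stays inside the range inherited from the parent separators.
    for k in page.keys:
        assert low is None or k >= low, f"key {k} below separator {low}"
        assert high is None or k < high, f"key {k} not below separator {high}"
    if page.is_leaf:
        leaf_depths.add(depth)
    else:
        assert len(page.children) == len(page.keys) + 1, "branch fan-out mismatch"
        bounds = [low, *page.keys, high]
        for i, child in enumerate(page.children):
            verify(child, bounds[i], bounds[i + 1], depth + 1, leaf_depths)
    if depth == 0:
        # A B+Tree is balanced: all leaves sit at the same depth.
        assert len(leaf_depths) == 1, f"leaves at different depths {leaf_depths}"
    return leaf_depths
```

Wrapping a call to `verify` in an ordinary test function, run after a batch of inserts and deletes that force page splits, is enough to have it execute on every CI build.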

One of the things that we teach our new hires is that we need not just working code, but also all of the surrounding infrastructure: everything that I need to work with, diagnose and manage the system in production over long periods of time. I distinctly remember a debugging session where we had to add a whole bunch of diagnostics code to figure out some really gnarly issue. I was pairing with another dev on his machine, and we worked on it for a long time. We finally managed to figure out what the problem was and fix it. I left, and got the final PR with the fix later in the day.

None of the diagnostics code was in it, and when I asked why, the answer was: “We fixed the problem, and we didn’t need it, so I removed it.”

That is not the kind of thing that you remove. That is the kind of thing that you keep, because you can bet your bottom dollar that we’ll need it again when the next problem shows up.