Replication in RavenDB is one of our core features, something that we have had in the product from the very first release (although we did simplify things by several orders of magnitude over the years). Replication is responsible for high availability, load balancing and several other goodies. For the most part, replication works quite well, and it is a lot less complex than some of the other things that grew over the years (LoadDocument, for example). That said, it doesn’t mean that it can’t be improved. And since this is such an important aspect of RavenDB, we spent quite a lot of time looking at what we can do to improve it.

Here are the basic design guidelines:

- RavenDB is going to remain a multi master system, where each node can accept writes and distribute them to its siblings.

- We intend to use Raft for dynamic leader selection, but that is a layer on top of the basic replication.

- That means that RavenDB is an AP system, and needs to handle conflicts.

- We mostly deal with fully connected graphs of relatively small clusters (less than 10 nodes).

- Larger numbers of nodes are quite common, but they don’t use a mesh topology; they typically go for a hierarchy.

This post is going to focus solely on the server side aspects of replication. I’ll do another post about the changes from the client’s perspective.

Probably the first thing that we intend to change is how we track the replication history. Currently, we track the last 50 changes made on a document. This has several problems:

- if there have been more than 50 changes on a document between replication batches, we’ll get a false conflict.

- if the documents are small, in many cases the replication metadata is actually bigger than the document itself.

We are going to move to an explicit vector clock implementation. This is a bit complex, because there are multiple concepts that we need to track concurrently here.

Every time that a document changes, the server generates an etag for that change. This etag is an int64 number that is always increasing. This is used for optimistic concurrency, indexing, etc. The etag value is per server, and cannot be used across servers. Each server has a unique identifier. Joining the two together, whenever a document is changed on a server directly (not via replication), we’ll stamp it with the server id and the current document etag.

In other words, let us imagine the following set of operations happening in a three node cluster.

- Users/1 is created on Server A, it gets an etag of 1 and a vector clock of {A:1}.

- Users/2 is created on Server A, it gets an etag of 2 and a vector clock of {A:2}.

- Users/3 is created on Server C, it gets etag 1 (because etags are local per server) and its vector clock is {C:1}.

Servers A and C both replicate to Server B, and to each other, resulting in the following cluster wide setup:

| | Server A | Server B | Server C |
|---|---|---|---|
| Users/1 | etag 1, {A:1} | etag 1, {A:1} | etag 2, {A:1} |
| Users/2 | etag 2, {A:2} | etag 3, {A:2} | etag 3, {A:2} |
| Users/3 | etag 3, {C:1} | etag 2, {C:1} | etag 1, {C:1} |

Note that the etags assigned for each document are not consistent across the different servers, but that they are temporally consistent with respect to the writes. In other words, Users/1 will always have a lower etag than Users/2.

Now, when we modify Users/3 on server B, we’ll get the following cluster wide picture:

| | Server A | Server B | Server C |
|---|---|---|---|
| Users/1 | etag 1, {A:1} | etag 1, {A:1} | etag 2, {A:1} |
| Users/2 | etag 2, {A:2} | etag 3, {A:2} | etag 3, {A:2} |
| Users/3 | etag 4, {B:4,C:1} | etag 4, {B:4,C:1} | etag 4, {B:4,C:1} |

As I said, only changes made on the server directly (and not via replication) will impact the document’s vector clock, but any modification (via replication or directly on the node) will modify a document’s etag.
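To make the stamping rules concrete, here is a minimal Python sketch that replays the scenario from the two tables. The `Server` class and its `put` / `receive` methods are hypothetical names for illustration, not RavenDB's API, and the arrival order of the replicated documents is just one order that happens to be compatible with the tables.

```python
from dataclasses import dataclass, field


@dataclass
class Server:
    """Toy model of one node: a local etag counter plus its documents."""
    server_id: str
    last_etag: int = 0
    docs: dict = field(default_factory=dict)  # doc id -> (etag, vector clock)

    def _next_etag(self) -> int:
        # Etags are local to this server and always increasing.
        self.last_etag += 1
        return self.last_etag

    def put(self, doc_id: str) -> dict:
        """Direct write: new local etag AND a new entry in the vector clock."""
        etag = self._next_etag()
        _, old_clock = self.docs.get(doc_id, (0, {}))
        clock = {**old_clock, self.server_id: etag}
        self.docs[doc_id] = (etag, clock)
        return clock

    def receive(self, doc_id: str, clock: dict) -> None:
        """Replicated write: new local etag, vector clock kept exactly as sent."""
        self.docs[doc_id] = (self._next_etag(), dict(clock))


a, b, c = Server("A"), Server("B"), Server("C")

u1 = a.put("Users/1")  # {A:1}
u2 = a.put("Users/2")  # {A:2}
u3 = c.put("Users/3")  # {C:1}

# Replicate everything everywhere (first table).
b.receive("Users/1", u1)
b.receive("Users/3", u3)
b.receive("Users/2", u2)
c.receive("Users/1", u1)
c.receive("Users/2", u2)
a.receive("Users/3", u3)

# Users/3 is modified directly on B, then replicated out (second table).
u3 = b.put("Users/3")
a.receive("Users/3", u3)
c.receive("Users/3", u3)
```

After this runs, every server holds Users/3 at etag 4 with a vector clock of {B:4,C:1}, while Users/1 and Users/2 keep their original vector clocks and their per-server etags.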

Using such vector clocks, we gain two major features. First, it is very easy to see if we have conflicting changes. {C:1,B:4} is obviously a parent of {C:4,B:6}, while {C:2,A:6} is a conflict (it has a change from A that the others have never seen, and it is missing the changes from B). The other is that we can now form a very easy view of the kind of changes that we have received. We do that using a server wide vector clock. In the case of the table above, the server wide vector clock would be {A:2,B:4,C:1}. In other words, it will contain the latest etag seen from each server.
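Both operations are small enough to sketch in a few lines of Python. The function names `compare` and `server_wide_clock` are made up for this example, and a server that is missing from a clock is treated as being at etag 0, which is an assumption of the sketch.

```python
def compare(a: dict, b: dict) -> str:
    """Relate vector clock a to vector clock b; a missing entry counts as 0."""
    servers = set(a) | set(b)
    a_behind = any(a.get(s, 0) < b.get(s, 0) for s in servers)
    b_behind = any(b.get(s, 0) < a.get(s, 0) for s in servers)
    if a_behind and b_behind:
        return "conflict"  # each side has changes the other never saw
    if a_behind:
        return "parent"    # a is an ancestor of b
    if b_behind:
        return "child"     # a is a descendant of b
    return "equal"


def server_wide_clock(doc_clocks) -> dict:
    """Highest etag seen from each server, across all document clocks."""
    merged = {}
    for clock in doc_clocks:
        for server, etag in clock.items():
            merged[server] = max(merged.get(server, 0), etag)
    return merged


print(compare({"C": 1, "B": 4}, {"C": 4, "B": 6}))
print(compare({"C": 2, "A": 6}, {"C": 4, "B": 6}))
print(server_wide_clock([{"A": 1}, {"A": 2}, {"B": 4, "C": 1}]))
```

The three calls use the clocks from the paragraph above and from the second table.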

We’ll get to why exactly this is important for us in a bit. For now, just accept that it is, because the next part is about how we are going to actually do the replication. In previous versions of RavenDB, we did each replication batch through a separate REST call to the remote server. This has a few disadvantages. It meant that we had to authenticate every single time, and we couldn’t make any assumptions about the state of the remote server.

In RavenDB 4.0, we intend to move replication to use WebSockets only. On startup, a node will connect to all its siblings, and stay connected to them (retrying the connection on any interruption). This has the nice benefit of only doing authentication once, of course, but far more interesting from our perspective is the fact that it means that we can rely on the state of the server on the other side. TCP (which WebSockets run on top of) has a few very interesting properties here for us. In particular, it guarantees that we’ll have ordered delivery of messages. Which means that we can assume that once we’ve sent a message to a server on a TCP connection, it either got it, or the TCP connection will return an error at some point, forcing us to reconnect.

In other words, it isn’t just authentication that I can do just once. I can also query the remote server for its state (as it regards me), and since I’m the only one that can talk as myself, and I’m the one sending the details, as long as the connection lasts, I know what the other side knows about me. Confusing, isn’t it?

But basically it means that instead of having to ask on each batch what is the last document that the destination server saw of me, I can assume that the last document that I sent was received. That lasts until the connection breaks, in which case I need to figure out what actually arrived. This seems like a small thing, but this will actually allow me to reduce the number of roundtrips for a batch by half. There are other aspects here that are really nice. I get to piggyback on TCP’s congestion control, so if the remote server is slow in accepting updates, it will (eventually) reflect as a blocking write on my end. That seems like a bad thing, right? But this is actually what I want.

Each destination server in RavenDB 4.0 is going to get its own dedicated thread. This thread will manage all outgoing communication with this server. That gives us several really important behaviors. It means that we can easily account for problems by just looking at the thread responsible (hm… I see that replication to node C is consuming a lot of CPU) and it also turns the entire replication process into a pretty simple single threaded operation. Because of the blittable format, we don’t need complex prefetching strategies or sharing of memory in the replication, and a slow node will not impact any other replication behavior. That, in turn, basically means a thread per connection (see previous discussion on the expected number of nodes being relatively small) and a very simple programming / error handling / communication model.

The replication sending logic goes something like this:

Yes, my scratch pad language is still Boo (think Python, if you aren’t familiar with it), and this is meant to convey how simple that thing is. All the complexity that we currently have to deal with is out. Of course, the real code will need to have error handling, reconnection logic, etc, but that is roughly all you’ll need.
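For a feel of the shape of such a loop, here is a minimal Python sketch of the sending side for a single destination. Only WaitForMoreDocuments() is a name taken from this post (it appears here as a `wait_for_more_documents` callable). `ReplicationSender`, `InMemoryStorage`, `FakeConnection` and all of their methods are hypothetical stand-ins, so treat this as an illustration of the idea under those assumptions and not as the actual RavenDB code.

```python
import threading


class InMemoryStorage:
    """Hypothetical stand-in for the local document store."""

    def __init__(self):
        self.docs = []  # (etag, doc_id) tuples, ordered by local etag

    def documents_after(self, etag):
        return [doc for doc in self.docs if doc[0] > etag]


class FakeConnection:
    """Hypothetical stand-in for the long-lived connection to one sibling."""

    def __init__(self):
        self.received = []

    def get_last_etag_from_me(self):
        # Asked once, when the connection is established.
        return self.received[-1][0] if self.received else 0

    def send(self, batch):
        self.received.extend(batch)


class ReplicationSender:
    """Outgoing replication to one destination, meant to run on its own thread."""

    def __init__(self, connection, storage, wait_for_more_documents):
        self.connection = connection
        self.storage = storage
        self.wait_for_more_documents = wait_for_more_documents
        self.stopped = threading.Event()
        self.last_sent_etag = connection.get_last_etag_from_me()

    def send_pending(self):
        """Send everything after the last etag we sent; True if anything went out."""
        batch = self.storage.documents_after(self.last_sent_etag)
        if not batch:
            return False
        self.connection.send(batch)
        # No extra round trip: the connection either delivers or errors out.
        self.last_sent_etag = batch[-1][0]
        return True

    def run(self):
        # The whole loop: send what we have, otherwise wait for more.
        while not self.stopped.is_set():
            if not self.send_pending():
                self.wait_for_more_documents()


storage = InMemoryStorage()
storage.docs += [(1, "Users/1"), (2, "Users/2")]
connection = FakeConnection()
sender = ReplicationSender(connection, storage, wait_for_more_documents=lambda: None)
sender.send_pending()
```

In a real setup, `run` would be started once per destination with something like `threading.Thread(target=sender.run, daemon=True)`, and `wait_for_more_documents` would block instead of returning immediately. Error handling and reconnection are left out on purpose.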

Actually, that is a lie. The problem with the code above is that it doesn’t work well with multiple servers. In other words, it is perfect for two nodes, replicating to one another, but when you have multiple nodes, you don’t want a single document update to replicate from each node to every other node. That is why we have the concept of vector clocks. At the document level, this serves as an easy way to detect conflicts and see what version of a document is causally later than another version of a document. But on the server level, we gather the latest writes from all nodes that we saw to get the server wide vector clock.

When a document is modified on a server, that server will immediately send that document to all its siblings. Because there is no way that they already have it. But if a document was replicated to a node, it will not start replicating right away. Instead, it will let a set amount of time go by (defaulting to once a minute) and then ask each sibling what is the latest server wide vector clock that it is aware of. If the remote vector clock is equal to or higher than the local server wide vector clock, then we know that they are up to date. In this case, the local server will let the remote server know that they are a match to the current etag on that server.

If, however, the remote vector clock is smaller than (or conflicting with) the local one, then we need to send the relevant documents. We already know what is the last etag that the remote server has from us (we negotiated that when we established the connection, and we updated it every time we sent a document to the remote server). Since we have the current vector clock from the remote server, we aren’t going to just blindly send all documents after the last etag we sent to the remote server. Instead, we are going to check each of those to see if the vector clock for the document is larger than (or conflicting with) the remote server’s vector clock. In this way, we can send the remote server only the documents that it doesn’t have.
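That filtering step can be sketched in Python as follows. The names `dominated_by` and `documents_to_send` are hypothetical, and documents are modeled as plain `(etag, doc_id, vector_clock)` tuples for the sake of the example.

```python
def dominated_by(doc_clock: dict, remote_clock: dict) -> bool:
    """True when the remote has already seen every change in doc_clock."""
    return all(
        etag <= remote_clock.get(server, 0)
        for server, etag in doc_clock.items()
    )


def documents_to_send(docs, last_sent_etag: int, remote_clock: dict) -> list:
    """Pick the documents the remote is missing.

    docs: iterable of (etag, doc_id, vector_clock), ordered by local etag.
    A document is skipped when its vector clock is fully covered by the
    remote server wide clock; larger or conflicting clocks are sent.
    """
    return [
        (etag, doc_id, clock)
        for etag, doc_id, clock in docs
        if etag > last_sent_etag and not dominated_by(clock, remote_clock)
    ]


# Server B's documents from the second table, in B's etag order.
docs_on_b = [
    (1, "Users/1", {"A": 1}),
    (3, "Users/2", {"A": 2}),
    (4, "Users/3", {"B": 4, "C": 1}),
]

# Server C already has everything from A and from itself, nothing from B.
to_send = documents_to_send(docs_on_b, last_sent_etag=0, remote_clock={"A": 2, "C": 1})
print(to_send)
```

Even though B has never sent anything to C in this example, only Users/3 goes over the wire, because C's server wide vector clock shows that it already has the other two documents.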

What about delayed servers? If we had a new node in the cluster, and we just started replicating to it, what happens when a new document is being written? Above, I mentioned that the server that was written to will immediately send the document to all its siblings, but that is an oversimplification. An extremely important property of RavenDB replication is that documents are always replicated in the order the server saw them (either written to it directly, or the order they were replicated to it). If we allow a server to replicate documents directly to another server, that might break this guarantee. Looking at the code above, it will also require us to write a separate code path to handle such things. But that is the beauty in this design. All of this logic is actually encapsulated in WaitForMoreDocuments(). You can think of WaitForMoreDocuments() as a simple manual reset event. Whenever a document is written to a server directly, it will be set. But not when a document is replicated to us.

So WaitForMoreDocuments() will basically wait for a document to be written to us, or a timeout, in which case it will check with its sibling for new stuff that needs to go over the wire because it was replicated to us. But the code is the same code, and the behavior is the same. If we are busy sending data to a new server? We’ll still set the event, but that will have no effect on the actual behavior. And when we are working with a fully caught up server, the act of writing a single document will immediately free the replication threads to start sending it to the sibling. All the desired behaviors, and very little actual complexity.
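Python's `threading.Event` behaves like a manual reset event, so the idea can be sketched with it directly. The `ReplicationSignal` class and its method names are hypothetical, and the sketch assumes one signal per outgoing connection.

```python
import threading


class ReplicationSignal:
    """Wakes a replication thread on direct writes, or after a timeout."""

    def __init__(self, timeout_seconds: float = 60.0):
        self._event = threading.Event()
        self.timeout_seconds = timeout_seconds

    def document_written_directly(self) -> None:
        # A write made on this server: wake the sender right away.
        self._event.set()

    def document_replicated_to_us(self) -> None:
        # Deliberately does nothing: replicated documents wait for the timeout.
        pass

    def wait_for_more_documents(self) -> bool:
        """Block until signalled or timed out; True means a direct write woke us."""
        signalled = self._event.wait(self.timeout_seconds)
        self._event.clear()  # manual reset, ready for the next round
        return signalled


signal = ReplicationSignal(timeout_seconds=0.01)

signal.document_replicated_to_us()
woke_for_replicated = signal.wait_for_more_documents()  # times out

signal.document_written_directly()
woke_for_direct = signal.wait_for_more_documents()  # returns immediately
```

Either way the caller does the same thing after waking up, which is to go back and look for documents to send. The return value is only there to make the two paths visible in the example.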

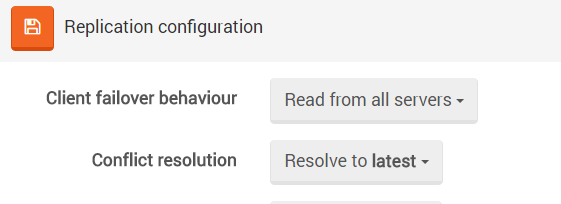

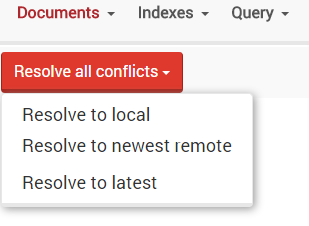

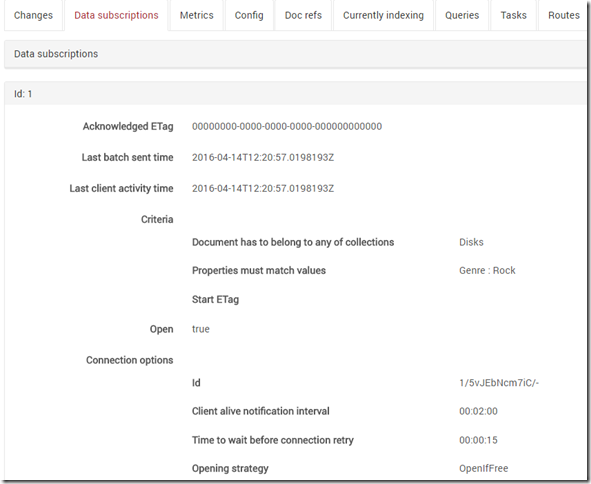

On the receiving end, we get just the documents we don’t have, as well as the last etag from that source server (which we’ll keep in persistent storage). Whenever we get a new document, we’ll check if it is conflicting. If so, we’ll mark the document as conflicting and allow the user to define default strategies to handle that (latest, resolve to remote, resolve to local). But we are also going to allow the user to define a JavaScript function that will merge the conflicted documents directly. This way you can have your business logic for the resolution directly on the server, and you’ll never actually see any conflicts externally.
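A rough Python sketch of how those resolution options could fit together. Everything here is an assumption made for illustration: the `resolve` function, the strategy names as strings, the `last_modified` field used by the latest strategy, and a Python callable standing in for the user supplied JavaScript merge function.

```python
from typing import Callable, Optional


def resolve(
    local: dict,
    remote: dict,
    strategy: Optional[str] = None,
    merge: Optional[Callable[[dict, dict], dict]] = None,
) -> Optional[dict]:
    """Pick a winner for two conflicting versions, or None to stay conflicted."""
    if merge is not None:
        # User supplied business logic wins over the default strategies.
        return merge(local, remote)
    if strategy == "resolve_to_local":
        return local
    if strategy == "resolve_to_remote":
        return remote
    if strategy == "latest":
        # Assumes each version carries a comparable last_modified value.
        return max(local, remote, key=lambda doc: doc["last_modified"])
    return None  # no strategy configured: leave the document marked as conflicting


def merge_tags(local: dict, remote: dict) -> dict:
    """Example merge: keep the newer fields, but union the tags of both sides."""
    newest = max(local, remote, key=lambda doc: doc["last_modified"])
    return {**newest, "tags": sorted(set(local["tags"]) | set(remote["tags"]))}


local = {"name": "Oren", "tags": ["admin"], "last_modified": 10}
remote = {"name": "Oren E.", "tags": ["dev"], "last_modified": 20}

merged = resolve(local, remote, merge=merge_tags)
```

With the merge function in place, both sets of tags survive and nothing is left marked as conflicting.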

There are quite a lot of small details that I’m skipping, but this is already long enough, and should give you a pretty good idea about where we are headed.

I recently ran into this blog post, Scaling to 100M: MySQL is a Better NoSQL (from about 6 months ago), and cringed, hard. Go ahead and read it, I’ll wait. There is so much stuff going on here that I disagree with that I barely even know where to start.
