From the get-go, we built RavenDB to be as easy to use as possible. Quite explicitly, we aimed to make it as simple as possible for anyone familiar with .NET. Part of the fun is looking at people’s expectations, and sometimes answering them is a pleasure, such as this one:

This exchange is amusing because RavenDB doesn’t have the concept of expensive queries. For the most part, queries merely scan an already computed index. RavenDB does no computation and very little work to answer your queries, so the notion of an expensive query is meaningless: there aren’t any.
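To make the point concrete, here is a minimal sketch in Python. It is a toy model, not RavenDB’s client API or its actual indexing engine, and `TinyIndex` and its methods are hypothetical names. The cost is paid once per document when it is indexed, so answering a query is a plain lookup:

```python
from collections import defaultdict


class TinyIndex:
    """Hypothetical, heavily simplified index: field value -> document ids."""

    def __init__(self, field):
        self.field = field
        self.entries = defaultdict(set)

    def index_document(self, doc_id, doc):
        # The work happens here, once per written document...
        self.entries[doc[self.field]].add(doc_id)

    def query(self, value):
        # ...so answering a query is only a lookup into precomputed entries.
        # dict.get does not insert a key, so querying never mutates the index.
        return sorted(self.entries.get(value, ()))


docs = {
    "users/1": {"name": "Alice", "city": "London"},
    "users/2": {"name": "Bob", "city": "Paris"},
    "users/3": {"name": "Carol", "city": "London"},
}

index = TinyIndex("city")
for doc_id, doc in docs.items():
    index.index_document(doc_id, doc)

print(index.query("London"))  # ['users/1', 'users/3']
print(index.query("Berlin"))  # []
```

Real RavenDB indexes are far richer than this, but the division of labor is the same: computation at indexing time, cheap reads at query time.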

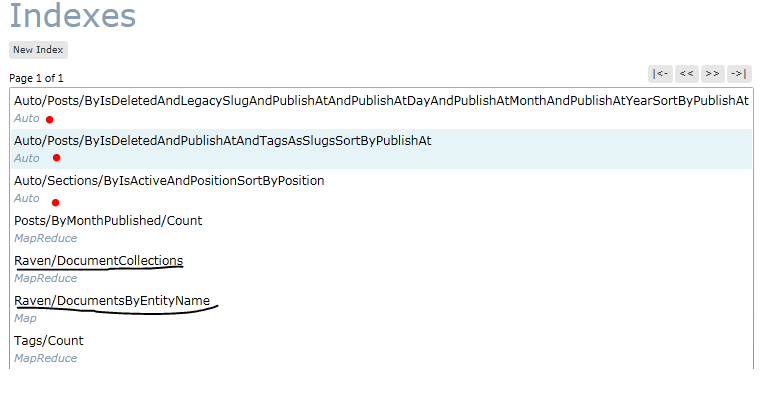

That said, this behavior does come at a cost: we have to do the work of computing the indexes at some stage, and we have chosen to do so in the background. That, in turn, means queries may return stale results. As it turns out, there are surprisingly few cases where your application can’t tolerate some staleness, which makes RavenDB well suited to a large number of scenarios.

There is, however, one common scenario where this is annoying: the Create → List flow. As a user, I want to complete the Create screen:

And then I want to see the List screen:

Naturally, I expect to see the item I just created in the list. This is the one scenario where people usually run into RavenDB’s consistency model, and it has drawn a few complaints every now and then. If the timing is just wrong, the next request can arrive before the new document has been indexed, and the list is missing the item you just added.
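The race can be reproduced with a toy model. This is a hypothetical Python sketch, not RavenDB code: `Store`, `run_indexer` and `query_all` are illustrative names, and the background indexer is replaced by an explicit call so that the timing is deterministic.

```python
class Store:
    """Hypothetical model of a store whose index is updated in the background."""

    def __init__(self):
        self.docs = {}
        self.pending = []     # ids written but not indexed yet
        self.indexed = set()  # ids the index already knows about

    def store(self, doc_id, doc):
        # Writes are accepted (and loadable by id) immediately.
        self.docs[doc_id] = doc
        self.pending.append(doc_id)

    def run_indexer(self):
        # Stands in for one pass of the background indexing task.
        self.indexed.update(self.pending)
        self.pending.clear()

    def query_all(self):
        # Queries read only the index; the flag reports whether it is stale.
        return sorted(self.indexed), bool(self.pending)


store = Store()
store.store("orders/1", {"total": 10})
store.run_indexer()

# Create screen: the user saves a new order...
store.store("orders/2", {"total": 25})
# ...and the List screen queries before the indexer has caught up.
print(store.query_all())  # (['orders/1'], True)

store.run_indexer()
print(store.query_all())  # (['orders/1', 'orders/2'], False)
```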

The usual advice was to add WaitForNonStaleResultsAsOfNow() to the query, which resolved the issue, so we didn’t consider it any further. It was just one of those things you had to understand about the way RavenDB worked.

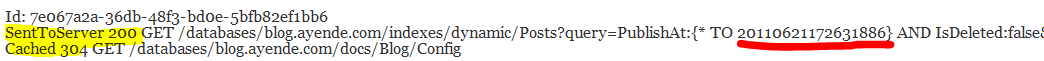

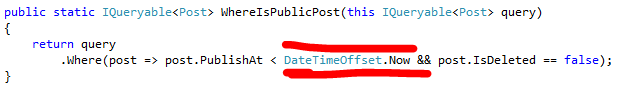

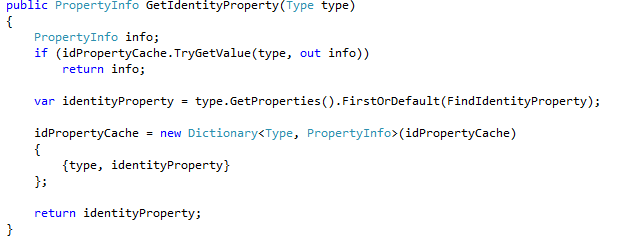

Recently, however, we got a bug report for this exact scenario, and WaitForNonStaleResultsAsOfNow() wasn’t an answer: the user was already using it, and it wasn’t working. We eventually figured out that the problem was that the clocks on the server and client machines were out of sync. That forced us to reconsider how to approach this. After looking at several alternatives, we ended up creating a new consistency model for RavenDB.
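A simplified model shows how clock skew can defeat an “as of now” cutoff. It assumes, for illustration only, that the cutoff is the client’s current time while documents are stamped with the server’s clock; the class, function names and timestamps are hypothetical, and waiting is modelled by indexing pending documents one at a time.

```python
class Server:
    """Hypothetical model: documents are stamped with the *server's* clock."""

    def __init__(self, clock_offset):
        self.clock_offset = clock_offset  # server clock minus true time
        self.modified = {}                # doc id -> server timestamp
        self.indexed = set()

    def store(self, doc_id, true_time):
        self.modified[doc_id] = true_time + self.clock_offset

    def is_stale_as_of(self, cutoff):
        # Stale only if a document modified at or before the cutoff is unindexed.
        return any(ts <= cutoff and d not in self.indexed
                   for d, ts in self.modified.items())

    def query_as_of(self, cutoff):
        # "Waiting": let the indexer process the oldest pending document.
        while self.is_stale_as_of(cutoff):
            _, doc_id = min((ts, d) for d, ts in self.modified.items()
                            if d not in self.indexed)
            self.indexed.add(doc_id)
        return sorted(self.indexed)


def create_then_list(server_clock_offset, client_clock_offset=0):
    server = Server(server_clock_offset)
    server.store("items/1", true_time=100)  # Create screen
    cutoff = 101 + client_clock_offset      # List screen: the client's "now"
    return server.query_as_of(cutoff)


print(create_then_list(server_clock_offset=0))  # ['items/1']
print(create_then_list(server_clock_offset=5))  # []  (server clock 5s ahead)
```

With the server clock ahead, the new document looks newer than the client’s “now”, so the index is considered fresh enough and the query returns without it.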

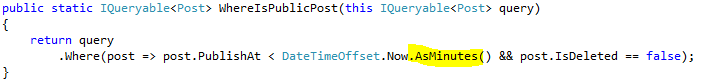

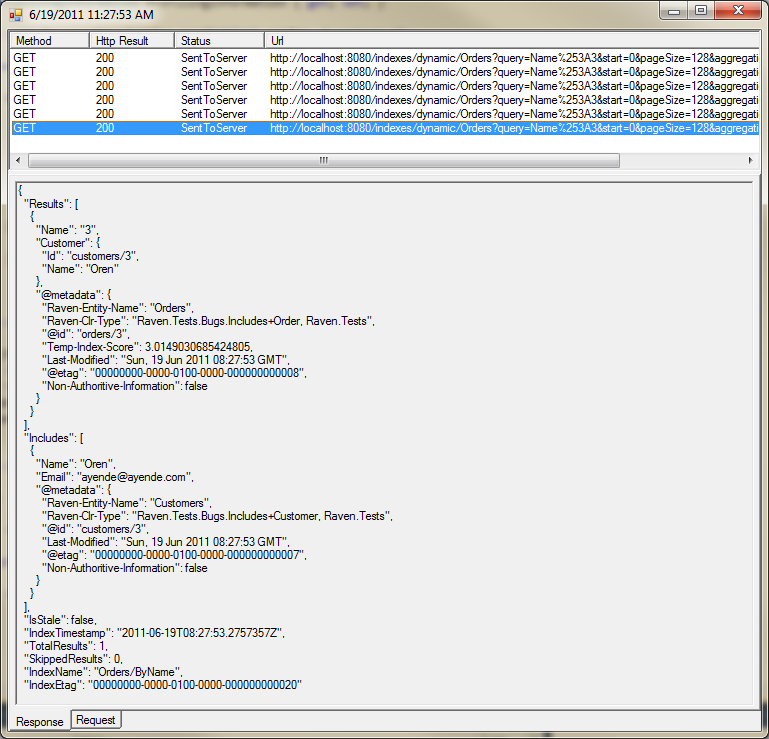

This consistency model guarantees a very simple property: “you can query anything that you have written.” Instead of relying on the time being the same everywhere, we use etags: the server reports an etag back for each write, and the client sends the last one it received to the server along with its queries. With this approach, you can mostly ignore the difference between RavenDB and a traditional relational database, since externally it behaves in much the same fashion.
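Here is a hypothetical sketch of the etag approach in the same toy style (Python, not the actual RavenDB client; `Server`, `Session` and `wait_for_etag` are illustrative names). The server hands out a monotonically increasing etag per write, the session remembers the last one it received, and a query waits until the index has caught up to that etag. No clock appears anywhere.

```python
class Server:
    """Hypothetical model: every write gets a monotonically increasing etag."""

    def __init__(self):
        self.last_etag = 0
        self.etags = {}             # doc id -> etag of its latest write
        self.last_indexed_etag = 0  # the index is up to date up to this etag

    def store(self, doc_id):
        self.last_etag += 1
        self.etags[doc_id] = self.last_etag
        return self.last_etag  # reported back to the client

    def query(self, wait_for_etag=0):
        # Wait (modelled as indexing one write at a time) until the index
        # has caught up with the requested etag.
        while self.last_indexed_etag < wait_for_etag:
            self.last_indexed_etag += 1
        return sorted(d for d, e in self.etags.items() if e <= self.last_indexed_etag)


class Session:
    """Hypothetical client session that remembers its own last write."""

    def __init__(self, server):
        self.server = server
        self.last_written_etag = 0

    def store(self, doc_id):
        self.last_written_etag = self.server.store(doc_id)

    def query(self):
        return self.server.query(wait_for_etag=self.last_written_etag)


session = Session(Server())
session.store("items/1")  # Create screen
print(session.query())    # ['items/1'], whatever the clocks say
```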

You can enable this mode on a per query basis:

Or you can enable this for all queries:
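The choice between a per-query switch and a default for all queries can be sketched in the same toy model. The names `query_your_writes` and `query_your_writes_by_default` are hypothetical and are not RavenDB’s API; the point is only that an explicit per-query setting overrides the default.

```python
class Server:
    """Minimal hypothetical etag-based server, as in the toy model."""

    def __init__(self):
        self.last_etag = 0
        self.last_indexed_etag = 0
        self.etags = {}

    def store(self, doc_id):
        self.last_etag += 1
        self.etags[doc_id] = self.last_etag
        return self.last_etag

    def query(self, wait_for_etag=0):
        while self.last_indexed_etag < wait_for_etag:
            self.last_indexed_etag += 1
        return sorted(d for d, e in self.etags.items() if e <= self.last_indexed_etag)


class Session:
    def __init__(self, server, query_your_writes_by_default=False):
        # Hypothetical global switch, standing in for a store-wide default.
        self.server = server
        self.query_your_writes_by_default = query_your_writes_by_default
        self.last_written_etag = 0

    def store(self, doc_id):
        self.last_written_etag = self.server.store(doc_id)

    def query(self, query_your_writes=None):
        # An explicit per-query setting wins; otherwise use the default.
        if query_your_writes is None:
            query_your_writes = self.query_your_writes_by_default
        etag = self.last_written_etag if query_your_writes else 0
        return self.server.query(wait_for_etag=etag)


per_query = Session(Server())
per_query.store("items/1")
print(per_query.query())                        # [] (no waiting requested)
print(per_query.query(query_your_writes=True))  # ['items/1']

everywhere = Session(Server(), query_your_writes_by_default=True)
everywhere.store("items/1")
print(everywhere.query())                       # ['items/1']
```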

I am pretty proud of this feature, since it simplifies things a lot for most users and provides a very effective (and simple) way to approach consistency. This is especially true when multiple clients work against the same database, which was the case in the reported issue.

Whenever a client writes, its queries have to wait for its own changes to be indexed, but they won’t have to wait for changes from other clients. That matches the user’s expectations, and it also allows RavenDB to answer most queries without any waiting whatsoever.
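The cost argument can be checked with a toy model that counts how many indexing steps a query waits for (hypothetical Python, not RavenDB code). In this model, etags are ordered, so waiting for your own etag also covers any earlier writes, but never the writes that other clients make afterwards:

```python
class Server:
    """Hypothetical etag-based model that counts how long a query waited."""

    def __init__(self):
        self.last_etag = 0
        self.last_indexed_etag = 0

    def store(self):
        self.last_etag += 1
        return self.last_etag

    def query(self, wait_for_etag=0):
        waited = 0
        while self.last_indexed_etag < wait_for_etag:
            self.last_indexed_etag += 1  # one write indexed while the query waits
            waited += 1
        return waited


server = Server()
bob = server.store()                               # Bob saves one item: etag 1
alice = [server.store() for _ in range(1000)][-1]  # Alice then saves 1,000: etags 2..1001

print(server.query(wait_for_etag=bob))    # 1: Bob waits for his write, not Alice's
print(server.query())                     # 0: a client with no writes never waits
print(server.query(wait_for_etag=alice))  # 1000: Alice waits for her own writes
```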