I want to generate API documentation for Raven DB. I decided to take the plunge and generate XML documentation, and to ensure that I’ll be good, I marked the “Warnings as Errors” checkbox.

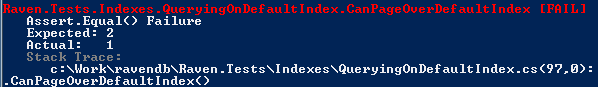

864 compiler errors later (I spiked this with a single project), I had a compiling build and pretty good initial XML documentation. The next step was to decide how to go from the raw XML documentation to human-readable documentation.
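For reference, the two settings involved end up in the project file roughly as shown here. This is a minimal sketch, not my actual project file: the output path and the `MyProject` name are placeholders. With both settings on, every public member without an XML comment most likely surfaces as warning CS1591, which then gets promoted to an error.

```xml
<!-- Sketch of the relevant MSBuild properties; path and file name are hypothetical -->
<PropertyGroup>
  <!-- Tells the compiler to emit the XML documentation file -->
  <DocumentationFile>bin\Debug\MyProject.xml</DocumentationFile>
  <!-- The "Warnings as Errors" checkbox: missing XML comments now break the build -->
  <TreatWarningsAsErrors>true</TreatWarningsAsErrors>
</PropertyGroup>
```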

Almost by default, I checked Sandcastle first. Unfortunately, I couldn’t figure out how to get it working at all. Sandcastle Help File Builder seems the way to go, though, and using that GUI, I had a working project in a minute or two. There are a lot of options, but I just went with the default ones and hit build. While it was building, I had the following thoughts:

- I didn’t like that it required installation.

- I didn’t know whether you can easily put it in a build script.

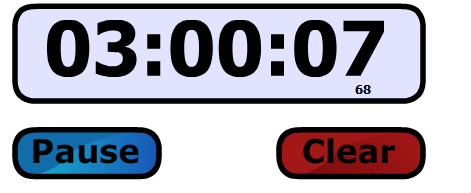

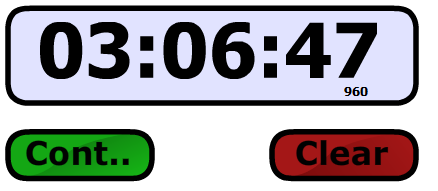

The problem was that generating the documentation (a CHM) took over three and a half minutes.

I then tried Docu. It didn’t work, saying that my assembly was in the wrong format. It took me a while to figure out exactly why, but eventually it dawned on me that Raven is a .NET 4.0 project, while Docu targets 3.5. I tried recompiling Docu for 4.0, but it gave me an error there as well, something about a duplicate mscorlib, at which point I decided to drop it. That was also because I didn’t really like the format of the API documentation it generated, but that is beside the point.

I then tried doxygen, which I have used in the past. The problem here is the sheer number of options you have. Luckily, it is very simple to set up using Doxywizard, and the generated documentation looks pretty nice as well. It also takes a while, but it is significantly faster than Sandcastle.
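To give a feel for how few of those options you actually need, here is a rough sketch of a minimal configuration file for a C# code base. It is not the file Doxywizard produced for me: the project name, input path, and output directory are assumptions for illustration, and every option not listed falls back to doxygen's default.

```
# Doxyfile (sketch): project name and paths are hypothetical
PROJECT_NAME           = "MyProject"
# Where the C# sources live, scanned recursively
INPUT                  = ./src
FILE_PATTERNS          = *.cs
RECURSIVE              = YES
# HTML only; skip the LaTeX output
OUTPUT_DIRECTORY       = ./docs/api
GENERATE_HTML          = YES
GENERATE_LATEX         = NO
```

Running `doxygen Doxyfile` from the command line picks this up, so it should also fit into a build script without a GUI, and `doxygen -g` writes out a fully commented template if you want to see everything that is available.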

Next on the list was MonoDoc. I could generate the initial set of XML files, but I was unable to run the actual mono doc browser. I am not quite sure why that happened, but it kept complaining that the result was too large.

I also checked VSDocman, which is pretty nice.

All in all, I think that I’ll go with doxygen. It is the simplest by far, and out of the box it generates HTML output that I really like.