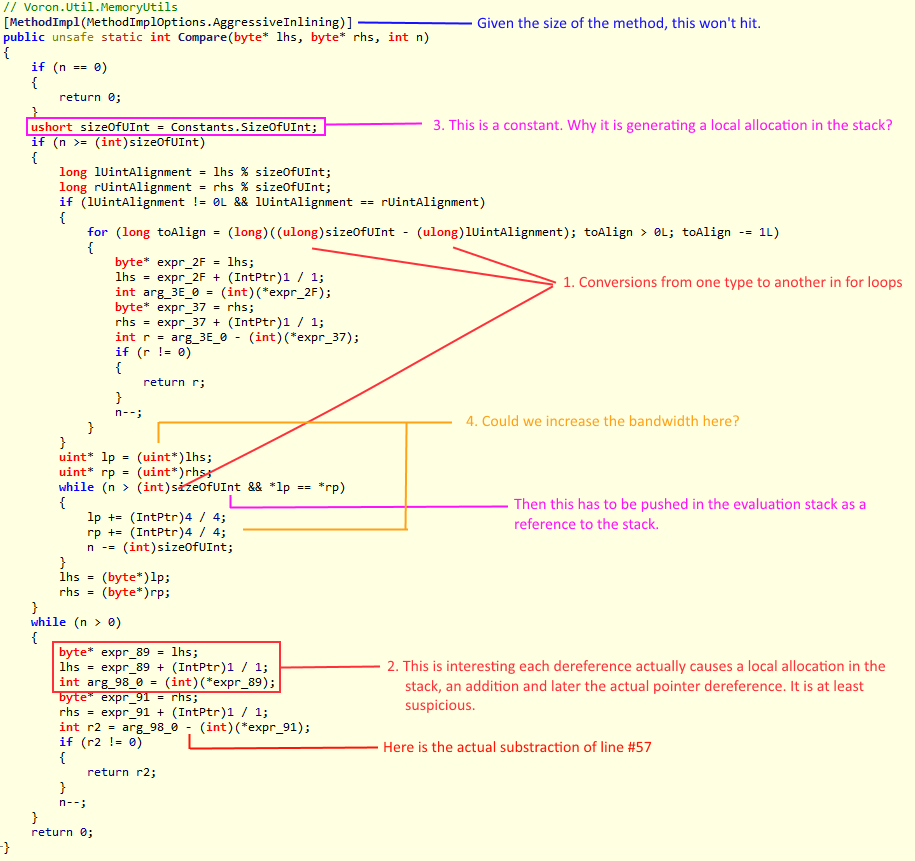

Note, this post was written by Federico. Where I had notes or stuff to extend, I explicitly marked it as such. In the previous post after inspecting the decompiled source using ILSpy we were able to uncover potential things we could do.

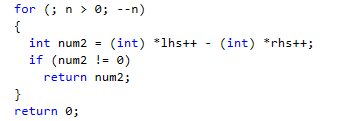

Did you remember how dotPeek and ILSpy didn’t agree on the last for-loop?

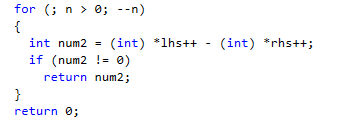

dotPeek

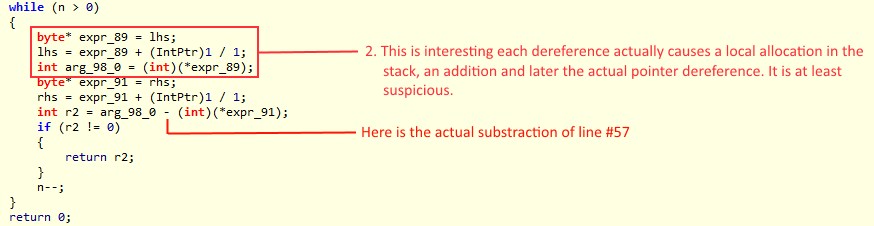

ILSpy

Well to really know which one is right, lets dig deeper. Looks like dotPeek is just too smart for our purposes.

MSIL is an stack machine, so everything has to be pushed to the stack to be operated. And the lower you go the less context you have to make optimization choices. The compiler knows a lot more, therefore it can make sensible choices that the JIT can’t. Well this is one of those, the problem here is that the compiler is treating those native memory references in a very “un-native” way, leaving small room to the JIT to do its magic. Therefore we are going to give the compiler a nudge to point him in the right direction.

We know that most architecture have a set of indexed instructions that will allow you to load from memory at a base address plus an offset and special ones optimized to operate with constants. Yeah all that magic in a single opcode.

Therefore if we can find a way that the compiler would emit such a sequence, there is a high chance that the JIT will understand it and emit such a load statement. What could appear to be a long shot is actually quite easy. Instead of doing pointer arithmetic (pre/post increment and dereferencing) as usual, we will do something we would never do in C/C++; we will just ask for it at face value.

So what would be:

var v = *(lhs++) – *(rhs++);

Now becomes:

var v = lhs[0] - rhs[0]; lhs++; rhs++;

What if we need the next one?

var v = lhs[1] - rhs[1];

And so on… However, that is true if and only if the number can be loaded into the stack using an special short instruction (a shortcut) that encodes the value to load as a named constant.

Why this work?

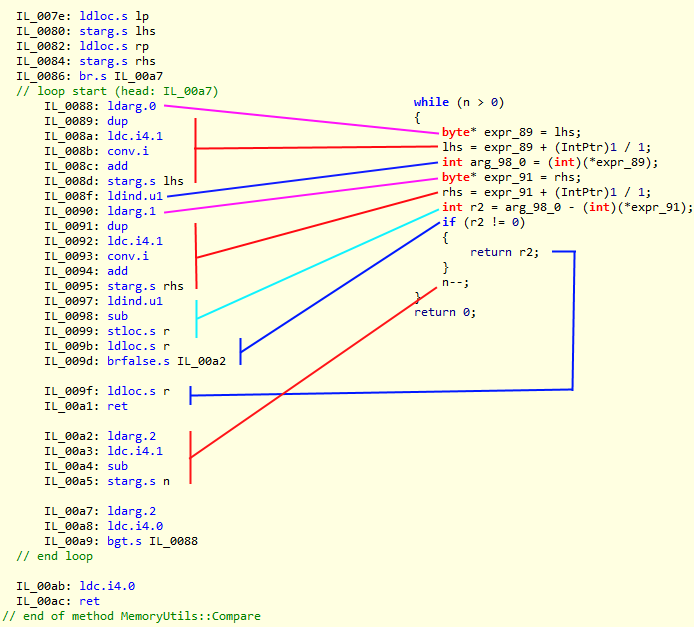

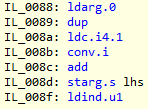

Because the MSIL pattern is unequivocal:

We push the first pointer (lhs) We load a byte from it and put it into an int32 register in the stack We push the second pointer (rhs) We load a byte from it and put it into an int32 register in the stack We subtract the two loaded int32. We store it into an stack variable (v) We load it into the stack from (v) We check if it is distinct from (0)

The JIT now can figure out how to optimize this with a load + offset instruction easily. Moreover the offset is also a constant, anyone said “special opcode”?. Now let’s compare the IL code from each approach.

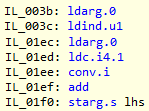

Before Optimization:

After Optimization

While the amount of instructions is the same and the avid reader would have figured out by now; the code is not that different either.

However, the former translate to far more native instructions than the latter. Why? We will have to ask the JIT or the compiler guys, but my hypothesis is that the first version requires a much more deeper analysis than the second and in an effort to keep the JIT overhead low, that pattern can’t be optimized so much.

The bottom line is: “Do not optimize pointers in C# as you do in C/C++. Translating an optimized algorithm that uses pointers from C/C++ to C# will not be optimal.”

Remember this, it will make sense soon, because in the next post, we’ll tie it all together.