![]() As we continuously optimized more and more of our code, we kept seeing faster and faster benchmarks. In fact, the more we optimized, the faster we became. One would think that there is some sort of correlation there.

As we continuously optimized more and more of our code, we kept seeing faster and faster benchmarks. In fact, the more we optimized, the faster we became. One would think that there is some sort of correlation there.

However, that is a mere theory that can be disproven, as this story will demonstrate.

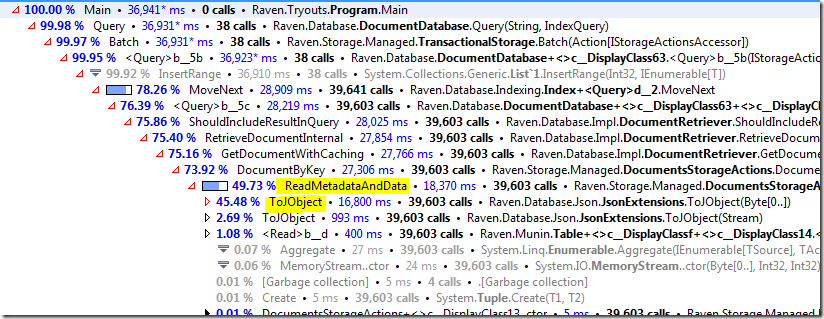

When optimizing, you eventually expects to get into the land of diminishing returns. But something very strange happened, we have made a few changes, the each should have brought our speed up by a significant percentage, we had the micro benchmarks to prove that this is the case, and we were even able to see that the code was running much faster than before, but the overall benchmark time kept growing, and we started seeing higher and higher stalls in the process.

That… sucked. Especially because we couldn’t figure out what was going on. Every single metric we could see was saying that we should be seeing higher speed, our disk usage went up, our CPU usage went up a bit, we increased our memory buffers from 32 MB to 1GB, and every single indication we had told us that we are faster on a per operation basis. But the entire thing just started slowing down more and more.

Frustratingly, there was nothing we could really sink our teeth into. The system would just go into stalls and do nothing. We got to the point it looked like we broke the operating system, but nothing helped, stuff just didn’t want to work. It looked like we were waiting for I/O, but tracing at the syscall level showed that we were getting much faster response from the hardware than we saw in the application. Somewhere, stuff was getting lost.

Eventually we managed to track it down to the offending line:

So this is pretty obvious, right? We are waiting, so we are slow. But this line is called from a completely different part of the code, and it isn’t blocking anything else in the code path that is suffering from stalls. The key here is that this line is called from:

Still fine, right? We threw that into the thread pool, so it is fine to wait. But…

The line above is responsible for releasing our threads when the I/O operation has completed. Note that it needs to run on the thread pool as well, but because we are now much faster, we now have a lot of threads that are stuck in the call to SyncEnvironment, that overloaded the thread pool, and meant that the notification that we can proceed would come very late. We missed it in all of our profiling because we didn’t look at that code path at all, since it was obviously unrelated to the issue at hand.