The topic of this post is a bug in RavenDB, a pretty serious one. The end result is that a user reported that they got an error from RavenDB that they are unable to read a stored document. In some cases, RavenDB needs to read a document on startup, which means that it wasn’t able to start up if that document had this behavior.

As you can imagine, this is one of those issues that gets our full and immediate attention. The error itself gave us a lot of information:

Dictionary mismatch on Dic #375

at Voron.Data.Tables.ZstdLib.AssertSuccess(UIntPtr v, CompressionDictionary dictionary)

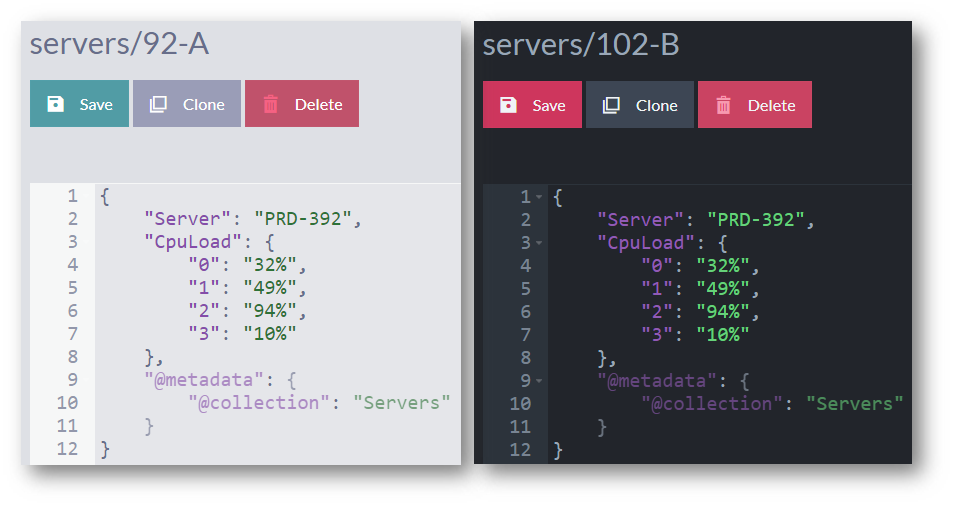

This is related to RavenDB’s document compression behavior. In order to get a great compression ratio from our documents, we train RavenDB on the recent documents that you have and generate a compression dictionary. The problem at hand is that the compression dictionary we have and the compression dictionary that was actually used are different. As you can see from the error, we are using zstd as the compression algorithm. When zstd generates a dictionary it will (by default) generate an id from that document that is mostly based on the xxhash64 of its content, rounded to 32 bits. You can see the relevant part here. This is pretty nice, since it means that there is a good chance that we’ll detect the wrong dictionary.

So now we know what is going on, but we don’t understand why.

When we wrote this feature, we were quite aware that we’ll not be able to make any sort of sense from the documents if we don’t have the right dictionary. For that reason, we store the dictionaries three times. Once inside of RavenDB itself and twice in ancillary files, which we can use during recovery. This sort of error should be utterly impossible. And yet, we had run into that in production, so we have to dig deeper still.

The primary suspect was the dictionary training portion. One of the things that RavenDB does on a continuous basis is measure the compression ratio of the documents, if we aren’t able to hit a good compression ratio, RavenDB will try to generate a new dictionary from the most recent documents and see if that new dictionary can do better. This can be very helpful in maintaining good compression rates. As your documents change, RavenDB will detect that and realize that it can do better, retrain on the recent data and compress even further. The problem is that this code path is also quite tricky, we first compress the document using the current dictionary, then we try generating a new dictionary and see if compressing with the new dictionary is better. If that is the case, we can install the new dictionary for future operations, otherwise, we need to discard it.

I suspected that the issue was somewhere around that area, we might not be handling the rejection of the new dictionary properly. So I went into the code and started digging, but I found absolutely nothing. The entire process is covered in tests and has been in production for close to 18 months, so this isn’t something that obvious.

After spending quite a bit of time on the issue, I decided that the code is perfect, it handled everything properly and taken into account all the right behaviors.

Clearly the fault was elsewhere. Before setting out to blame the nearest cat (you can never trust those), I had an idea, what if the problem wasn’t during the training process, but afterward?

Well, that doesn’t really matter, does it? RavenDB is a transactional database, if we had a failure after the training process, we’ll have to discard some of the data, for sure, but that would be about it. Unless, what if we have some state that wasn’t transactional? As part of looking at the compression training code, I ran into just such a scenario. Running the training to generate a new compression dictionary is an expensive proposition, so we don’t want to do that often. As such, we’ll do that for only about 1K document changes where we exceed the desired compression ratio by over 10%. How do we know to act every 1K documents? Well, we have a counter that we increment on every change. That value is incremented using Interlocked.Increment() and isn’t part of the transactional state. If the transaction is aborted, the value is still incremented. The actual value doesn’t matter, mind, only that it is moving forward, so that isn’t an issue.

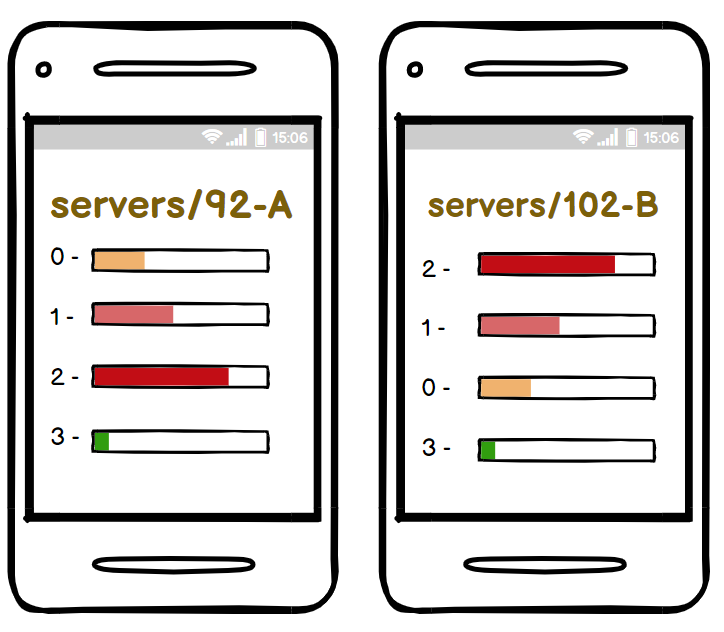

I mentioned the dictionary id before, but I should clarify that this is the zstd’s dictionary id. Internally, RavenDB uses a different value. That value is simply the sequence number of the dictionary, RavenDB counts the number of generated dictionaries and gives the new dictionary the next available value. That value, by the way, is part of the transaction. If we rollback a transaction, we’ll use the same dictionary id. But that doesn’t matter, of course.

When using compression dictionaries, we need to load them from a buffer. There is quite a bit of work that is involved in that, there is memory allocation, entropy tables to load, etc. In order to save repeated work, RavenDB caches the compression dictionaries (after all, their whole point is to be used repeatedly). That cache can be used by multiple transactions at the same time (two read transactions using the same dictionary will use the same instance).

Given all of this information, here is the sequence of events that we need to get the error in question:

- The user enabled documents compression.

- The user runs a transaction with at least four commands, which needs to satisfy the following conditions.

- A document write as the first action.

- Then a write to document whose compression ratio exceeded the expected ratio by over 10%, as a result, RavenDB tried to train a new compression dictionary.

- That dictionary had a better compression ratio and was accepted as the new default compression dictionary.

- RavenDB persisted the new dictionary and used that to compress the new document.

- Another command (in the same transaction) had stored a document in the same collection, now RavenDB will read the new dictionary and store that in a cache.

- A third command runs, but this one throws an error (such as optimistic concurrency violation).

At this point, RavenDB will rollback the entire transaction and return the error to the user. Let’s say the user has chosen to submit the same two documents again, shall we?

For the first command, we’ll again discover that the compression ratio (of the old compression dictionary) is insufficient. We will not generate a new compression dictionary, why is that? Remember the counter that we increment using Interlocked? That one was not rolled back, so we’ll need to wait for another 1K documents for the stars to properly align for us. That doesn’t impact correctness in any way, shape or form, however.

At this stage, the stage is set, but everything is still okay. The problem will happen on the next time that we’ll trigger a new dictionary. At that point, we’ll again scan the most recent documents, build a dictionary, etc. However, the dictionary id that RavenDB will use will be identical to the dictionary id that we previously discarded. The data that dictionary was trained on, however, will almost certainly be different. We persist the new dictionary to disk and everyone is happy, the new document that we wrote will use the new compression dictionary and we are perfectly fine.

The next write for this collection, however, will run into a problem. It will need to use the current (the new one) dictionary when we want to make a write. In order to do that, it will load the value using the cache, but there is already a value for that dictionary in the cache, the same dictionary that was discarded. At this point, RavenDB will start compressing documents using the in memory dictionary while the on disk dictionary is different.

If you’ll try to access the document which triggered the new dictionary, you’ll get an error, but documents that were modified later will continue working with no issue. Until you restart, of course.

On restart, we’ll read the dictionary from disk, where we wrote the new dictionary, at this point, all those documents that we wrote will give us the error above. Note that the sequence of events has to be very exact, you need to have a dictionary training as part of a multi act transaction which failed after the dictionary training has been successful and wrote additional documents. In a year and a half of production usage and very heavy load, that happened only a couple of times, it seems.

The issue has been fixed, of course and we’ll be rolling it out to both users and cloud customers. We’ll now rollback such in memory state on a transaction rollback as well, avoiding this issue entirely. It is amazing to me that despite very careful planning, it wasn’t the code itself that caused a problem, but a sequence of independent operations and failure modes that we never even considered about this.