Via DotNetKicks, I have found this post, which talks about how you can use XmlHttpRequest from SQL Server. I have to bow to the creative power of Dave, but this really frightens me. Next thing we will have a new fad.

I don't get a lot of chances to work on VB.Net, but when I do, it is almost always because someone has a problem with my code (or their code), and I need to look at it. The problem is that I like the syntax (sort of booish :-) ), but I keep trying to think about it like C#, so I keep getting weird compiler errors. I am probably the only one who learns the VB syntax from opening Reflector and switching to the VB.Net view.

Anyway, I was asked how you can mock event registration with Rhino Mocks in VB.Net, and here is the answer:

    <TestFixture()> _
    Public Class RhinoMocks_InVB

        <Test()> _
        Public Sub UsingEvents()
            Dim mocks As New MockRepository()
            Dim lv As ILoginView = mocks.CreateMock(Of ILoginView)()

            Using mocks.Record
                AddHandler lv.Load, Nothing
                LastCall.IgnoreArguments()
            End Using

            Using mocks.Playback
                Dim temp As New LoginPresenter(lv)
            End Using
        End Sub

    End Class

Where the objects under test are:

    Public Interface ILoginView
        Event Load As EventHandler
    End Interface

    Public Class LoginPresenter

        Public Sub New(ByVal view As ILoginView)
            AddHandler view.Load, AddressOf OnLoad
        End Sub

        Public Sub OnLoad(ByVal sender As Object, ByVal e As EventArgs)
        End Sub

    End Class
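For comparison, here is a rough C# sketch of the same test. It assumes the same Rhino Mocks calls the VB version uses (`CreateMock`, `Record`, `Playback`, `LastCall.IgnoreArguments`) plus NUnit. The fixture name `RhinoMocks_InCSharp` is made up for the illustration.

```csharp
using System;
using NUnit.Framework;
using Rhino.Mocks;

public interface ILoginView
{
    event EventHandler Load;
}

public class LoginPresenter
{
    public LoginPresenter(ILoginView view)
    {
        // The subscription that the test below expects.
        view.Load += OnLoad;
    }

    public void OnLoad(object sender, EventArgs e)
    {
    }
}

[TestFixture]
public class RhinoMocks_InCSharp // hypothetical fixture name
{
    [Test]
    public void UsingEvents()
    {
        MockRepository mocks = new MockRepository();
        ILoginView view = mocks.CreateMock<ILoginView>();

        using (mocks.Record())
        {
            // Record "someone subscribes to Load"; which handler is irrelevant.
            view.Load += null;
            LastCall.IgnoreArguments();
        }

        using (mocks.Playback())
        {
            new LoginPresenter(view);
        }
    }
}
```

The only real difference is the registration syntax: `AddHandler lv.Load, Nothing` in VB.Net becomes `view.Load += null` in C#.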

I just responded to a message on the Israeli .NET dev forum, where the poster asked how they can gain experience when all the jobs require experience. The poster assumed that in order to contribute to an open source project, he would need experience as well.

Well, consider this an open invitation for anyone that can pick up an if statement, to contribute to the following open source projects.

- NHibernate

- Castle Active Record

- Castle MonoRail

- Castle Windsor

- Rhino Mocks

- Rhino Commons

- Rhino Igloo

- NHibernate Query Analyzer

- NHibernate Query Generator

- Linq for NHibernate

I don't care about grades or time in the industry; I only care about the contributions that you can make. Get the source, and see if you can make things better. (Just to clarify, this option is open to experienced developers as well.)

What do you get from it?

- Warm fuzzy feeling :-)

- More work

- The chance to work with some really smart people on really fun projects

- Experience that you couldn't get anywhere else.

How can you help?

- Add documentation.

- Find a bug and fix it:

  - NHibernate JIRA: http://jira.nhibernate.org/secure/Dashboard.jspa

  - Castle JIRA: http://support.castleproject.org/secure/Dashboard.jspa

- Add new features.

- Write sample applications.

You can talk to the development teams here:

- NHibernate-dev mailing list: nhibernate-development AT lists.sourceforge.net

- Castle-dev mailing list: castle-project-devel AT googlegroups.com

- Rhino Tools-dev mailing list: rhino-tools-dev AT googlegroups.com

NHibernate source:

Castle source:

Rhino Tools source:

From Joel Spolsky:

It just occurred to me that the same can be said about Castle and Dynamic Proxy. We use it for everything from AoP to strongly typed parameter support.

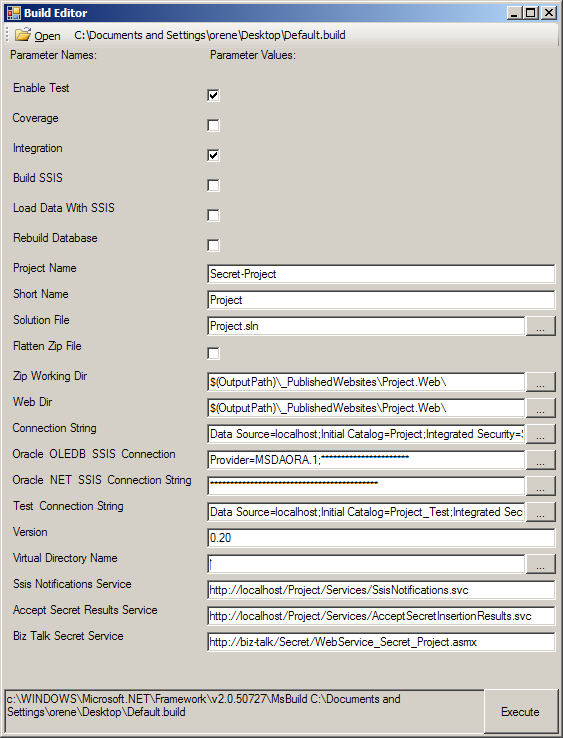

As long as I am talking about reducing pain, here is a sample from a very secret project that I am working on. I have a lot of parameters for the build script, and it got to be a PITA to try to manage them manually. There are several kinds of builds that I can do here: test, production, DB rebuilds, deploy, etc.

This quick (and very dirty) solution took about an hour of coding to build properly, and I just added some minor details after the initial build, enough to make it even more useful.

It is a build parameters UI for MSBuild, which basically displays all the PropertyGroup elements and applies simple heuristics to try to figure out what kind of values they hold. For instance, it can discover boolean values (and display check boxes), and clicking on the "..." buttons will display an Open File, Browse Folder, or Connection String dialog, as appropriate.

As I said, it took about an hour (I wanted to leave it to my team-mates when I was at DevTeach), and it paid back in time, effort and sheer pleasure many times since.
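To make the heuristics concrete, here is a minimal sketch of the idea. It is not the tool's actual code. The names `ParameterKind`, `BuildParameters`, `GuessKind` and `Discover` are invented for the illustration, and checking for booleans before looking at the property name is an assumption.

```csharp
using System;
using System.Collections.Generic;
using System.Xml.Linq;

// Hypothetical names throughout; a sketch, not the original utility.
public enum ParameterKind { Text, Boolean, ConnectionString, File, Folder }

public static class BuildParameters
{
    // Guess which editor a property needs from its current value and its name.
    public static ParameterKind GuessKind(string name, string value)
    {
        bool ignored;
        if (bool.TryParse(value, out ignored))
            return ParameterKind.Boolean;
        if (Contains(name, "ConnectionString"))
            return ParameterKind.ConnectionString;
        if (Contains(name, "File"))
            return ParameterKind.File;
        if (Contains(name, "Folder") || Contains(name, "Dir"))
            return ParameterKind.Folder;
        return ParameterKind.Text;
    }

    // List every direct child of every PropertyGroup in an MSBuild project.
    public static List<KeyValuePair<string, ParameterKind>> Discover(XDocument project)
    {
        var result = new List<KeyValuePair<string, ParameterKind>>();
        foreach (XElement element in project.Descendants())
        {
            // LocalName is used so the MSBuild XML namespace does not matter.
            if (element.Parent == null || element.Parent.Name.LocalName != "PropertyGroup")
                continue;
            string name = element.Name.LocalName;
            result.Add(new KeyValuePair<string, ParameterKind>(name, GuessKind(name, element.Value)));
        }
        return result;
    }

    private static bool Contains(string text, string fragment)
    {
        return text.IndexOf(fragment, StringComparison.OrdinalIgnoreCase) != -1;
    }
}
```

A UI would then pick a check box for `Boolean`, a text box with a "..." button for the file, folder and connection string kinds, and a plain text box for everything else.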

If you are a developer and you have some pain points, fix them; it will be well worth it.

(Yes, Roy, I know that Final Builder has a prettier interface :-) )

Well, when the wheel is a square, of course:

Adi asks about my seemingly inconsistent behavior regarding when to roll your own, and when to use the stuff that is already there:

What can I say, I have a Pavlovian conditioning with regards to software: I tend to avoid software that causes me pain. Let me quote David Hayden about the CAB and SCSF:

That is quite telling. And I don't think that anyone can say that David is not very familiar with the CAB/SCSF.

Why do I promote OSS solutions such as NHibernate, Castle?

- Those solutions are (nearly) painless. In fact, they are often elegant and fun to work with. As an example, I worked with a team member today, introducing him to NHibernate Query Generator, he was very happy about it. Talk about painless persistence.

Why did I decide to write my own Mocking Framework instead of using the standard one (at the time)?

- NMock, the mocking framework that I used at the time (2005), was annoying as hell for the agile developer. Bad support for overloads, no way to Lean On The Compiler and no ReSharper support. It was a pain to use. I wrote my own, and I like using it. Incidentally, it looks like a lot of other people like it too, but that isn't why I wrote it.

Removing pain points will make you a better developer, period.

Oh, and I do enjoy the discussion, although I wouldn't call it a post war.

Right now Brail has no first-class support for generating XML, like RoR's rxml. I just ran into BooML, and it looks like a really great way to handle this issue. MonoRail doesn't (yet) have the notion of multiple DSLs, just standard templates and JS generation. It looks like that is the way I would go when I next need to support XML with MonoRail.

I knew there was a reason why I liked Boo so much :-)

On Castle Dev, Chris asked the following question: how can you build the common "empty" template with Brail? NVelocity makes it very easy:

    #foreach($item in $items)
    #each
    $item
    #nodata
    No Data!
    #end

But Brail makes it more cumbersome. There are solutions using view components, but they are too much, all too often. So, can we find an elegant solution to the problem?

As it turns out, yes (otherwise I probably wouldn't write this post :-) ). Brail is based on Boo, and Boo has extension methods... Another concept that Brail has is "Common Scripts", a set of helper methods that can be used across all the views in the application. Here is the script that I put in /Views/CommonScripts/extensions.brail:

    [Boo.Lang.ExtensionAttribute]
    static def IsEmpty(val as object) as bool:
        if val isa System.Collections.ICollection:
            return cast(System.Collections.ICollection, val).Count == 0
        end
        return true
    end

Now you have extended all the objects in the application, so we can use it like this:

    <%
    for item in items:
        output item
    end
    output "No Data" if items.IsEmpty
    %>

I like that :-) The notion can be extended to more complex issues, naturally, such as a ToXml extension method, etc. In fact, you can even forward calls to C# code, which makes it even more powerful (I assume that you aren't going to write a lot of code in the extensions, and I would advise against doing so).
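As a sketch of what such forwarded C# code might look like, here is the same emptiness check written as a C# static method. The class name `EmptyExtensions` is hypothetical, and how the Brail script reaches it (for example, an extension in the common script that simply calls `EmptyExtensions.IsEmpty(val)`) is left as an assumption.

```csharp
using System.Collections;

// Hypothetical helper class; mirrors the Boo script's behavior.
public static class EmptyExtensions
{
    // A collection is empty when its Count is zero. Anything that is not
    // an ICollection (including null) is treated as empty, as in the Boo version.
    public static bool IsEmpty(this object value)
    {
        ICollection collection = value as ICollection;
        if (collection != null)
            return collection.Count == 0;
        return true;
    }
}
```

Note that, just like the Boo version, this reports `true` for any object that is not a collection, so it is only meaningful for values you expect to be collections.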

So I can eat it (No Scott, I will not go to that restaurant again, I know they can probably serve both, but the salad scares me). Apparently CodePlex is adding Subversion support. What I would really like to know is whether it will have the same integration that the TFS backend has.

At any rate, I would like to take this opportunity to apologize to the CodePlex team, I would have never believed that you would do it, way to go!

I have several ways of categorizing code: production quality, test, demo, and utilities. When I am talking about utilities, I am talking about little programs that I use to do all sorts of small tasks. There I rarely worry about maintainability, and today there was a gem here:

    public delegate Control BuildSpecialCase(TextBox textbox);

    private BuildSpecialCase GetSpecialCaseControl(string name)
    {
        if (name.IndexOf("ConnectionString", StringComparison.InvariantCultureIgnoreCase) != -1)
        {
            return delegate(TextBox t)
            {
                Button change = new Button();
                change.Click += delegate { t.Text = PromptForConnectionString(t.Text); };
                change.Dock = DockStyle.Right;
                change.Text = "...";
                change.Width = 30;
                return change;
            };
        }
        if (name.IndexOf("File", StringComparison.InvariantCultureIgnoreCase) != -1)
        {
            return delegate(TextBox t)
            {
                Button change = new Button();
                change.Click += delegate
                {
                    OpenFileDialog dialog = new OpenFileDialog();
                    dialog.Multiselect = false;
                    dialog.FileName = t.Text;
                    if (dialog.ShowDialog(this) == DialogResult.OK)
                    {
                        t.Text = dialog.FileName;
                    }
                };
                change.Dock = DockStyle.Right;
                change.Text = "...";
                change.Width = 30;
                return change;
            };
        }
        if (name.IndexOf("Folder", StringComparison.InvariantCultureIgnoreCase) != -1 ||
            name.IndexOf("Dir", StringComparison.InvariantCultureIgnoreCase) != -1)
        {
            return delegate(TextBox t)
            {
                Button change = new Button();
                change.Click += delegate
                {
                    FolderBrowserDialog dialog = new FolderBrowserDialog();
                    dialog.SelectedPath = t.Text;
                    if (dialog.ShowDialog(this) == DialogResult.OK)
                    {
                        t.Text = dialog.SelectedPath;
                    }
                };
                change.Dock = DockStyle.Right;
                change.Text = "...";
                change.Width = 30;
                return change;
            };
        }
        return null;
    }

Can you figure out what is going on?
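One way to read it: each branch returns a delegate that, given a text box, builds a "..." button whose Click handler closes over that text box and writes a new value back into it. The shared shape can be sketched as below. `ValuePrompt` and `SpecialCaseButtons` are hypothetical names that are not in the utility itself, and the sketch assumes the prompt returns the current text unchanged when the user cancels.

```csharp
using System;
using System.Windows.Forms;

// Hypothetical: asks the user for a new value, given the current one.
// Assumed to return the current value unchanged if the user cancels.
public delegate string ValuePrompt(string current);

public static class SpecialCaseButtons
{
    // Builds the "..." button that each branch of the method creates by hand.
    public static Button Create(TextBox target, ValuePrompt prompt)
    {
        Button change = new Button();
        change.Dock = DockStyle.Right;
        change.Text = "...";
        change.Width = 30;
        // The anonymous method captures both target and prompt.
        change.Click += delegate { target.Text = prompt(target.Text); };
        return change;
    }
}
```

For a throwaway utility, the repetition in the original is arguably fine. The sketch is only meant to show which parts vary between the branches (the prompt) and which do not (the button).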

As long as we are talking about unmaintainable code, here is an example from code meant for production that I just wrote:

But for that I have tests :-)