I think I'll let it stand on its own:

    facility Castle.MonoRail.WindsorExtension.MonoRailFacility

    for type in AllTypesBased of Controller("HibernatingRhinos"):
        component type.Name, type

Done.

I didn't believe it, to be fair.

One of the ways to significantly increase the scalability of our applications is to start performing more and more things in an asynchronous manner. This is important because most actions that we perform in a typical application are not CPU bound; they are I/O bound.

Here is a simple example:

    public class HomeController : SmartDispatcherController
    {
        IUserRepository userRepository;

        public void Index()
        {
            PropertyBag["userInfo"] = userRepository.GetUserInformation(CurrentUser);
        }
    }

GetUserInformation() is a web service call, and it can take a long time to run. With the code as above, while the web service call is being processed, we can't use this thread for any other request. This has significant implications for our scalability.

ASP.Net has support for just those scenarios, using async requests. You can read more about it here, including a better explanation of the

I recently added support for this to MonoRail (it is currently on a branch, and will be moved to the trunk shortly).

Let us say that you have found that the HomeController.Index() method is a problem from a scalability perspective. You can now rewrite it like this:

    public class HomeController : SmartDispatcherController
    {
        IUserRepository userRepository;

        public IAsyncResult BeginIndex()
        {
            return userRepository.BeginGetUserInformation(CurrentUser,
                ControllerContext.Async.Callback,
                ControllerContext.Async.State);
        }

        public void EndIndex()
        {
            PropertyBag["userInfo"] =
                userRepository.EndGetUserInformation(ControllerContext.Async.Result);
        }
    }

That is all the change that you need to do to turn an action async. We are using the standard .NET async semantics, and MonoRail will infer them automatically and use the underlying ASP.Net async request infrastructure.

"BeginIndex/EndIndex" are the method pair that composes the async action Index. Note that the only thing that needs to change when turning a synchronous action into an asynchronous one is splitting the action method into an IAsyncResult Begin[ActionName]() and a void End[ActionName](). Everything else is exactly the same.
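To make the Begin/End contract concrete outside of MonoRail, here is a minimal, self-contained sketch of the same standard .NET async pattern. FakeUserService and AsyncPairDemo are hypothetical names invented for illustration; they are not MonoRail or Castle types, and the Task-based plumbing is only one assumed way of producing an IAsyncResult.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical stand-in for a slow web service client (not a MonoRail type).
public class FakeUserService
{
    // Begin half: start the work and return immediately with an IAsyncResult.
    public IAsyncResult BeginGetUserInformation(string user, AsyncCallback callback, object state)
    {
        var completion = new TaskCompletionSource<string>(state);
        Task.Delay(50).ContinueWith(_ =>
        {
            completion.SetResult("info for " + user);
            // Notify the caller once the result is available.
            callback?.Invoke(completion.Task);
        });
        return completion.Task; // Task implements IAsyncResult
    }

    // End half: extract the result from the completed IAsyncResult.
    public string EndGetUserInformation(IAsyncResult result)
    {
        return ((Task<string>)result).Result;
    }
}

public static class AsyncPairDemo
{
    public static string Run(string user)
    {
        var service = new FakeUserService();
        var done = new ManualResetEventSlim();
        string info = null;

        service.BeginGetUserInformation(user, asyncResult =>
        {
            info = service.EndGetUserInformation(asyncResult);
            done.Set();
        }, null);

        // A console demo has to wait somewhere. A web framework would instead
        // return the thread to the pool until the callback fires.
        done.Wait();
        return info;
    }

    public static void Main()
    {
        Console.WriteLine(Run("ayende"));
    }
}
```

The point of the split is that nothing blocks between the Begin call and the callback, so the request thread is free to serve other requests in the meantime.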

This is a major improvement for scaling an application. The more I learn about async programming, the more it makes sense to me, and I think that I am moving more and more to this paradigm.

I was asked how I would go about building real-world security with the concept of securing operations instead of data.

This is a quick & dirty implementation of the concept by marrying Rhino Security to MonoRail. This is so quick and dirty that I haven't even run it, so take this as a concept, not the real implementation, please.

The idea is that we can map each request to an operation, and use the convention that "id" has a special meaning in order to perform operation security that pertains to specific data.

Here is the code:

    public class RhinoSecurityFilter : IFilter
    {
        private readonly IAuthorizationService authorizationService;

        public RhinoSecurityFilter(IAuthorizationService authorizationService)
        {
            this.authorizationService = authorizationService;
        }

        public bool Perform(ExecuteWhen exec, IEngineContext context,
            IController controller, IControllerContext controllerContext)
        {
            string operation = "/" + controllerContext.Name + "/" + controllerContext.Action;
            string id = context.Request["id"];
            object entity = null;
            if (string.IsNullOrEmpty(id) == false)
            {
                Type entityType = GetEntityType(controller);
                entity = TryGetEntity(entityType, id);
            }
            if (entity == null)
            {
                if (authorizationService.IsAllowed(context.CurrentUser, operation) == false)
                {
                    DenyAccessToAction();
                }
            }
            else
            {
                if (authorizationService.IsAllowed(context.CurrentUser, entity, operation) == false)
                {
                    DenyAccessToAction();
                }
            }
            return true;
        }
    }

It just performs a security check using the /Controller/Action names, and it tries to get the entity from the "id" parameter if it can.

Then, we can write our base controller:

    [Filter(ExecuteWhen.BeforeAction, typeof(RhinoSecurityFilter))]
    public class AbstractController : SmartDispatcherController
    {
    }

Now you are left with configuring the security, but you already have a cross cutting security implementation.

As an example, hitting this url: /orders/list.castle?id=15

Will perform a security check that you have permission to list customer 15's orders.

This is pretty extensive, probably overly so, however. A better alternative would be to define an attribute with the ability to override the default operation name, so you can group several actions into the same operation.
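As a rough sketch of that attribute idea, and in the same "concept, not the real implementation" spirit: OperationAttribute, OperationNames and OrdersController below are hypothetical names made up for illustration, not part of Rhino Security or MonoRail, and the fallback naming rule (strip the "Controller" suffix from the type name) is an assumption.

```csharp
using System;
using System.Reflection;

// Hypothetical attribute that overrides the default "/Controller/Action" operation name.
[AttributeUsage(AttributeTargets.Method)]
public sealed class OperationAttribute : Attribute
{
    public string Name { get; }

    public OperationAttribute(string name)
    {
        Name = name;
    }
}

// Plain class standing in for a controller; two actions share one operation.
public class OrdersController
{
    [Operation("/orders/view")]
    public void List() { }

    [Operation("/orders/view")]
    public void Details() { }

    public void Delete() { }
}

public static class OperationNames
{
    // Returns the attribute's name if present, otherwise "/Controller/Action".
    public static string Resolve(Type controllerType, string action)
    {
        MethodInfo method = controllerType.GetMethod(action);
        OperationAttribute attribute = method?.GetCustomAttribute<OperationAttribute>();
        if (attribute != null)
            return attribute.Name;

        string controllerName = controllerType.Name;
        const string suffix = "Controller";
        if (controllerName.EndsWith(suffix, StringComparison.Ordinal))
            controllerName = controllerName.Substring(0, controllerName.Length - suffix.Length);

        return "/" + controllerName + "/" + action;
    }
}

public static class OperationDemo
{
    public static void Main()
    {
        Console.WriteLine(OperationNames.Resolve(typeof(OrdersController), "List"));
        Console.WriteLine(OperationNames.Resolve(typeof(OrdersController), "Delete"));
    }
}
```

With something like this, the filter would ask for the resolved name instead of concatenating the controller and action names itself, so List and Details can be granted or denied as a single operation.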

You would still need a way to bypass that, however, since there are some things where you would have to allow access and perform custom permission checks, no matter how flexible Rhino Security is, or you may be required to do multiple checks to verify access, and this system doesn't allow for that.

Anyway, this is the overall idea, thoughts?

Rob Conery asks how the MS MVC platform should handle XSS attacks. In general, I feel that frameworks should do their best to be secure by default. This means that, by default, you should encode everything that comes from the user to the app. People seem to think that encoding inbound data will litter your DB with encoded text that isn’t searchable and consumable by other applications.

That may be the case, but consider, what exactly is getting encoded? Assuming that this is not a field that requires rich text editing, what are we likely to have there?

Text, normal text, text that can roundtrip through HTML encoding without modifications.

HTML style text in most of those form fields is actually rare. And if you need to have some form of control over it, you can always handle the decoding yourself. Safe by default is a good approach. In fact, I have a project that uses just this approach, and it is working wonderfully well.

Another approach for that would be to make outputting HTML encoded strings very easy. In fact, it should be so easy that it would be the default approach for output strings.

Here, the <%= %> syntax fails. It translates directly to Response.Write(), which means that you have to take an extra step to get secured output. I would suggest changing, for MS MVC, the output of <%= %> to output HTML encoded strings, and providing a secondary way to output raw text to the user.

In MonoRail, Damien Guard has been responsible for pushing us in this direction. He had pointed out several places where MonoRail was not secure by default. As a direct result of Damien's suggestions, Brail has gotten the !{post.Author} syntax, which does HTML encoding. This is now considered the best practice for output data, as well as my own default approach.

Due to backward compatibility reasons, I kept the following syntax valid: ${post.Author}, mainly because it is useful for doing things like outputting HTML directly, such as in: ${Form.HiddenField("user.id")}. For the same reason, we cannot automatically encode everything by default, which is controversial, but very useful.

Regardless, having a very easy way ( !{post.Author} ) to do things in a secure fashion is a plus. I would strongly suggest that the MS MVC team do the same. Not a "best practice", not "suggested usage", simply force it by default (and allow an easy way out when needed).
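To illustrate the difference between the two output forms, here is a toy sketch. It is not how Brail implements them: TinyTemplate is a hypothetical helper written for this example that only understands simple !{name} and ${name} placeholders, and it leans on the framework's WebUtility.HtmlEncode for the encoding.

```csharp
using System;
using System.Collections.Generic;
using System.Net;
using System.Text.RegularExpressions;

// Hypothetical mini template renderer, for illustration only.
public static class TinyTemplate
{
    // Matches !{name} (HTML encoded output) and ${name} (raw output).
    private static readonly Regex Placeholder = new Regex(@"([!$])\{(\w+)\}");

    public static string Render(string template, IDictionary<string, string> values)
    {
        return Placeholder.Replace(template, match =>
        {
            string value = values[match.Groups[2].Value];
            bool encode = match.Groups[1].Value == "!";
            return encode ? WebUtility.HtmlEncode(value) : value;
        });
    }
}

public static class EncodingDemo
{
    public static void Main()
    {
        var values = new Dictionary<string, string> { { "author", "<b>Oren</b>" } };

        // Encoded: the markup is shown as text instead of being interpreted.
        Console.WriteLine(TinyTemplate.Render("By !{author}", values));

        // Raw: the markup goes to the browser untouched.
        Console.WriteLine(TinyTemplate.Render("By ${author}", values));
    }
}
```

The first call prints the author with the angle brackets turned into &lt; and &gt; entities, while the second passes the markup through as-is, which is exactly why the raw form should be the exception rather than the habit.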

And they go ahead and write Outlook Web Access behind your back...

I am going over the code, and I am simply amazed. JavaScript & HTML component, using DomainJSon Generation and some additional smarts around that idea.

One of the nicer semantics of App_GlobalResources is that it is updated on the fly. I wanted to use it with MonoRail, but I wasn't quite sure how. A minute's search turned up that this syntax works:

    [Resource("msgs", "Resources.Messages", AssemblyName = "App_GlobalResources")]
    [Resource("err", "Resources.Errors", AssemblyName = "App_GlobalResources")]
    public abstract class BaseController : SmartDispatcherController
    {
    }

Nikhil has a post about using MS Ajax with MS MVC*.

What was particularly interesting to me was that it reminded me very strongly of posts that I wrote, exploring Ajax in MonoRail. The method used was the same; the only changes were extremely minute details, such as different method names with the same intention, etc.

* Can we please get better names for those?

Adam has an interesting discussion here about handling common actions in MonoRail. This has sparked some discussion in the MonoRail mailing list. I wanted to take the chance to discuss the idea in more detail here.

Basically, he is talking about doing this:

    public class IndexAction : SmartDispatcherAction
    {
        private ISearchableRepository repos;
        private string indexView;

        public IndexAction(ISearchableRepository repos, string indexView)
        {
            this.repos = repos;
            this.indexView = indexView;
        }

        public void Execute(string name)
        {
            ISearchable item = repos.FindByName(name);
            if (item == null)
            {
                PropertyBag["UnknownSearchTerm"] = name;
                RenderView("common/unknown_quick_search");
                return; // don't fall through and render the index view as well
            }
            PropertyBag["Item"] = item;
            RenderView(indexView);
        }
    }

And then registering it with the routing engine like this:

    new Route("/products/<name>",
        new IndexAction(new ProductRepository(), "display_product"));
    new Route("/categories/<name>",
        new IndexAction(new CategoryRepository(), "display_category"));

Now, accessing "/categories/cars" will give you all the items in the cars category.

On the face of it, it seems like a degenerated controller, no? Why do we need it? We can certainly map more than a single URL to a controller, so can't we solve that problem that way?

Let us stop for a moment and think about the MVC model. Where did it come from? From Smalltalk, when GUI was something brand new & sparkling. It is a design pattern for a connected system. In that case, the concept of a controller made a lot of sense.

But when we are talking about the web? The web is a disconnected world. What is the sense in having a controller there? An Action, or a Command, pattern seems much more logical here, no?

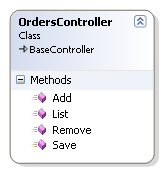

But then we have things that just don't fit this model. Consider the example of CRUD on orders. We can have a controller, which will handle all of the logic for this use case in a single location, or we can have four separate classes, each taking care of a single aspect of the use case.

Personally, I would rather have the controller do the work in this scenario, because this way I have all the information in a single place, and I don't need to hunt through multiple classes in order to find it.

But there are a lot of cases where we do want to have just this single action happen, or maybe we want to add some common operations to the controller, without having to get into crazy inheritance schemes.

For this, MonoRail supports the idea of Dynamic Actions, which supports seamless attachment of actions to a controller.

Hammett describes them best:

DynamicActions offers a way to have an action that is not a method in the controller. This way you can “reuse an action” in several controllers, even among projects, without the need to create a complex controller class hierarchy.

The really interesting part in this is that we have both IDynamicAction and IDynamicActionProvider. This means that we get mixin-like capabilities.

Dynamic Actions didn't get all the love they probably deserve. We don't have the SmartDispatcherAction (yet), so if we want to use them, we will need to deal with the raw request data, rather than with the usual niceties that MonoRail provides.

Nevertheless, on a solid base it is easy enough to add.

Now all we need to solve is the ability to route the requests to the correct action, right? This is notepad code, so it is ugly and not something that I would really use, but it does the job:

    public class ActionRoutingController : Controller
    {
        public delegate IDynamicAction CreateDynamicAction();

        public static IDictionary<string, CreateDynamicAction> Routing =
            new Dictionary<string, CreateDynamicAction>();

        protected override void InternalSend(string action, IDictionary actionArgs)
        {
            if (Routing.ContainsKey(action) == false)
                throw new NoActionFoundException(action);

            Routing[action]().Execute(this);
        }
    }

What this means is that you can now do this:

    public void AddRoutedActions()
    {
        AddRoutedAction("categories", "/categories/<name:string>", delegate
        {
            return new IndexAction(new CategoryRepository(), "display_category");
        });
        AddRoutedAction("products", "/products/<name:string>", delegate
        {
            return new IndexAction(new ProductRepository(), "display_product");
        });
    }

    public void AddRoutedAction(string action, string url, CreateDynamicAction actionFactory)
    {
        RoutingModuleEx.Engine.Add(
            PatternRule.Build(action, url, typeof(ActionRoutingController), action));
        ActionRoutingController.Routing.Add(action, actionFactory);
    }

And get basically the same result.

Again, all of this is notepad code, just doodling away, but it is nice to see that all the building blocks are there.

Sharing common functionality across controllers is something that I have run into several times in the past. It is basically needing to offer the same functionality across different elements in the application.

Let us take for a moment a search page. In my current application, a search page has to offer rich search functionality for the user, the ability to do pattern matching, so given a certain entity, match all the relevant related entities that can fit this entity. Match all openings for a candidate. Match all candidates for an opening.

That is unique, mostly, but then we have a lot of boiler plate functionality, which moves from printing, paging, security, saving the query and saving the results, changing the results, exporting to XML, loading saved queries and saved results, etc, etc etc. Those requirements are shared among several

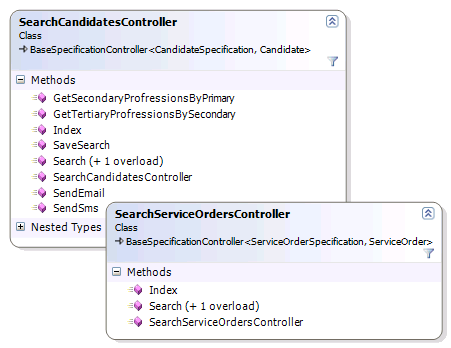

On the right you can see one solution for this problem, the Template Controller pattern. Basically, we concentrated all the common functionality into the Base Specification Controller.

What you can't see is that the declaration of the controller also has the following generic constraints:

    public class BaseSpecificationController<TSpecification, TEntity> : BaseController
        where TSpecification : BaseSpecification<TEntity>, new()
        where TEntity : IIdentifable

This means that the base controller can perform most of its actions on the base classes, without needing to specialize just because of the different types.
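To show what those constraints buy, here is a stripped-down sketch. Only the constraint clauses come from the declaration above; the Search method, the IsSatisfiedBy member, Candidate and SeniorCandidateSpecification are assumptions made up for illustration, and the BaseController base class is left out so the sample stands alone.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public interface IIdentifable
{
    int Id { get; }
}

// Assumed shape of the specification base class.
public abstract class BaseSpecification<TEntity>
{
    public abstract bool IsSatisfiedBy(TEntity entity);
}

public class BaseSpecificationController<TSpecification, TEntity>
    where TSpecification : BaseSpecification<TEntity>, new()
    where TEntity : IIdentifable
{
    // Shared logic: new() lets the base class create the specification,
    // and IIdentifable lets it read the Id without knowing the concrete type.
    public IList<int> Search(IEnumerable<TEntity> source)
    {
        var specification = new TSpecification();
        return source
            .Where(entity => specification.IsSatisfiedBy(entity))
            .Select(entity => entity.Id)
            .ToList();
    }
}

public class Candidate : IIdentifable
{
    public int Id { get; set; }
    public int YearsOfExperience { get; set; }
}

public class SeniorCandidateSpecification : BaseSpecification<Candidate>
{
    public override bool IsSatisfiedBy(Candidate candidate)
    {
        return candidate.YearsOfExperience >= 5;
    }
}

// The concrete controller only has to supply the type arguments.
public class SearchCandidatesController
    : BaseSpecificationController<SeniorCandidateSpecification, Candidate>
{
}

public static class TemplateControllerDemo
{
    public static void Main()
    {
        var candidates = new List<Candidate>
        {
            new Candidate { Id = 1, YearsOfExperience = 2 },
            new Candidate { Id = 2, YearsOfExperience = 7 }
        };
        IList<int> ids = new SearchCandidatesController().Search(candidates);
        Console.WriteLine(string.Join(",", ids));
    }
}
```

Everything in Search is written once against the base types, so a second search controller is just another one-line subclass with different type arguments.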

Yes, a dynamic language would make things much easier, I know.

Note that while I am talking about sharing the controller logic here, between several controllers, we can also do the same for the views using shared views. Or not. That is useful if we want to use different UI for the search.

In fact, given that we need to show a search screen, it is not surprising that we would need a different UI, and some different behavior for each search controller, to get the data required to specify a search.

Now that we have the background all set up, let us see what we can do with the concrete search controllers, shall we?

You can see the structure of them on the right. The search candidates is doing much more than the search orders, but a lot of the functionality between the two is shared. And more importantly, easily shared.

Well, if you define the generics syntax above as easy, at least.

The main advantage of this approach is that I can literally develop a feature on the candidates controller, and then generalize it to support all the other searches in the application.

In this scenario, we started with searching for candidates, and after getting the basic structure done, I moved to start working on the search orders.

At the same time, another guy was implementing all the extra functionality (excel export, sending SMS and emails, etc).

After I finished the search orders page, we merged and refactored up most of the functionality that we needed in both places.

This is a good approach if you can utilize inheritance in your favor. But there is a kink: if you want to aggregate functionality from several sources, then you are going to go back to delegation or duplication.

Adam has an interesting discussion about this issue, and an interesting proposition. But that will be another post.

This post by Ben Scheirman is interesting. He points out that the MS.MVC stuff is targeted toward a different crowd than the one who is using MonoRail, it is targeted toward the corporate developers and the All-Microsoft Shops.

The question of support has been raised again, and it prompted this post. It seems that there isn't a lot of awareness that there are commercial support options for those tools.

I am actually not very interested in getting support for those, so it is entirely possible (and likely) that I missed some. Most of the active members of the community are members of consultancies that are capable of offering support, but those are the ones that I am aware of.

* Full disclosure: I work there.