Watch your 6, or is it your I/O? It is the I/O, yes

As I said in my previous post, I was tasked with loading 3.1 million files into RavenDB, most of them in the 1 – 2 KB range.

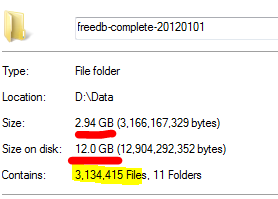

Well, the first thing I did had absolutely nothing to do with RavenDB. It had to do with avoiding dealing with this:

As you can see, that is a lot.

But when the freedb dataset is distributed, what we have is actually:

This is a tar.bz2, which we can read using the SharpZipLib library.
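To make the idea concrete, here is a minimal sketch of streaming through such an archive. It is written in Python with the standard `tarfile` module rather than SharpZipLib, so it illustrates the technique and is not the code used for the import. The function name `iter_archive_files` is made up for this example.

```python
import tarfile


def iter_archive_files(path):
    """Yield (name, content_bytes) for each regular file in a .tar.bz2 archive."""
    # "r|bz2" opens the archive as a forward-only stream: the single large
    # file is read and decompressed front to back, without random seeks.
    with tarfile.open(path, mode="r|bz2") as archive:
        for entry in archive:
            if not entry.isfile():
                continue  # skip directories, links and other non-file entries
            # In stream mode the entry must be read while it is the current one.
            with archive.extractfile(entry) as handle:
                yield entry.name, handle.read()
```

A caller would loop over `iter_archive_files(...)` with the path of the downloaded archive and hand each small document to the importer, never touching millions of individual files on disk.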

The really interesting thing is that reading the archive (even after adding the cost of decompressing it) is far faster than reading directly from the file system. Most file systems do badly with large numbers of small files, and at any rate, it is very hard to optimize the access pattern for a lot of small files.

However, when we are talking about something like reading a single large file? That is really easy to optimize and significantly reduces the cost on the input I/O.

Just this step has reduced the cost of importing by a significant factor: we are talking about roughly twice as fast as before, and with a lot less disk activity.

Comments

Damn, I thought about that too. Now I'm sad I didn't mention it in the previous thread.

My thought is that NTFS just plain sucks with that many files, even if they are large. UNIX filesystems do not have that many problems with it.

@Steve: ext* would still be a lot slower to read millions of files in sequence than using an archive, assuming you don't care about the order in which you read files. It's because a file's size is measured in pages, and you get lots of free space between the files, so even if the files are all sequential on disk there's a lot of seek time. I think even an uncompressed tar file would be faster on pretty much any OS.

Not to mention that most compression schemes (e.g. zip, gzip, but not bz2) can be decompressed faster than they are read from disk on modern machines, meaning I/O is still the bottleneck even on compressed files.

How about just getting a good SSD? :D

Yes, it's a shame modern 'high performance' filesystems (NTFS, I'm talking to you) can't handle 50 thousand small files in a single directory. Some time ago a bug in my company's application that resulted in the creation of thousands of temp files brought down the whole server - IIS, the application and even file explorer. And it was hard to find too, because the filesystem behaved as if the hardware was failing or the disk's logical structure was corrupt.

@Dan: +1 Because if Ayende had gotten a good SSD, the Size on disk of all his files would've been less than 743 MB.

@Dan, Patrick, Darius: Are you suggesting that Ayende should tell all his customers to install SSDs on their DB servers instead of enabling his software to read tarballs?

Having such a huge number of files is a pain for every filesystem, because you have files that are smaller than the size of a cluster on the disk, and this means wasted space and wasted time in reading and writing on disk. Ayende's picture shows that the real size on disk is four times bigger than the file size.

Having them stored as a single compressed file is a good strategy, because you are trading disk activity for CPU activity to decompress the file, and this is a gain because disk is much slower. The good part is that all compression libraries have the option to create an archive at compression level 0, which avoids spending CPU cycles on decompression while keeping the advantage of handling everything as a single file.
