How saving a single byte cost me over a day (and solved with a single byte)

I’m currently doing something that I would rather not do. I’m doing significant refactoring to the lowest level of RavenDB, preparing some functionality that I need to develop some use visible features. But in the meantime, I’m mucking about in the storage layer, dealing with how Voron actually persists and manage the information we have. And I’m making drastic changes. That means that stuff breaks, but we have got the tests to cover for us, so we are good in that regard, yeah!

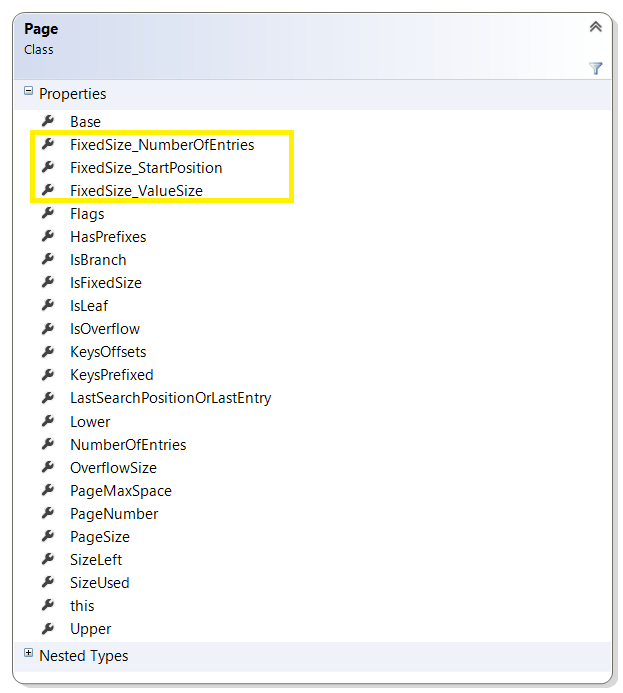

The case for this post is that I decided to break apart a class that had too many responsibilities. The class in question is:

As you can see, there are multiple concerns here. There are actually several types of Page in the system, but changing this would have been a major change, so I didn’t do that at the time, and just tucked more responsibility into this poor class.

When I finally got around to actually handling this, it was part of a much larger body of work, but I felt really great that I was able to do that.

Until I run the tests, and a few of them broke. In particular, only the tests that deal with large amount of information (over four million entries) broke. And they broke in a really annoying way, it looked like utter memory corruption was happening. It was scary, and it took me a long while to figure out what was going on.

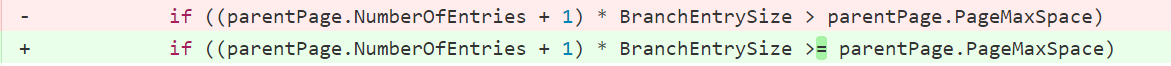

Here is the fix:

This error happens when adding a new entry to a tree. In particular, this piece of code will only run when we have a page split on a branch page (that is, we need to move from 2 levels in the tree to 3 levels).

The issue turned out to be because when we split the Fixed Size Tree from the Variable Size Tree, I was able to save a single byte in the header of the fixed size tree page. The previous header was 17 bytes in size, and the new header was 16 bytes in size.

The size of BranchEntrySize is 16 bytes… and I think you get the error now, right?

Before, with page size of 4K, we had a PageMaxSize of 4079 bytes. So on the 255 entry, we would be over the limit, and split the page. By reducing a single byte from the header, the PageMaxSize was 4080, and because we only checked from greater than, we though that we could write to that page, but we ended up writing to the next page, corrupting it.

The fix, ironically enough, was to add another byte, to check for greater than or equal ![]() .

.

Comments

The previous condition looked correct to me.

Inside this if, are you handling the logic for when you can NOT write to the parent page? If PageMaxSpace is 1024 bytes and BranchEntrySize is 1 byte, you can write 1024 entries to it. So if number of entries is 1023 you should still be able to write one more entry. Or is there some implicit requirement somewhere else that there has to be an extra byte after the last entry?

As the saying goes: "There are two hard things in computer science: cache invalidation, naming things, and off-by-one errors." :-)

@Omer and I always used to disbelieve the cache invalidation until i wrote my first custom cache. And then the invalidation policy became, one thing changes? Blow away the entire cache.

Thankfully the data very infrequently changed.

Comment preview