Silverlight and HTTP and Caching, OH MY!

We are currently building the RavenDB Silverlight Client, and along the way we ran into several problems. Some of them were solved, but others proved to be much harder to deal with.

In particular, it seems that the way Silverlight handles HTTP caching is absolutely broken. Silverlight aggressively caches HTTP requests (which is good and proper), but it ignores just about every one of the cache control mechanisms that the HTTP spec specifies.

Note: I am talking specifically about the Client HTTP stack; the Browser HTTP stack behaves properly.

Here is the server code:

```csharp
using System;
using System.IO;
using System.Net;

class Program
{
    static void Main()
    {
        var listener = new HttpListener();
        listener.Prefixes.Add("http://+:8080/");
        listener.Start();
        int reqNum = 0;
        while (true)
        {
            var ctx = listener.GetContext();
            Console.WriteLine("Req #{0}", reqNum);

            // The client already holds version "1234": tell it to use its cached copy.
            if (ctx.Request.Headers["If-None-Match"] == "1234")
            {
                ctx.Response.StatusCode = 304;
                ctx.Response.StatusDescription = "Not Modified";
                ctx.Response.Close();
                continue;
            }

            // Full response, tagged with an ETag so the client can revalidate later.
            ctx.Response.Headers["ETag"] = "1234";
            ctx.Response.ContentType = "text/plain";
            using (var writer = new StreamWriter(ctx.Response.OutputStream))
            {
                writer.WriteLine(++reqNum);
                writer.Flush();
            }
            ctx.Response.Close();
        }
    }
}
```

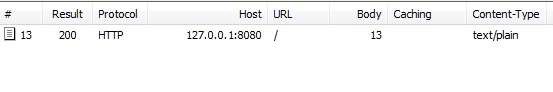

This is a pretty simple implementation. Let us see how it behaves when we access the server from Internet Explorer (after clearing the cache):

The first request will hit the server to get the data, but the second request will ask the server if the cached data is fresh enough, and use the cache when we get the 304 reply.

So far, so good. This is exactly what we would expect when the response carries an ETag.

Now let us see how this behaves with an out-of-browser Silverlight application (running with elevated trust):

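```csharp
using System;
using System.IO;
using System.Net;
using System.Net.Browser;
using System.Windows;
using System.Windows.Controls;

public partial class MainPage : UserControl
{
    public MainPage()
    {
        InitializeComponent();
    }

    // Wired to a button's Click event.
    private void StartRequest(object sender, RoutedEventArgs routedEventArgs)
    {
        // Explicitly use the Client HTTP stack, not the Browser HTTP stack.
        var webRequest = WebRequestCreator.ClientHttp.Create(new Uri("http://ipv4.fiddler:8080"));
        webRequest.BeginGetResponse(Callback, webRequest);
    }

    private void Callback(IAsyncResult ar)
    {
        var webRequest = (WebRequest)ar.AsyncState;
        var response = webRequest.EndGetResponse(ar);
        string messageBoxText;
        using (var reader = new StreamReader(response.GetResponseStream()))
        {
            messageBoxText = reader.ReadToEnd();
        }
        // The callback runs on a background thread; marshal back to the UI thread.
        Dispatcher.BeginInvoke(() => MessageBox.Show(messageBoxText));
    }
}
```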

The StartRequest method is wired to a button click on the window.

Before starting, I cleared the IE cache again. Then I started the SL application and hit the button twice. Here is the output from Fiddler:

Do you notice what you are not seeing here? That is right, there is no second request to the server to verify that the resource has not been changed.

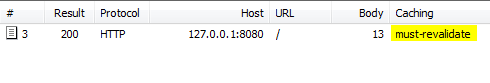

Well, I thought to myself, that might be annoying behavior, but we can fix it: all we need to do is specify must-revalidate in the Cache-Control header. And so I did just that:

Do you see Silverlight pissing all over the HTTP spec? The only Cache-Control directive that the ClientHttp stack in Silverlight seems to respect is no-cache, and honoring that means skipping the cache entirely and ignoring the ETag.

As it turns out, there is one way of making this work. You need to send an Expires header with a date in the past, as well as an ETag header. The Expires header forces Silverlight to make the request again, and the ETag is used to revalidate the cached copy. The server replies with a 304, and Silverlight then loads the data from its cache.
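A minimal sketch of the server side of this workaround, assuming the `HttpListener` server shown earlier. `CacheHeaders`, `AddRevalidationHeaders` and `IsNotModified` are hypothetical names used only for illustration. The `"R"` format specifier produces the RFC 1123 date format that HTTP uses for `Expires`; it does not convert to UTC by itself, which is why the date is taken from `DateTime.UtcNow`.

```csharp
using System;
using System.Globalization;
using System.Net;

// Hypothetical helper, not part of any library: illustrates the ETag + past-Expires workaround.
public static class CacheHeaders
{
    // Marks the response as already stale while still giving the client an ETag to revalidate with.
    public static void AddRevalidationHeaders(WebHeaderCollection headers, string etag)
    {
        headers["ETag"] = etag;
        // An Expires date in the past makes the cached copy stale immediately, so the
        // client has to go back to the server, sending If-None-Match with the ETag.
        headers["Expires"] = DateTime.UtcNow.AddYears(-1)
            .ToString("R", CultureInfo.InvariantCulture);
    }

    // True when the client's cached copy (identified by If-None-Match) is still current.
    public static bool IsNotModified(string ifNoneMatch, string currentEtag)
    {
        return ifNoneMatch != null && ifNoneMatch == currentEtag;
    }
}
```

In the server loop, this would mean calling `CacheHeaders.AddRevalidationHeaders(ctx.Response.Headers, "1234")` before writing the body. The HTTP spec also expects a 304 reply to repeat the ETag (and Expires, where it may have changed) that a 200 would have carried, so calling it in the 304 branch as well seems reasonable.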

The fact that there is a workaround doesn’t mean it is not a bug, and it is a pretty severe one, making it much harder to write proper REST clients in Silverlight.

Comments

Have you tried the Client Stack:

WebRequest.RegisterPrefix("http://", WebRequestCreator.ClientHttp);

Mat,

Did you miss the part where I am explicitly using the client stack?

WebRequestCreator.ClientHttp.Create?

While it is of course a bug, the common practice is to use both ETag and Expires.

stackoverflow.com/.../etag-vs-header-expires

Had the exact same problem, ETag + Expires Header did it. Thanks for summing this up.

There is a new version of Silverlight 4 with some info about improved "HTTP latency": http://support.microsoft.com/kb/2505882. Maybe that fixes this problem?
