Yep, another forum question. Unfortunately, in this case all I have is the title. Even more unfortunately, I already used the stripper metaphor before.

There are some questions that I am really not sure how to answer, because there are several underlying premises that are flat out wrong in the mere asking of the question.

“Can you design a square wheel carriage?” is a good example of that, and using a service bus for queries is another.

The short answer is that you don’t do that.

The longer answer is that you still don’t do that, but also explains why the question itself is wrong. One of the things that goes along with a service bus is the concept of services.

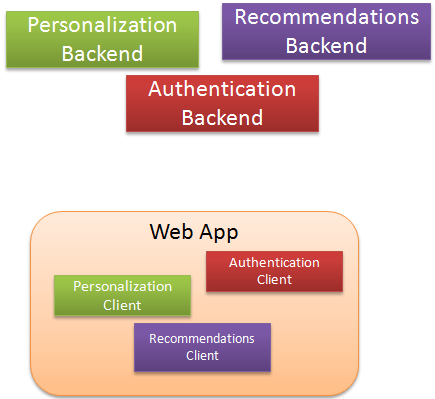

Does this ring a bell?

You don’t query a service for its state, because that would violate the autonomy tenant.

But I need the users data from the Personalization service to show the home page, I can hear you say. Well, sure, but you don’t perform queries across a service boundary.

Notice the terminology here. You don’t perform queries across a service boundary.

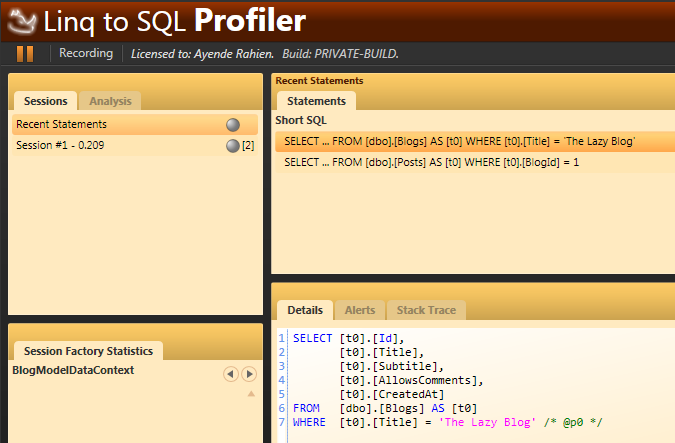

But you can perform queries inside a service boundary. The image on the right shows one such example of that.

We have several services in a single application, they communicate between services using a service bus.

But a service isn’t just something that is running in a server somewhere. The personalization service also have user interface, business logic that needs to run on the UI, etc.

That isn’t just some other part of the application that is accessing the personalization service operations. It is a part of the personalization service.

And inside a service boundary, there are no limitation to how you get the data you need to perform some operation.

You can perform a query using whatever method you like (a synchronous web service call, hitting the service database, using local state).

Personally, I would either access the data store directly (which usually means querying the service database) or use local state. I spoke about building a system where all queries are handled using local state here.