Every so often I see a group of people or a company come up with a new Thing. That new Thing is supposed to solve a set of problems. The common set of problems that people keep trying to solve are:

- Data access with relational databases

- Building applications without needing developers

And every single time that I see this, I know that there is going to be a catch involved. For the most part, I can usually even tell you what the catches involved going to be.

It isn’t because I am smart, and it is certain that I am not omnificent. It is an issue of knowing the problem set that is being set out to solve.

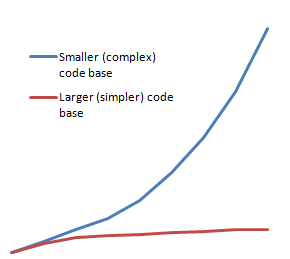

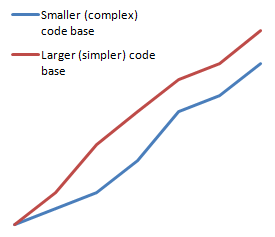

If we will take data access as a good example, there aren’t that many ways tat you can approach it, when all is said and done. There is a set of competing tradeoffs that you have to make. Simplicity vs. usability would probably be the best way to describe it. For example, you can create a very simple data access layer, but you’ll give up on doing automatic change tracking. If you want change tracking, then you need to have Identity Map (even data sets had that, in the sense that every row represented a single row :-) )

When I need to evaluate a new data access tool, I don’t really need to go too deeply into how it does things, all I need to do is to look at the set of tradeoffs that this tool made. Because you have to make those tradeoffs, and because I know the play field, it is very easy for me to tell what is actually going on.

It is pretty much the same thing when we start talking about the options for building applications without developers (a dream that the industry had chased for the last 30 – 40 years or so, unsuccessfully). The problem isn’t in lack of trying, the amount of resources that were invested in the matter are staggering. But again you come into the realm of tradeoffs.

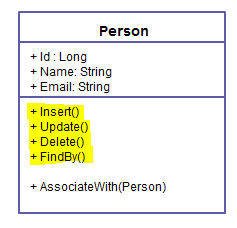

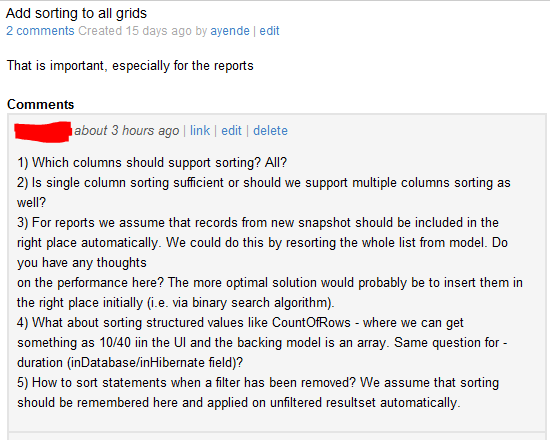

The best that a system for non developers can give you is CRUD. Which is important, certainly, but for developers, CRUD is mostly a solved problem. If we want plain CRUD screens, we can utilize a whole host of tools and approaches to do them, but beyond the simplest departmental apps, the parts of the application that really matter aren’t really CRUD. For one application, the major point was being able to assign people to their proper slot, a task with significant algorithmic complexity. In another, it was fine tuning the user experience so they would have a seamless journey into the annals of the organization decision making processes.

And here we get to the same tradeoffs that you have to makes. Developer friendly CRUD system exists in abundance, ASP.Net MVC support for Editor.For(model) is one such example. And they are developer friendly because they give you he bare bones of functionality you need, allow you to define broad swaths. of the application in general terms, but allow you to fine tune the system easily where you need it. They are also totally incomprehensible if you aren’t a developer.

A system that is aimed at paradevelopers focus a lot more of visual tooling to aid the paradeveloper achieve their goal. The problem is that in order to do that, we give up the ability to do things in broad strokes, and have to pretty much do anything from scratch for everything that we do. That is acceptable for a paradeveloper, without the concepts of reuse and DRY, but those same features that make it so good for a paradeveloper would be a thorn in a developer’s side. Because they would mean having to do the same thing over & over & over again.

Tradeoffs, remember?

And you can’t really create a system that satisfy both. Oh, you can try, but you are going to fail. And you are going to fail because the requirement set of a developer and the requirement set of a paradeveloper are so different as to be totally opposed to one another. For example, one of the things that developers absolutely require is good version control support. And by good version control support i mean that you can diff between two versions of the application and get a meaningful result from the diff.

A system for paradeveloper, however, is going to be so choke full of metadata describing what is going on that even if the metadata is in a format that is possible to diff (and all too often it is located in some database, in a format that make it utterly impossible to work with using source control tools).

Paradeveloper systems encourage you to write what amounts to Bottun1_Click handlers, if they give you even that. Because the paradevelopers that they are meant for have no notion about things like architecture. The problem with that approach when developers do that is that it is obviously one that is unmaintainable.

And so on, and so on.

Whenever I see a new system cropping up in a field that I am familiar with, I evaluate it based on the tradeoffs that it must have made. And that is why I tend to be suspicious of the claims made about the new tool around the block, whatever that tool is at any given week.