Jeremy Miller’s post, “Would I use RavenDB again”, has been making the rounds. It is a good post, and multiple people have asked me to comment on it.

I wanted to comment very briefly on some of the issues that were brought up:

- Memory consumption – this is probably mostly related to long-lived session usage; we expect sessions to be much shorter lived.

- The 2nd level cache is mostly there to speed things up when you have relatively small documents. If you have very large documents, or routinely have requests that return many documents, the cache can become a memory hog. That said, the 2nd level cache is limited to 2,048 items by default, so that shouldn’t really be a big issue. And you can change that limit (or even turn the cache off) with ease.

- Don’t abstract RavenDB too much – yeah, that pretty much has been our recommendation for a while.

- I don’t see this as a problem. You have just the same issue if you are using any OR/M against an RDBMS.

- Bulk Insert – the issue has already been fixed. In fact, IIRC, it was fixed within a day or two of the issue being brought up.

- Eventual Consistency – Yes, you need to decide how to handle that. As Jeremy said, there are several ways of handling that, from using natural keys, which are loaded by id and so have no query latency associated with them, to calling WaitForNonStaleResultsAsOfNow().
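
To make the eventual consistency trade-off concrete, here is a toy model in Python. It is not the RavenDB API and not how RavenDB is implemented: `ToyStore`, `run_indexer`, `query_by_city` and the `wait_for_non_stale` flag are all hypothetical names made up for this sketch. The only assumption it encodes is the one from the bullet above: a load by key reads the document directly, while a query goes through an index that catches up some time after the write.

```python
class ToyStore:
    """Toy document store (hypothetical): documents update at once, the index lags."""

    def __init__(self):
        self.docs = {}      # key -> document, written synchronously
        self.index = {}     # city -> set of keys, updated only by the indexer
        self.pending = []   # keys written but not yet indexed

    def put(self, key, doc):
        self.docs[key] = doc
        self.pending.append(key)

    def load(self, key):
        # Load by key never touches the index, so it cannot be stale.
        return self.docs.get(key)

    def run_indexer(self):
        # Stand-in for background indexing catching up with recent writes.
        for key in self.pending:
            for keys in self.index.values():
                keys.discard(key)  # drop any old entry for this document
            self.index.setdefault(self.docs[key]["city"], set()).add(key)
        self.pending.clear()

    def query_by_city(self, city, wait_for_non_stale=False):
        # Returns (results, is_stale). Waiting is modeled by simply running
        # the indexer first; a real server would block until it caught up.
        if wait_for_non_stale:
            self.run_indexer()
        keys = sorted(self.index.get(city, ()))
        return [self.docs[k] for k in keys], bool(self.pending)


store = ToyStore()
store.put("users/1", {"name": "Alice", "city": "Austin"})

by_key = store.load("users/1")
stale_results, is_stale = store.query_by_city("Austin")
fresh_results, still_stale = store.query_by_city("Austin", wait_for_non_stale=True)
```

In this sketch the load by key sees the new document immediately, the plain query returns nothing and reports itself as stale, and the waiting query pays the indexing cost up front in exchange for a complete answer. Which of those you want is the decision the bullet is talking about.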

Truthfully, the thing that really caught my eye wasn’t Jeremy’s post, but one of the comments:

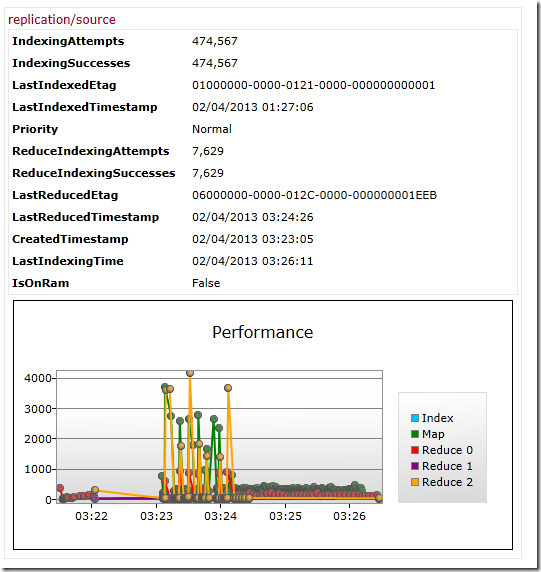

Thank you, we spend a lot of time on that!