Reading the comments in this Reddit thread gave me a bit of a pause. Mostly because of the level of knowledge about what I consider basic stuff. Then again, I can’t do any UI that doesn’t involve the <table/> tag, so I guess that make sense. So I decided to do something about this lack of knowledge. In this series, I’m going to go over just those kind of details that I consider essential to understand how databases actually work.

Imagine that you had a program that needed to persist some state. Let us say that we need to keep track of users and how often they log into the system. You would typically use your database of choice to do so, and you’ll be flying away in no time.

But for this series, we are assuming that no such software exist, so we have to roll our own persistence solution. The simplest solution for this that is to simply store the data as a CSV file. Here is what we end up doing:

Now, this has several advantages, simplicity being a key one, but it also suffer from pretty much every possible disadvantage.

Let us consider the following simple scenarios:

- I want to update the last login date

- I want to update the user name

- I want to search by user name

- I want to get records sorted by last login date

- I want to add a record

- I want to delete a record

Oh, and I want to do all of the above when I have a million records.

CSV is a great thing, if the number of records we have is small. To be fair, on modern hardware, a million records is small, but we’ll ignore this for reasons that will be explained in the following posts.

Let us look at how the file actually looks like, shall we?

Now, if we want to update the last login date for Oren, that is pretty easy. We can do that using the following sequence of operations:

I’m intentionally using C API here, because that is what actually is going on, and we need to understand and work with that.

Notice a few things that are going on in here. We open the file, seek to the date’s position on the first data row, and then we update the date with a value that has the same size. Finally, we close the file.

Are mission has met success! Let all retire somewhere for breakfast (I’m writing this at 5:20 AM after a “night” that had no darkness while being in NDC Oslo. Looking out the window I see clear skies, great visibility and absolutely no people. It feels like the opening scene in a Zombie movie.

Anyway, before breakfast can commence, let us try to update the user name. Arava, while being an awesome German Shepherdess, has chosen a bad username. No matter what I call her when she chew a tennis ball in the bed again. Let us see what we’ll need to update her username to the more neutral “dog”.

Now, you might notice that I’m somewhat cheating here. The problem is that the size of the values are different, but there is no real way for us to just cut some part of the file, so I’m abusing the fact that the CSV parser will ignore any whitespace at the beginning of the value to “trim” the value.

But what happen if I didn’t want to shorten the username? What if I wanted the username to be shepherdess? Well, in this case, I wouldn’t be able to do that. I don’t have enough space, and if I tried, I would overwrite the next record, and probably corrupt the whole file.

Bummer.

Typically, at this point we have to abandon the simplicity of a CSV file and move over to a slightly better format. In this case, we can go with fixed size records. In other words, we’ll define the maximum size for each field. Here is what this looks like:

This format is harder for humans to generate, because if we do this manually we have to count spaces, etc. But this is actually much easier for us to work with. We have the following format:

- Full Name – Max 16 chars

- User Name – Max 12 chars

- Last Login – Exactly 19 chars

Computing where to write the last login date for a particular record is now simply the following math:

(16 + 12 + 19 + 2) * NumberOfRecords + 16 + 12 + 42

42 is the length in bytes of the two first rows. And the +2 is for the line terminator.

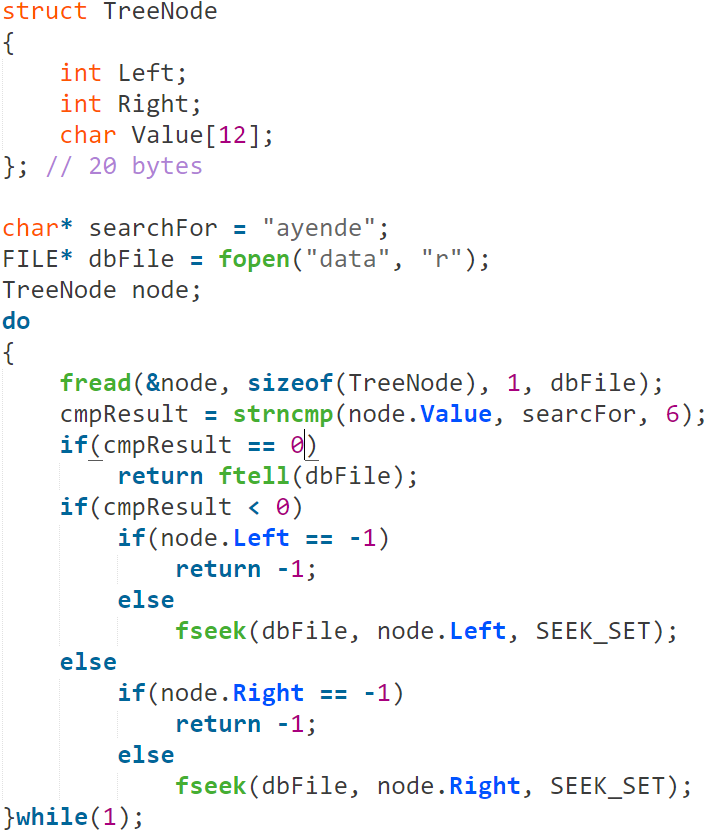

So far so good, now we have a file format, and the ability to work with it. Which is great, and even quite convenient for us. But it isn’t really suitable for doing anything but full scans of the file. Here is the logic for searching a record by username:

By the way, I’m doing this in C also because it is fun, it has been a while since I did C, so it might be wrong.

As you can see, the cost of actually doing any sort of operation in this format is simple, O(N). That isn’t going to work for us.

In the next post in this series, I’m going to talk about indexes, and how they work. After that, we will start talking about the actual on disk format, and what it is impacted by.