It has been two months since the first release candidate of RavenDB 4.0 and the team has been hard at work. Looking at the issues resolved in that time frame, there are over 500 of them, and I couldn’t be happier about the result.

RavenDB 4.0 RC2 is out: Get it here (Windows, Linux, OSX, Raspberry PI, Docker).

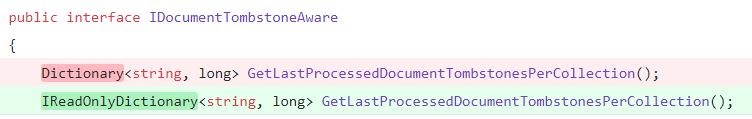

When we were going through the list of issues for this release, I noticed something really encouraging. The vast majority of them were things that would never make it into the release highlights. These are the kind of issues that are all about spit and polish. Anything from giving better error messages to improving the first few minutes of your setup and installation to just getting things done. This is a really good thing at this stage in the release cycle. We are done with features and big ticket stuff. Now it is time to finish grinding through the myriad details and small fixes that make a product really shine.

That said, there is still a bunch of really cool stuff that was cooking for a long time and that we can only now really call complete. This list includes:

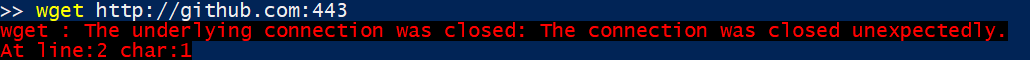

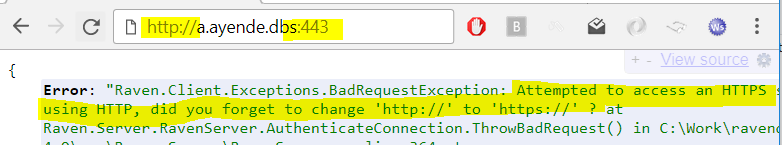

- Authentication and authorization – the foundation for that was laid a long time ago, with X509 client certificates used for authenticating clients against RavenDB 4.0 servers. The past few months had us building the user interface to manage these certificates, define permissions and access across the cluster.

- Facet and MoreLikeThis queries – this is a feature that was available in RavenDB for quite some time and is now available as an integral part of the RavenDB Query Language. I’m going to have separate posts to discuss these, but they are pretty cool, albeit specialized, ways to look at your data.

- RQL improvements – we made RQL a lot smarter, allowing more complex queries and projections. Spatial support has been improved and is now much easier to work with and reason about using just raw RQL queries.

- Server dashboard – allows you to see exactly what your servers are doing and is meant to be something that the ops team can just hang on the wall and stare at in amazement, realizing how much the database can do.

- Operations – the operations team generally has a lot of new things to look at in this release. SNMP monitoring is back, and a significant amount of work was spent on errors. That is, making sure that an admin will have clear and easy to understand errors and a path to fix them. Traffic monitoring and live tracing of logs are also available directly in the studio now. CSV import / export is also available in the studio, as well as Excel integration for the business people. Automatic backup processes are also available now for scheduled backups to both local and cloud targets, and an admin has more options to control the database. This includes compaction of databases after large deletes to restore space to the system.

- Patching, querying and expiring UI – this was mostly exposing existing functionality and improving the amount of detail that we provide by default. Users can now define an auto expiration policy for documents with a time to live. On the querying side, we are showing a lot more information. My favorite feature there is that the studio can now show the result of including documents, which makes it easy to show how this feature can save you network roundtrips. Queries & patching now have a much, much nicer UI and also support some really cool intellisense.

- Performance – most of the performance work was already done, but we were able to identify some bottlenecks on the client side and significantly reduce the amount of work it takes to save data to the database. This especially affects bulk insert operations, but the effect is actually widespread enough to impact most of the client operations.

- Advanced Linq support – a lot of work has been put into the Linq provider (again) to enable more advanced scenarios and more complex queries.

- ETL processes – these are now exposed and allow you to define both RavenDB and SQL databases as targets for automatic ETL from a RavenDB instance.

- Cluster wide atomic operations – dubbed cmpxchg after the similar assembly instruction, this basic building block allows you to build very complex behaviors in a distributed environment without any hassle, relying on RavenDB consensus to verify that such operations are truly atomic.

- Identity support – identities are now fully supported in the client and operate as a cluster wide operation. This means that you can rely on them being unique cluster wide.
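To make the cluster wide atomic operations item above a bit more concrete, here is a minimal sketch of compare exchange semantics. This is not the RavenDB client API. `CompareExchangeStore`, `put`, `get` and `reserve_username` are hypothetical names, and a local lock stands in for the cluster consensus that a real deployment would rely on. The idea is simply that a write succeeds only if the caller's expected index matches the current one.

```python
import threading


class CompareExchangeStore:
    """Toy, single-process stand-in for a cluster wide compare exchange store.

    Hypothetical illustration only: a lock plays the role that consensus
    plays in a real cluster.
    """

    def __init__(self):
        self._lock = threading.Lock()
        self._items = {}  # key -> (index, value)

    def get(self, key):
        """Return (index, value); index 0 means the key does not exist."""
        with self._lock:
            return self._items.get(key, (0, None))

    def put(self, key, value, expected_index):
        """Store value only if the key's current index equals expected_index.

        Returns (succeeded, current_index).
        """
        with self._lock:
            current_index, _ = self._items.get(key, (0, None))
            if current_index != expected_index:
                # Someone else changed (or created) the key first.
                return False, current_index
            new_index = current_index + 1
            self._items[key] = (new_index, value)
            return True, new_index


def reserve_username(store, username, user_id):
    """Claim a unique username; expected_index=0 means 'create only if absent'."""
    succeeded, _ = store.put("usernames/" + username, user_id, expected_index=0)
    return succeeded
```

With this sketch, the first caller to reserve a given username wins and every later attempt for the same name is rejected, which is the kind of uniqueness guarantee that is otherwise hard to get in a distributed environment.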

Users provided really valuable feedback, finding a lot of pitfalls and stuff that didn’t make sense or flow properly. And that was a lot of help in reducing friction and getting things flowing smoothly.

There is another major feature that we worked on during this time, the setup process. And it may sound silly, but this is probably the one that I’m most excited about in this release. Excited enough that I’ll have a whole separate post for it, coming soon.

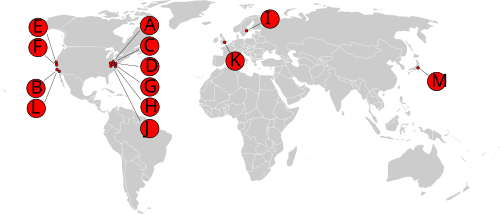

DNS is used to resolve a hostname to an IP. This is something that most developers already know. What is not widely known, or at least not talked about so much, is the structure of the DNS network. To the right you can find the map of root servers, at least from a historical point of view, but I’ll get to it.
