Late last year I talked about our first thoughts on implementing encryption in RavenDB 4.0. I got some really good feedback, and that led to this post, detailing the initial design for RavenDB encryption in 4.0. I’m happy to announce that we are now pretty much done implementing, testing and banging on this, and we have working full database encryption in 4.0. This post will discuss how we implemented it, along with additional considerations regarding management and working with encrypted databases and clusters.

RavenDB uses the ChaCha20Poly1305 authenticated encryption scheme, with 256-bit keys. Each database has a master key, which on Windows is kept encrypted via DPAPI (on Linux we are still figuring out the best thing to do there), and we encrypt each page (or range of pages, if a value takes more than a single page) using a key derived from the master key and the page number. We also use a random 64-bit nonce for each page the first time it is encrypted, and then increment the nonce every time we need to encrypt the page again.

We are using libsodium as the encryption library, and in general it makes it a pleasure to work with encryption, since it is very focused on getting things done and getting them done right. The number of decisions that we had to make by using it is minimal (which is good). The pattern of initial random generation of the nonce and then incrementing on each use is the recommended method for using ChaCha20Poly1305. The WAL is also encrypted on a per-transaction basis, with a random nonce and a key derived from the master key and the transaction id.
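To make the key and nonce handling a bit more concrete, here is a minimal sketch in Python using only the standard library. It is not RavenDB's code. I'm assuming a keyed BLAKE2b hash as the derivation function (the post does not say which one is used), and the names `derive_page_key`, `first_nonce`, `next_nonce` and the `page-key` label are made up for illustration. The actual ChaCha20Poly1305 encryption step is left out.

```python
import hashlib
import secrets

KEY_BYTES = 32    # 256-bit keys, as described above
NONCE_BYTES = 8   # 64-bit nonce, as described above


def derive_page_key(master_key: bytes, page_number: int) -> bytes:
    """Derive a per-page key from the master key and the page number.

    Assumption: keyed BLAKE2b with the page number as the salt. This is
    an illustrative choice, not necessarily what RavenDB does.
    """
    return hashlib.blake2b(
        digest_size=KEY_BYTES,
        key=master_key,
        salt=page_number.to_bytes(8, "little"),
        person=b"page-key",  # hypothetical context label
    ).digest()


def first_nonce() -> bytes:
    """Random nonce, used the first time a page is encrypted."""
    return secrets.token_bytes(NONCE_BYTES)


def next_nonce(nonce: bytes) -> bytes:
    """Increment the nonce (little-endian counter) for each re-encryption."""
    counter = (int.from_bytes(nonce, "little") + 1) % (1 << (8 * NONCE_BYTES))
    return counter.to_bytes(NONCE_BYTES, "little")
```

The point of the increment is that the same (key, nonce) pair is never reused for a given page, while the random starting point means different pages start from unrelated nonces.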

This encryption is done at the lowest possible level, so it is actually below pretty much anything in RavenDB itself. When a transaction needs to access some data, it is decrypted on the fly for it, and then it is available for the duration of the transaction. When the transaction is closed, we’ll encrypt all modified data again, then wipe all the buffers we used to ensure that there is no leakage. The only time we have decrypted data in memory is during the lifetime of a transaction.
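The transaction lifecycle described above can be sketched roughly like this. This is a toy model, not how Voron is implemented: the `Transaction` class, the dictionary used as the page store, and the `encrypt` / `decrypt` callables are all hypothetical, and the cipher is supplied by the caller. Also note that Python cannot really guarantee that no plaintext copies linger in memory, so the wiping here only illustrates the idea.

```python
from typing import Callable, Dict, Set

# (page_number, data) -> data; supplied by the caller (hypothetical signature)
Cipher = Callable[[int, bytes], bytes]


class Transaction:
    """Decrypt pages on first access, re-encrypt modified pages on close."""

    def __init__(self, store: Dict[int, bytes], encrypt: Cipher, decrypt: Cipher):
        self._store = store                      # encrypted pages at rest
        self._encrypt = encrypt
        self._decrypt = decrypt
        self._buffers: Dict[int, bytearray] = {}  # decrypted pages, tx-local
        self._dirty: Set[int] = set()

    def read(self, page: int) -> bytearray:
        # Decrypt on the fly, then keep the plaintext for the rest of the tx.
        if page not in self._buffers:
            self._buffers[page] = bytearray(self._decrypt(page, self._store[page]))
        return self._buffers[page]

    def write(self, page: int, data: bytes) -> None:
        old = self._buffers.get(page)
        if old is not None:
            old[:] = bytes(len(old))  # wipe the buffer we are replacing
        self._buffers[page] = bytearray(data)
        self._dirty.add(page)

    def close(self) -> None:
        try:
            # Only modified pages need to be encrypted again.
            for page in self._dirty:
                self._store[page] = self._encrypt(page, self._buffers[page])
        finally:
            # Overwrite every plaintext buffer with zeros before dropping it.
            for buf in self._buffers.values():
                buf[:] = bytes(len(buf))
            self._buffers.clear()
            self._dirty.clear()
```

Because the buffers are wiped in a `finally` block, the plaintext is cleared even if re-encrypting one of the pages fails.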

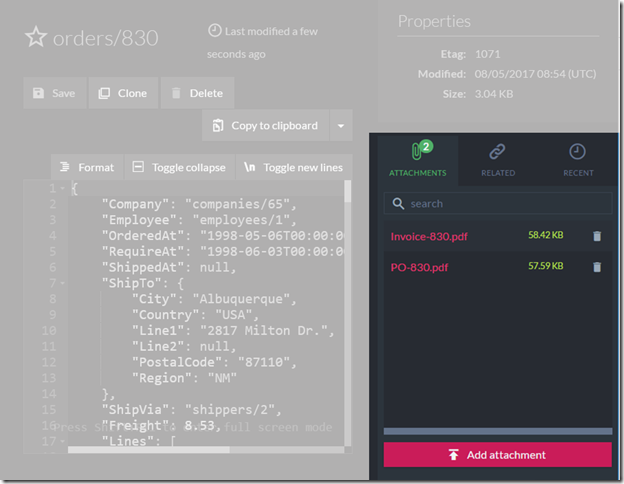

A really nice benefit of working at such a low level is that all the features of the database are automatically included. That means that our Lucene on Voron implementation, for example, is also part of this, and all indexes are encrypted as well, without having to take any special steps.

An encrypted database does pose some challenges. Not so much for the database author (that’s me) as for the database users (that’s you). If you take a backup of an encrypted database, restoring it pretty much requires that you have the master key to actually access the data. When you are running in a cluster, that represents another challenge, since you need to make sure that all the nodes in the cluster running the database are encrypting it. Communication about the database should also be encrypted. Tasks such as periodic backup / export / etc. also need special treatment. Key generation is also important; you want to make sure that the key isn’t “123456” or some such.

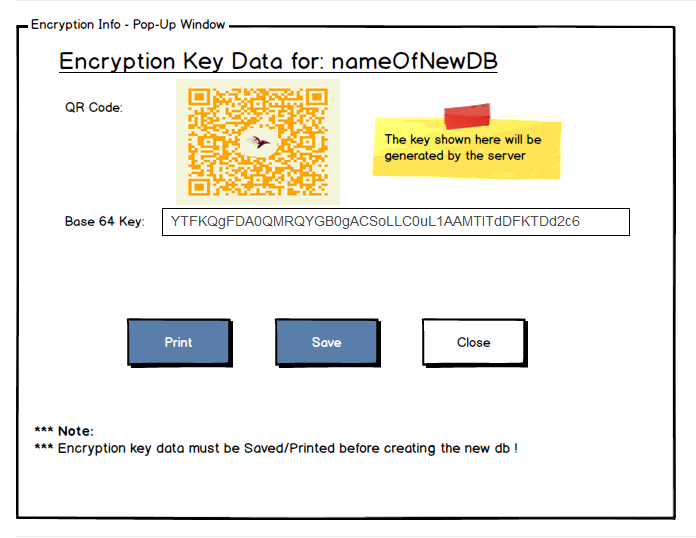

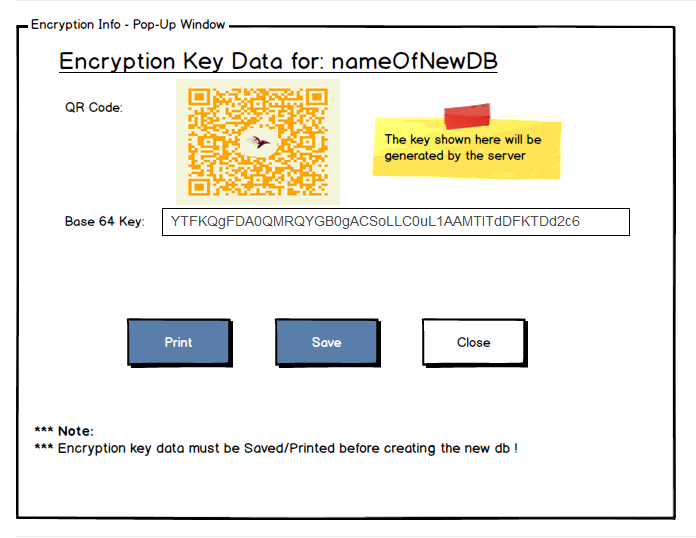

Here is what we came up with. When you create a new encrypted database, you’ll be presented with the following UI:

The idea is that you can have the server generate the key for you (a secure, cryptographically random 256-bit key), and then we’ll show it to you and allow you to save it / print it / record it for later. This is the only chance you’ll have to get the key from the server.
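As a rough illustration of what "cryptographically random 256 bits" means in practice, here is a small Python sketch. The function names and the choice of Base64 for showing the key are my assumptions for the example; the post does not specify how the key is presented in the UI.

```python
import base64
import secrets

KEY_BYTES = 32  # 256 bits


def generate_master_key() -> bytes:
    """Generate a key from the operating system's CSPRNG."""
    return secrets.token_bytes(KEY_BYTES)


def key_for_display(key: bytes) -> str:
    """Encode the key as text so it can be saved or printed (Base64 assumed)."""
    return base64.b64encode(key).decode("ascii")


def key_from_display(text: str) -> bytes:
    """Parse a key the user typed back in, rejecting anything not 256 bits."""
    key = base64.b64decode(text, validate=True)
    if len(key) != KEY_BYTES:
        raise ValueError("expected a 256-bit key")
    return key
```

The length check is what stops something like "123456" from being accepted as a key.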

After you have the key, you then select which nodes (and how many) this database is going to run on, which will cause it to create the master key on all of those nodes for that particular database. In this manner, all the nodes for this database will use the same key, which simplifies some operational tasks (recovery / backup / restore / etc.). Alternatively, you can create the database on a single node, and then add additional nodes to it, which will give you the option to generate / provide a key. I’m not sure whether ops will be happy with either option, but we have the ability to either have all of them use the same key or have a separate key for each node.

Encrypted databases can only reside on nodes that communicate with each other via HTTPS / SSL. The idea is that if you are running an encrypted database, requests between the different nodes should also be private, so you’ll have protection for data at rest and as the data is being moved around. Note that we verify that at the sender, not the origin side. Primarily, this is about typical deployment patterns. We expect to see users running RavenDB behind firewalls / proxies / load balancers, and we expect people to use nginx as the SSL endpoint and then talk HTTP to RavenDB (which is reasonable if they are on the same machine or using Unix sockets). Regardless, when sending data over the wire, we are always talking RavenDB instance to RavenDB instance via a TCP connection, and for encrypted databases, this will require an SSL connection to work.
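The sender-side check could look something like the following Python sketch. This is a simplification under my own assumptions: I'm treating each destination node as a URL and only looking at its scheme, and the function names are hypothetical. The real check happens on RavenDB's own TCP connections between instances.

```python
from typing import Iterable, List
from urllib.parse import urlsplit


def insecure_nodes(node_urls: Iterable[str]) -> List[str]:
    """Return the destination URLs that are not using HTTPS."""
    return [url for url in node_urls if urlsplit(url).scheme.lower() != "https"]


def can_send_encrypted_database(node_urls: Iterable[str]) -> bool:
    """The sender refuses unless every destination node is reached over HTTPS."""
    urls = list(node_urls)
    return len(urls) > 0 and not insecure_nodes(urls)
```

Doing the check on the sending side means it still works when the receiving node sits behind a proxy that terminates SSL and never sees an HTTPS request itself.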

Other things, such as ETL tasks, will generate a suggestion to the user to ensure that they are also secured. We assume here that this is an explicit operation to move some of the data to another location, possibly filtering it, so we’ll not block it if it isn’t using HTTPS (leaving aside the fact that just figuring out whether the connection to a relational database is using SSL or not is too complex to try). Instead, we’ll warn about it and trust the user.

Finally, we have the issue of backups and exports. Those can be done on a regular basis, and frequently you’ll want them to be done to a remote location (cloud, S3, Dropbox, etc). In that case, all automated backup processes will require you to generate a public / private key pair (and only retain the public key, obviously). From then on, all the backups will be encrypted using the public key and uploaded to the cloud. That means that even if your cloud account is hacked, the hackers can’t do anything with the data, since they are missing the private key.

Again, to encourage users to actually do the right thing and save the private key, we’ll offer it in a form that is easily maintained safely. So you’ll be asked to print it and store it in some file folder somewhere (in addition to whatever digital backups you have), so you can restore it at a later point when / if you need it.

For manual operations, such as exporting an encrypted database, we’ll warn if you are trying to export without a key, but allow it (since forcing a key for a manual operation does nothing for security). Conversely, exporting a non-encrypted database will also allow you to provide a key pair and encrypt it, both for manual operations and automatic backup configurations.

Aside from those considerations, you can pretty much treat an encrypted database as a regular one (except, of course, that the data is encrypted at all times unless actively accessed). That means that all features would just work. The cost of actually encrypting and decrypting all the time is another concern, and we have seen about 60% additional cost in write speed and about 15% extra cost for reading.

Just to give you some idea about the performance we are talking about… Ingesting the entire Stack Overflow dataset, some 52GB in size and over 18 million documents, can be done in 22 minutes with encryption. Without encryption we are faster, roughly 13 and a half minutes on that same machine, but that still gives us a rate of roughly 2.4 GB per minute with encryption.
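If you want to check the arithmetic behind those numbers, here is a tiny Python sketch. It only uses the figures quoted above (52GB, 22 minutes, 13.5 minutes); the function names are mine.

```python
DATASET_GB = 52.0
ENCRYPTED_MINUTES = 22.0
PLAIN_MINUTES = 13.5


def throughput_gb_per_min(size_gb: float, minutes: float) -> float:
    """Ingest rate in GB per minute."""
    return size_gb / minutes


def overhead_pct(encrypted_minutes: float, plain_minutes: float) -> float:
    """Extra wall-clock time with encryption, as a percentage of the plain run."""
    return (encrypted_minutes - plain_minutes) / plain_minutes * 100.0


if __name__ == "__main__":
    print(f"encrypted: {throughput_gb_per_min(DATASET_GB, ENCRYPTED_MINUTES):.2f} GB/min")
    print(f"plain:     {throughput_gb_per_min(DATASET_GB, PLAIN_MINUTES):.2f} GB/min")
    print(f"overhead:  {overhead_pct(ENCRYPTED_MINUTES, PLAIN_MINUTES):.0f}%")
```

The overhead computed this way lands in the same ballpark as the roughly 60% write cost mentioned earlier.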

As you can tell from the mockup, while we have completed most of the encryption work around the engine, the actual UI and behavioral semantics are still in a bit of flux. Your comments about those are welcome.

We are testing RavenDB on a wide variety of software and hardware, and a few weeks ago one of our guys came to me with grave concern. We had a major regression in performance on Linux. And major as in 75% slower than what it used to be a few weeks ago.

![image[6] image[6]](https://ayende.com/blog/Images/Open-Live-Writer/A-financial_9E1B/image[6]_thumb.png)

When building a distributed system, one of the more interesting aspects is how you are going to distribute tasks assignment. In other words, given that you have multiple nodes, how do you decide which node will do what? In some cases, that is relatively easy, you can say “all nodes will process read requests”, but in others, this is more complex. Let us take the case where you have several nodes, and you need to have a regular backup of a database that is replicated between all those nodes. You probably don’t want to run the backup across all the nodes, after all, they are pretty much the same and you don’t want to backup the exact same thing multiple times. On the other hand, you probably don’t want to assign this work statically, if you do, and if the node that is responsible for the backup is down, you got no backup.