I am not sure what to call this issue, except maddening. For a simple repro, you can check this GitHub repository.

The story is quite simple. Let us assume that you need to send a set of values from the server to the client. For example, they might be tick values, or updates, or anything of that sort.

You can do this by keeping an HTTP connection open and sending data periodically. This is a well-known technique and it works quite well. Except in Silverlight, where it works, but only if you put the appropriate Thread.Sleep() in crucial places.

Here is an example of the server behavior:

    var listener = new HttpListener
    {
        Prefixes = {"http://+:8080/"}
    };
    listener.Start();
    while (true)
    {
        var ctx = listener.GetContext();
        using (var writer = new StreamWriter(ctx.Response.OutputStream))
        {
            writer.WriteLine("first\r\nsecond");
            writer.Flush();
        }
        Console.ReadKey();
    }

In this case, note that we explicitly flush the response and then just wait. If you look at the actual network traffic, you can see that the data really is sent, the connection remains open, and we can send additional data as well.

But how do you consume such a thing in Silverlight?

    var webRequest = (HttpWebRequest)WebRequestCreator.ClientHttp.Create(new Uri("http://localhost:8080/"));
    webRequest.AllowReadStreamBuffering = false;
    webRequest.Method = "GET";
    Task.Factory.FromAsync<WebResponse>(webRequest.BeginGetResponse, webRequest.EndGetResponse, null)
        .ContinueWith(task =>
        {
            var responseStream = task.Result.GetResponseStream();
            ReadAsync(responseStream);
        });

We start by making sure that we disable read buffering, then we get the response and start reading from it. The read method is a bit complex, because it has to deal with partial responses, but it should still be fairly obvious what is going on:

    byte[] buffer = new byte[128];
    private int posInBuffer;
    private void ReadAsync(Stream responseStream)
    {
        Task.Factory.FromAsync<int>(
            (callback, o) => responseStream.BeginRead(buffer, posInBuffer, buffer.Length - posInBuffer, callback, o),
            responseStream.EndRead, null)
            .ContinueWith(task =>
            {
                var read = task.Result;
                if (read == 0)
                    throw new EndOfStreamException();
                // find \r\n in newly read range
                var startPos = 0;
                byte prev = 0;
                bool foundLines = false;
                for (int i = posInBuffer; i < posInBuffer + read; i++)
                {
                    if (prev == '\r' && buffer[i] == '\n')
                    {
                        foundLines = true;
                        // yeah, we found a line, let us give it to the users
                        var data = Encoding.UTF8.GetString(buffer, startPos, i - 1 - startPos);
                        startPos = i + 1;
                        Dispatcher.BeginInvoke(() =>
                        {
                            ServerResults.Text += data + Environment.NewLine;
                        });
                    }
                    prev = buffer[i];
                }
                posInBuffer += read;
                if (startPos >= posInBuffer) // read to end
                {
                    posInBuffer = 0;
                    return;
                }
                if (foundLines == false)
                    return;
                // move remaining to the start of buffer, then reset
                Array.Copy(buffer, startPos, buffer, 0, posInBuffer - startPos);
                posInBuffer -= startPos;
            })
            .ContinueWith(task =>
            {
                if (task.IsFaulted)
                    return;
                ReadAsync(responseStream);
            });
    }

While I am sure that you could find bugs in this code, that isn’t the crucial point.
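One such bug, for what it is worth: `prev` starts at 0 on every read, so a `\r\n` pair that happens to be split across two reads is never recognized. The following is only a rough sketch of how the line splitting could be pulled out of the read loop and made tolerant of that case. `CrLfLineSplitter` and `Feed` are hypothetical names that are not part of the repro, and this does nothing about the missing data described in the rest of this post.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

// Hypothetical helper (not part of the original repro): accumulates raw bytes
// and returns each complete CRLF-terminated line, keeping any partial line
// around for the next call.
public sealed class CrLfLineSplitter
{
    // Bytes of the line that has not been terminated yet.
    private readonly List<byte> pending = new List<byte>();

    // Feed the bytes produced by a single read; returns the lines it completed.
    public IList<string> Feed(byte[] chunk, int offset, int count)
    {
        var lines = new List<string>();
        for (int i = offset; i < offset + count; i++)
        {
            byte b = chunk[i];
            pending.Add(b);
            int n = pending.Count;
            // The '\r' may have arrived in an earlier chunk; it is still in 'pending'.
            if (b == (byte)'\n' && n >= 2 && pending[n - 2] == (byte)'\r')
            {
                // Decode the whole line at once, without the trailing "\r\n".
                lines.Add(Encoding.UTF8.GetString(pending.ToArray(), 0, n - 2));
                pending.Clear();
            }
        }
        return lines;
    }
}
```

Inside the read continuation this would be called as `splitter.Feed(buffer, 0, read)`, with every read starting at offset 0, and each returned line handed to `Dispatcher.BeginInvoke` as before. Because a line is only decoded once it is complete, a multi-byte UTF-8 character split across two reads is also handled.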

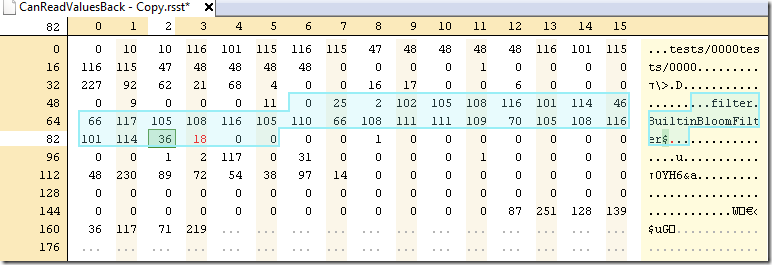

If we run the server and then the SL client, we can see that we get just one lousy byte, and that is it. From what I have read, some versions of some browsers need 4KB of data before the connection gets going. But that isn’t what I observed: I tried sending 4KB+ of data and still saw the exact same behavior. We got called for the first byte, and nothing else.

Eventually, I boiled it down to the following non-working example:

    writer.WriteLine("first");
    writer.Flush();
    writer.WriteLine("second");
    writer.Flush();

Versus this working example:

    writer.WriteLine("first");
    writer.Flush();
    Thread.Sleep(50);
    writer.WriteLine("second");
    writer.Flush();

Yes, you got it right: if I put the Thread.Sleep in the server, I’ll get both values in the client. Without the Thread.Sleep, we get only the first byte. It seems that this isn’t an issue of size, but rather of timing, and I am at an utter loss to explain what is going on.
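If you have to live with this for now, the workaround can at least be kept in one place. This is a minimal sketch, assuming that a pause after every flush is enough. `PacedWriter` and `WriteAll` are hypothetical names, and the 50 ms value in the usage line is simply the one that happened to work in my test, not a number I can justify.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Threading;

// Hypothetical helper (not part of the original repro): packages the
// "flush, then sleep" workaround so it lives in a single place.
public static class PacedWriter
{
    // Writes each value as its own line, then flushes and pauses after each one.
    public static void WriteAll(TextWriter writer, IEnumerable<string> values, TimeSpan pause)
    {
        foreach (var value in values)
        {
            writer.WriteLine(value);
            writer.Flush();
            // Assumption: giving the client some time between flushes is what
            // makes the difference; why that is the case remains unexplained.
            Thread.Sleep(pause);
        }
    }
}
```

On the server it would be used as `PacedWriter.WriteAll(writer, new[] { "first", "second" }, TimeSpan.FromMilliseconds(50));`. It does not explain the behavior, it only hides the sleep.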

Oh, and I have now been awake for 27 hours straight, most of them spent trying to figure out what the )(&#@!)(DASFPOJDA(FYQ@YREQPOIJFDQ#@R(AHFDS:OKJASPIFHDAPSYUDQ)(RE is going on.