I thought that it would be a good idea to take EF Prof for a spin one the sample MVC Music Store. It can illustrate some things about EF Prof and about the sample app.

The first step is to download EF Prof, once that is completed, extract the files, there is no install necessary.

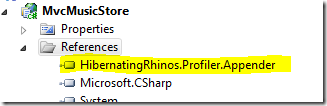

We need to reference the HibernatingRhinos.Profiler.Appender in the application:

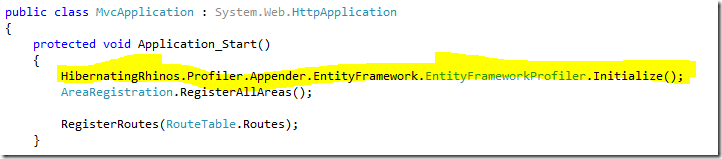

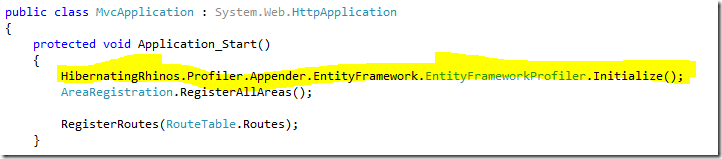

Then, from the Global.asax Application_Start, we initialize the profiler:

That is all the setup that you need to do :-)

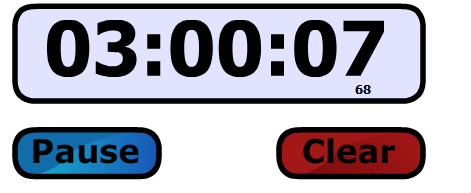

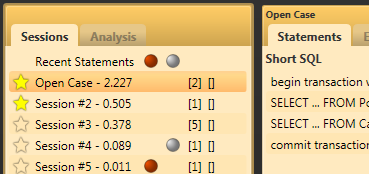

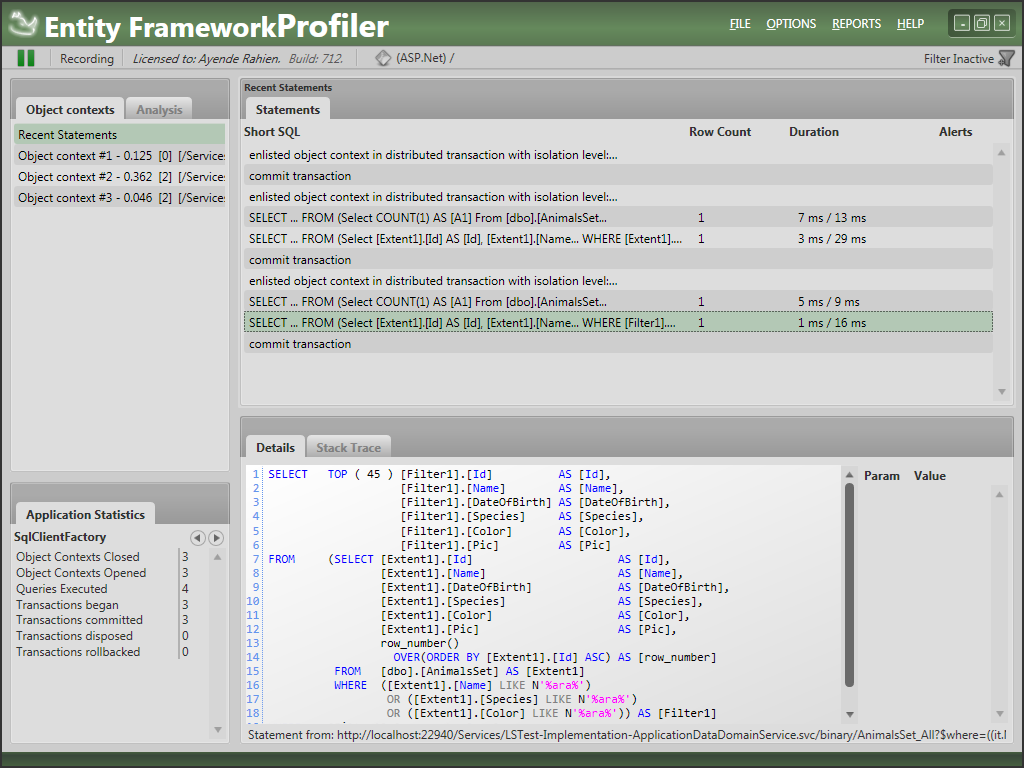

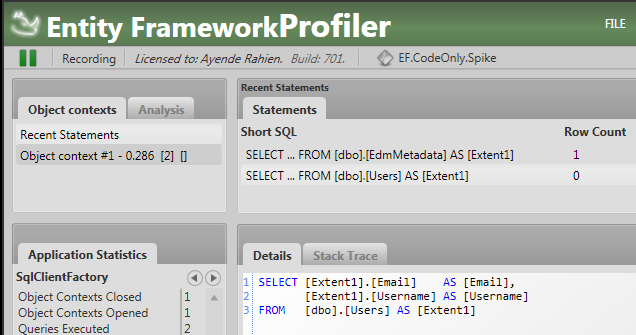

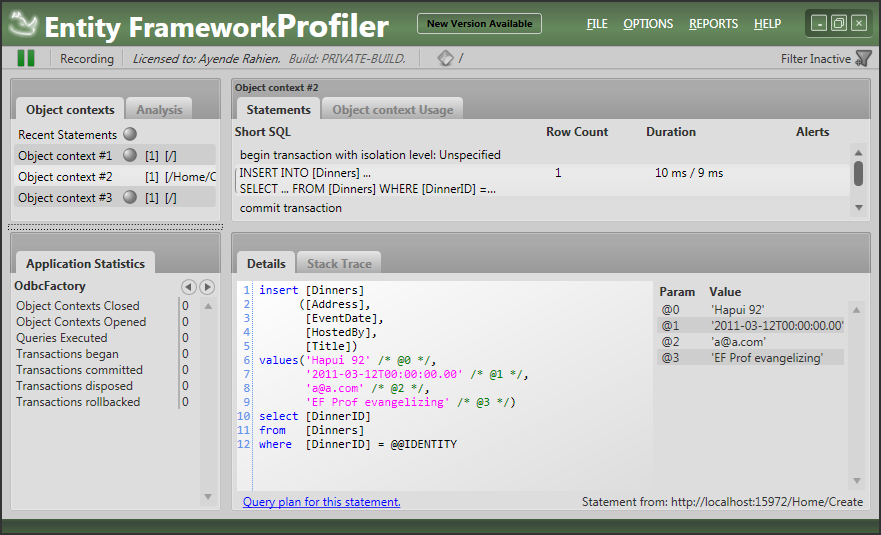

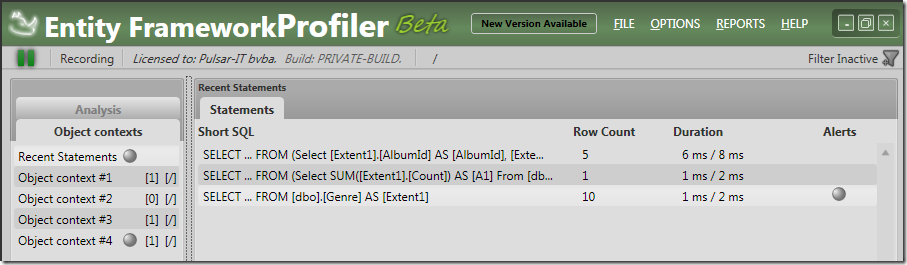

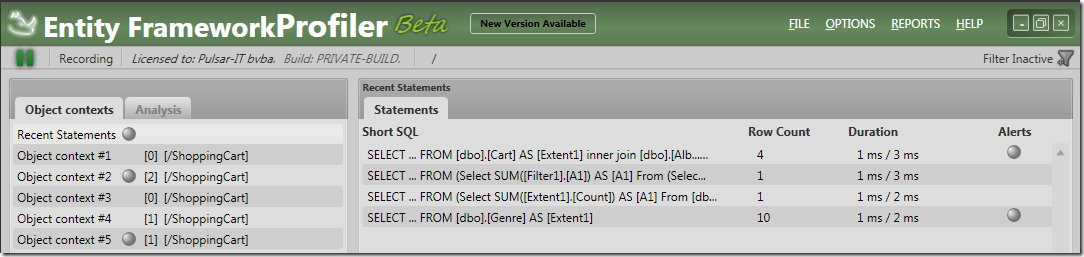

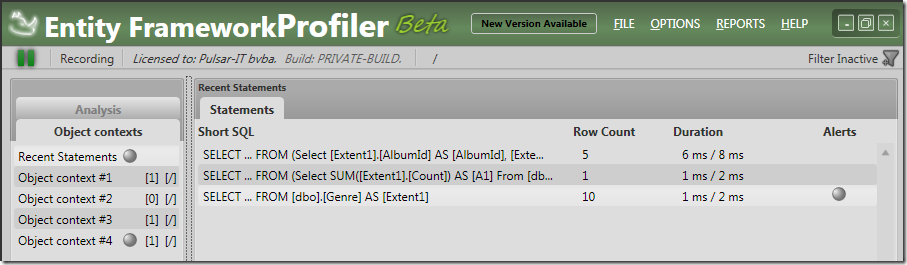

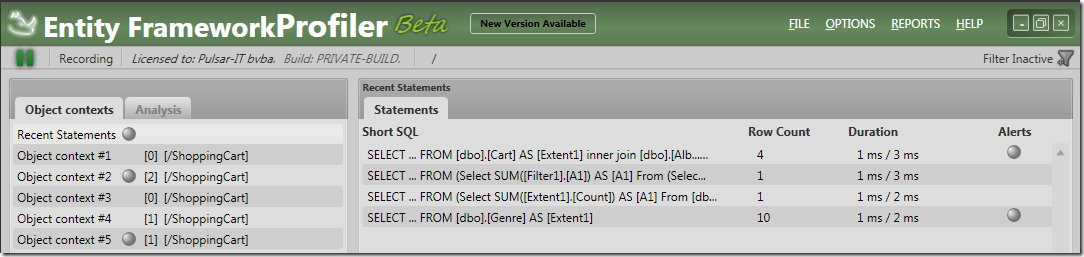

Now, we go to http://localhost:1397/, and we can see the following in EF Prof:

There are several interesting this to see here.

- We executed three queries to load the main page.

- We actually opened four object contexts to handle this request.

- We have a query that generate a warning.

Let us deal with each one in turn:

Multiple queries for the same request. This requires analysis because often we are querying too much. But in this instance there is no problem, we are querying different things and we are doing so efficiently.

Four object contexts to handle a single request is bad. We can see that each query was actually executed through a different object context (and one was idle). There are several problems with having multiple object contexts per request:

- Each object context would open its own connection to the database. I am not sure, but I think that they do so lazily, which means that a single page request resulted in three connections to the database.

- Each object context implements its own unit of work. You might get two different instances that represent the same row in the database.

- You can’t aggregate all your operations into a single SaveChanges() call, you have to make multiple trips to the database.

- You require using System.Transactions and distributed transactions if you want to ensure a transaction boundary around your code.

In short, there are a lot of good reasons to go with request scoped object context, you should do so.

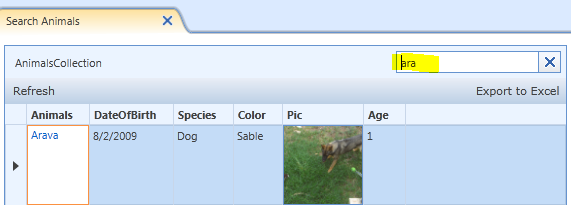

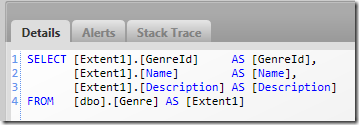

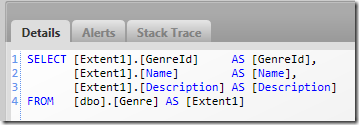

Now, let us look at that query with the warning:

EF Prof generated a warning about unbounded result set for this query. What does this mean? It means that if you had 100,000 genres, this query would attempt to load all of them. I am not an expert on music, but even I think that 100,000 genres are unlikely. The problem with these sort of queries is that it is likely that the number of genres will grow, and not adding a TOP or LIMIT clauses to the query means that you are open to problems when the data does grow.

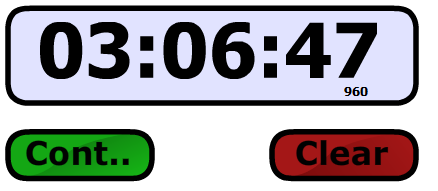

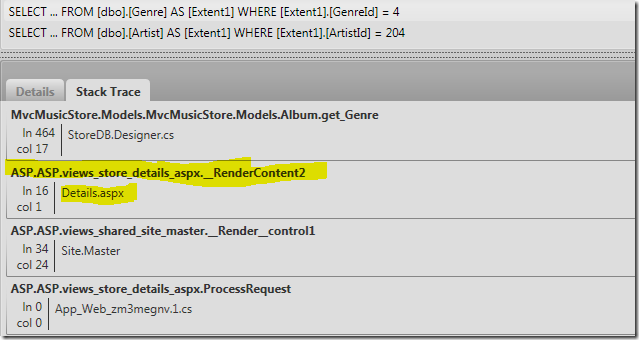

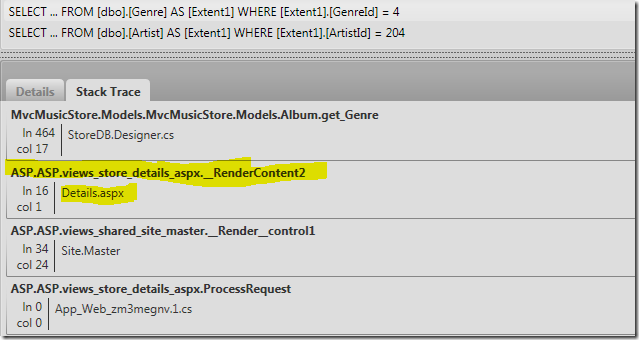

And with that, let us see what happens when we look at a single album (http://127.0.0.1:1397/Store/Details/392):

This is pretty much the same as the before, with 5 queries required to process this required, but let us dig just a tiny bit deeper:

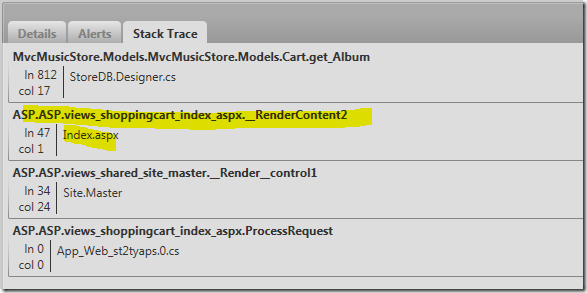

What we see here is that we have queries that are being generated from rendering the views. That usually trips a warning flag with me, because queries that are being generated from the view are likely to cause problems down the road, data access patterns should rarely change because of view changes, and that is usually and indication that at some point, we will have a SELECT N+1 here.

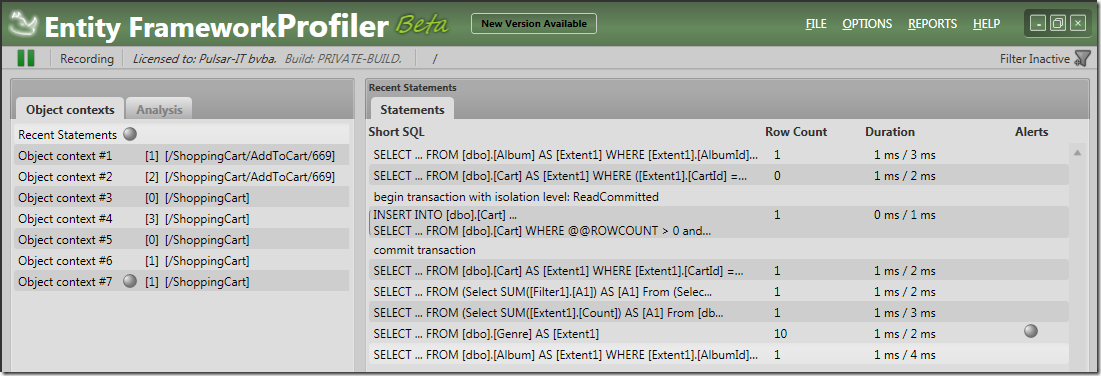

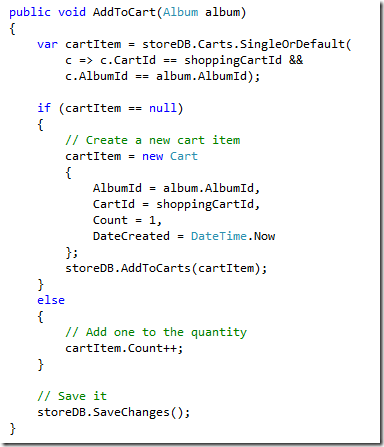

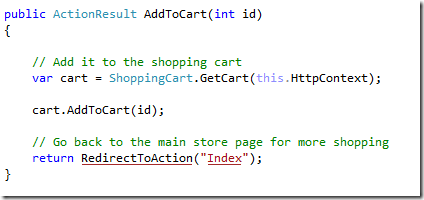

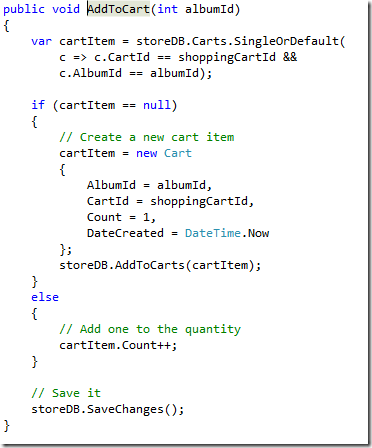

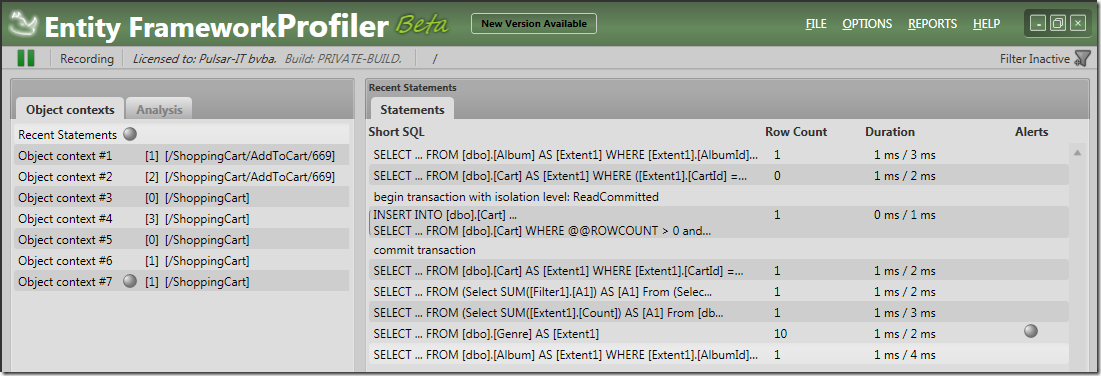

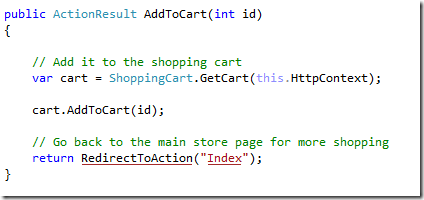

Now, let us try to add a new item to the cart (http://127.0.0.1:1397/ShoppingCart/AddToCart/669):

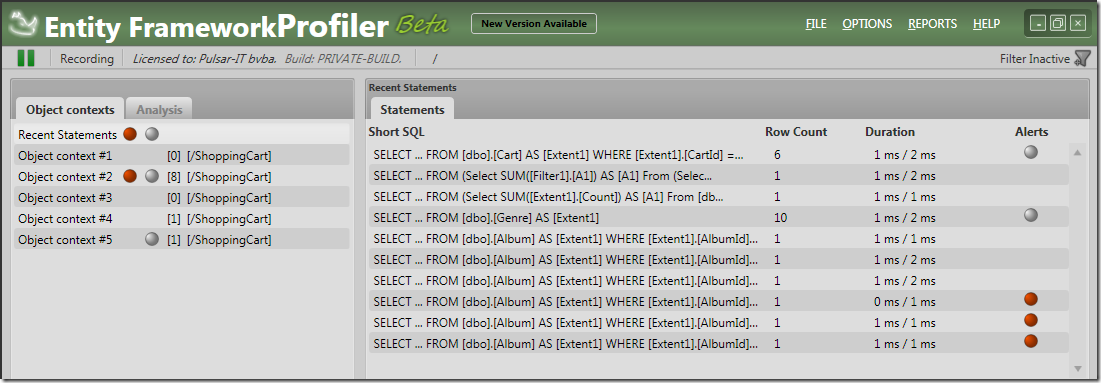

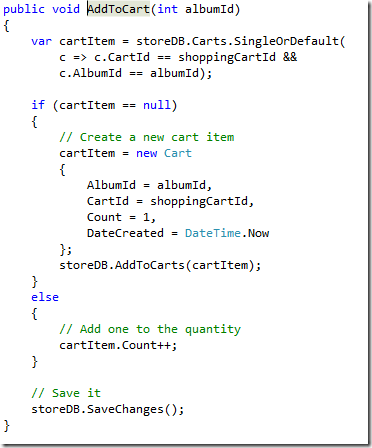

We can see that adding an item to the cart is a two steps process, first, we go to /ShoppingCart/AddToCart/669, then we are redirected to /ShoppingCart. Overall, we require 8 queries in two requests.

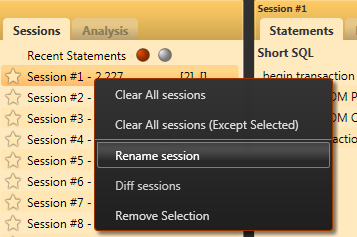

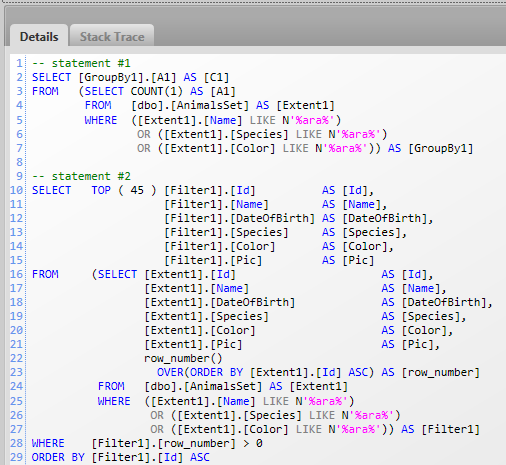

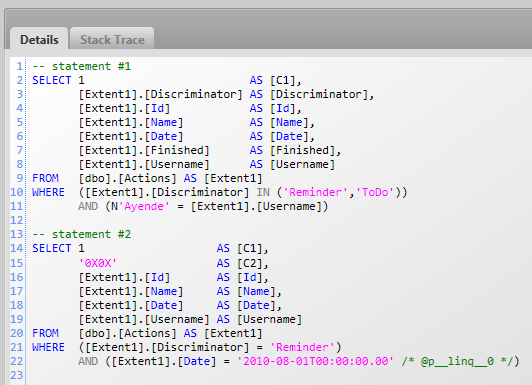

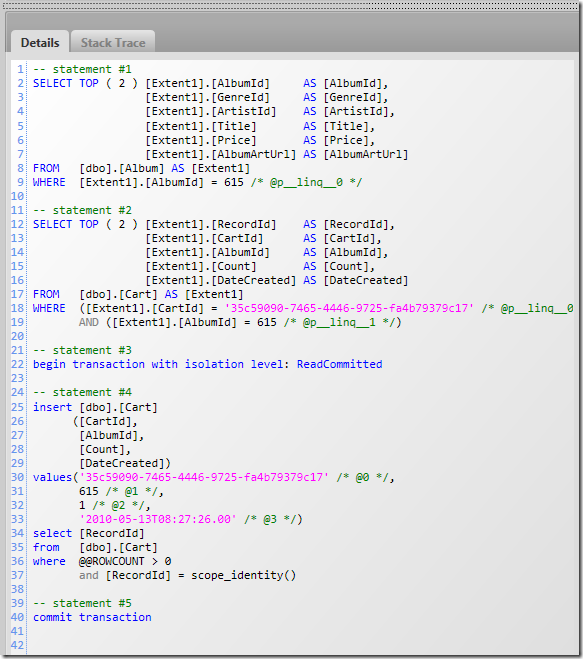

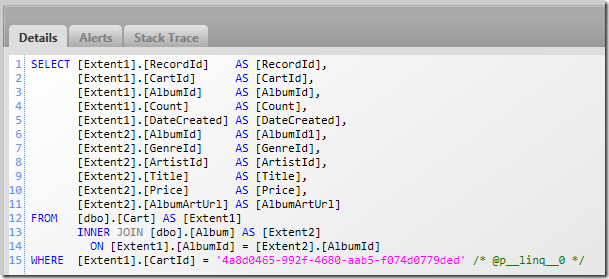

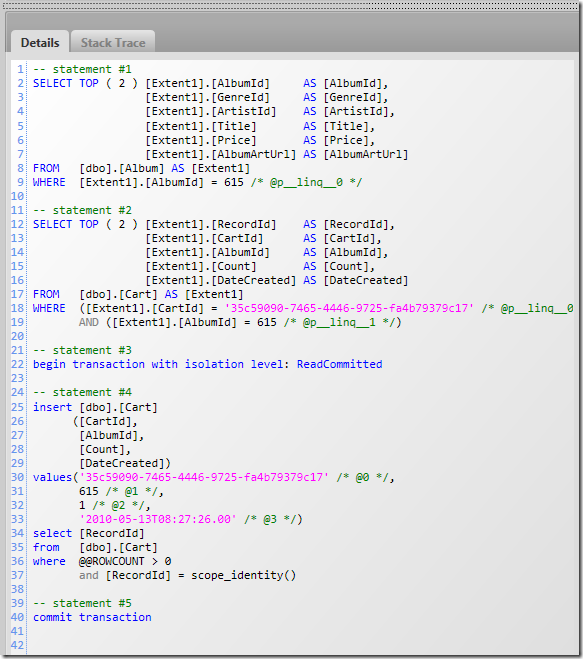

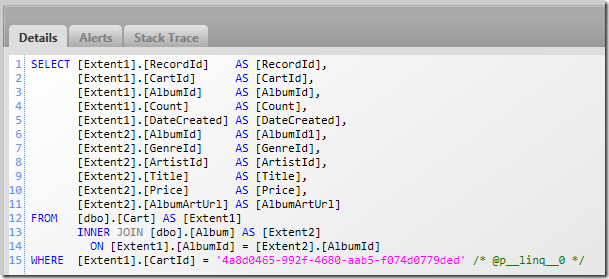

Let us look at the actual queries required to process AddToCart (note, however, that we are talking about two different object contexts):

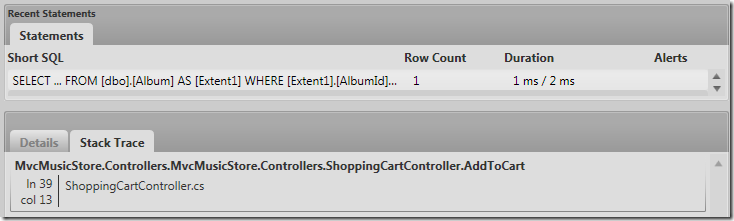

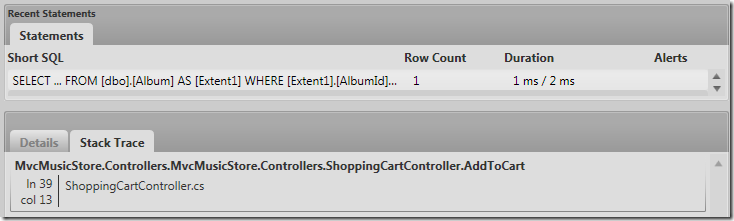

Look at the first query, I don’t see us doing anything with the data here. Let us look a bit more closely on where it is generated:

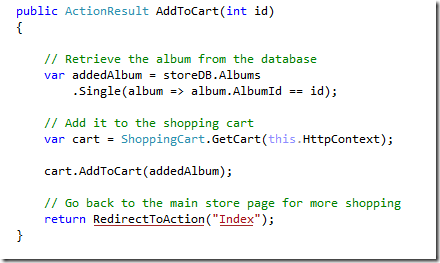

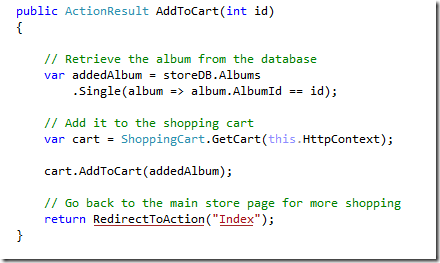

And AddToCart looks like this:

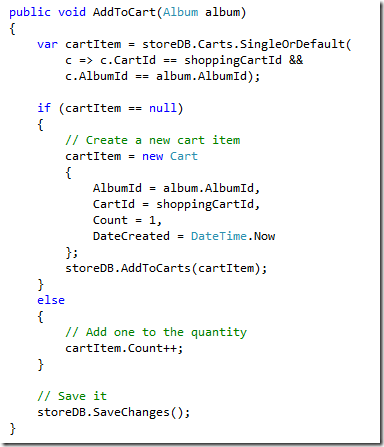

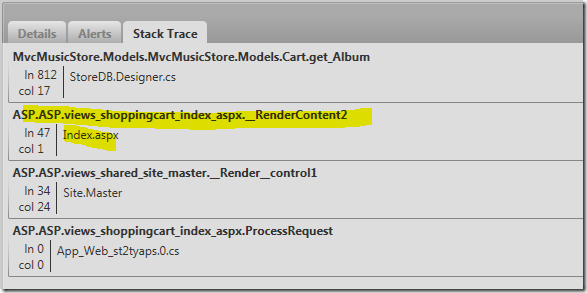

It seems that the ShoppingCart.AddToCart requires an album instance, take a look at it, and see what it does with it. The only thing it does is to use the album id. But we already know the album id, we used that to get the album instance in the first place.

It seems that the first query is there solely to check that the value exists and throw an error from the Single() method. I think we can optimize that:

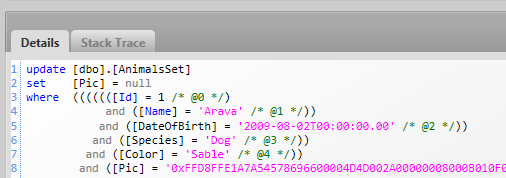

We removed that query, but we preserve the same behavior (if the application tries to save a new cart item with a missing album id, a FK violation would be thrown). Querying the database is one of the most expensive things that we can do, so it pays to watch out to where we can save in queries.

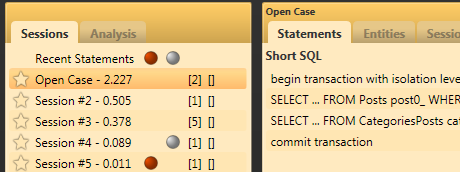

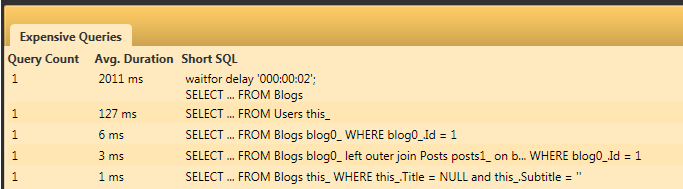

Now, let us add a few more items to the shopping cart and see what happens :-) I added 6 albums to my cart, and then went to (http://127.0.0.1:1397/ShoppingCart):

Wow! We require 10 queries to process this request, and you can see that EF Prof is urgently requesting that you’ll take a closer look at the last three.

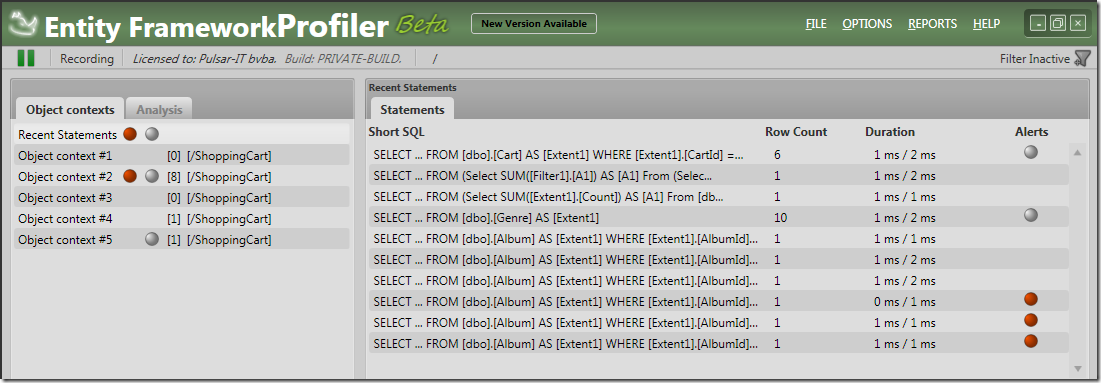

EF Prof has detected that we have a SELECT N+1 error here, where we issue a query per album in the shopping cart. If we will look at the stack trace, we will find a familiar sight:

We have queries being generated from the views, in this case, from this code:

You can see that on line 47, as shown in the profiler, we are access the title property of the album, forcing a lazy load.

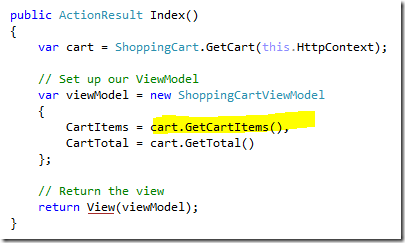

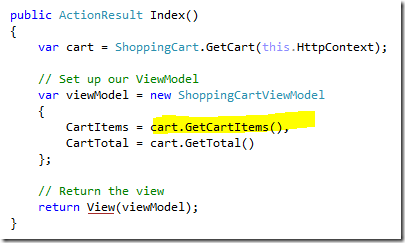

Tracking it further, we can see that Model.CartItems is set here:

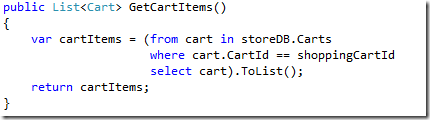

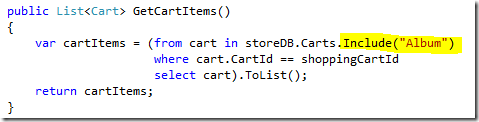

And that GetCartItems is defined as:

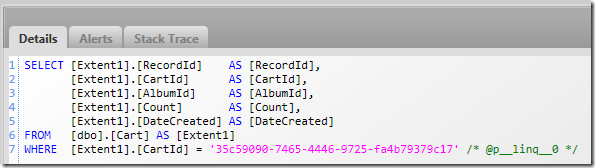

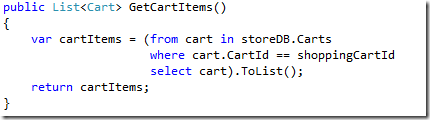

And this generate the following query:

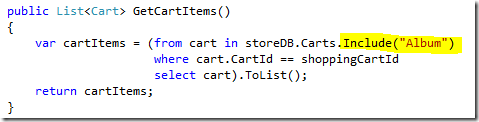

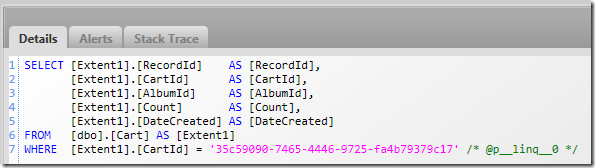

Well, that is easy enough to fix :-)

Which result in this query:

We now bring all the related albums in a single query. Which means that viewing the shopping cart result in:

Just four queries, instead of 10!

Not too shabby, for 15 minutes work with the profiler, even if I say so myself.

In general, when I am developing applications, the profiler is always running in the background, and I keep an eye on it to see if I am doing something that it warns me about, such as SELECT N +1, multiple contexts in the same request, too many calls to the database, etc.