We have been testing RavenDB in the harshest ways we can envision: anything from simulating hardware failures to corrupting network data to putting as much load as possible on the system. This is done as part of a long-running test suite that has been running for the last few months. We have been stepping up the kinds of things that we are doing in an attempt to identify the weak points in RavenDB.

One of the interesting failure modes that we need to handle is what happens when the amount of work asked of the system exceeds the resources available to it. At this point, what we want to do is to start failing gracefully. What we don’t want is for the entire system to grind to a halt or for the server to crash.

There are problems with the idea that we can detect when we’re in low resource mode and react accordingly. To start with, it is really hard. If the user paid for a fast machine, and we are running at 99% CPU, should we start rejecting requests? The users are actually getting their money’s worth from the hardware, so it makes no sense to do that. Second, what is low resource mode? Running out of disk space is quite easy, actually: we detect that, raise an error, and everything is fine. High CPU is not something that we want to react to; it might be that we are actively handling a spike of traffic, or just making full use of the system.

Memory is another constrained resource, and here we run into our toughest problems. RavenDB uses memory-mapped files for a lot of its storage needs, which means that high memory usage is something that we want, because it means that we are actually using the memory of the machine. The OS can evict such data from memory at any time, very cheaply, so if there is true memory pressure, we don’t need to worry, since there is a good degradation path for us.

The problem is that this isn’t the only cause of high memory usage. In addition to the actual memory we are using (the working set), there is also the commit charge for the system. I’m probably going to have a separate post to talk about the details of memory management from the OS point of view. The commit charge is how much memory the OS has promised to all the applications in the system. It is very common for applications to ask for a lot more memory than they actually need, which means that the OS will usually not allocate the memory immediately. Instead, it will just record the promise to provide it at a future date.

On Windows, the maximum commit charge is the size of the RAM plus the page file(s), and Windows will flat out refuse to commit memory beyond that limit. When you are working on a system that is heavily overburdened, it is very possible to hit that limit, which is when… interesting things will happen.
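
To make the distinction between memory in use and memory promised concrete, here is a toy model of that bookkeeping. It is only a sketch: `CommitAccounting`, `CommitLimitExceeded`, and the sizes are made up for illustration and are not a Windows or RavenDB API. It just encodes the rule described above, that the limit is RAM plus page file and that a commit past it is refused.

```python
GiB = 1024 ** 3


class CommitLimitExceeded(MemoryError):
    """Raised when a commit request would push the charge past the limit."""


class CommitAccounting:
    """Toy model of commit-charge bookkeeping (hypothetical, not an OS API)."""

    def __init__(self, ram_bytes, page_file_bytes):
        # Per the text: the maximum commit charge is RAM plus page file(s).
        self.commit_limit = ram_bytes + page_file_bytes
        self.commit_charge = 0  # memory promised to applications
        self.touched = 0        # memory actually in use

    def commit(self, size):
        # Committing is only a promise, but the promise itself is limited.
        if self.commit_charge + size > self.commit_limit:
            raise CommitLimitExceeded("commit limit reached")
        self.commit_charge += size

    def touch(self, size):
        # Using memory is only possible within what was already committed.
        if self.touched + size > self.commit_charge:
            raise ValueError("cannot use more memory than was committed")
        self.touched += size


system = CommitAccounting(ram_bytes=16 * GiB, page_file_bytes=4 * GiB)
system.commit(19 * GiB)  # applications ask for far more than they need
system.touch(3 * GiB)    # only a small part is ever really used

try:
    system.commit(2 * GiB)  # a modest request, but the promises are used up
    refused = False
except CommitLimitExceeded:
    refused = True
```

In this model the last request is refused even though most of the machine's memory is not actually in use, which is the situation the rest of this post is about.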

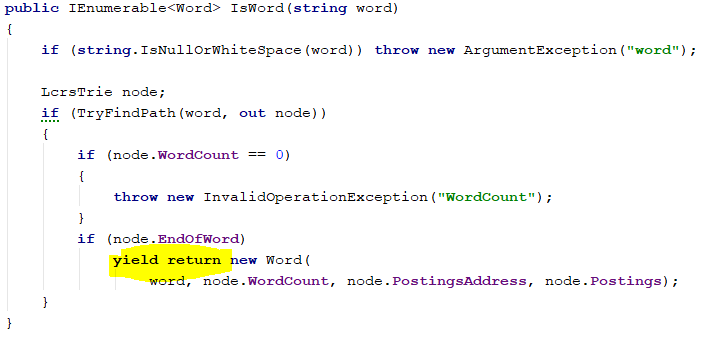

In particular, we need to consider what happens when committing memory fails at the point where we need to increase the size of a thread’s stack. In this case, even though the size of the stack is reasonable, there is no way to get more memory for the stack, and we’ll get a fatal Stack Overflow exception. It looks like this exact behavior is very explicitly called out in the code. This means that under low memory conditions (which may be low committed memory, not real low memory), spawning a new thread, which may need to allocate / expand its stack, is a very dangerous behavior.

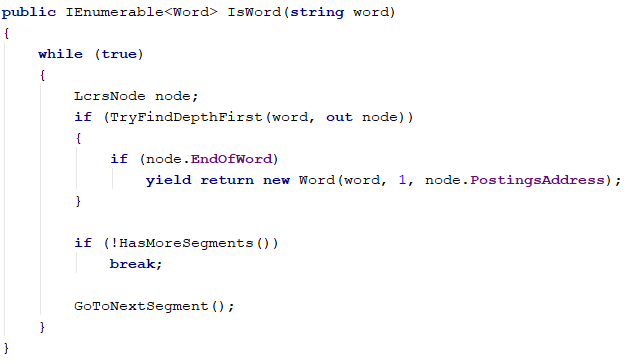

We have some code in RavenDB that spawns a new thread per connection for certain types of very long running server-to-server connections. Combine that with the fact that under such high load you’ll typically see disconnections, followed by recovery through establishing a new connection (requiring a new thread), and you can see the problem. Under such load we’ll hit both conditions: low committed memory and the spawning of new threads. From there it is just a game of whether it will be a regular (and handled) allocation that fails, or the stack extension, resulting in a fatal error.

We are handling this by reusing the threads now, which seems to offer much greater stability in our test case.
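
The general shape of that fix can be sketched as follows. This is not RavenDB’s code (RavenDB is written in C#, and the actual change isn’t shown here); it is a minimal Python illustration of the pattern, and `ReusableThreadPool` and `handle_connection` are hypothetical names. The point is that all threads are created once, up front, and every reconnection is handed to one of them instead of spawning a new thread while the system is under pressure.

```python
import queue
import threading


class ReusableThreadPool:
    """Fixed set of threads, created up front and reused for every job."""

    def __init__(self, size):
        self._jobs = queue.Queue()
        # All threads are started here, before the system is under load.
        self._threads = [
            threading.Thread(target=self._run, daemon=True) for _ in range(size)
        ]
        for thread in self._threads:
            thread.start()

    def _run(self):
        while True:
            job = self._jobs.get()
            try:
                if job is None:  # shutdown signal
                    return
                job()
            except Exception:
                # A failed connection must not take the reusable thread down.
                pass
            finally:
                self._jobs.task_done()

    def submit(self, job):
        # No thread is created here; the job waits for an existing one.
        self._jobs.put(job)

    def wait_idle(self):
        self._jobs.join()

    def shutdown(self):
        for _ in self._threads:
            self._jobs.put(None)
        for thread in self._threads:
            thread.join()


seen_threads = set()
lock = threading.Lock()


def handle_connection():
    # Stand-in for a long running server-to-server connection.
    with lock:
        seen_threads.add(threading.get_ident())


pool = ReusableThreadPool(size=2)
for _ in range(20):  # simulate 20 disconnect / reconnect cycles
    pool.submit(handle_connection)
pool.wait_idle()
pool.shutdown()
```

However many reconnections happen, `seen_threads` never holds more than two thread ids, so no new thread has to be started at the moment memory is hardest to commit. The trade-off in this sketch is that a connection may have to wait for a free thread.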