This is a review of RavenBurgerCo, created by Simon Bartlett as a sample app for RavenDB spatial support. This is by no means an unbiased review, if only because I laughed out loud, and rather crazily, when I saw the first page:

What is this about?

Raven Burger Co is a chain of fast food restaurants, based in the United Kingdom. Their speciality is burgers made with raven meat. All their restaurants offer eat-in/take-out service, while some offer home delivery, and others offer a drive thru service.

This sample application is their online restaurant locator.

Good things about this project? Here is how you get started:

- Clone this repository

- Open the solution in Visual Studio 2012

- Press F5

- Play!

And it actually works! It uses embedded RavenDB, which makes it stupidly easy to run, right out of the box.

We will start this review by looking at the infrastructure for this project, starting, as usual, from Global.asax:

Let us see how RavenDB is set up:

    public static void ConfigureRaven(MvcApplication application)
    {
        var store = new EmbeddableDocumentStore
        {
            DataDirectory = "~/App_Data/Database",
            UseEmbeddedHttpServer = true
        };

        store.Initialize();
        MvcApplication.DocumentStore = store;

        IndexCreation.CreateIndexes(typeof(MvcApplication).Assembly, store);

        var statistics = store.DatabaseCommands.GetStatistics();

        if (statistics.CountOfDocuments < 5)
            using (var bulkInsert = store.BulkInsert())
                LoadRestaurants(application.Server.MapPath("~/App_Data/Restaurants.csv"), bulkInsert);
    }

So we use embedded RavenDB, and if there isn’t enough data in the database, we load the default data set using RavenDB’s new Bulk Insert feature.

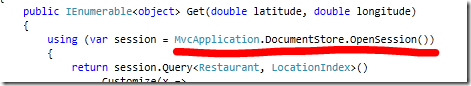

Note that we set the MvcApplication.DocumentStore property; let us see how it is used.

Simon did a really nice thing here. Note that UseEmbeddedHttpServer is set to true, which means that RavenDB will find an open port and use it. That address is then exposed in the UI:

So you can click on the link and land right in the studio for your embedded database, which gives you the ability to view, debug & modify how things are actually going. This is a really nice way to expose it.

Now, let us move to the actual project code itself. Exploring the options in this project, we have map browsing:

And here I have to admit ignorance. I have no idea how to use maps, so this is quite nice for me: something new to learn. The core of this page is this script:

    $(function () {

        var gmapLayer = new L.Google('ROADMAP');
        var resultsLayer = L.layerGroup();

        var map = L.map('map', {
            layers: [gmapLayer, resultsLayer],
            center: [51.4775, -0.461389],
            zoom: 12,
            maxBounds: L.latLngBounds([49, 15], [60, -25])
        });

        var loadMarkers = function() {
            if (map.getZoom() > 9) {
                var bounds = map.getBounds();
                $.get('/api/restaurants', {
                    north: bounds.getNorthWest().lat,
                    east: bounds.getSouthEast().lng,
                    south: bounds.getSouthEast().lat,
                    west: bounds.getNorthWest().lng,
                }).done(function(restaurants) {
                    resultsLayer.clearLayers();
                    $.each(restaurants, function(index, value) {
                        var marker = L.marker([value.Latitude, value.Longitude])
                            .bindPopup(
                                '<p><strong>' + value.Name + '</strong><br />' +
                                value.Street + '<br />' +
                                value.City + '<br />' +
                                value.PostCode + '<br />' +
                                value.Phone + '</p>'
                            );
                        resultsLayer.addLayer(marker);
                    });
                });
            } else {
                resultsLayer.clearLayers();
            }
        };

        loadMarkers();
        map.on('moveend', loadMarkers);
    });

You can see the loadMarkers method, which is called on startup and whenever the map is moved. It ends up calling this method on the server, passing the boundaries of the visible map:

    public IEnumerable<object> Get(double north, double east, double west, double south)
    {
        var rectangle = string.Format(CultureInfo.InvariantCulture, "{0:F6} {1:F6} {2:F6} {3:F6}", west, south, east, north);

        using (var session = MvcApplication.DocumentStore.OpenSession())
        {
            return session.Query<Restaurant, LocationIndex>()
                .Customize(x => x.RelatesToShape("location", rectangle, SpatialRelation.Within))
                .Take(512)
                .Select(x => new
                {
                    x.Name,
                    x.Street,
                    x.City,
                    x.PostCode,
                    x.Phone,
                    x.Delivery,
                    x.DriveThru,
                    x.Latitude,
                    x.Longitude
                })
                .ToList();
        }
    }

Note that in this case, we are searching for items inside the rectangle. But the search options are a bit funky: you have to send the shape as a formatted string (WKT and friends). Luckily, Simon has already created a better solution (here he is using the longhand method to make sure that we all understand what he is doing). The better method would be to use his Geo library, in which case the code would look like:

    .Geo("location", x => x.RelatesToShape(new Rectangle(west, south, east, north), SpatialRelation.Within))
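To make the longhand version a bit more concrete, here is a minimal sketch in plain JavaScript of the string that the `string.Format` call produces, plus a naive bounding-box check that illustrates what a "within the rectangle" relation means. Both `formatRectangle` and `isWithin` are hypothetical helpers written for this illustration; they are not part of the sample app or of RavenDB, and RavenDB answers the real query from its spatial index rather than by testing documents one by one.

```javascript
// Hypothetical helper: mirrors the server-side format string
// "{0:F6} {1:F6} {2:F6} {3:F6}" with arguments west, south, east, north.
// toFixed always uses "." as the decimal separator, much like InvariantCulture.
function formatRectangle(west, south, east, north) {
  return [west, south, east, north]
    .map(function (value) { return value.toFixed(6); })
    .join(' ');
}

// Hypothetical helper: a plain bounding-box test, shown only to illustrate
// the idea of "within". It ignores boxes that cross the antimeridian.
function isWithin(point, box) {
  return point.lng >= box.west && point.lng <= box.east &&
         point.lat >= box.south && point.lat <= box.north;
}

var box = { west: -0.6, south: 51.4, east: -0.3, north: 51.6 };
console.log(formatRectangle(box.west, box.south, box.east, box.north));
console.log(isWithin({ lat: 51.4775, lng: -0.461389 }, box));
```

The point of the sketch is the argument order: x (longitude) comes before y (latitude), which is easy to get backwards when the rest of the code talks in latitude/longitude pairs.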

So that was the map. Now let us look at another example, the Eat In example. In that case, we are looking for restaurants near our location, to figure out where to eat. This looks like this:

Right in the bull’s eye!

Here is the server side code:

    public IEnumerable<object> Get(double latitude, double longitude)
    {
        using (var session = MvcApplication.DocumentStore.OpenSession())
        {
            return session.Query<Restaurant, LocationIndex>()
                .Customize(x =>
                {
                    x.WithinRadiusOf(25, latitude, longitude);
                    x.SortByDistance();
                })
                .Take(250)
                .Select(x => new
                {
                    x.Name,
                    x.Street,
                    x.City,
                    x.PostCode,
                    x.Phone,
                    x.Delivery,
                    x.DriveThru,
                    x.Latitude,
                    x.Longitude
                })
                .ToList();
        }
    }
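If you are as new to this as I am, it may help to see what "within a radius, sorted by distance" means when written out by hand. The following is a rough sketch in plain JavaScript using the haversine formula. It assumes the radius is in kilometers (the 25,000 meter outer circle drawn on the client suggests that, but I have not verified the unit), it uses a mean Earth radius of 6371 km, and `haversineKm` and `nearest` are hypothetical names. RavenDB does none of this per document; it resolves the query against the spatial index.

```javascript
var EARTH_RADIUS_KM = 6371; // mean Earth radius, an approximation

function toRadians(degrees) {
  return degrees * Math.PI / 180;
}

// Great-circle distance between two { lat, lng } points, in kilometers.
function haversineKm(a, b) {
  var dLat = toRadians(b.lat - a.lat);
  var dLng = toRadians(b.lng - a.lng);
  var h = Math.pow(Math.sin(dLat / 2), 2) +
          Math.cos(toRadians(a.lat)) * Math.cos(toRadians(b.lat)) *
          Math.pow(Math.sin(dLng / 2), 2);
  return 2 * EARTH_RADIUS_KM * Math.asin(Math.sqrt(h));
}

// Hypothetical in-memory equivalent of WithinRadiusOf + SortByDistance + Take.
function nearest(restaurants, origin, radiusKm, take) {
  return restaurants
    .map(function (r) {
      var distanceKm = haversineKm(origin, { lat: r.Latitude, lng: r.Longitude });
      return { Name: r.Name, distanceKm: distanceKm };
    })
    .filter(function (r) { return r.distanceKm <= radiusKm; })
    .sort(function (x, y) { return x.distanceKm - y.distanceKm; })
    .slice(0, take);
}

// Made-up sample data, purely for illustration.
var sample = [
  { Name: 'London',     Latitude: 51.5074, Longitude: -0.1278 },
  { Name: 'Windsor',    Latitude: 51.4839, Longitude: -0.6044 },
  { Name: 'Manchester', Latitude: 53.4808, Longitude: -2.2426 }
];
console.log(nearest(sample, { lat: 51.4775, lng: -0.461389 }, 25, 250));
```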

And on the client side, we just do the following:

    $('#location').change(function () {
        var latlng = $('#location').locationSelector('val');

        var outerCircle = L.circle(latlng, 25000, { color: '#ff0000', fillOpacity: 0 });
        map.fitBounds(outerCircle.getBounds());

        resultsLayer.clearLayers();
        resultsLayer.addLayer(outerCircle);
        resultsLayer.addLayer(L.circle(latlng, 15000, { color: '#ff0000', fillOpacity: 0.1 }));
        resultsLayer.addLayer(L.circle(latlng, 10000, { color: '#ff0000', fillOpacity: 0.3 }));
        resultsLayer.addLayer(L.circle(latlng, 5000, { color: '#ff0000', fillOpacity: 0.5 }));
        resultsLayer.addLayer(L.circleMarker(latlng, { color: '#ff0000', fillOpacity: 1, opacity: 1 }));


        $.get('/api/restaurants', {
            latitude: latlng[0],
            longitude: latlng[1]
        }).done(function (restaurants) {
            $.each(restaurants, function (index, value) {
                var marker = L.marker([value.Latitude, value.Longitude])
                    .bindPopup(
                        '<p><strong>' + value.Name + '</strong><br />' +
                        value.Street + '<br />' +
                        value.City + '<br />' +
                        value.PostCode + '<br />' +
                        value.Phone + '</p>'
                    );
                resultsLayer.addLayer(marker);
            });
        });
    });

We define several circles of different opacities, and then show the returned markers.

It is all pretty simple code, but the result is quite stunning. I am getting really excited by this thing. It is simple, beautiful and quite powerful. Wow!

The delivery tab does pretty much the same thing as the eat-in mode, but it does so in a different way. First, you might have noticed the LocationIndex in the previous two examples. It looks like this:

    public class LocationIndex : AbstractIndexCreationTask<Restaurant>
    {
        public LocationIndex()
        {
            Map = restaurants => from restaurant in restaurants
                                 select new
                                 {
                                     restaurant.Name,
                                     _ = SpatialGenerate(restaurant.Latitude, restaurant.Longitude),
                                     __ = SpatialGenerate("location", restaurant.LocationWkt)
                                 };
        }
    }

Before we look at this, we need to look at a sample document:

I am not quite sure why LocationIndex has both SpatialGenerate() and SpatialGenerate("location"). I think that this is just part of the demo, because the data is the same and both lines should produce the same results.

However, for deliveries, the situation is quite different. We don’t just deliver within a certain distance. As you can see, we have a polygon that determines where we actually deliver to. On the map, this looks like this:

The red circle is where I am located, the blue markers are the restaurants that deliver to my location, and the blue polygon is the delivery area for the selected burger joint. Let us see how this works, okay? We will start from the index:

    public class DeliveryIndex : AbstractIndexCreationTask<Restaurant>
    {
        public DeliveryIndex()
        {
            Map = restaurants => from restaurant in restaurants
                                 where restaurant.DeliveryArea != null
                                 select new
                                 {
                                     restaurant.Name,
                                     _ = SpatialGenerate("delivery", restaurant.DeliveryArea, SpatialSearchStrategy.GeohashPrefixTree, 7)
                                 };
        }
    }

So we are indexing just the restaurants that have a delivery area polygon, and then we query it like this:

    public IEnumerable<object> Get(double latitude, double longitude, bool delivery)
    {
        if (!delivery)
            return Get(latitude, longitude);

        var point = string.Format(CultureInfo.InvariantCulture, "POINT ({0} {1})", longitude, latitude);

        using (var session = MvcApplication.DocumentStore.OpenSession())
        {
            return session.Query<Restaurant, DeliveryIndex>()
                .Customize(x => x.RelatesToShape("delivery", point, SpatialRelation.Intersects))
                // SpatialRelation.Contains is not supported
                // SpatialRelation.Intersects is OK because we are using a point as the query parameter
                .Take(250)
                .Select(x => new
                {
                    x.Name,
                    x.Street,
                    x.City,
                    x.PostCode,
                    x.Phone,
                    x.Delivery,
                    x.DriveThru,
                    x.Latitude,
                    x.Longitude,
                    x.DeliveryArea
                })
                .ToList();
        }
    }

This basically says: give me all the restaurants whose delivery area includes my location. The rest all happens on the client side.
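Two details in that query are worth spelling out: the WKT point is written longitude first, and intersecting a point with a polygon amounts to a point-in-polygon test. Here is a minimal sketch of both in plain JavaScript. `toWktPoint` and `pointInPolygon` are hypothetical helpers for illustration only, the ray-casting test treats coordinates as flat x/y values, and it says nothing about how RavenDB's geohash prefix tree actually evaluates the query.

```javascript
// Hypothetical helper: WKT puts x (longitude) before y (latitude),
// matching the "POINT ({0} {1})" format with longitude, latitude above.
function toWktPoint(latitude, longitude) {
  return 'POINT (' + longitude + ' ' + latitude + ')';
}

// Ray casting: cast a horizontal ray from the point and count how many
// polygon edges it crosses. An odd count means the point is inside.
// `ring` is an array of [x, y] pairs, i.e. [longitude, latitude].
function pointInPolygon(point, ring) {
  var inside = false;
  for (var i = 0, j = ring.length - 1; i < ring.length; j = i++) {
    var xi = ring[i][0], yi = ring[i][1];
    var xj = ring[j][0], yj = ring[j][1];
    var crosses = (yi > point.y) !== (yj > point.y) &&
      point.x < (xj - xi) * (point.y - yi) / (yj - yi) + xi;
    if (crosses) inside = !inside;
  }
  return inside;
}

// A made-up square "delivery area", purely for illustration.
var deliveryArea = [[-1, 51], [0, 51], [0, 52], [-1, 52]];
console.log(toWktPoint(51.4775, -0.461389));
console.log(pointInPolygon({ x: -0.461389, y: 51.4775 }, deliveryArea));
```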

Quite cool.

The final example is the drive thru mode, which looks like this:

Given that I am driving from the green dot to the red dot, what restaurants can I stop at?

Here is the index:

    public class DriveThruIndex : AbstractIndexCreationTask<Restaurant>
    {
        public DriveThruIndex()
        {
            Map = restaurants => from restaurant in restaurants
                                 where restaurant.DriveThruArea != null
                                 select new
                                 {
                                     restaurant.Name,
                                     _ = SpatialGenerate("drivethru", restaurant.DriveThruArea)
                                 };
        }
    }

And now the code for this:

    public IEnumerable<object> Get(string polyline)
    {
        var lineString = PolylineHelper.ConvertGooglePolylineToWkt(polyline);

        using (var session = MvcApplication.DocumentStore.OpenSession())
        {
            return session.Query<Restaurant, DriveThruIndex>()
                .Customize(x => x.RelatesToShape("drivethru", lineString, SpatialRelation.Intersects))
                .Take(512)
                .Select(x => new
                {
                    x.Name,
                    x.Street,
                    x.City,
                    x.PostCode,
                    x.Phone,
                    x.Delivery,
                    x.DriveThru,
                    x.Latitude,
                    x.Longitude
                })
                .ToList();
        }
    }

We get the driving directions from the map, convert them to a line string, and then just check whether our path intersects the drive thru area of each restaurant.
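I have not shown what PolylineHelper does internally, so treat the following as a rough sketch of the idea rather than the project's code. It decodes a string in Google's publicly documented encoded polyline format (assuming the usual precision of five decimal places) and emits a WKT LINESTRING with longitude first. `decodePolyline` and `toWktLineString` are hypothetical names, and the sample string is the example from Google's documentation of the format.

```javascript
// Decode one signed value starting at state.index, advancing the index.
function decodeValue(encoded, state) {
  var result = 0, shift = 0, chunk;
  do {
    chunk = encoded.charCodeAt(state.index++) - 63;
    result |= (chunk & 0x1f) << shift; // low 5 bits carry data
    shift += 5;
  } while (chunk >= 0x20);             // bit 6 set means "more chunks follow"
  // The lowest bit is the sign flag; undo the zig-zag style encoding.
  return (result & 1) ? ~(result >> 1) : (result >> 1);
}

// Returns an array of [latitude, longitude] pairs.
function decodePolyline(encoded) {
  var points = [];
  var state = { index: 0 };
  var lat = 0, lng = 0;
  while (state.index < encoded.length) {
    lat += decodeValue(encoded, state); // values are deltas from the previous point
    lng += decodeValue(encoded, state);
    points.push([lat / 1e5, lng / 1e5]);
  }
  return points;
}

// WKT wants "x y" pairs, i.e. longitude before latitude.
function toWktLineString(points) {
  var pairs = points.map(function (p) { return p[1] + ' ' + p[0]; });
  return 'LINESTRING (' + pairs.join(', ') + ')';
}

console.log(toWktLineString(decodePolyline('_p~iF~ps|U_ulLnnqC_mqNvxq`@')));
```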

Pretty cool application, and some really nice UI.

Okay, enough with the accolades. Next time, I’ll talk about the things that could be better.
