I am a strong believer in automated unit tests. And I read this post by Phil Haack with part amusement and part wonder.

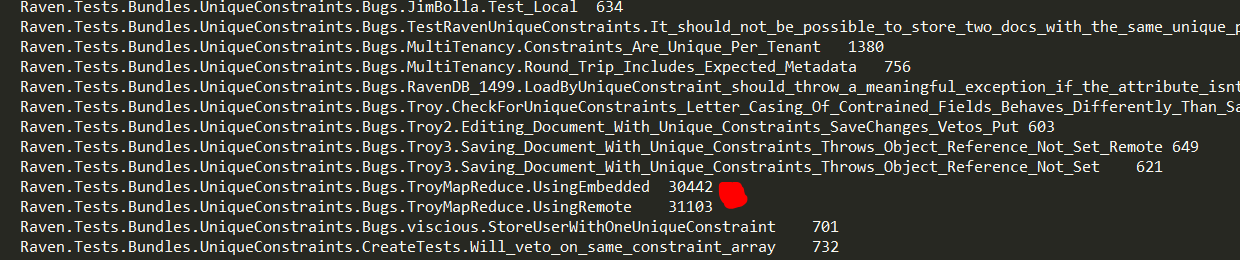

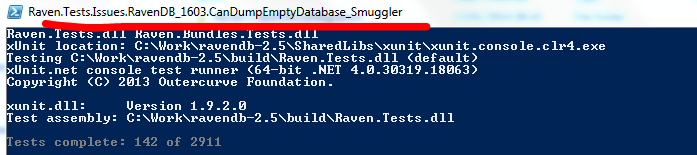

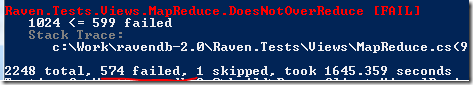

RavenDB currently has close to 1,400 tests in it. We routinely ask for failing tests from users and fix bugs by writing tests to verify fixes.

But structuring them in terms of source code? That seems to be very strange.

You can take a look at the source code layout of some of our tests here: https://github.com/ayende/ravendb/tree/master/Raven.Tests/Bugs

It is a dumping ground, basically, for tests. That is, for the most part, I view tests as very important in telling me “does this !@#! work or not?” and that is about it. Spending a lot of time organizing them seems to be of little value from my perspective.

If I need to find a particular test, I have R# code browsing to help me, and if I need to find who is testing a piece of code, I can use Find References to get it.

In the end, it boils down to the fact that I don’t consider tests to be, by themselves, of value to the product. Their only value is their binary ability to tell me whether the product is okay or not. Spending a lot of extra time on the tests distracts from creating real value: shippable software.

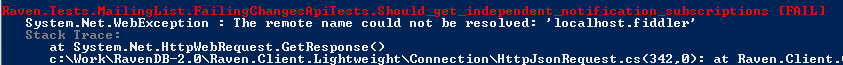

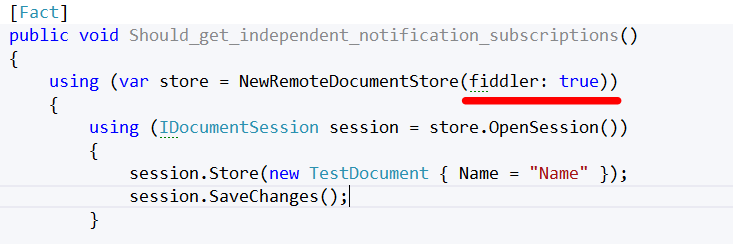

What I do deeply care about with regard to structuring the tests is the actual structure of each test. It is important to make sure that all the tests look very much the same, because I should be able to look at any of them and rapidly figure out what is going on.

I am not going to use the RavenDB example, because that is system software and usually different from most business apps (although we use a similar approach there). Instead, here are a few tests from our new ordering system:

    [Fact]
    public void Will_send_email_after_trial_extension()
    {
        // Input: hand the message to the consumer under test.
        Consume<ExtendTrialLicenseConsumer, ExtendTrialLicense>(new ExtendTrialLicense
        {
            ProductId = "products/nhprof",
            Days = 30,
            Email = "you@there.gov",
        });

        // Output: inspect the message that was sent in response.
        var email = ConsumeSentMessage<NewTrial>();

        Assert.Equal("you@there.gov", email.Email);
    }

    [Fact]
    public void Trial_request_from_same_customer_sends_email()
    {
        // Input: the first trial request from this customer.
        Consume<NewTrialConsumer, NewTrial>(new NewTrial
        {
            ProductId = "products/nhprof",
            Email = "who@is.there",
            Company = "h",
            FullName = "a",
            TrackingId = Guid.NewGuid()
        });

        // Side effect: the first request stored a trial document.
        Trial firstTrial;
        using (var session = documentStore.OpenSession())
        {
            firstTrial = session.Load<Trial>(1);
        }

        // Output: the first request sent an email.
        Assert.NotNull(ConsumeSentMessage<SendEmail>());

        // Input: a second request that reuses the first trial's details.
        Consume<NewTrialConsumer, NewTrial>(new NewTrial
        {
            TrackingId = firstTrial.TrackingId,
            Email = firstTrial.Email,
            Profile = firstTrial.ProductId.Substring("products/".Length)
        });

        // Output: the second request sent an email as well.
        var email = ConsumeSentMessage<SendEmail>();

        Assert.Equal("Hibernating Rhinos - Trials Agent", email.ReplyToDisplay);
    }

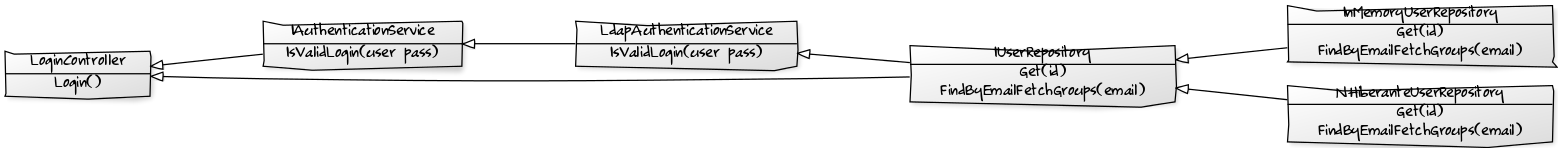

As you can probably see, we have a structured way to send input to the system, and we can verify the output and the side effects (creating the trial, for example).

This leads to a system that can be easily tested, but doesn’t force us to spend too much time in the ceremony of tests.
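
The `Consume` and `ConsumeSentMessage` helpers are not shown in this post. What follows is only a minimal sketch of how such helpers could be built, not the actual code from the ordering system. Everything in it other than those two method names is an assumption made for illustration: the `IBus` and `IConsumer<TMessage>` interfaces, the `RecordingBus` class, the `ConsumerTestBase` class, and the `Ping`/`Pong`/`EchoConsumer` example types are all hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical abstractions; the real system's types are not shown in the post.
public interface IBus
{
    void Send(object message);
}

public interface IConsumer<TMessage>
{
    IBus Bus { get; set; }
    void Consume(TMessage message);
}

// In-memory bus that records outgoing messages instead of delivering them.
public class RecordingBus : IBus
{
    private readonly List<object> sent = new List<object>();

    public void Send(object message)
    {
        sent.Add(message);
    }

    // Removes and returns the oldest recorded message of type T,
    // or null when no such message was sent.
    public T TakeFirst<T>() where T : class
    {
        var message = sent.OfType<T>().FirstOrDefault();
        if (message != null)
            sent.Remove(message);
        return message;
    }
}

// Base class that gives every test the same two-step shape:
// push input in with Consume, pull output out with ConsumeSentMessage.
public abstract class ConsumerTestBase
{
    protected readonly RecordingBus bus = new RecordingBus();

    protected void Consume<TConsumer, TMessage>(TMessage message)
        where TConsumer : IConsumer<TMessage>, new()
    {
        var consumer = new TConsumer();
        consumer.Bus = bus;
        consumer.Consume(message);
    }

    protected TMessage ConsumeSentMessage<TMessage>() where TMessage : class
    {
        return bus.TakeFirst<TMessage>();
    }
}

// Hypothetical example messages and consumer, only to exercise the sketch.
public class Ping { public string Text { get; set; } }
public class Pong { public string Text { get; set; } }

public class EchoConsumer : IConsumer<Ping>
{
    public IBus Bus { get; set; }

    public void Consume(Ping message)
    {
        Bus.Send(new Pong { Text = message.Text });
    }
}
```

Because `TakeFirst` removes the message it returns, calling `ConsumeSentMessage` twice for the same type yields two different messages, which is the behavior the second test above appears to rely on. A test class would inherit from `ConsumerTestBase` and keep the same input/output shape in every test.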
