Dror from TypeMock has managed to capture the essence of my post about unit tests vs. integration tests quite well:

Unit tests runs faster but integration tests are easier to write.

Unfortunately, he draws an incorrect conclusion from that:

There is however another solution instead of declaring unit testing as hard to write - use an isolation framework which make writing unit test much easier.

And the answer to that is… no, you can’t do that. Doing something like that puts you back in the onerous position of unit testing, where you actually have to understand exactly what is going on and have to deal with that. With an integration test, you can assert directly on the end result, which is completely different from what I would have to do if I wanted to mock a part of the system. A typical integration test looks something like:

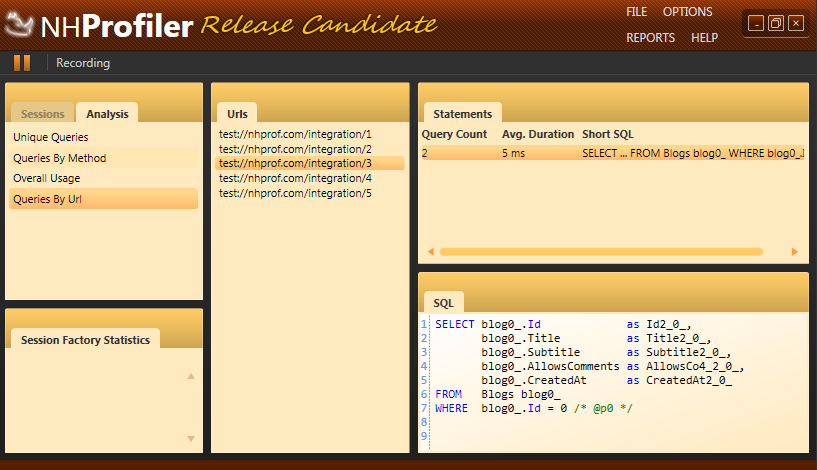

```csharp
public class SelectNPlusOne : IScenario
{
    public void Execute(ISessionFactory factory)
    {
        using (var session = factory.OpenSession())
        using (var tx = session.BeginTransaction())
        {
            var blog = session.CreateCriteria(typeof(Blog))
                .SetMaxResults(1)
                .UniqueResult<Blog>();//1
            foreach (var post in blog.Posts)//2
            {
                Console.WriteLine(post.Comments.Count);// SELECT N
            }
            tx.Commit();
        }
    }
}

[Fact]
public void AllCallsToLoadCommentsAreIdentical()
{
    ExecuteScenarioInDifferentAppDomain<SelectNPlusOne>();
    var array = model.Sessions[0]
        .SessionStatements
        .ExcludeTransactions()
        .Skip(2)
        .ToArray();
    Assert.Equal(10, array.Length);
    foreach (StatementModel statementModel in array)
    {
        Assert.Equal(statementModel.RawSql, array[0].RawSql);
    }
}
```

This shows how we can assert on the actual end model of the system, without really trying to deal with what is actually going on. You cannot introduce any mocking into the mix without significantly hurting the clarity of the code.