Reviewing LevelDB Part IX - Compaction is the new black

After going over the VersionSet, I understand how leveldb decides when to compact and what it decides to compact. More or less, at least.

This means that I can now mostly figure out what this does:

Status DBImpl::BackgroundCompaction()

A user may force a manual compaction of a range, or leveldb may decide on its own that a compaction is needed, based on its heuristics. Either way, we get a Compaction object, which tells us which files we need to merge.

There is a check for whether we can do a trivial compaction, that is, just move the file from the current level to level+1. The interesting thing is that we avoid doing that if it would cause issues in level+2 (by requiring a more expensive compaction later on).
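
A minimal sketch of that trivial-move test, modeled on my reading of leveldb's Compaction::IsTrivialMove(). The FileMeta struct and the function signature are simplified stand-ins of my own, and the overlap limit (ten times a 2 MB target file size, which is what the leveldb code of this era appears to use) should be treated as an assumption:

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical, simplified stand-in for leveldb's FileMetaData.
struct FileMeta {
  uint64_t number;
  uint64_t file_size;
};

// Assumed limit: 10 x a 2 MB target file size.
constexpr uint64_t kTargetFileSize = 2 * 1048576;
constexpr uint64_t kMaxGrandParentOverlapBytes = 10 * kTargetFileSize;

uint64_t TotalFileSize(const std::vector<FileMeta>& files) {
  uint64_t sum = 0;
  for (const FileMeta& f : files) sum += f.file_size;
  return sum;
}

// A move is "trivial" when exactly one file from `level` is involved, nothing
// in level+1 overlaps it, and it does not overlap too many bytes in level+2
// (the "grandparents"), which would make a later compaction expensive.
bool IsTrivialMove(const std::vector<FileMeta>& level_inputs,
                   const std::vector<FileMeta>& next_level_inputs,
                   const std::vector<FileMeta>& grandparents) {
  return level_inputs.size() == 1 && next_level_inputs.empty() &&
         TotalFileSize(grandparents) <= kMaxGrandParentOverlapBytes;
}
```

If any of the three conditions fails, the files go through the full merge in DoCompactionWork instead.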

But the interesting work is done in DoCompactionWork, where the input files are actually merged.

This is the first time I see the code refer to snapshots. We only drop old values that no live snapshot can still see, so data doesn't “go away” for users while they hold a snapshot open.
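
To make the snapshot rule concrete, here is a sketch of the drop decision as I read it in DoCompactionWork(). It assumes the entries arrive sorted by user key, newest sequence number first, and that smallest_snapshot is the sequence number of the oldest live snapshot (or the latest sequence number when no snapshot is held). The Entry struct and the is_base_level_for_key callback are simplified stand-ins; in leveldb the latter role is played by Compaction::IsBaseLevelForKey():

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <limits>
#include <string>
#include <vector>

// Simplified stand-in for a parsed internal key plus its value.
struct Entry {
  std::string user_key;
  uint64_t sequence;
  bool is_deletion;
  std::string value;
};

// `sorted` must be ordered by user_key ascending, then sequence descending.
std::vector<Entry> DropObsolete(
    const std::vector<Entry>& sorted, uint64_t smallest_snapshot,
    const std::function<bool(const std::string&)>& is_base_level_for_key) {
  std::vector<Entry> kept;
  const std::string* current_key = nullptr;
  uint64_t last_sequence_for_key = std::numeric_limits<uint64_t>::max();
  for (const Entry& e : sorted) {
    if (current_key == nullptr || *current_key != e.user_key) {
      current_key = &e.user_key;  // first (newest) entry for this user key
      last_sequence_for_key = std::numeric_limits<uint64_t>::max();
    }
    bool drop = false;
    if (last_sequence_for_key <= smallest_snapshot) {
      // A newer entry for this key is already visible to every snapshot.
      drop = true;
    } else if (e.is_deletion && e.sequence <= smallest_snapshot &&
               is_base_level_for_key(e.user_key)) {
      // No older data for this key lives deeper, so the tombstone can go.
      drop = true;
    }
    last_sequence_for_key = e.sequence;
    if (!drop) kept.push_back(e);
  }
  return kept;
}
```

With a snapshot at sequence 5 and versions of a key at sequences 9, 4 and 2, the sketch keeps 9 and 4 (the snapshot still reads 4) and drops only 2; with no snapshots, only 9 survives.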

The actual work starts in here:

This gives us the ability to iterate over all of the entries in all of the files that need compaction.
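
Conceptually, that iterator is a k-way merge of already sorted inputs. The sketch below shows the idea eagerly with a min-heap; leveldb's real iterator does this lazily (and, as far as I can tell, chains the non-overlapping files of a level instead of merging them). The InternalKey struct is a simplified stand-in for leveldb's internal key format, but the ordering, user key ascending and then sequence number descending, is the one that puts the newest version of each key first:

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <queue>
#include <string>
#include <vector>

// Simplified internal key: leveldb packs the sequence number (and value type)
// into an 8-byte suffix after the user key; here they are separate fields.
struct InternalKey {
  std::string user_key;
  uint64_t sequence;
};

// Ascending by user key, then descending by sequence, so the newest version
// of each user key is returned first.
bool InternalKeyLess(const InternalKey& a, const InternalKey& b) {
  if (a.user_key != b.user_key) return a.user_key < b.user_key;
  return a.sequence > b.sequence;
}

// Eagerly merge individually sorted runs (one per input file) into one sorted
// sequence, using a min-heap over each run's current head.
std::vector<InternalKey> MergeRuns(const std::vector<std::vector<InternalKey>>& runs) {
  struct Head {
    std::size_t run;
    std::size_t pos;
  };
  // priority_queue is a max-heap, so invert the order to pop the smallest key.
  auto after = [&runs](const Head& x, const Head& y) {
    return InternalKeyLess(runs[y.run][y.pos], runs[x.run][x.pos]);
  };
  std::priority_queue<Head, std::vector<Head>, decltype(after)> heap(after);
  for (std::size_t r = 0; r < runs.size(); ++r) {
    assert(std::is_sorted(runs[r].begin(), runs[r].end(), InternalKeyLess));
    if (!runs[r].empty()) heap.push({r, 0});
  }
  std::vector<InternalKey> out;
  while (!heap.empty()) {
    Head h = heap.top();
    heap.pop();
    out.push_back(runs[h.run][h.pos]);
    if (h.pos + 1 < runs[h.run].size()) heap.push({h.run, h.pos + 1});
  }
  return out;
}
```

Because of that ordering, when the same user key shows up in several inputs, all of its versions come out next to each other, newest first.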

And then we go over it:

But note that on each iteration we check whether we need to CompactMemTable(). I followed the code there, and we finally write stuff to disk! I am quite excited about this, but I'll handle that in a future blog post; right now I want to see how the actual compaction works.

We then have this:

This is there to close the current output file early, so that it doesn't overlap so much of level+2 that it forces a very expensive compaction next time, I think.
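
Here is a sketch of that decision, modeled on my reading of Compaction::ShouldStopBefore(). The GrandparentFile struct and the OutputSplitter class are hypothetical simplifications, with plain string comparison standing in for the internal key comparator, and max_overlap is left as a parameter rather than hard-coding leveldb's limit:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-in for a level+2 ("grandparent") file.
struct GrandparentFile {
  std::string largest;  // largest key in the file
  uint64_t file_size;
};

// Tracks how many grandparent bytes the current output file overlaps and
// reports when that output should be closed.
class OutputSplitter {
 public:
  OutputSplitter(std::vector<GrandparentFile> grandparents, uint64_t max_overlap)
      : grandparents_(std::move(grandparents)), max_overlap_(max_overlap) {}

  // Call with each key, in sorted order, before adding it to the output.
  bool ShouldStopBefore(const std::string& key) {
    // Skip grandparent files that end before `key`, charging their size to
    // the current output (except before the very first key).
    while (index_ < grandparents_.size() && key > grandparents_[index_].largest) {
      if (seen_key_) overlapped_bytes_ += grandparents_[index_].file_size;
      ++index_;
    }
    seen_key_ = true;
    if (overlapped_bytes_ > max_overlap_) {
      overlapped_bytes_ = 0;  // the next output file starts from zero
      return true;
    }
    return false;
  }

 private:
  std::vector<GrandparentFile> grandparents_;
  uint64_t max_overlap_;
  std::size_t index_ = 0;
  uint64_t overlapped_bytes_ = 0;
  bool seen_key_ = false;
};
```

For example, with grandparent files ending at "c", "f" and "z" (10 bytes each) and a 15 byte limit, the keys "a" and "d" stay in the first output file, while "g" starts a new one because the current output would otherwise overlap 20 bytes of level+2.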

As a side note, this really drives me crazy:

Note that we have current_output() and FileSize() in here. I don't really care what naming convention you use, but I would really rather that you had one. If there is one for the leveldb code base, I can't say that I figured it out. It seems like mostly it is PascalCase, but every now & then we have this_style methods.

Back to the code. It took me a while to figure this one out.

The input iterator returns values in sorted key order, which means that if you have the same key in multiple levels, we need to ignore the older values. Once that is handled, we have this:

This is where we are actually writing stuff out to the SST file! This is quite exciting :-). I have been trying to figure that out for a while now.
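
A rough sketch of the writing side, under heavy simplification: OutputFile is a hypothetical stand-in for the table builder, sizes are just key lengths, and max_output_file_size plays the role of leveldb's per-file size limit. The point is that outputs are opened lazily and closed once they reach the limit, so one compaction can produce several SST files:

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical stand-in for a table builder: collects entries, counts bytes.
struct OutputFile {
  std::vector<std::string> entries;
  uint64_t size = 0;
};

// Write sorted, already-filtered entries into output files, starting a new
// file once the current one reaches `max_output_file_size`.
std::vector<OutputFile> WriteOutputs(const std::vector<std::string>& entries,
                                     uint64_t max_output_file_size) {
  std::vector<OutputFile> outputs;
  bool open = false;
  for (const std::string& e : entries) {
    if (!open) {  // lazily open a new output only when there is data for it
      outputs.emplace_back();
      open = true;
    }
    OutputFile& current = outputs.back();
    current.entries.push_back(e);
    current.size += e.size();
    if (current.size >= max_output_file_size) open = false;  // "finish" it
  }
  return outputs;
}
```

In leveldb, as far as I can tell, an output file can also be closed early by the grandparent-overlap check discussed above, not only by its size.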

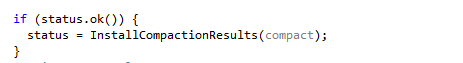

The rest of the code in the function is mostly stats bookkeeping, but this looks important:

This generates the actual VersionEdit, which removes all of the files that were compacted and adds the new files that were created; applying it to the VersionSet produces a new Version.
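
A toy model of what that edit does. Here a Version is reduced to the set of live file numbers per level, and VersionEdit, MakeCompactionEdit() and Apply() are hypothetical simplifications of my own; in leveldb the edit also records key ranges and sizes, and it is applied (and logged to the MANIFEST) through VersionSet::LogAndApply():

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <set>
#include <utility>
#include <vector>

// A "version" here is just the set of live file numbers at each level.
using Version = std::map<int, std::set<uint64_t>>;

struct VersionEdit {
  std::vector<std::pair<int, uint64_t>> deleted_files;  // (level, file number)
  std::vector<std::pair<int, uint64_t>> new_files;      // (level, file number)
};

// Build the edit for a finished compaction: drop every input from `level`
// and `level + 1`, and add every output file to `level + 1`.
VersionEdit MakeCompactionEdit(int level, const std::vector<uint64_t>& level_inputs,
                               const std::vector<uint64_t>& next_level_inputs,
                               const std::vector<uint64_t>& outputs) {
  VersionEdit edit;
  for (uint64_t f : level_inputs) edit.deleted_files.emplace_back(level, f);
  for (uint64_t f : next_level_inputs) edit.deleted_files.emplace_back(level + 1, f);
  for (uint64_t f : outputs) edit.new_files.emplace_back(level + 1, f);
  return edit;
}

// Produce a new version from a base version; the base is left untouched.
Version Apply(const Version& base, const VersionEdit& edit) {
  Version next = base;
  for (const auto& [lvl, f] : edit.deleted_files) next[lvl].erase(f);
  for (const auto& [lvl, f] : edit.new_files) next[lvl].insert(f);
  return next;
}
```

As far as I can tell, building a new Version instead of mutating the current one is what lets readers that still hold the old Version keep using its files.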

Good work so far, even if I say so myself. We actually got to where we are building the SST files. Now it is time to look at the code that builds those tables. Next post: Table Builder...

More posts in "Reviewing LevelDB" series:

- (26 Apr 2013) Part XVIII–Summary

- (15 Apr 2013) Part XVII– Filters? What filters? Oh, those filters…

- (12 Apr 2013) Part XV–MemTables gets compacted too

- (11 Apr 2013) Part XVI–Recovery ain’t so tough?

- (10 Apr 2013) Part XIV– there is the mem table and then there is the immutable memtable

- (09 Apr 2013) Part XIII–Smile, and here is your snapshot

- (08 Apr 2013) Part XII–Reading an SST

- (05 Apr 2013) Part XI–Reading from Sort String Tables via the TableCache

- (04 Apr 2013) Part X–table building is all fun and games until…

- (03 Apr 2013) Part IX- Compaction is the new black

- (02 Apr 2013) Part VIII–What are the levels all about?

- (29 Mar 2013) Part VII–The version is where the levels are

- (28 Mar 2013) Part VI, the Log is base for Atomicity

- (27 Mar 2013) Part V, into the MemTables we go

- (26 Mar 2013) Part IV

- (22 Mar 2013) Part III, WriteBatch isn’t what you think it is

- (21 Mar 2013) Part II, Put some data on the disk, dude

- (20 Mar 2013) Part I, What is this all about?

Comments

What is RaveDB? Have you already forked LevelDB?

Frank, Typo when writing the title. This is about leveldb.
